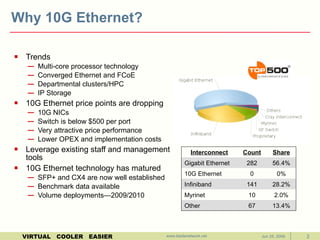

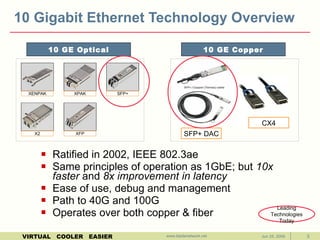

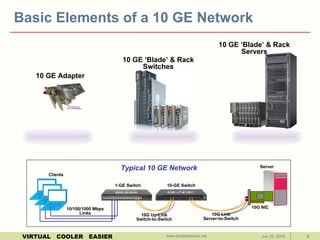

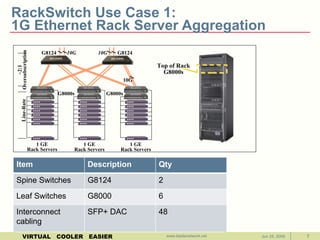

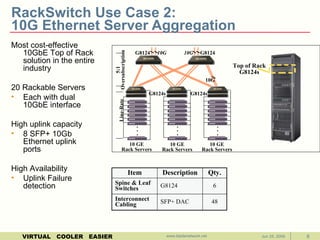

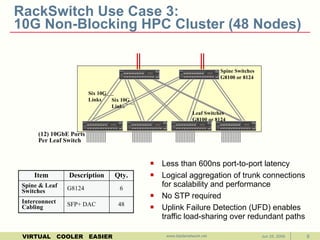

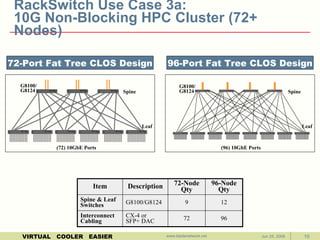

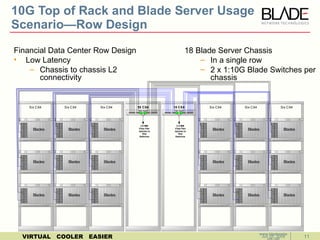

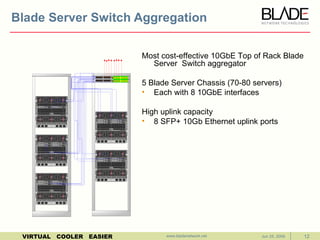

10G Ethernet offers 10 Gbps links, which enable use cases such as high-performance computing (HPC) clusters and IP storage. Its key advantages are the higher throughput, falling prices for 10G network interface cards and switches, and the ability to reuse existing Ethernet infrastructure and management tools. The document discusses several use cases where organizations can benefit from 10G Ethernet, including server aggregation, HPC clusters, and low-latency solutions for financial data centers.
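
To put the 10 Gbps figure in perspective, here is a minimal sketch that compares ideal transfer times at 1 Gbps and 10 Gbps. The 100 GB dataset size and the helper name `transfer_seconds` are assumptions chosen for illustration and do not come from the slides. The calculation uses raw line rate only and ignores protocol overhead, congestion, and storage limits, so real transfers would take longer.

```python
def transfer_seconds(size_bytes: float, link_gbps: float) -> float:
    """Ideal time to move size_bytes over a link rated at link_gbps.

    Uses decimal units (1 Gbps = 1e9 bits per second) and assumes the
    link runs at full line rate with no protocol overhead.
    """
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9)


# Hypothetical 100 GB dataset, e.g. a storage volume copied between servers.
dataset_bytes = 100e9

for gbps in (1, 10):
    seconds = transfer_seconds(dataset_bytes, gbps)
    print(f"{gbps:>2} Gbps: {seconds:.0f} s")
```

Under these assumptions the same dataset takes 800 seconds at 1 Gbps and 80 seconds at 10 Gbps, a tenfold reduction that matches the ratio of the line rates.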