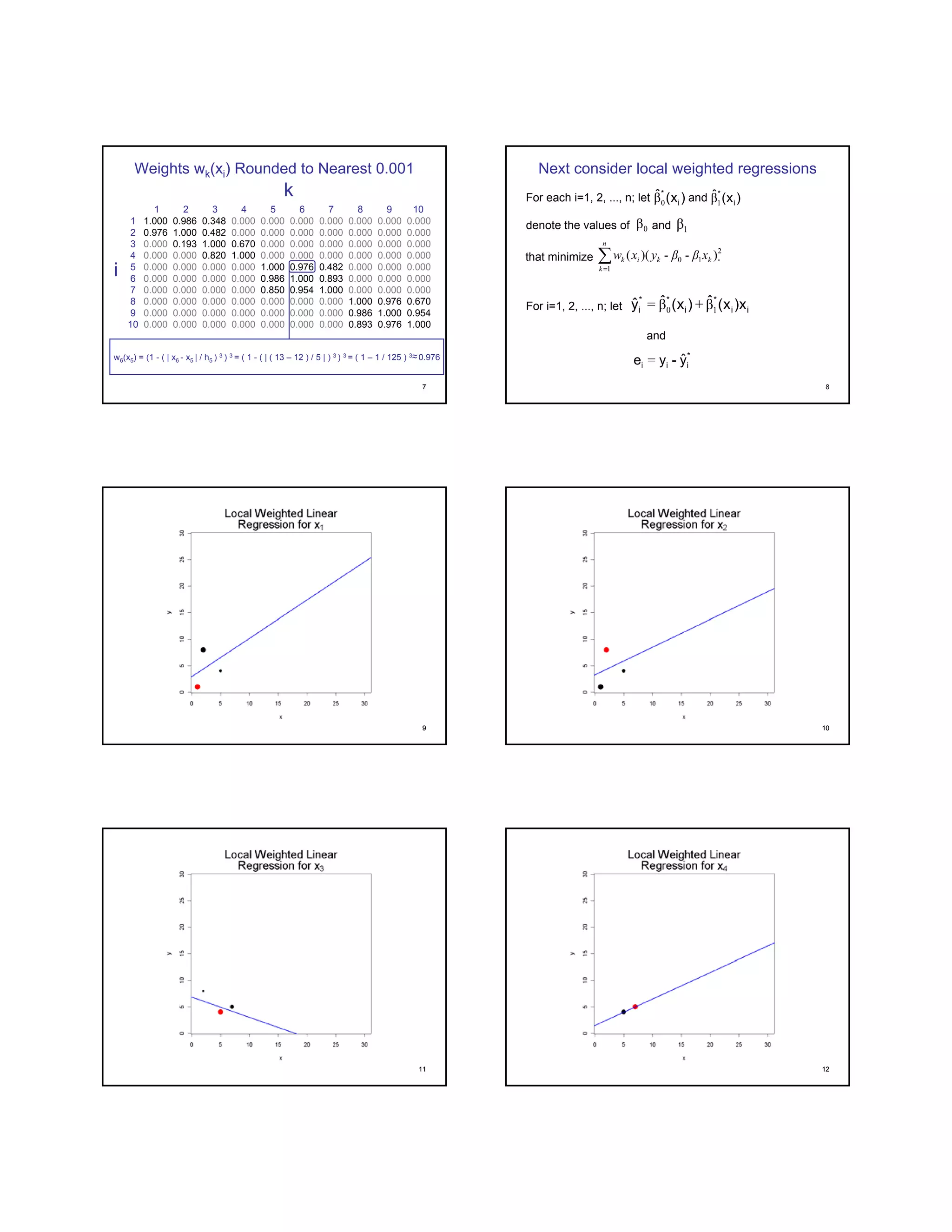

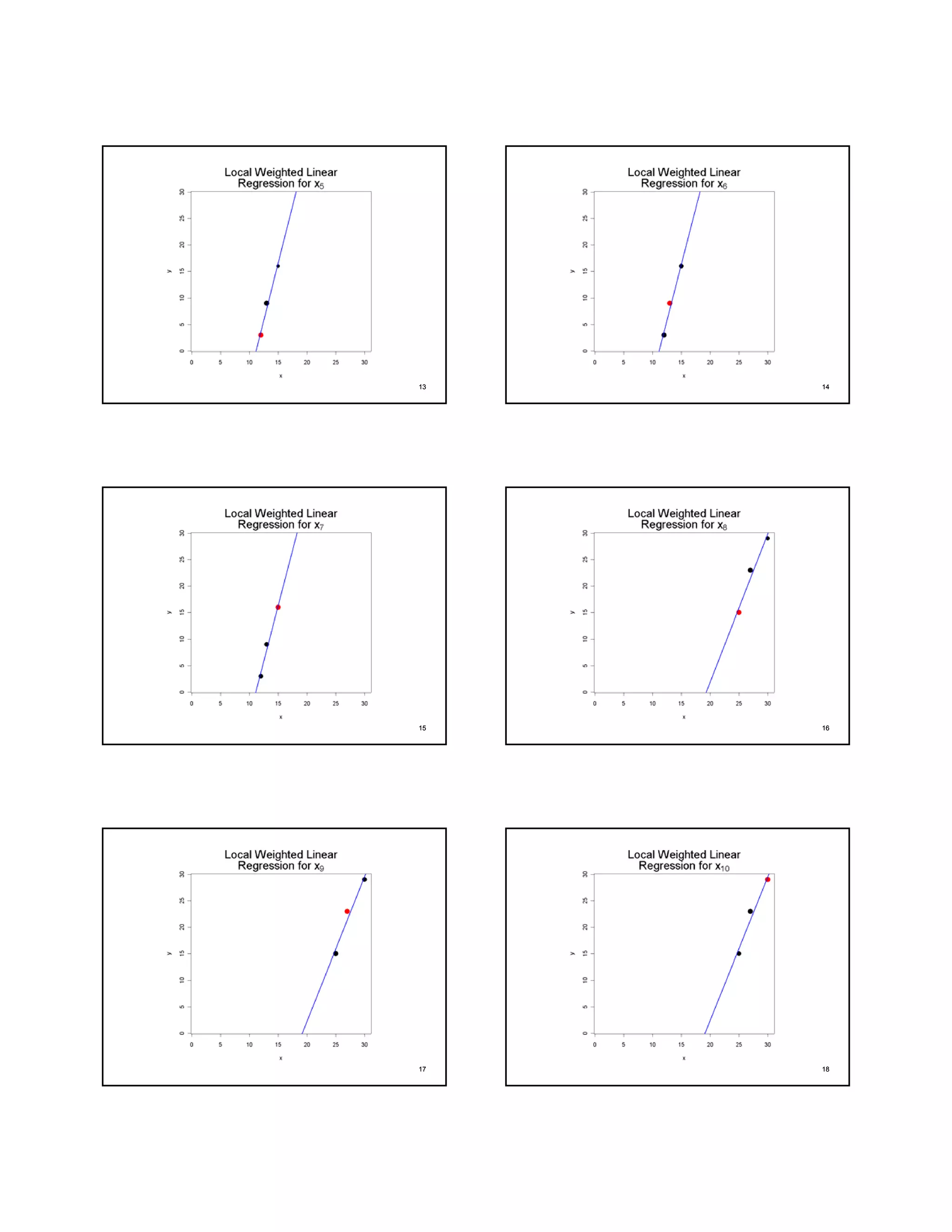

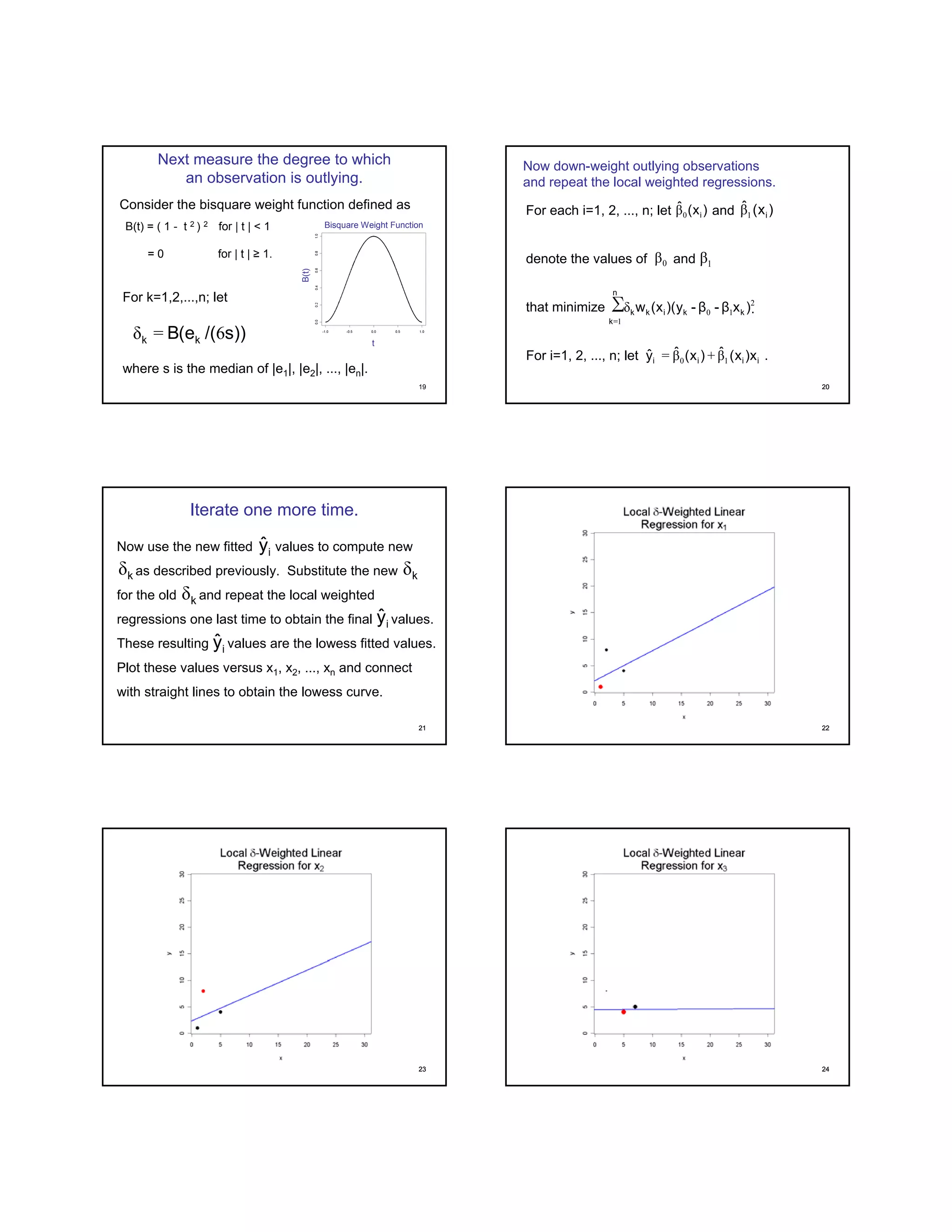

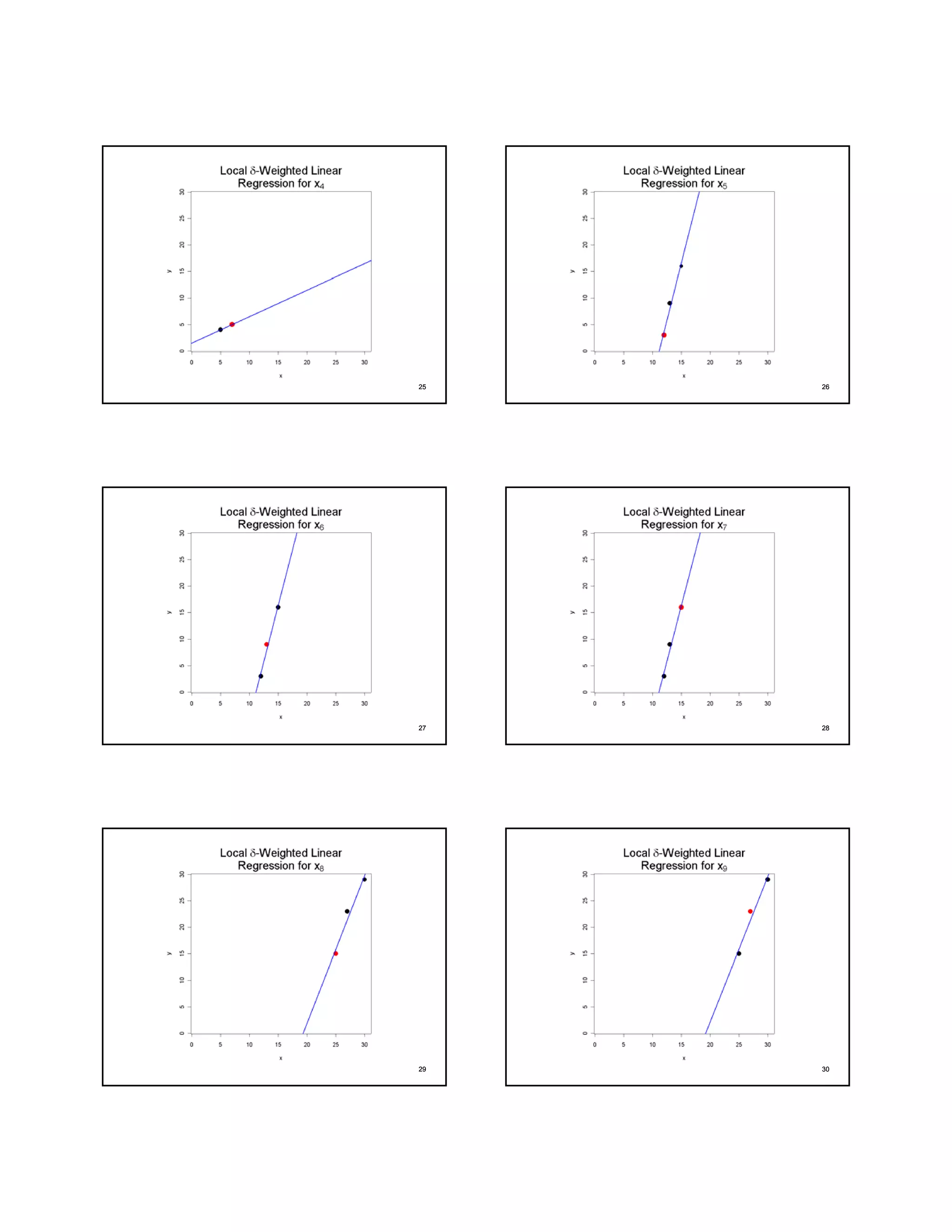

The document describes LOWESS (LOcally WEighted polynomial regreSSion, also expanded as locally weighted scatterplot smoothing), a method for fitting a smooth curve through scattered data points. For each point, a weighted least-squares regression is fitted to its neighboring data points using tricube weights, so that neighbors closer to the point receive more weight and those farther away receive less. The residuals from that fit are then used to compute robustness weights that down-weight outliers, and the local regressions are repeated with the updated weights; after a few such iterations the fitted values form the final smoothed curve. In R, the lowess() function applies this method.
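The procedure summarized above can be illustrated with a minimal pure-Python sketch. It is not the implementation behind R's lowess(). The function name `lowess_sketch`, the parameter names `frac` and `iterations`, the bisquare robustness weights scaled by six times the median absolute residual, and the local linear (degree-1) fit are all assumptions made for this illustration.

```python
from statistics import median


def tricube(u):
    """Tricube kernel: (1 - |u|^3)^3 for |u| < 1, otherwise 0."""
    u = abs(u)
    return (1.0 - u ** 3) ** 3 if u < 1.0 else 0.0


def bisquare(u):
    """Bisquare robustness weight: (1 - u^2)^2 for |u| < 1, otherwise 0."""
    u = abs(u)
    return (1.0 - u ** 2) ** 2 if u < 1.0 else 0.0


def weighted_line_at(xs, ys, ws, x0):
    """Fit y = a + b*x by weighted least squares and evaluate the line at x0."""
    sw = sum(ws)
    if sw <= 0.0:
        # Degenerate case: every weight is zero, fall back to the plain mean.
        return sum(ys) / len(ys)
    mx = sum(w * x for w, x in zip(ws, xs)) / sw
    my = sum(w * y for w, y in zip(ws, ys)) / sw
    sxx = sum(w * (x - mx) ** 2 for w, x in zip(ws, xs))
    if sxx <= 1e-12:
        # No spread in x among the weighted points: use the weighted mean.
        return my
    sxy = sum(w * (x - mx) * (y - my) for w, x, y in zip(ws, xs, ys))
    return my + (sxy / sxx) * (x0 - mx)


def lowess_sketch(x, y, frac=2 / 3, iterations=3):
    """Hypothetical helper: smooth y over x with robust local linear fits."""
    n = len(x)
    k = max(2, min(n, round(frac * n)))  # number of neighbors per local fit
    robust = [1.0] * n                   # robustness weights, all 1 at first
    fitted = list(y)
    for step in range(iterations + 1):
        new_fit = []
        for i in range(n):
            dist = [abs(xj - x[i]) for xj in x]
            # Bandwidth: distance to the k-th nearest point (guarded against 0).
            h = max(sorted(dist)[k - 1], 1e-12)
            ws = [r * tricube(d / h) for r, d in zip(robust, dist)]
            new_fit.append(weighted_line_at(x, y, ws, x[i]))
        fitted = new_fit
        resid = [yi - fi for yi, fi in zip(y, fitted)]
        s = median(abs(r) for r in resid)
        if step == iterations or s < 1e-12:
            break
        # Down-weight points with large residuals before the next pass.
        robust = [bisquare(r / (6.0 * s)) for r in resid]
    return fitted


# Usage: a straight line with a single outlier at x = 10.
x = [float(i) for i in range(20)]
y = [2.0 * xi + 1.0 for xi in x]
y[10] = 100.0
smooth = lowess_sketch(x, y)
```

The defaults mirror the `f = 2/3` and `iter = 3` defaults of R's lowess(). Setting `iterations=0` skips the robustness passes, which makes the effect of the reweighting step easy to compare on data with outliers.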