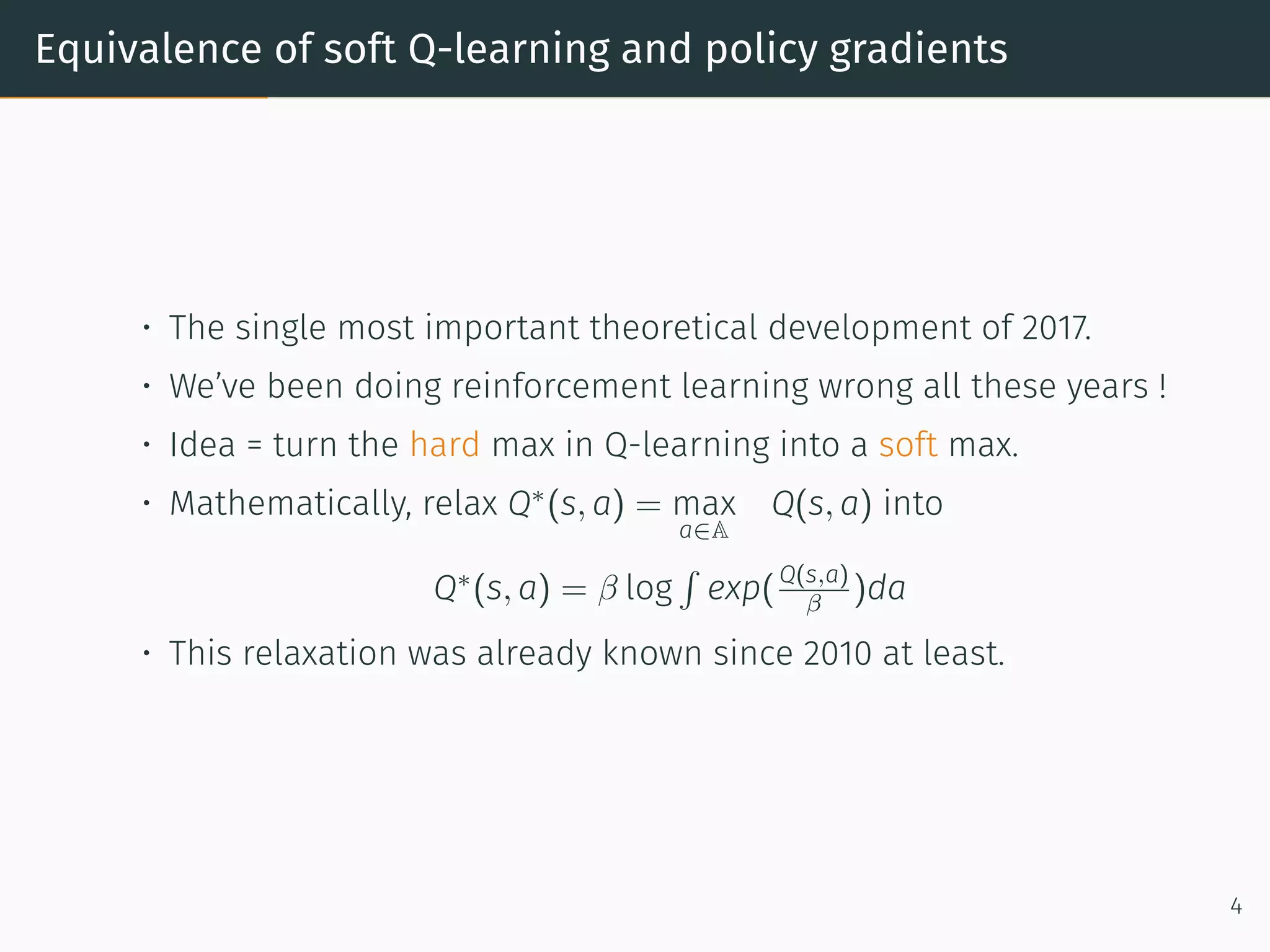

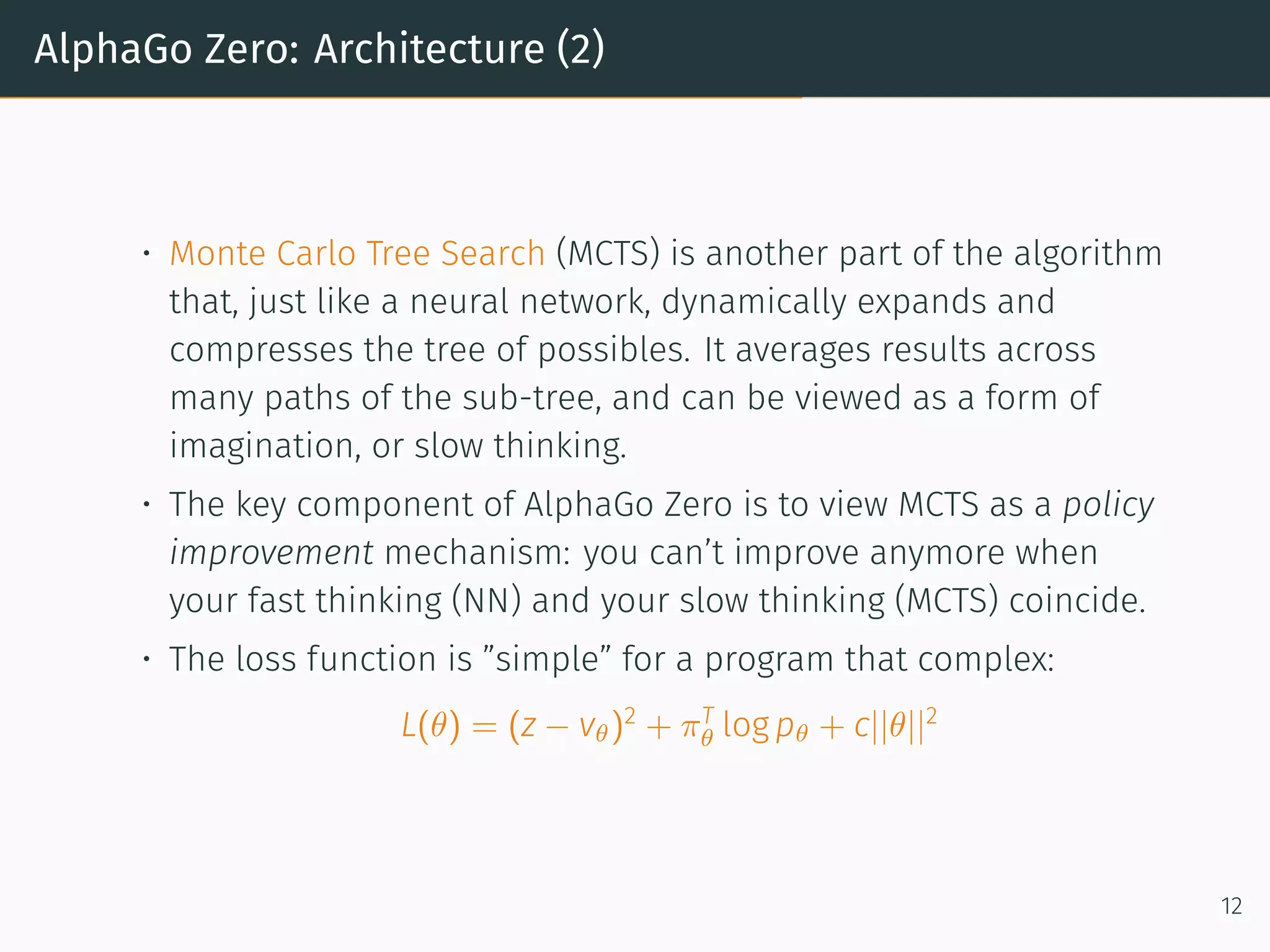

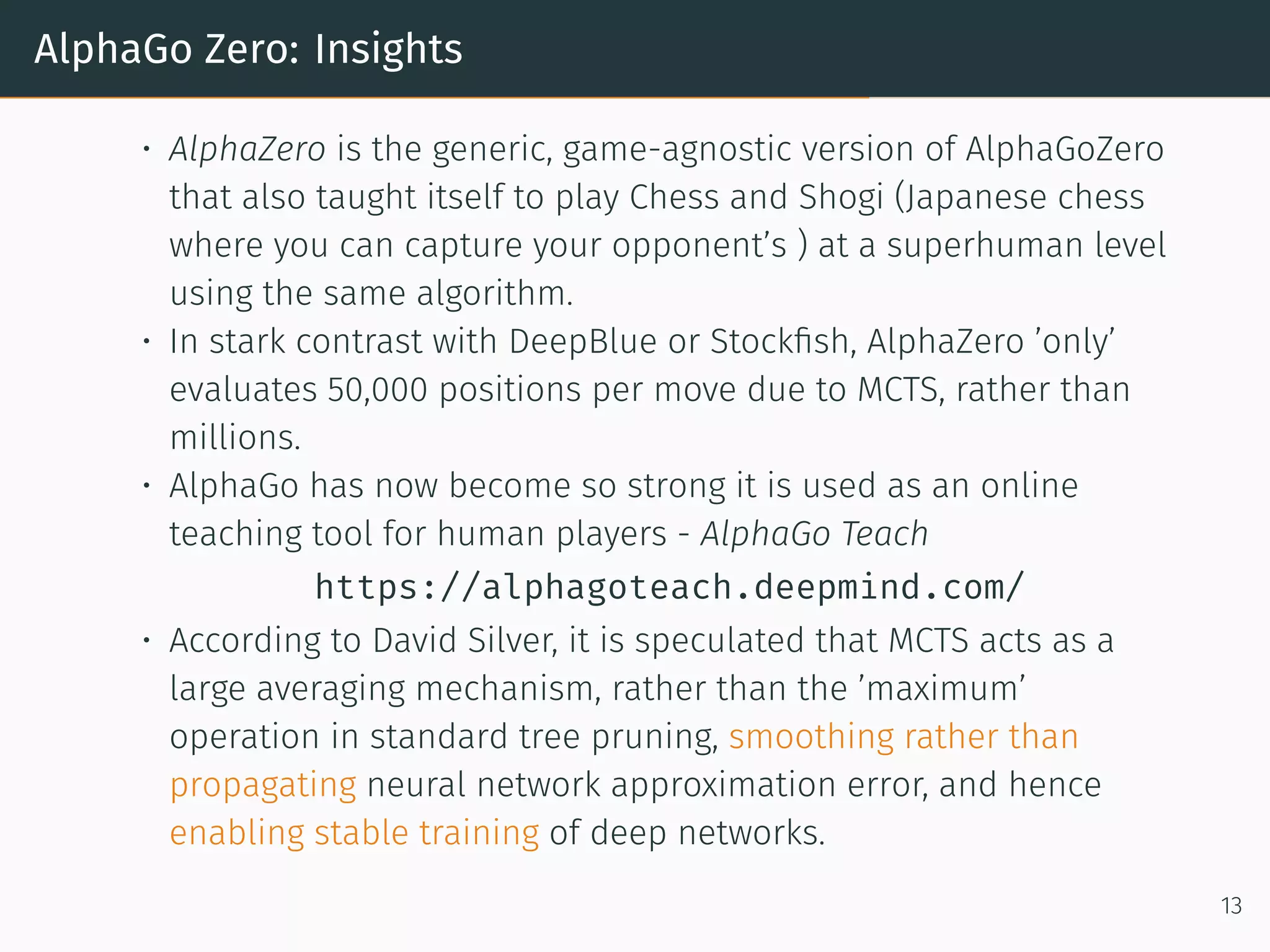

This document surveys advances in reinforcement learning (RL): key theoretical developments, algorithmic improvements, and practical applications such as AlphaGo Zero’s success in board games. It highlights the significance of soft Q-learning and policy gradients, traces the evolution of continuous control methods such as TRPO and PPO, and offers experiment-design tips for reproducibility and efficiency. It also addresses open challenges in sample efficiency and robustness, particularly in complex settings such as real-time strategy games.