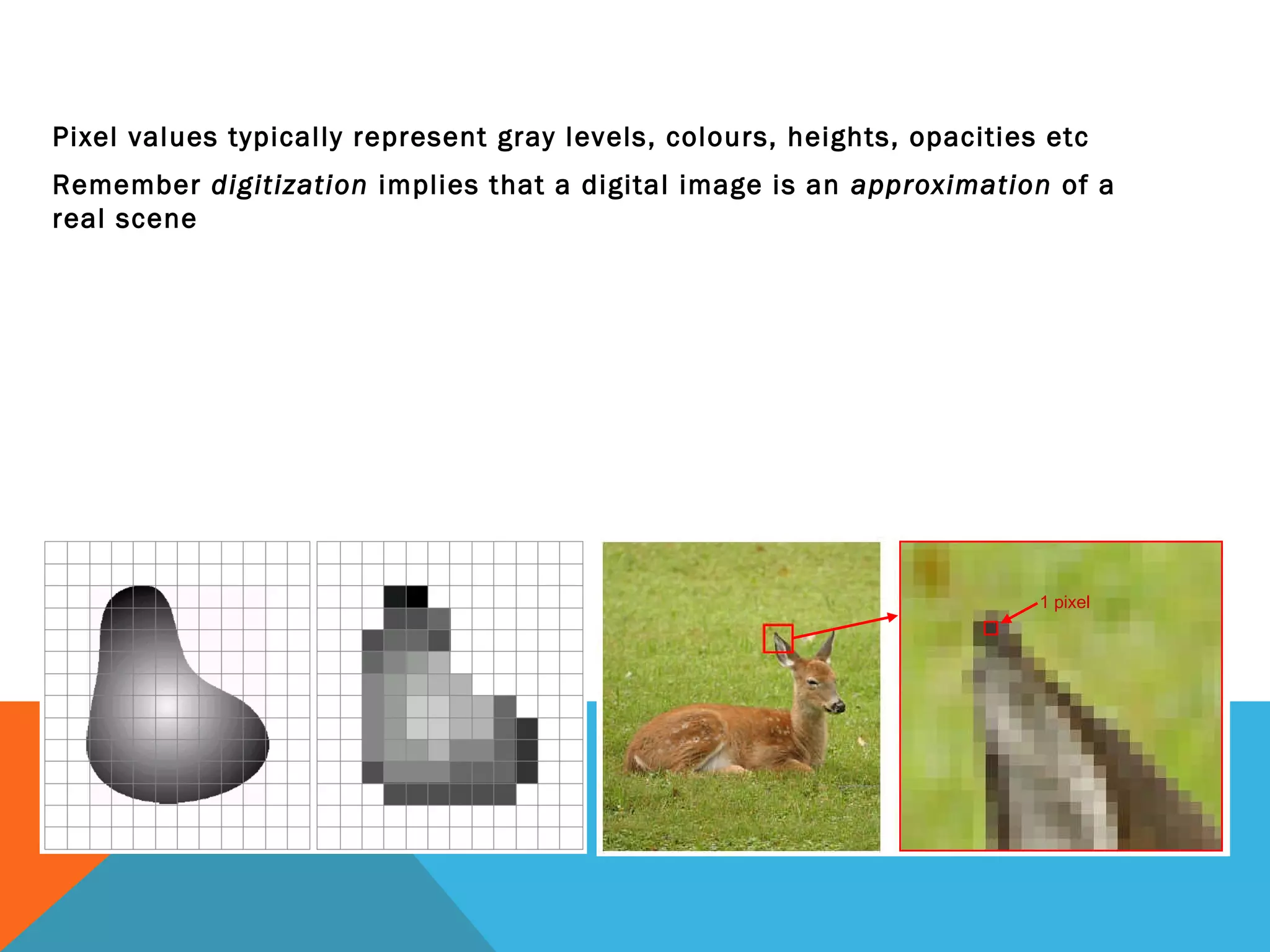

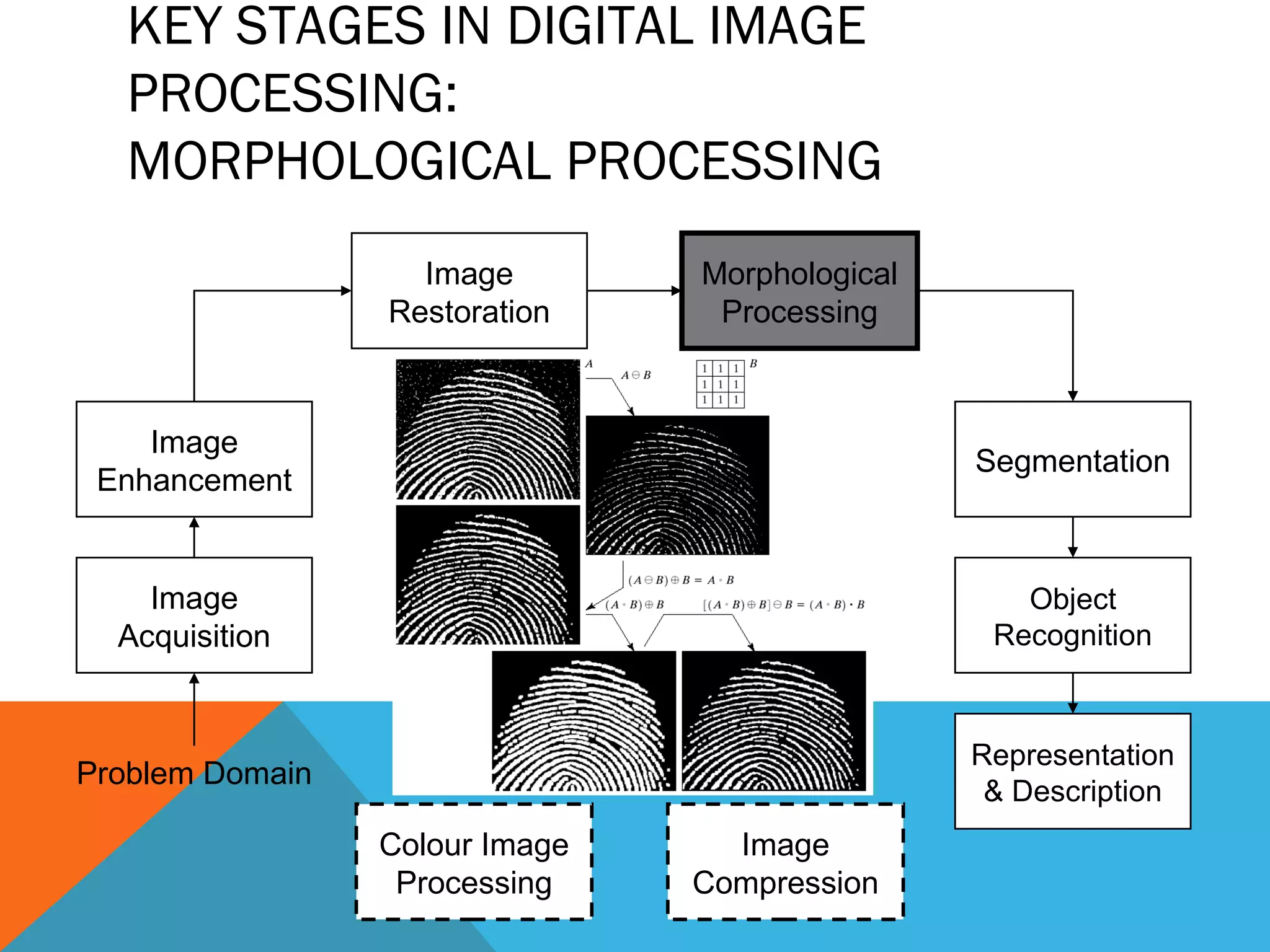

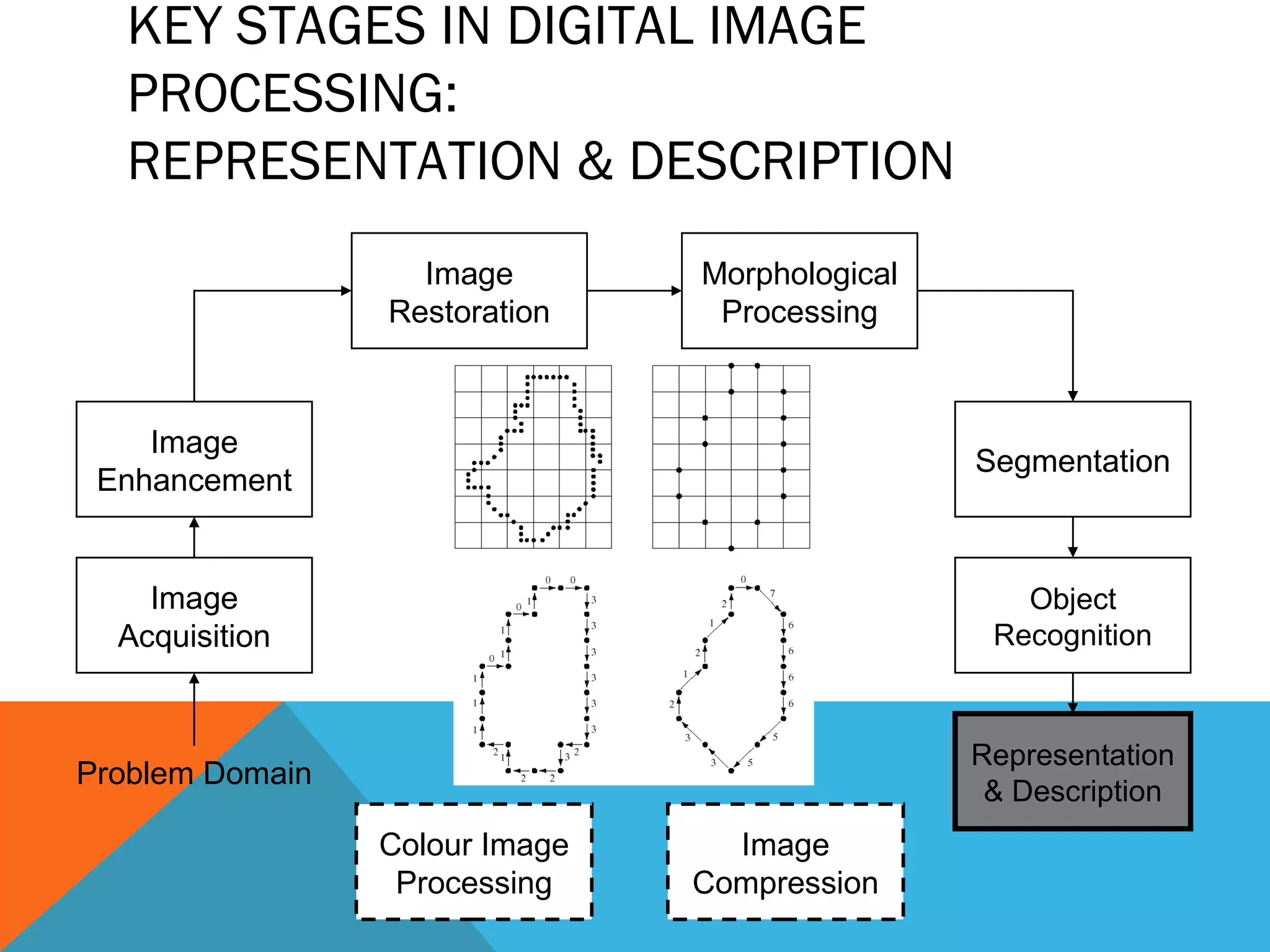

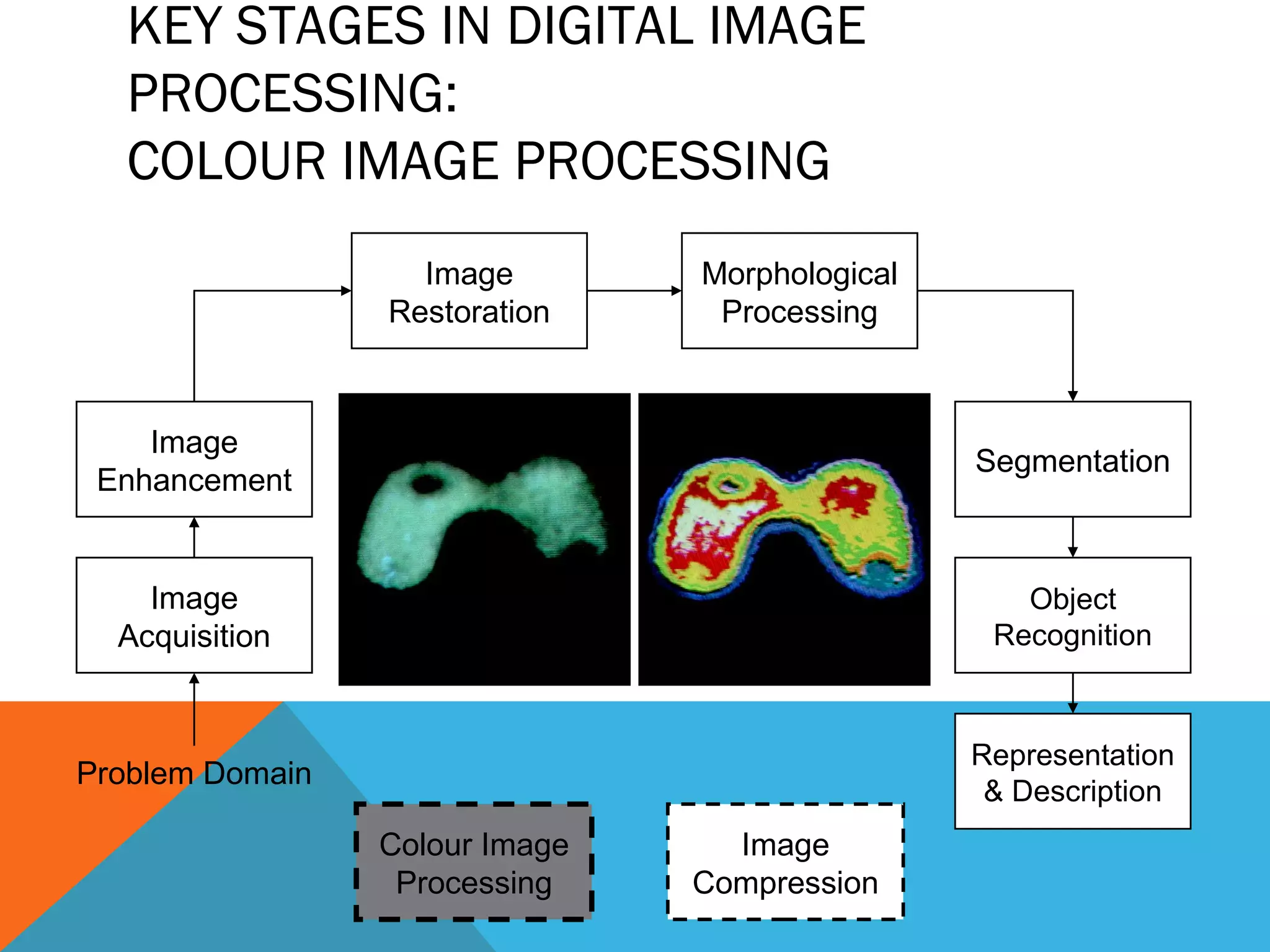

This presentation introduces digital image processing. It begins by defining digital images and digital image processing, a field with two main aims: improving images for human interpretation and processing images for machine perception. It then reviews the history of the field from the 1920s to today and gives key application examples, including medical imaging, satellite imagery, and industrial inspection. The main stages of digital image processing are outlined, including image acquisition, enhancement, restoration, segmentation, and compression. The presentation concludes with an overview of a system for automatic face recognition using color-based segmentation.
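
To make the idea of color-based segmentation concrete, here is a minimal sketch of how candidate skin pixels might be separated from the background before a face is located. The summary does not say which color rule the presentation uses, so the RGB thresholds below are an assumption (a commonly cited heuristic for skin under uniform daylight), and the names `is_skin` and `segment_skin` are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0..255


def is_skin(pixel: Pixel) -> bool:
    """Return True if the pixel passes a simple RGB skin-color rule.

    The thresholds are an illustrative assumption, not values taken
    from the presentation.
    """
    r, g, b = pixel
    return (
        r > 95 and g > 40 and b > 20             # bright enough in every channel
        and max(r, g, b) - min(r, g, b) > 15     # not gray: channels must differ
        and abs(r - g) > 15                      # red clearly separated from green
        and r > g and r > b                      # red is the dominant channel
    )


def segment_skin(image: List[List[Pixel]]) -> List[List[int]]:
    """Build a binary mask: 1 where the pixel looks like skin, 0 elsewhere."""
    return [[1 if is_skin(p) else 0 for p in row] for row in image]


if __name__ == "__main__":
    # A tiny 2x2 "image": two skin-like tones, one dark gray, one blue.
    image = [
        [(220, 170, 140), (30, 30, 30)],
        [(10, 120, 200), (200, 150, 120)],
    ]
    for row in segment_skin(image):
        print(row)
```

In a full system, the resulting mask would typically be cleaned up and its connected regions examined as face candidates. Those later steps are outside this sketch.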