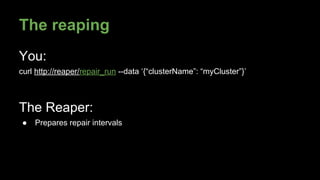

This document discusses the challenges of maintaining data consistency in Cassandra clusters and introduces Cassandra Reaper, a tool developed by Spotify to automate repairs. Reaper orchestrates the repair process, reduces disk I/O, and makes repairs more efficient while minimizing the need for manual oversight. Its key features are caution (it avoids causing node failures), resilience to errors, efficient parallelism, and persistent state management.
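To make the ideas of orchestration, resilience, and persistent state more concrete, here is a minimal Python sketch. It is not Reaper's actual implementation: the function names (`split_ring`, `run_repair`), the JSON state file, and the retry count are all hypothetical, and `repair_segment` is a stand-in callable rather than a real Cassandra API. It also assumes the cluster uses a partitioner with a signed 64-bit token range. The sketch splits the token range into small segments, repairs them one at a time with retries, and records progress so that an interrupted run can resume where it stopped.

```python
import json
import os
from typing import Callable, List, Tuple

Segment = Tuple[int, int]

# Assumption: a signed 64-bit token range (as with Murmur3Partitioner).
MIN_TOKEN = -2**63
MAX_TOKEN = 2**63 - 1


def split_ring(segment_count: int) -> List[Segment]:
    """Split the full token range into contiguous (start, end) segments."""
    width = (MAX_TOKEN - MIN_TOKEN) // segment_count
    bounds = [MIN_TOKEN + i * width for i in range(segment_count)] + [MAX_TOKEN]
    return list(zip(bounds[:-1], bounds[1:]))


def run_repair(
    segments: List[Segment],
    repair_segment: Callable[[Segment], bool],  # hypothetical repair hook
    state_path: str,
    max_retries: int = 3,
) -> bool:
    """Repair segments one at a time, saving progress after each success.

    Returns True if every segment was repaired, False if a segment kept
    failing. Completed segments are skipped on the next call.
    """
    done = set()
    if os.path.exists(state_path):
        with open(state_path) as f:
            done = {tuple(s) for s in json.load(f)}

    for seg in segments:
        if seg in done:
            continue  # already repaired in an earlier run
        for _ in range(max_retries):
            if repair_segment(seg):
                done.add(seg)
                with open(state_path, "w") as f:
                    json.dump(sorted(done), f)  # persist progress
                break
        else:
            # All retries failed: stop, keeping the saved state for a later run.
            return False
    return True
```

Working in small segments keeps the load of each step bounded, and saving state after every segment means a failure costs at most one segment of repeated work. A real orchestrator would also need to coordinate segments running in parallel, which this sketch leaves out.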