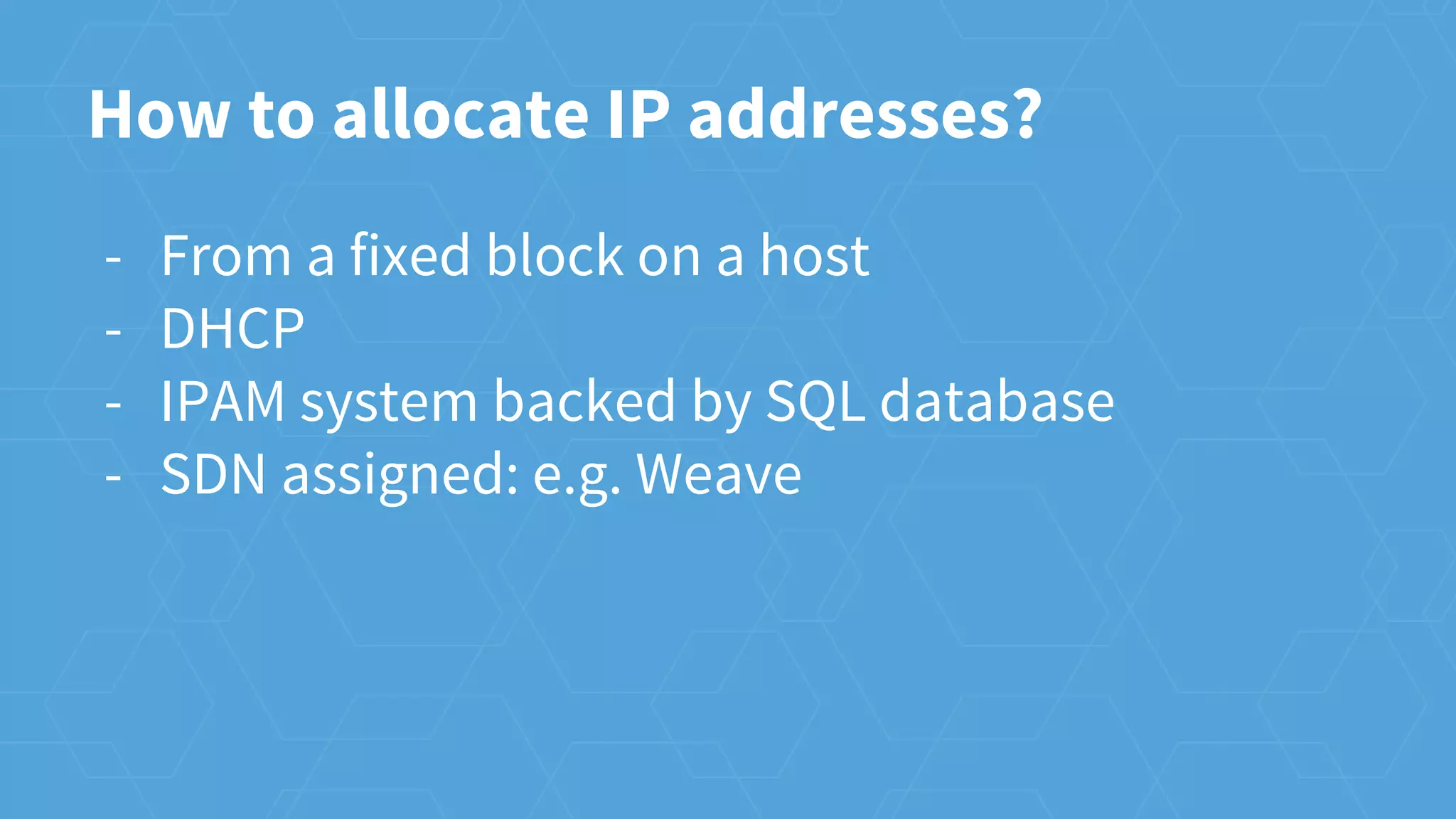

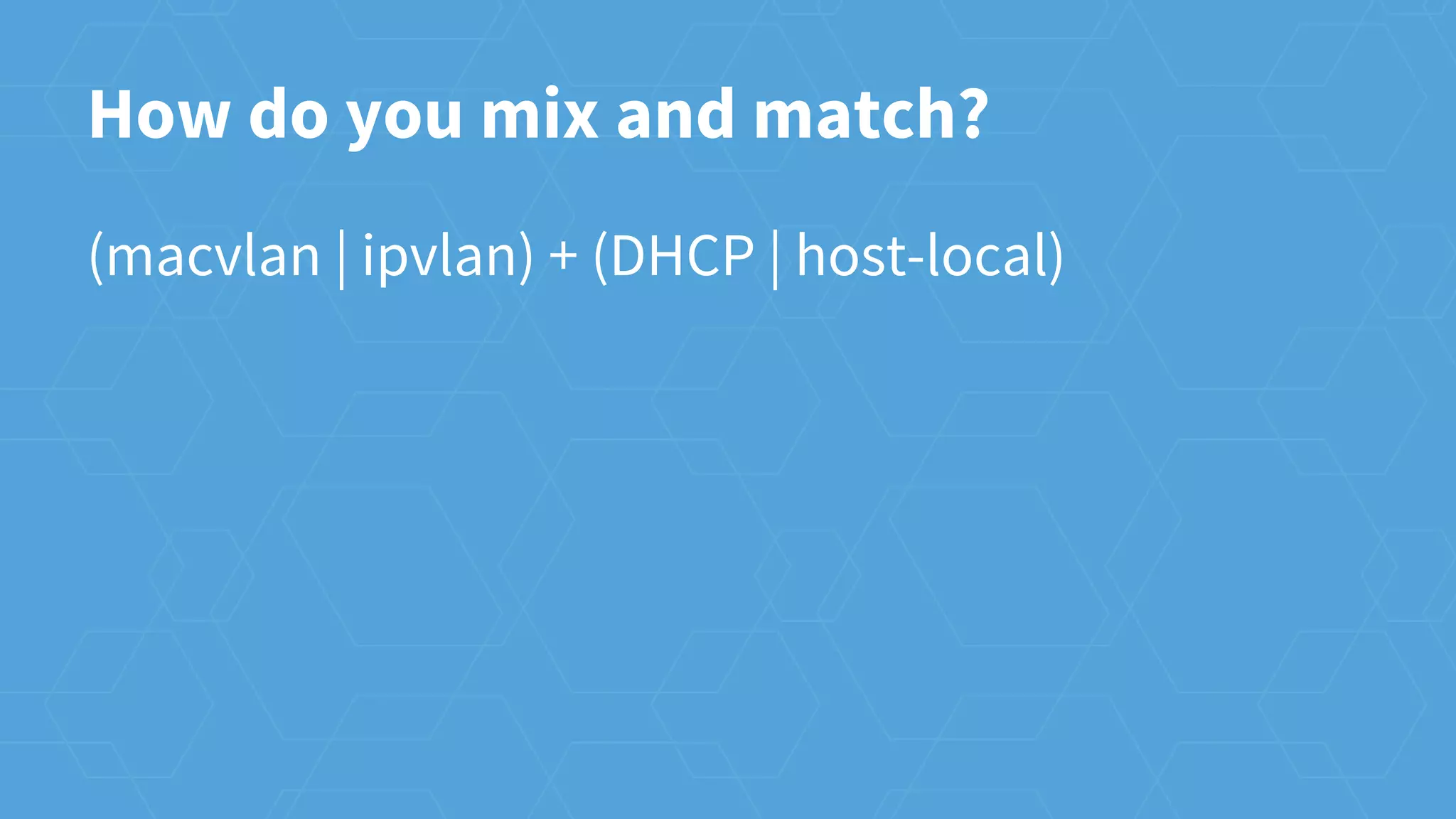

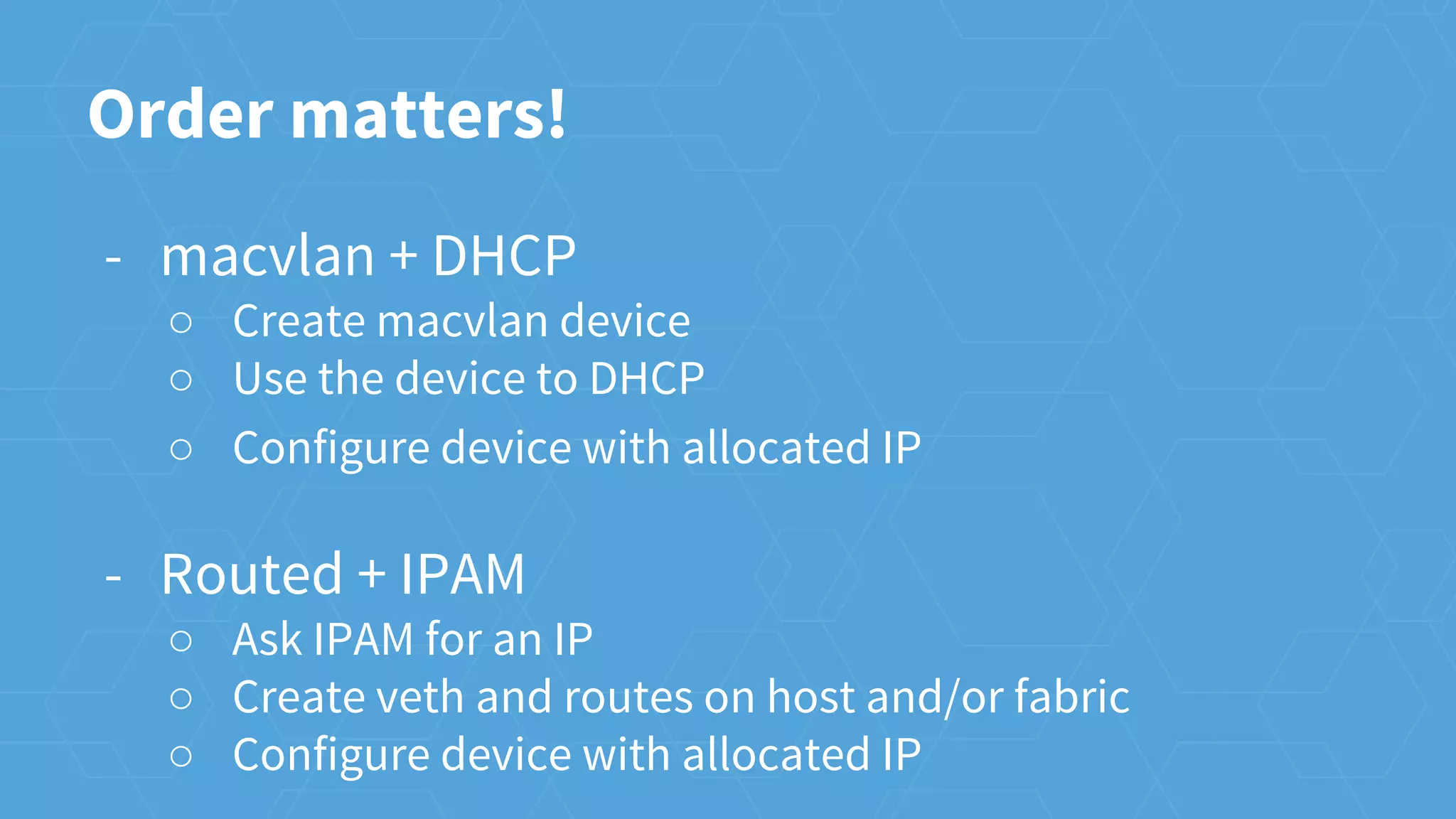

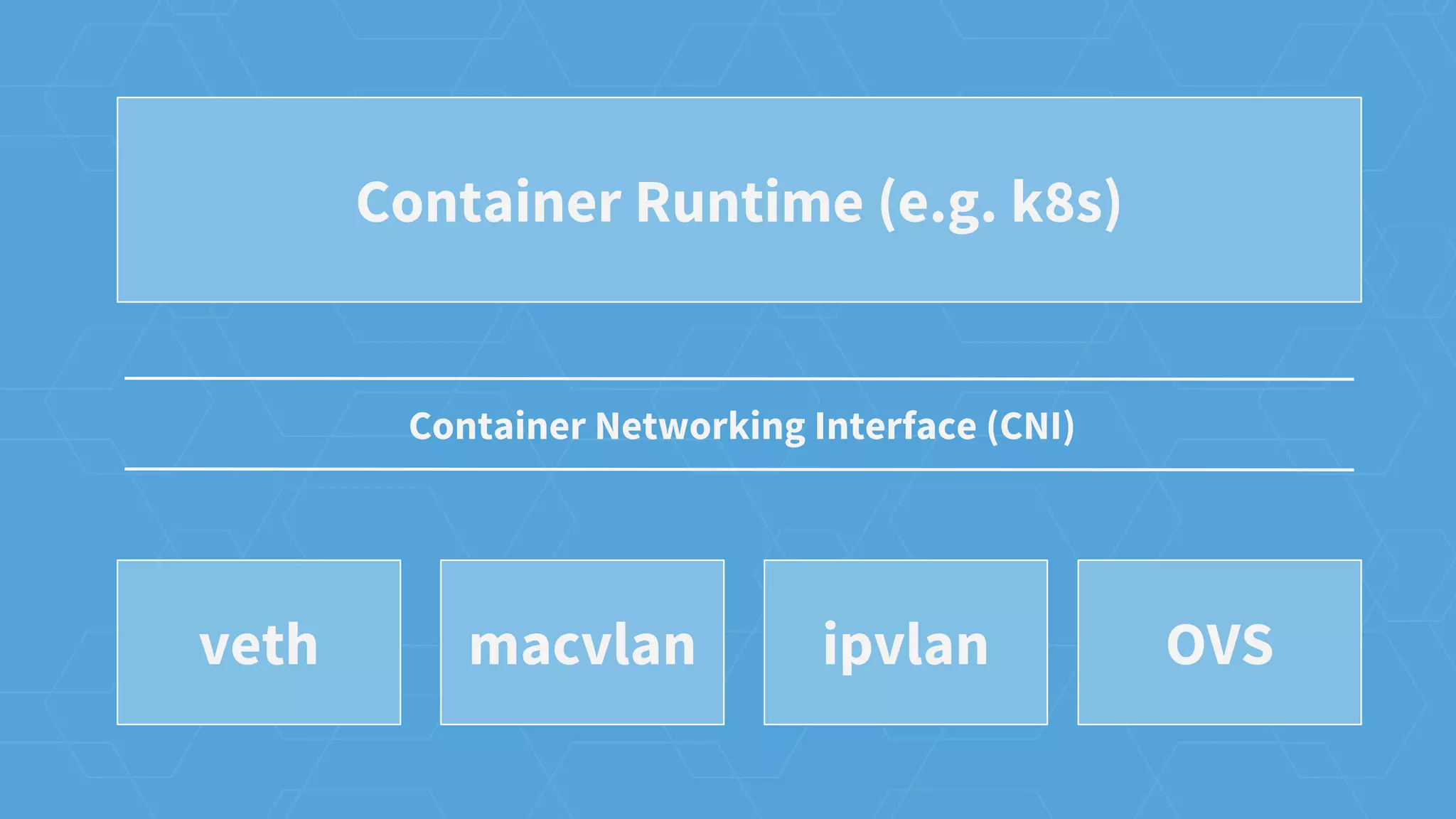

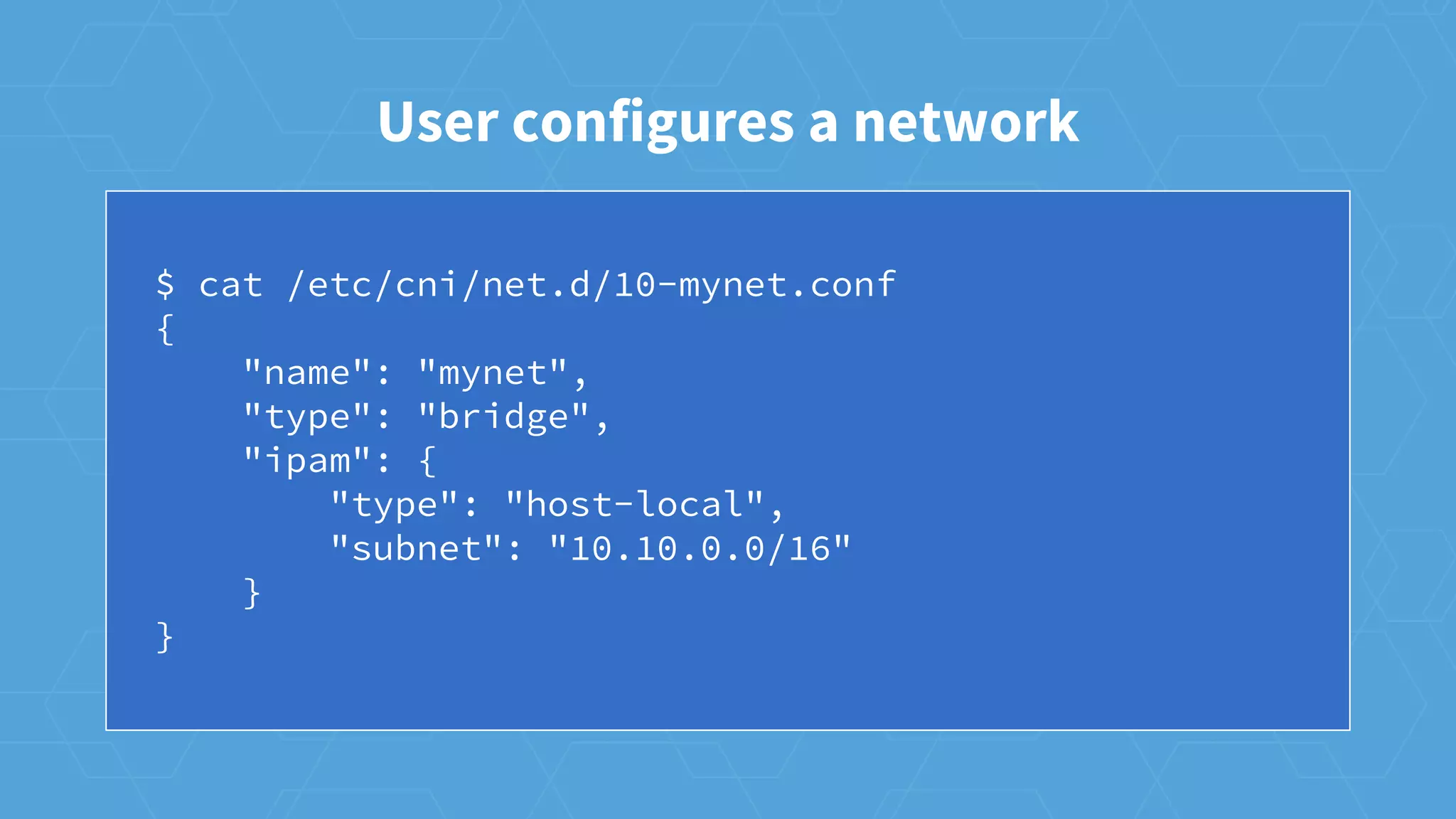

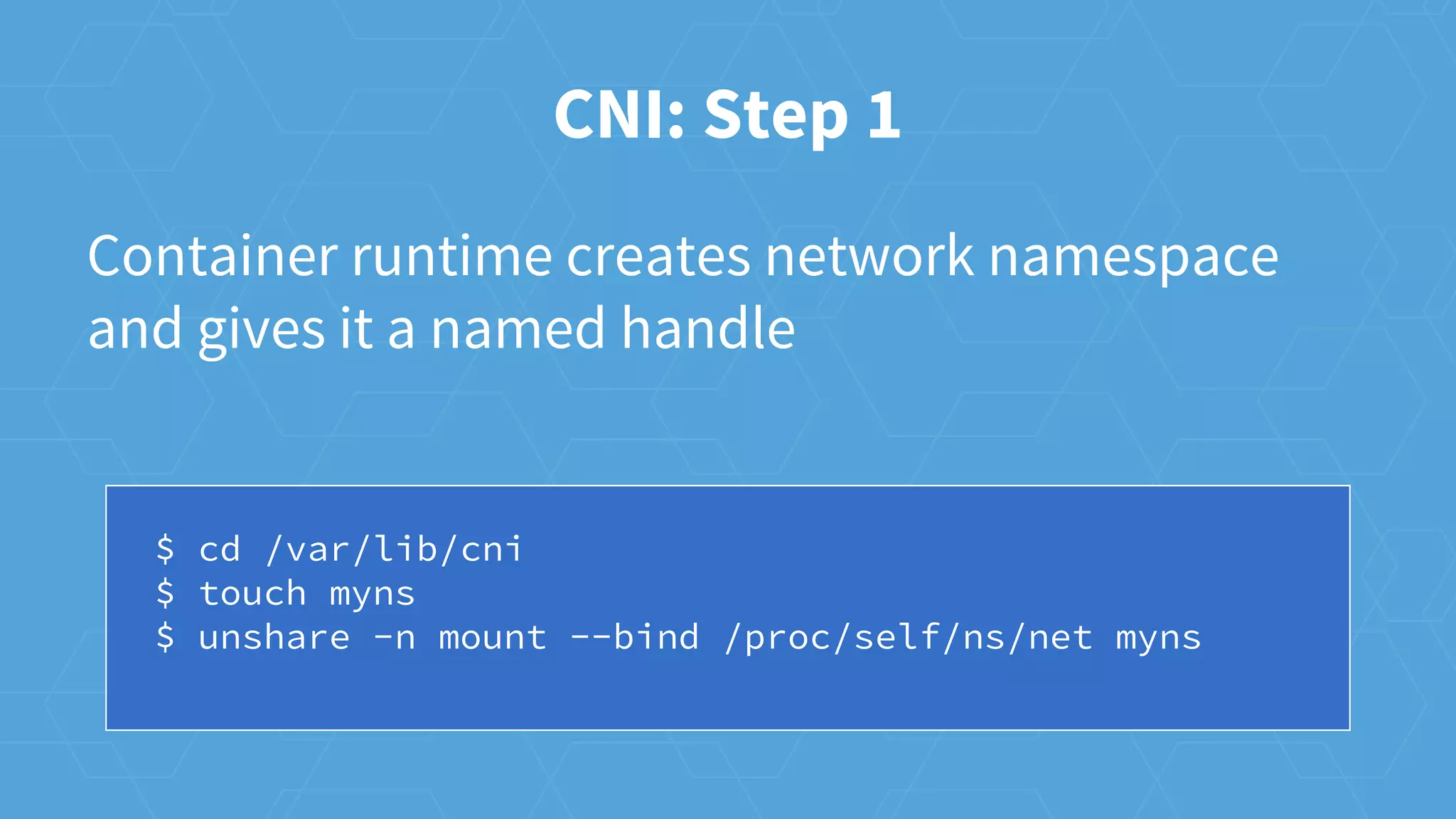

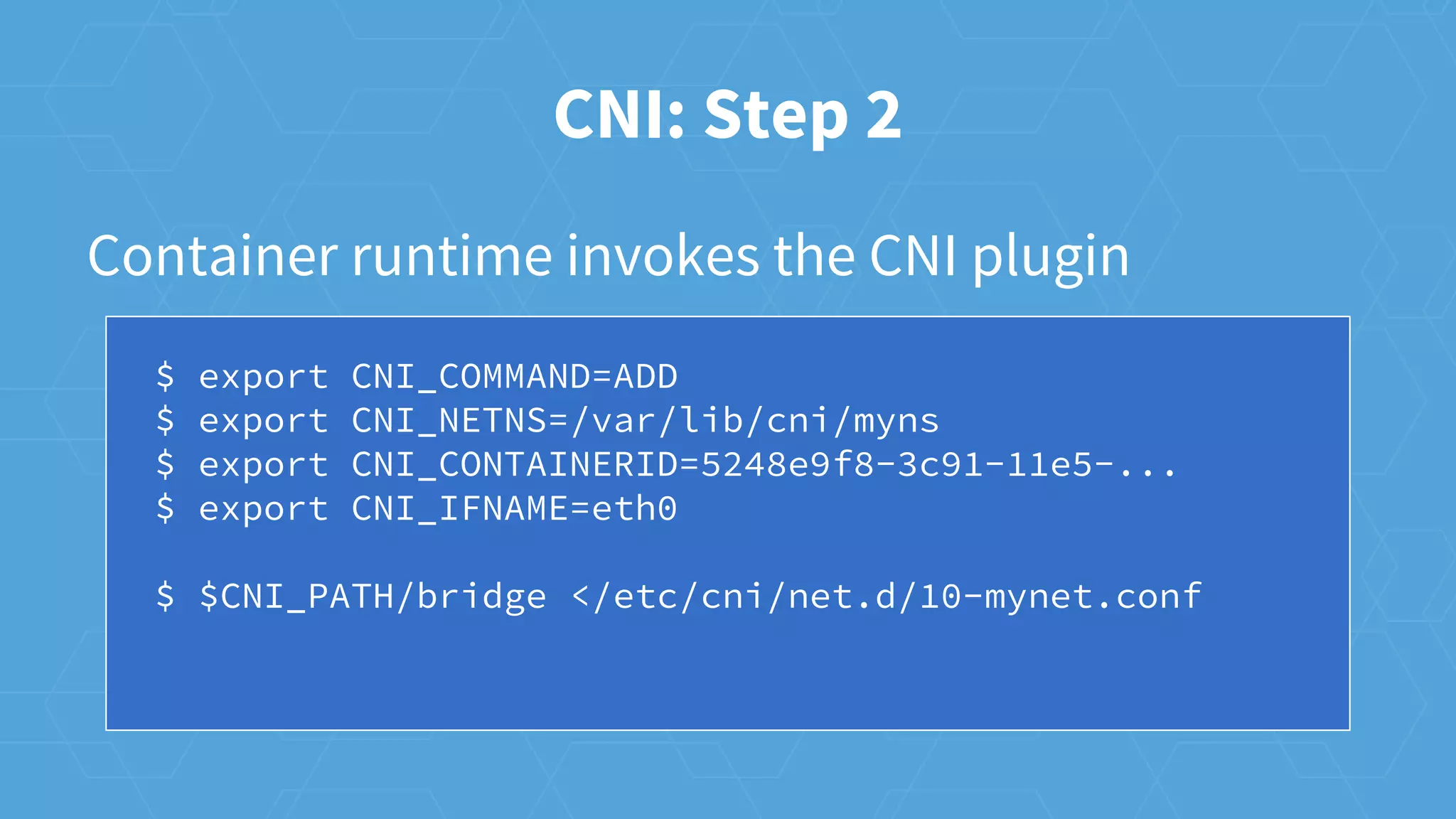

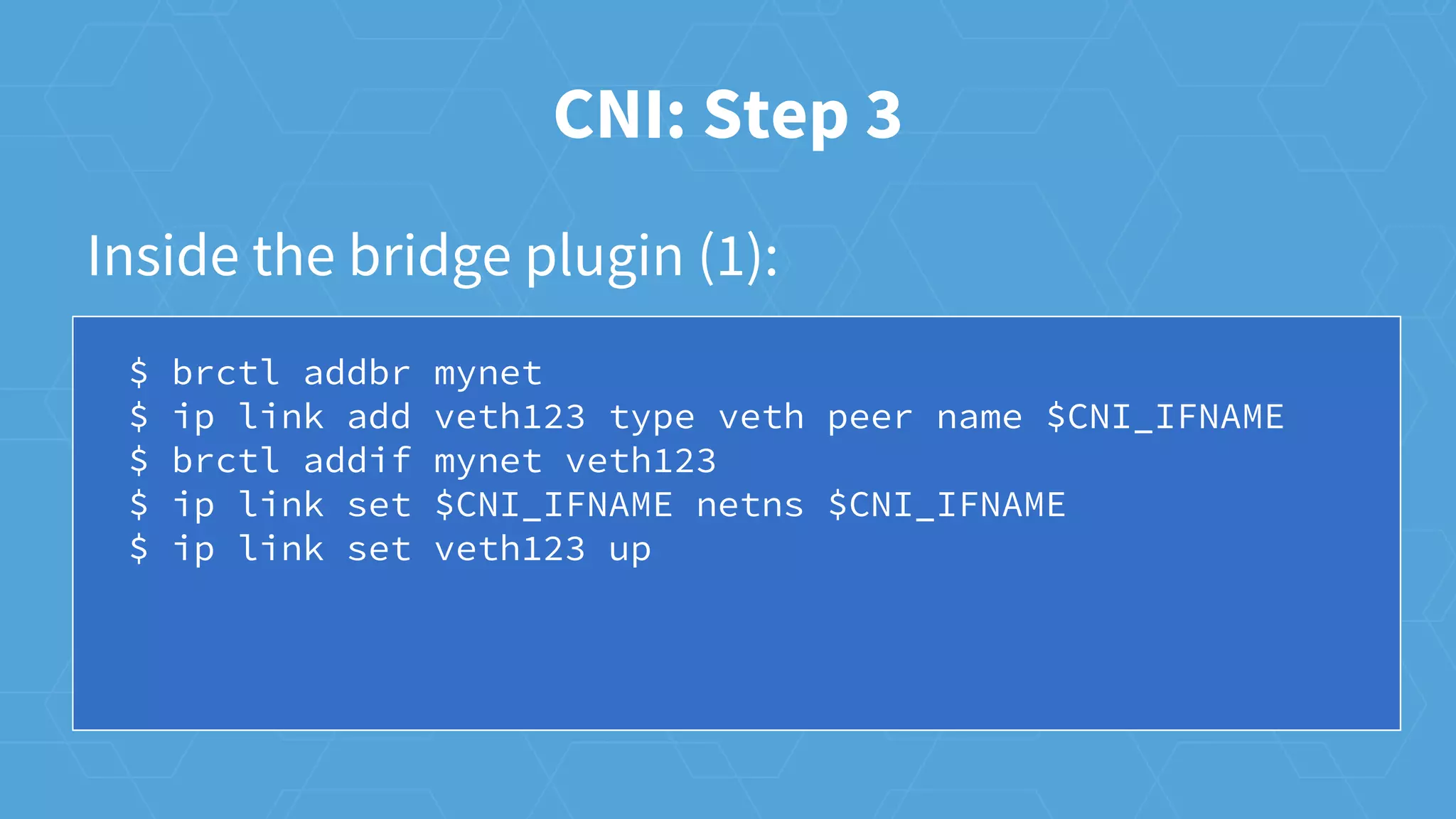

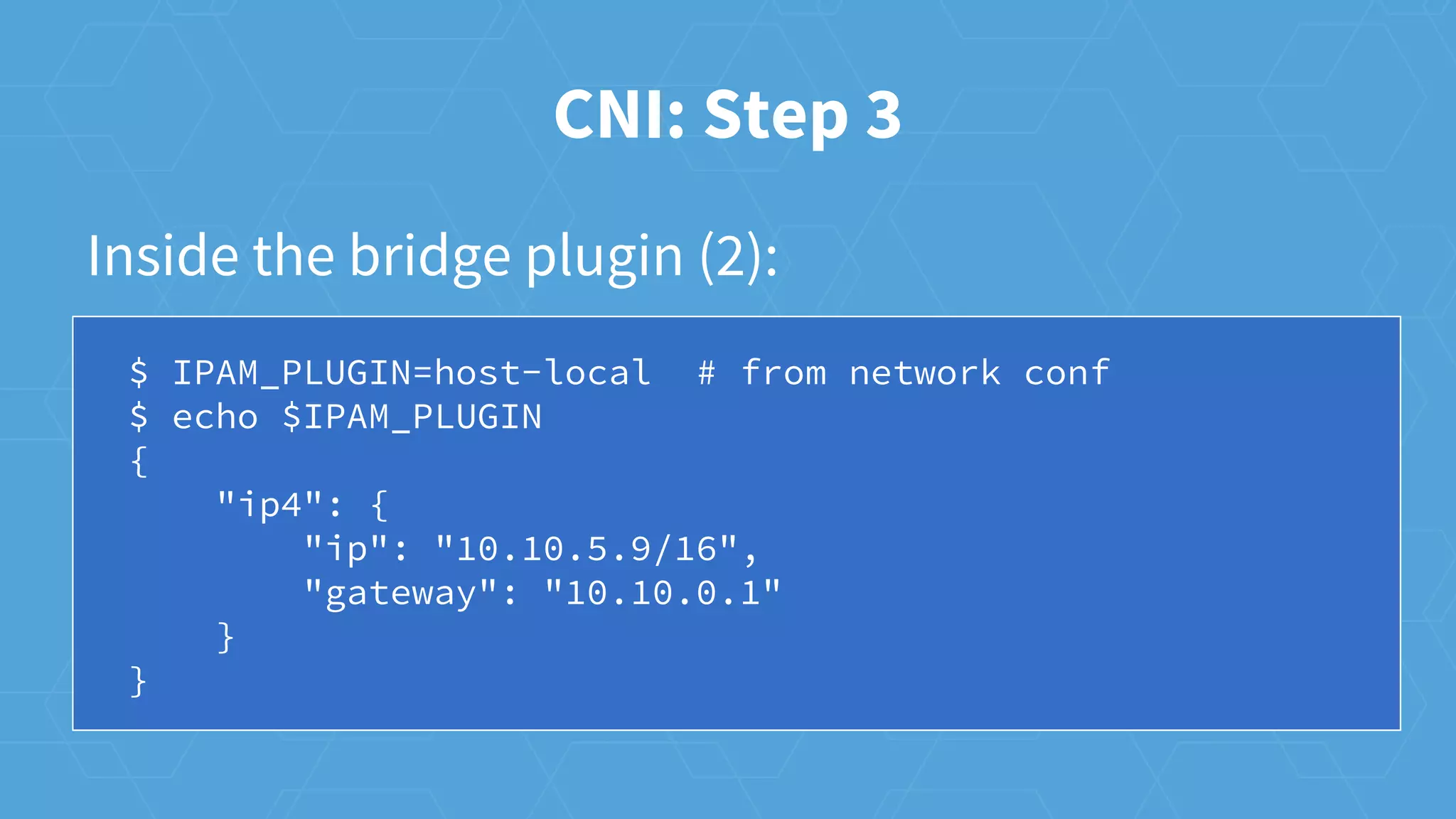

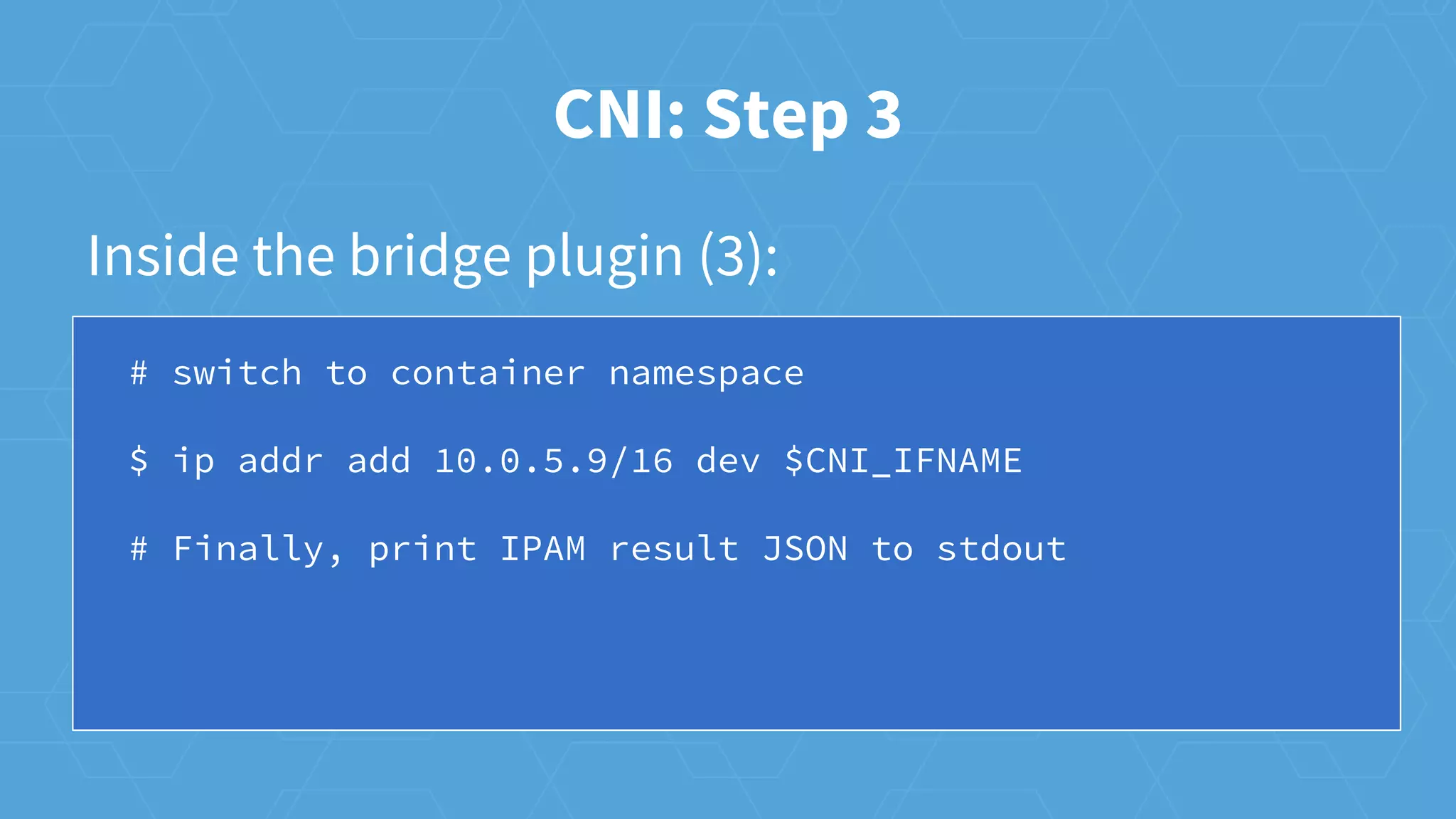

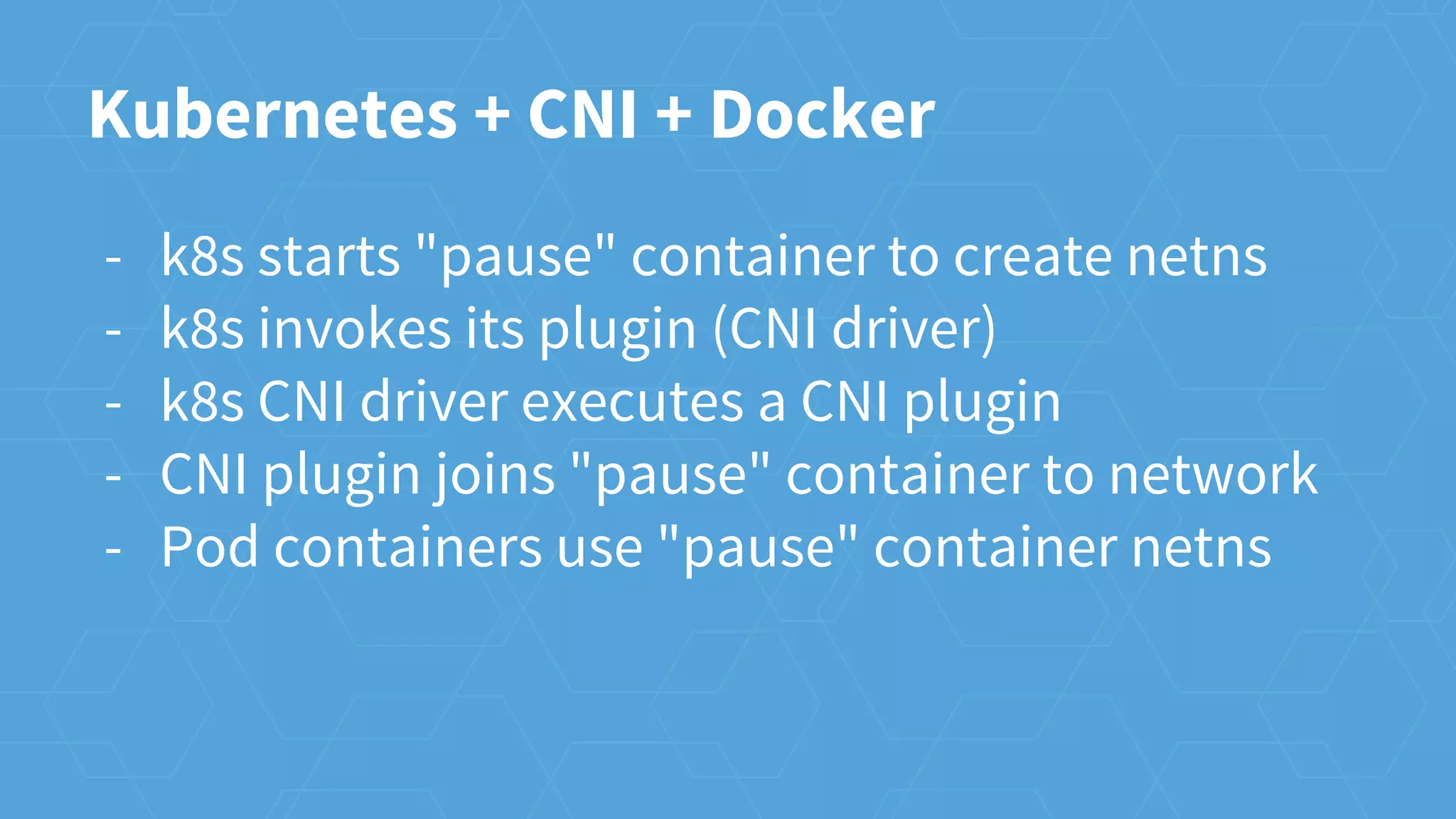

The document provides an overview of container networking in Kubernetes. It highlights the Kubernetes networking model, in which every pod is assigned its own IP address, and discusses networking plugins such as macvlan and ipvlan. It outlines how networks are configured for the Container Network Interface (CNI) using JSON configuration files, and details the steps involved in adding a container to a network and removing it. The document also covers how CNI plugins integrate with Kubernetes and possible future enhancements to Kubernetes networking.
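The CNI workflow summarized above can be made concrete with a minimal Python sketch. It assumes CNI 1.0 conventions: the runtime runs the plugin binary named by the config's `type` field, passes the operation (`ADD` or `DEL`) and container details through `CNI_*` environment variables, and writes the JSON network configuration to the plugin's stdin. The network name `mynet`, host interface `eth0`, subnet, container ID, netns path, and the `/opt/cni/bin` plugin directory are hypothetical placeholders. The helper functions are illustrative only and are not part of any CNI library. The sketch builds the invocation but does not execute a plugin.

```python
import json
from typing import Dict, Tuple


def macvlan_network_config(name: str, master: str, subnet: str) -> dict:
    """Build a CNI network configuration for the macvlan plugin,
    using host-local IPAM with a single subnet range.
    All argument values used below are hypothetical placeholders."""
    return {
        "cniVersion": "1.0.0",
        "name": name,
        "type": "macvlan",      # plugin binary the runtime looks up in CNI_PATH
        "master": master,       # host interface the macvlan device is attached to
        "mode": "bridge",       # macvlan mode; other modes include private and vepa
        "ipam": {
            "type": "host-local",             # IPAM plugin that allocates pod IPs
            "ranges": [[{"subnet": subnet}]],
        },
    }


def build_invocation(command: str, container_id: str, netns: str,
                     ifname: str, config: dict,
                     cni_path: str = "/opt/cni/bin") -> Tuple[Dict[str, str], bytes]:
    """Return the environment variables and stdin a runtime would pass
    to a CNI plugin. ADD attaches the container's network namespace to
    the network; DEL removes it. Nothing is executed here."""
    if command not in ("ADD", "DEL", "CHECK"):
        raise ValueError(f"unsupported CNI command: {command}")
    env = {
        "CNI_COMMAND": command,
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns,
        "CNI_IFNAME": ifname,     # interface name created inside the container
        "CNI_PATH": cni_path,     # assumed plugin directory
    }
    stdin = json.dumps(config).encode()   # network config is sent as JSON on stdin
    return env, stdin


if __name__ == "__main__":
    conf = macvlan_network_config("mynet", "eth0", "10.1.2.0/24")
    # Adding and then removing the same (hypothetical) container.
    for cmd in ("ADD", "DEL"):
        env, stdin = build_invocation(cmd, "abc123", "/var/run/netns/abc123",
                                      "eth0", conf)
        print(env["CNI_COMMAND"], stdin.decode())
```

Targeting the ipvlan plugin instead would mean setting `type` to `ipvlan` and using an ipvlan mode such as `l2`, since macvlan's `bridge` mode does not apply to ipvlan. Actually running a plugin would involve executing the binary from `CNI_PATH` with this environment and stdin (for example via `subprocess.run`), which typically requires root privileges and an existing network namespace.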