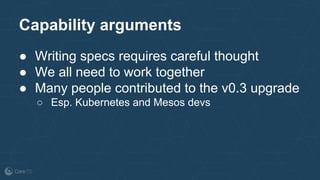

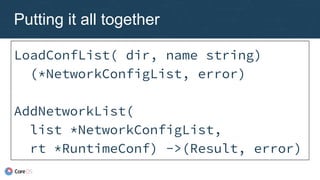

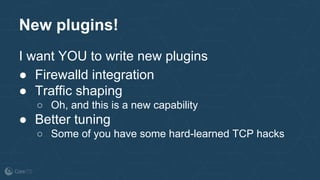

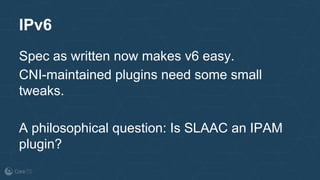

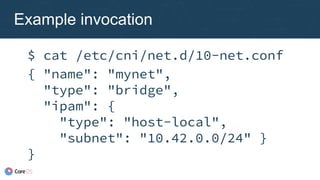

The document outlines the evolution and implementation of the Container Network Interface (CNI), a standard API for connecting containers to networks across various environments. It describes the collaborative nature of the CNI project, including its history of adoption, the development of plugins, and architectural enhancements made in version 0.3. The document also emphasizes community involvement and the ongoing need for new plugins and contributions to ensure the project's growth and relevance.

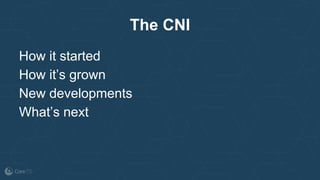

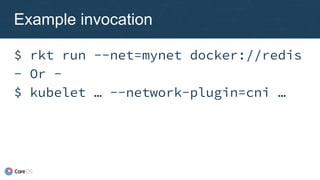

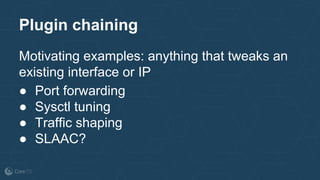

![Plugin chaining](https://image.slidesharecdn.com/caseycallendrello-theevolutionofthecontainernetworkinterface-190227092045/85/OSDC-2017-The-evolution-of-the-Container-Network-Interface-by-Casey-Callendrello-39-320.jpg)

Plugin chaining introduces a new configuration format:

- It is just a list of configuration blocks.
- The runtime chains the previous plugin's result into the next plugin.

```json
{
  "name": "dbnet",
  "plugins": [
    {
      "type": "bridge",
      "ipam": {
        "type": "host-local",
        "subnet": "10.1.0.0/16"
      }
    },
    {
      "type": "tuning",
      "sysctl": {
        "net.core.somaxconn": "500"
      }
    }
  ]
}
```
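To make the chaining step concrete, here is a minimal Python sketch of what a runtime does with the list above, under simplifying assumptions: the plugins are hypothetical in-process functions (`fake_bridge`, `fake_tuning`) rather than real binaries exec'd with their configuration on stdin, and `run_chain` is a made-up name. Each block is copied, given the list's `name`, and, from the second plugin on, handed the previous plugin's result as `prevResult`.

```python
import json

# Network configuration list from the "Plugin chaining" slide (values as shown there).
CONFLIST = """
{ "name": "dbnet",
  "plugins": [
    { "type": "bridge",
      "ipam": { "type": "host-local", "subnet": "10.1.0.0/16" } },
    { "type": "tuning",
      "sysctl": { "net.core.somaxconn": "500" } }
  ]
}
"""

seen = []  # per-plugin configs each fake plugin received, kept for inspection


def fake_bridge(conf):
    """Hypothetical stand-in: pretends to create eth0 and reports a made-up address."""
    seen.append(conf)
    return {"interfaces": [{"name": "eth0"}], "ips": [{"address": "10.1.0.2/16"}]}


def fake_tuning(conf):
    """Hypothetical stand-in: pretends to apply sysctls, then passes the result through."""
    seen.append(conf)
    return conf["prevResult"]


def run_chain(conflist_json, plugins):
    """Run each plugin block in order, feeding each one the previous result."""
    net = json.loads(conflist_json)
    prev_result = None
    for block in net["plugins"]:
        conf = dict(block)                    # copy so the parsed list is untouched
        conf["name"] = net["name"]            # every block inherits the network name
        if prev_result is not None:
            conf["prevResult"] = prev_result  # the chaining step
        prev_result = plugins[conf["type"]](conf)
    return prev_result


result = run_chain(CONFLIST, {"bridge": fake_bridge, "tuning": fake_tuning})
print(result)
```

The real runtime library also injects other fields, such as `cniVersion`, into each per-plugin configuration; the sketch leaves that out because the slide's list has no version field.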

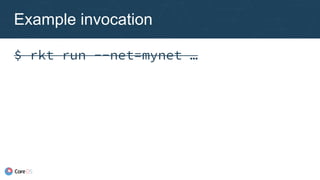

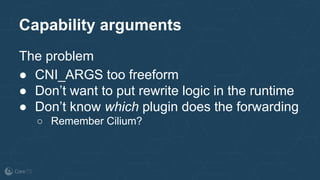

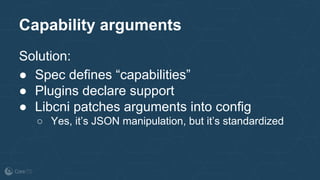

![Capability arguments](https://image.slidesharecdn.com/caseycallendrello-theevolutionofthecontainernetworkinterface-190227092045/85/OSDC-2017-The-evolution-of-the-Container-Network-Interface-by-Casey-Callendrello-46-320.jpg)

Capability arguments: a plugin's configuration declares which capabilities it supports.

```json
{
  "type": "portmap-iptables",
  "capabilities": {"portMappings": true}
}
```

When the runtime invokes the plugin, the configuration it passes becomes:

```json
{
  "type": "portmap-iptables",
  "runtimeConfig": {
    "portMappings": [
      {"hostPort": 80, "containerPort": 8080}
    ]
  }
}
```
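A minimal sketch of the runtime side of this rewrite, assuming the runtime holds its data in a dict keyed by capability name. `inject_runtime_config`, `RUNTIME_ARGS` and the `exampleCapability` entry are hypothetical; the point is that only data for capabilities the plugin declared as `true` ends up in `runtimeConfig`.

```python
import json

# Plugin configuration from the "Capability arguments" slide.
PLUGIN_CONF = {"type": "portmap-iptables", "capabilities": {"portMappings": True}}

# Hypothetical runtime-side data keyed by capability name. "exampleCapability"
# is made up to show that data for undeclared capabilities is not passed on.
RUNTIME_ARGS = {
    "portMappings": [{"hostPort": 80, "containerPort": 8080}],
    "exampleCapability": {"enabled": True},
}


def inject_runtime_config(plugin_conf, runtime_args):
    """Return a copy of plugin_conf whose runtimeConfig holds only the runtime
    data for capabilities the plugin declared as true (hypothetical helper)."""
    declared = plugin_conf.get("capabilities", {})
    runtime_config = {
        name: value
        for name, value in runtime_args.items()
        if declared.get(name) is True
    }
    # Mirror the slide: the rewritten config carries runtimeConfig, not capabilities.
    conf = {key: value for key, value in plugin_conf.items() if key != "capabilities"}
    if runtime_config:
        conf["runtimeConfig"] = runtime_config
    return conf


conf = inject_runtime_config(PLUGIN_CONF, RUNTIME_ARGS)
print(json.dumps(conf))
# {"type": "portmap-iptables", "runtimeConfig": {"portMappings": [{"hostPort": 80, "containerPort": 8080}]}}
```

The sketch mirrors the slide by dropping the `capabilities` key from the output; a real runtime may leave it in place, since the plugin only needs to read `runtimeConfig`.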