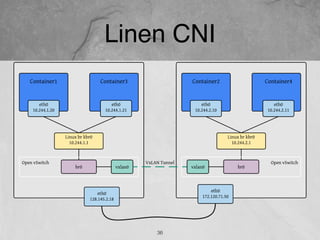

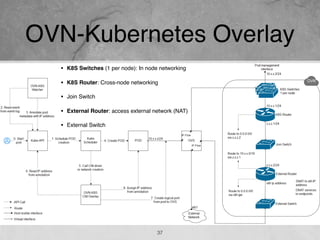

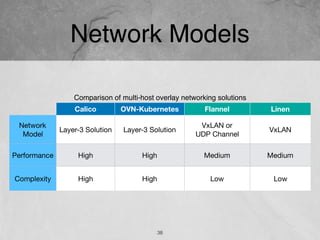

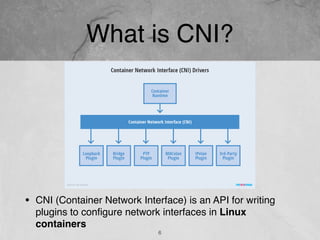

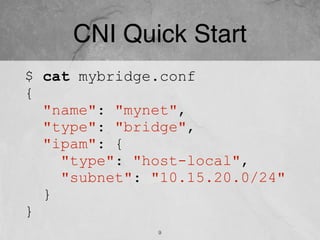

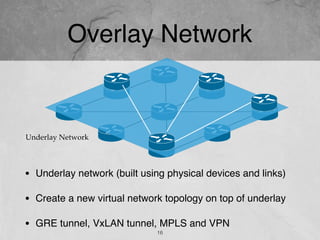

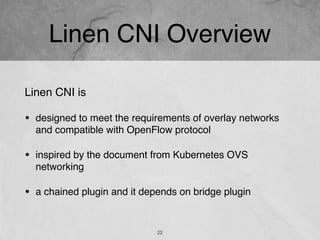

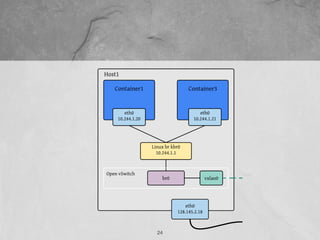

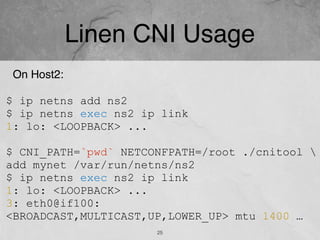

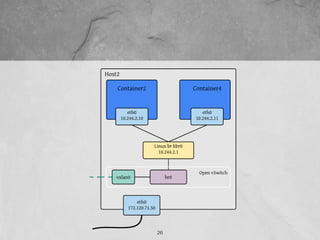

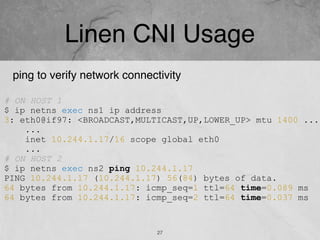

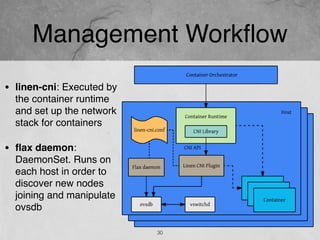

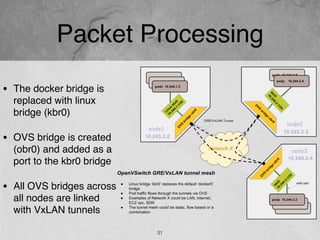

This document discusses CNI (Container Network Interface) and the Linen CNI plugin. It begins with an introduction to CNI and how it lets plugins configure network interfaces in containers. It then describes Linen CNI, a plugin designed for overlay networks and built on Open vSwitch, and explains how it works with Kubernetes and how packets are processed between nodes. Finally, it compares Linen CNI with other overlay networking solutions such as OVN-Kubernetes.

![How to Build?](https://image.slidesharecdn.com/20170921networkpluginsforkubernetesjohnlin-170922060956/85/Network-plugins-for-kubernetes-8-320.jpg)

- `parseConfig`: parses the network configuration from stdin
- `cmdAdd` is called for ADD requests (when a pod is created)
- `cmdDel` is called for DEL (delete) requests (when a pod is deleted)
- Add your code to the `cmdAdd` and `cmdDel` functions.
- A simple CNI code sample is at https://github.com/containernetworking/plugins/tree/master/plugins/sample

Declarations shown on the slide:

- `type PluginConf`
- `func parseConfig(stdin []byte) (*PluginConf, error)`
- `func cmdAdd(args *skel.CmdArgs) error`
- `func cmdDel(args *skel.CmdArgs) error`
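The pieces named on the slide can be sketched with the standard library alone. This is a minimal illustration, not the sample plugin's code: `CmdArgs` here is a local stand-in for `skel.CmdArgs` (only a few fields are reproduced), `dispatch` is a hypothetical helper, and the configuration in `main` is made up for the demo. A real plugin would hand `cmdAdd` and `cmdDel` to the `skel` package's `PluginMain` instead, and would print a result on stdout after a successful ADD.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
)

// CmdArgs is a local stand-in for skel.CmdArgs (assumption: only the
// fields this sketch needs are reproduced).
type CmdArgs struct {
	ContainerID string
	Netns       string
	IfName      string
	StdinData   []byte
}

// PluginConf holds the configuration fields this sketch reads.
type PluginConf struct {
	CNIVersion string `json:"cniVersion"`
	Name       string `json:"name"`
	Type       string `json:"type"`
}

// parseConfig parses the network configuration passed on stdin.
func parseConfig(stdin []byte) (*PluginConf, error) {
	conf := PluginConf{}
	if err := json.Unmarshal(stdin, &conf); err != nil {
		return nil, fmt.Errorf("failed to parse network configuration: %v", err)
	}
	return &conf, nil
}

// cmdAdd handles ADD requests (a pod is created).
func cmdAdd(args *CmdArgs) error {
	conf, err := parseConfig(args.StdinData)
	if err != nil {
		return err
	}
	// Plugin-specific setup would go here (create interfaces, assign IPs).
	fmt.Fprintf(os.Stderr, "ADD: network %q, interface %s, netns %s\n",
		conf.Name, args.IfName, args.Netns)
	return nil
}

// cmdDel handles DEL requests (a pod is deleted).
func cmdDel(args *CmdArgs) error {
	conf, err := parseConfig(args.StdinData)
	if err != nil {
		return err
	}
	// Plugin-specific teardown would go here.
	fmt.Fprintf(os.Stderr, "DEL: network %q, interface %s\n", conf.Name, args.IfName)
	return nil
}

// dispatch is a hypothetical helper that routes a CNI command name to
// its handler; in a real plugin the skel package does this routing.
func dispatch(command string, args *CmdArgs) error {
	switch command {
	case "ADD":
		return cmdAdd(args)
	case "DEL":
		return cmdDel(args)
	default:
		return fmt.Errorf("unsupported CNI command %q", command)
	}
}

func main() {
	// Hard-coded demo input; a real plugin receives these values from
	// the container runtime through environment variables and stdin.
	args := &CmdArgs{
		ContainerID: "demo-container",
		Netns:       "/var/run/netns/demo",
		IfName:      "eth0",
		StdinData:   []byte(`{"cniVersion":"0.3.1","name":"mynet","type":"sample"}`),
	}
	for _, command := range []string{"ADD", "DEL"} {
		if err := dispatch(command, args); err != nil {
			fmt.Fprintln(os.Stderr, err)
			os.Exit(1)
		}
	}
}
```

Both handlers parse the configuration first, so a malformed configuration fails the request before any network state is touched.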

![Network configuration reference](https://image.slidesharecdn.com/20170921networkpluginsforkubernetesjohnlin-170922060956/85/Network-plugins-for-kubernetes-34-320.jpg)

- `ovsBridge`: name of the OVS bridge to use or create
- `vtepIPs`: list of the VXLAN tunnel endpoint IP addresses
- `controller`: sets the SDN controller, given as an IP address and port number

Example configuration from the slide:

    {
      "name": "mynet",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          //… bridge configurations
        },
        {
          "type": "linen",
          "runtimeConfig": {
            "ovs": {
              "ovsBridge": "br0",
              "vtepIPs": [
                "172.120.71.50"
              ],
              "controller": "192.168.2.100:6653"
            }
          }
        }
      ]
    }
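To make the three fields concrete, here is a minimal sketch of how a plugin might decode and sanity-check the `linen` entry from the configuration. The type names, `parseLinenConf`, and the validation rules (VTEP entries must be IP addresses, a non-empty `controller` must be `host:port`) are assumptions made for illustration; they are not taken from the Linen source. Only the `linen` plugin entry is parsed, since the bridge entry is elided on the slide.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net"
)

// OVSConf mirrors the "ovs" object in the slide's configuration.
type OVSConf struct {
	OVSBridge  string   `json:"ovsBridge"`  // OVS bridge to use or create
	VtepIPs    []string `json:"vtepIPs"`    // VXLAN tunnel endpoint IPs
	Controller string   `json:"controller"` // SDN controller as host:port
}

// LinenConf is a hypothetical type covering only the fields shown on
// the slide for the "linen" plugin entry.
type LinenConf struct {
	Type          string `json:"type"`
	RuntimeConfig struct {
		OVS OVSConf `json:"ovs"`
	} `json:"runtimeConfig"`
}

// sampleConf is the "linen" entry from the slide's plugin list.
const sampleConf = `{
  "type": "linen",
  "runtimeConfig": {
    "ovs": {
      "ovsBridge": "br0",
      "vtepIPs": ["172.120.71.50"],
      "controller": "192.168.2.100:6653"
    }
  }
}`

// parseLinenConf decodes the entry and applies this sketch's checks
// (assumed rules, not Linen's documented behavior).
func parseLinenConf(data []byte) (*OVSConf, error) {
	var conf LinenConf
	if err := json.Unmarshal(data, &conf); err != nil {
		return nil, fmt.Errorf("failed to parse linen configuration: %v", err)
	}
	ovs := conf.RuntimeConfig.OVS
	for _, ip := range ovs.VtepIPs {
		if net.ParseIP(ip) == nil {
			return nil, fmt.Errorf("invalid VTEP IP address %q", ip)
		}
	}
	if ovs.Controller != "" {
		if _, _, err := net.SplitHostPort(ovs.Controller); err != nil {
			return nil, fmt.Errorf("invalid controller address %q: %v", ovs.Controller, err)
		}
	}
	return &ovs, nil
}

func main() {
	ovs, err := parseLinenConf([]byte(sampleConf))
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("bridge=%s vteps=%v controller=%s\n",
		ovs.OVSBridge, ovs.VtepIPs, ovs.Controller)
}
```

Checking addresses at parse time means a typo in `vtepIPs` or `controller` is reported when the pod is added rather than surfacing later as a tunnel that never comes up.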