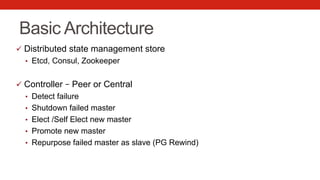

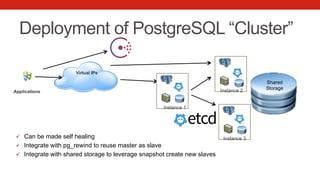

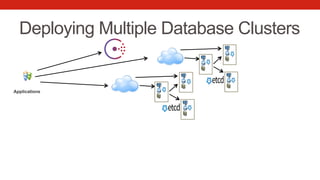

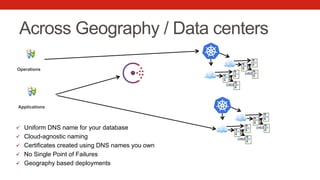

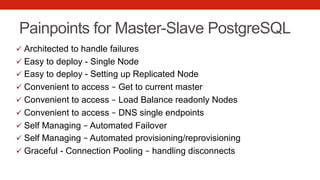

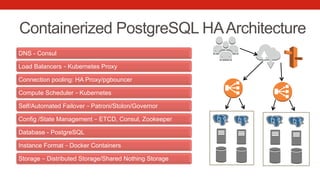

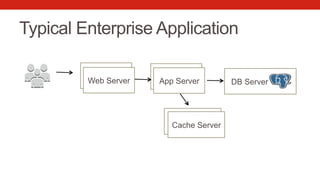

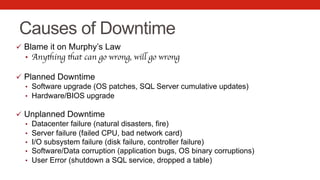

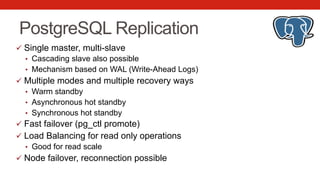

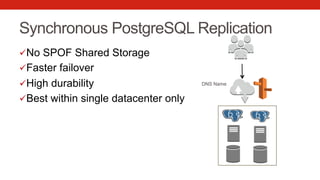

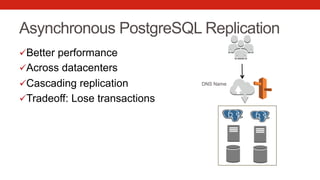

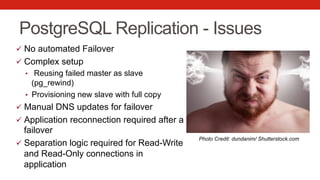

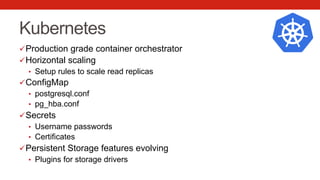

This document discusses high availability for PostgreSQL in a containerized environment. It outlines typical enterprise requirements for high availability, including recovery time objectives and recovery point objectives. It describes shared-storage-based high availability and weighs the advantages and disadvantages of PostgreSQL replication. It also covers the use of Linux containers, together with orchestration and service-discovery tools such as Kubernetes and Consul, to manage containerized PostgreSQL clusters. The document advocates combining PostgreSQL replication with services and self-healing tools to provide highly available, scalable PostgreSQL deployments in modern container environments.

![Consul

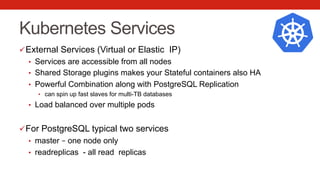

• Service Discovery

• Failure Detection

• Multi Data Center

• DNS Query Interface

{

"service": {

"name": ”mypostgresql",

"tags": ["master"],

"address": "127.0.0.1",

"port": 5432,

"enableTagOverride": false,

}

}

nslookup master.mypostgresql.service.domain

nslookup mypostgresql.service.domain](https://image.slidesharecdn.com/pgconfus2017harep-170330173014/85/PostgreSQL-High-Availability-in-a-Containerized-World-31-320.jpg)
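The two `nslookup` queries on the Consul slide follow Consul's DNS naming pattern, `[tag.]<service>.service.<domain>`. The sketch below illustrates how those names relate to the service definition. It is a minimal illustration, not part of the original deck: the helper `consul_dns_names` is a hypothetical name, and the `domain` suffix is the slide's placeholder (Consul's default DNS domain is `consul`).

```python
import json

# Service definition from the slide, with the stray curly quote and the
# trailing comma removed so that it parses as valid JSON.
SERVICE_DEFINITION = """
{
  "service": {
    "name": "mypostgresql",
    "tags": ["master"],
    "address": "127.0.0.1",
    "port": 5432,
    "enableTagOverride": false
  }
}
"""


def consul_dns_names(definition, domain="domain"):
    """Return the DNS names under which the service could be looked up.

    Hypothetical helper: it only formats names following the
    [tag.]<service>.service.<domain> pattern; it does not contact Consul.
    """
    service = definition["service"]
    name = service["name"]
    # Untagged lookup: resolves to any instance of the service.
    names = [f"{name}.service.{domain}"]
    # Tagged lookups: restricted to instances carrying the tag, e.g. "master".
    for tag in service.get("tags", []):
        names.append(f"{tag}.{name}.service.{domain}")
    return names


definition = json.loads(SERVICE_DEFINITION)
dns_names = consul_dns_names(definition)
for dns_name in dns_names:
    print(dns_name)
```

In this arrangement, clients that need to write would resolve the tagged name (`master.mypostgresql.service.domain`), while the untagged name covers every registered instance of the service. Moving the `master` tag to a promoted replica during failover would redirect writers without changing client configuration.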