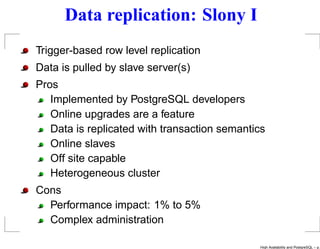

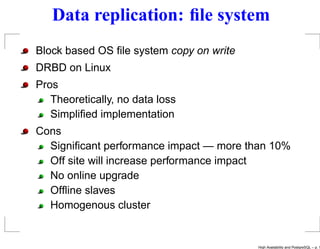

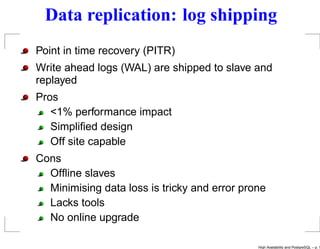

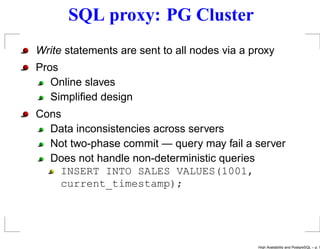

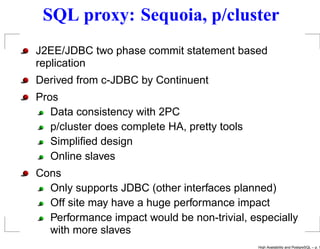

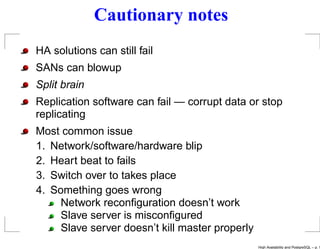

This document discusses high availability options for PostgreSQL databases. It defines high availability as an arrangement of master and slave database servers that reduces application downtime. Such solutions provide redundancy so that data and applications remain available if the master database fails. The document then reviews several technologies for replicating data between master and slave databases, including shared storage, Slony replication, log shipping, and SQL proxies. It also covers the factors to weigh when choosing a high availability solution, along with best practices for testing and ensuring reliability.