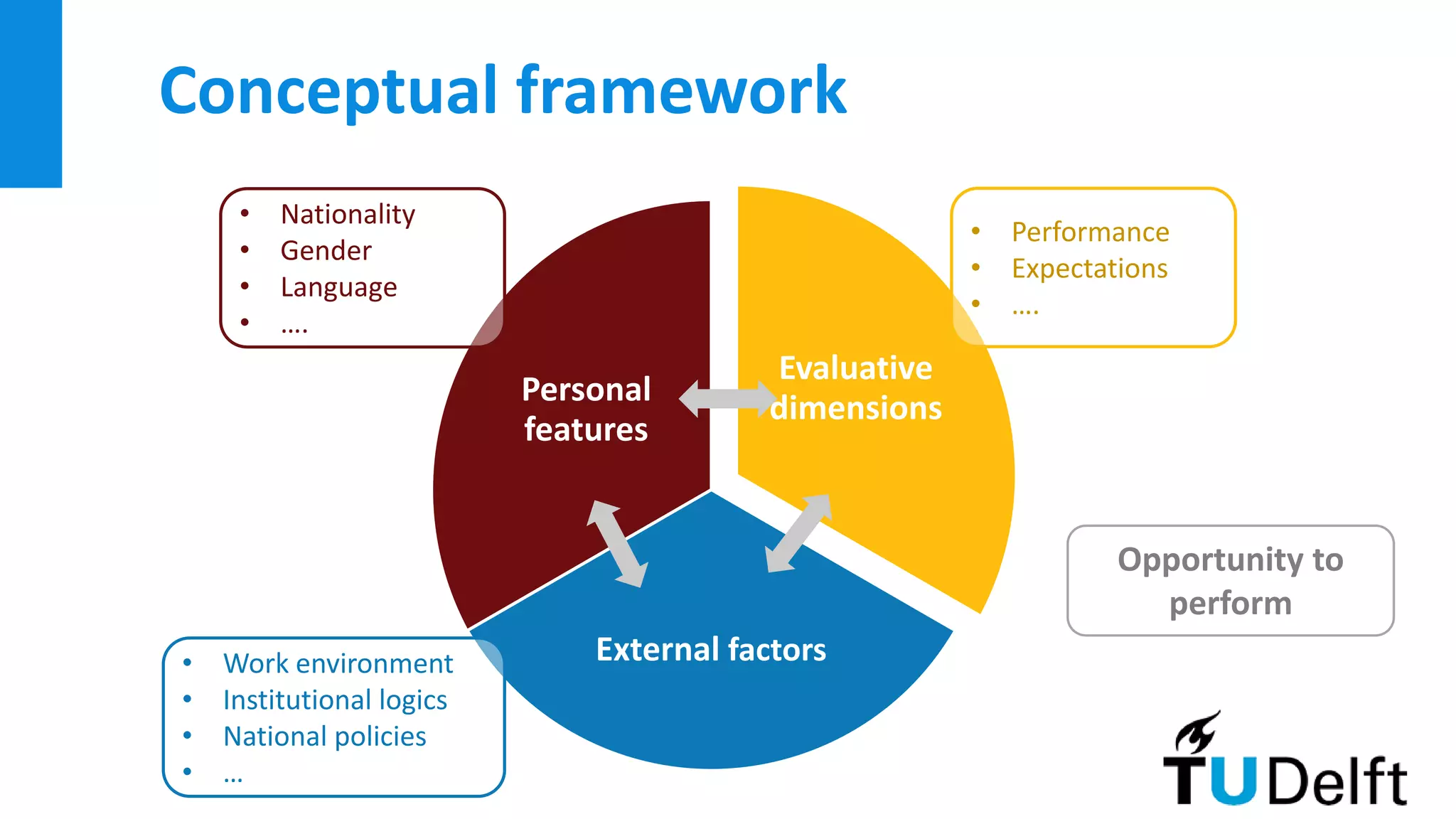

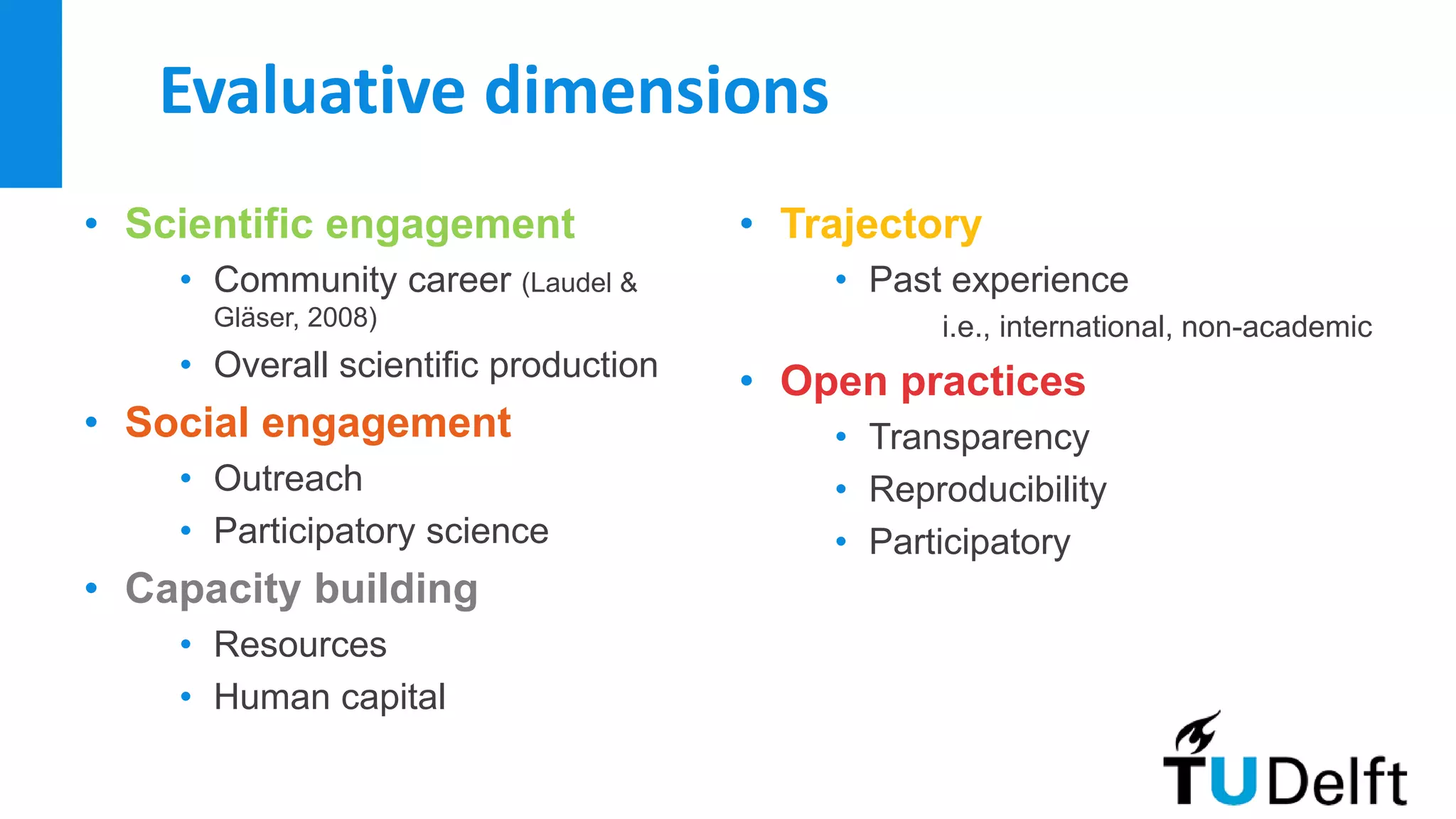

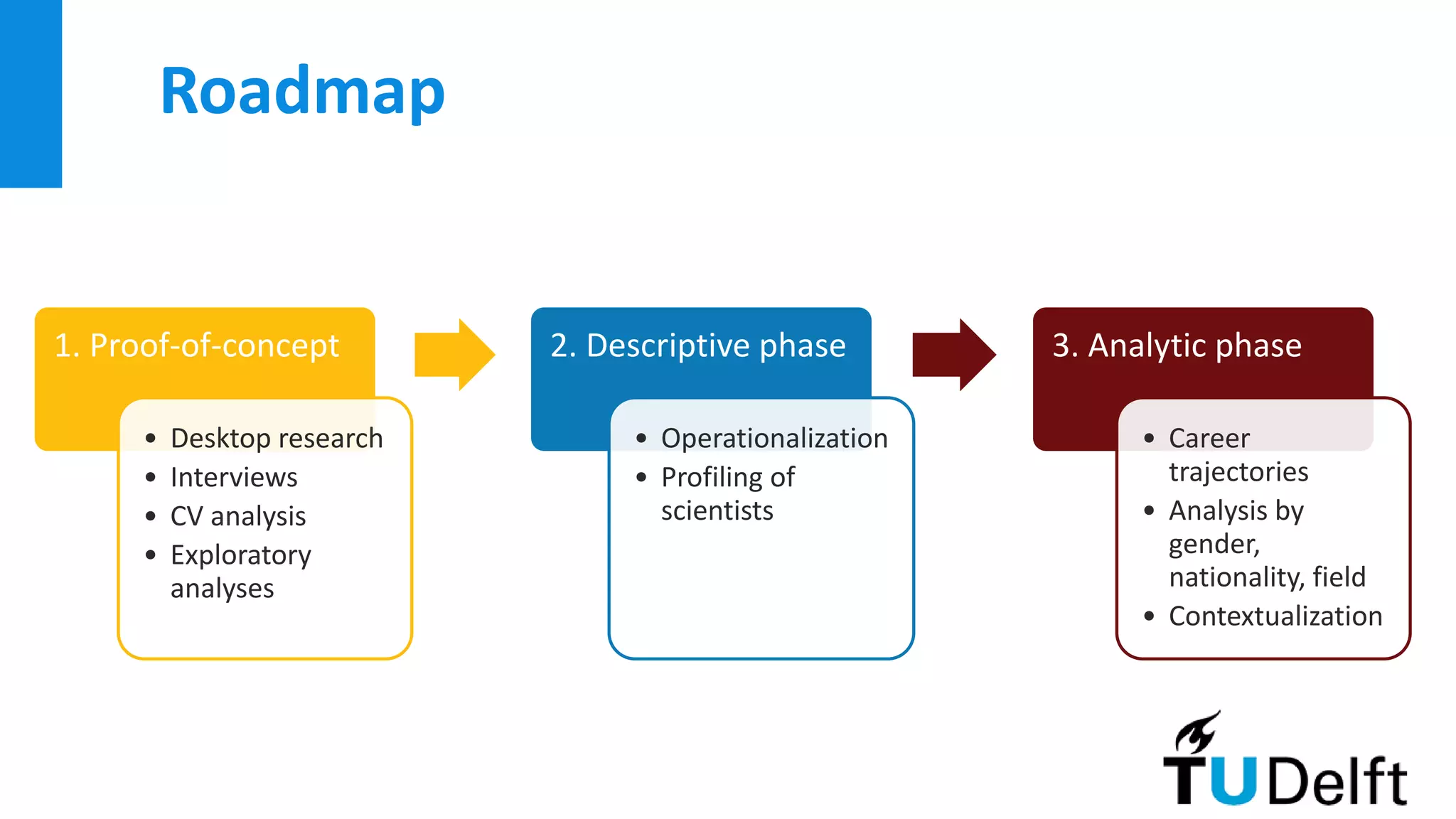

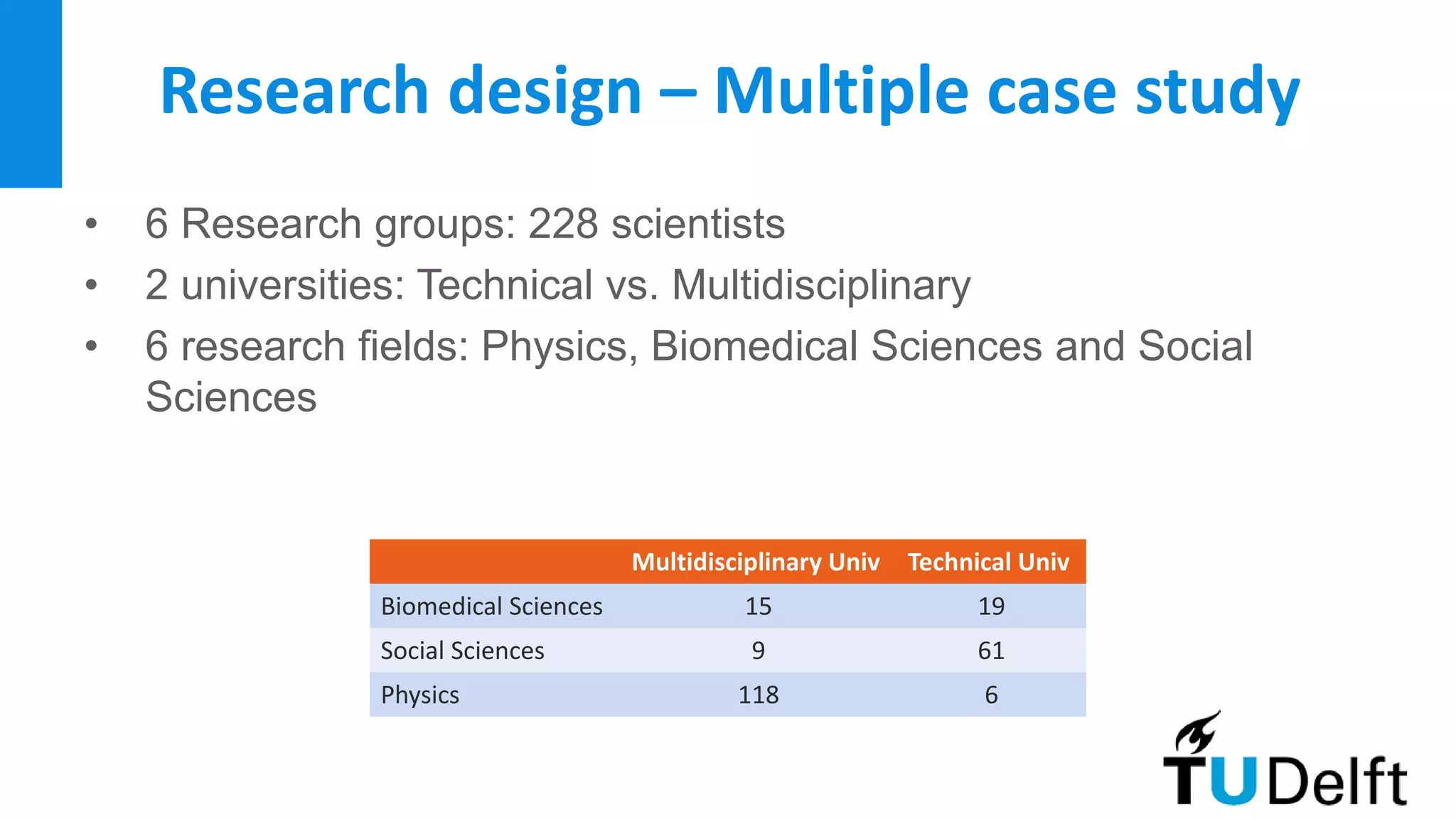

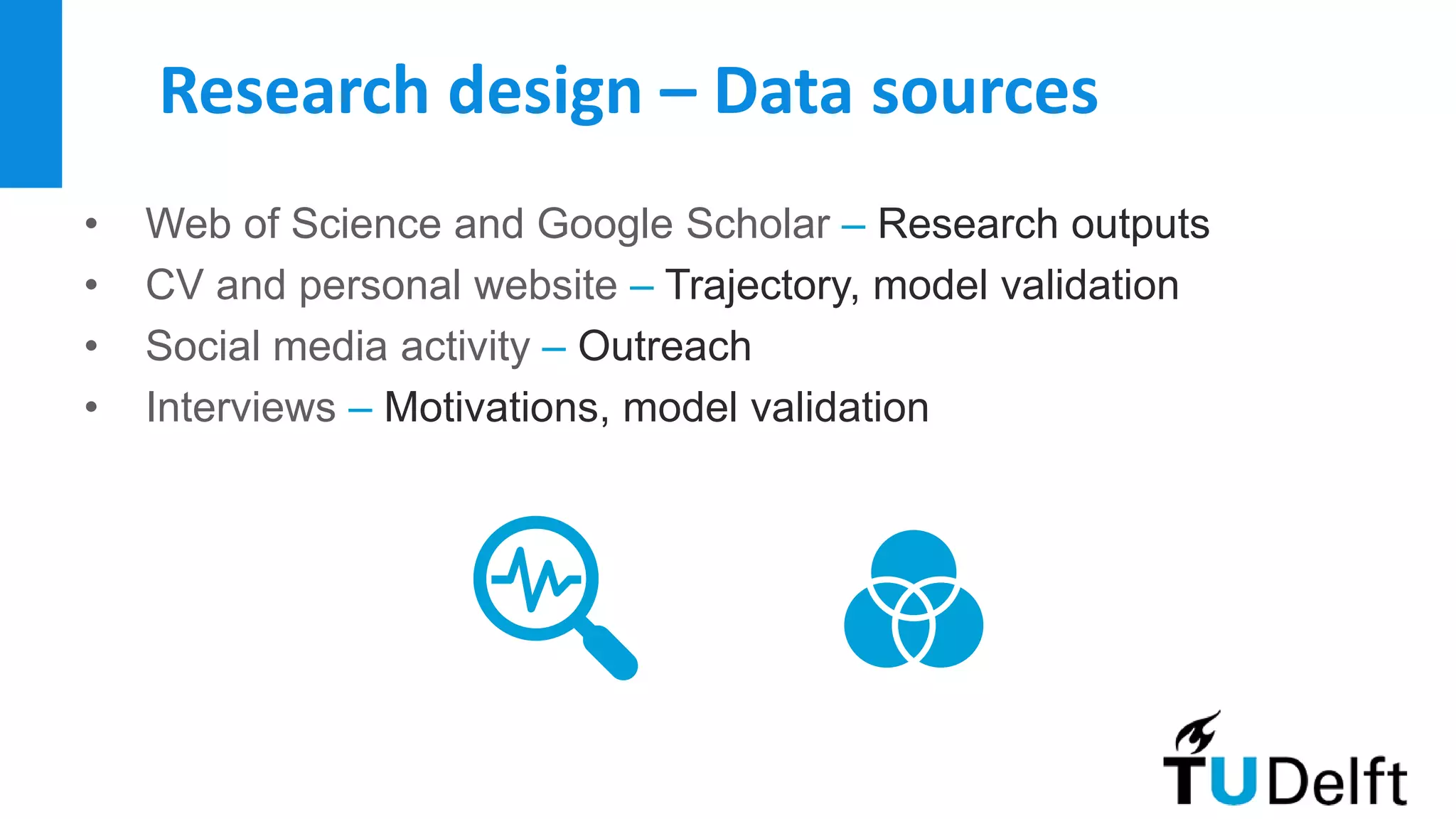

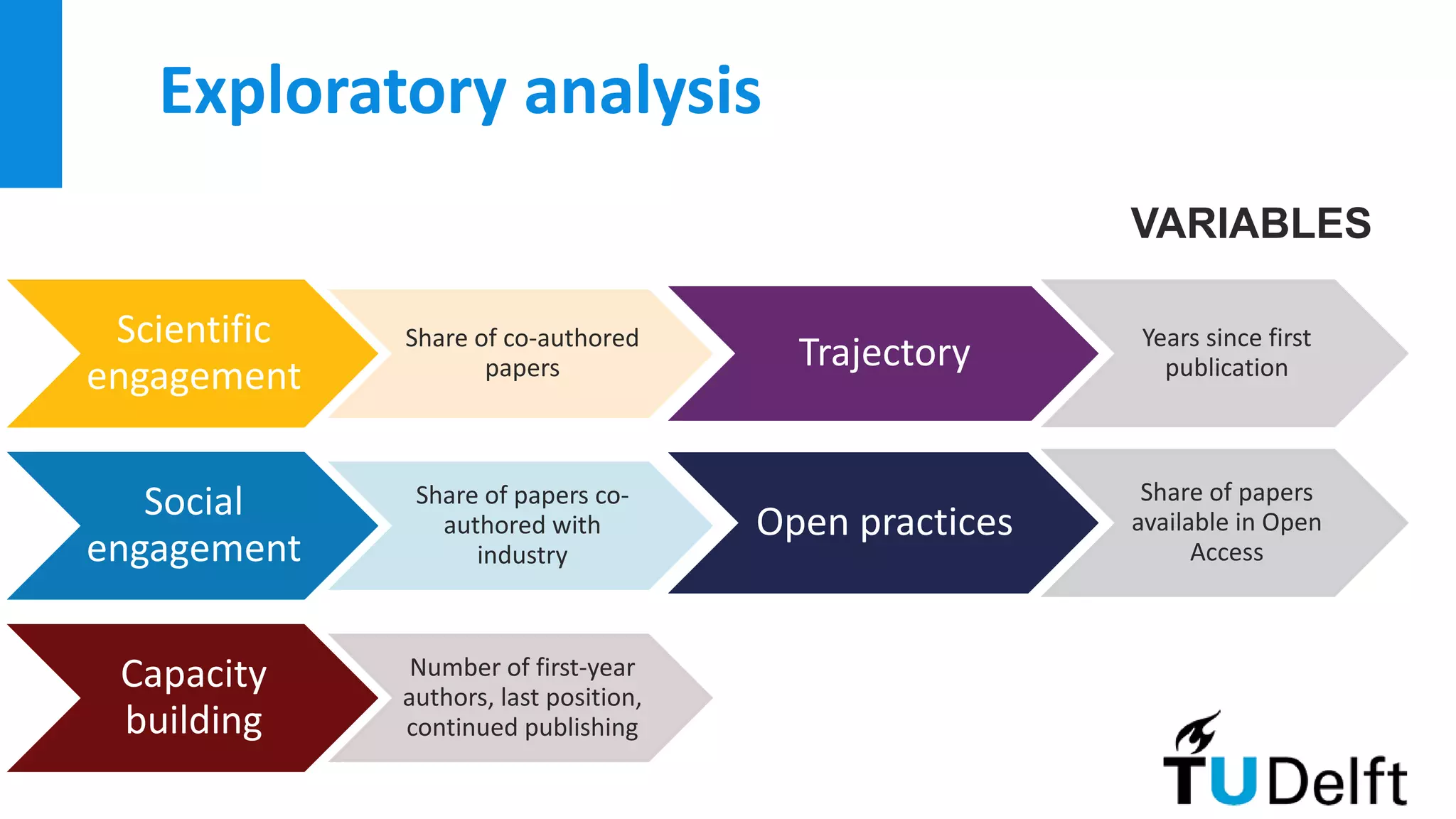

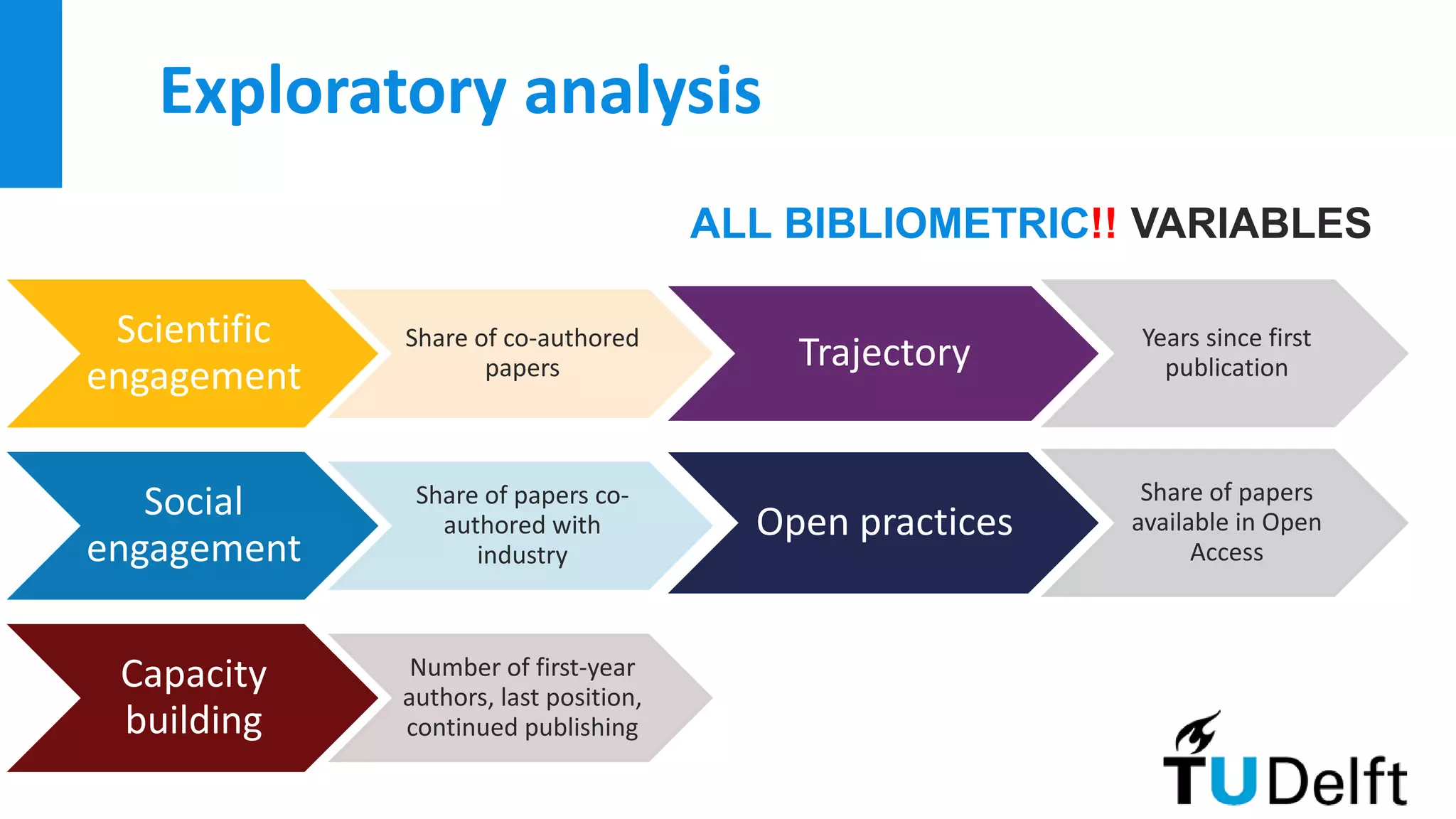

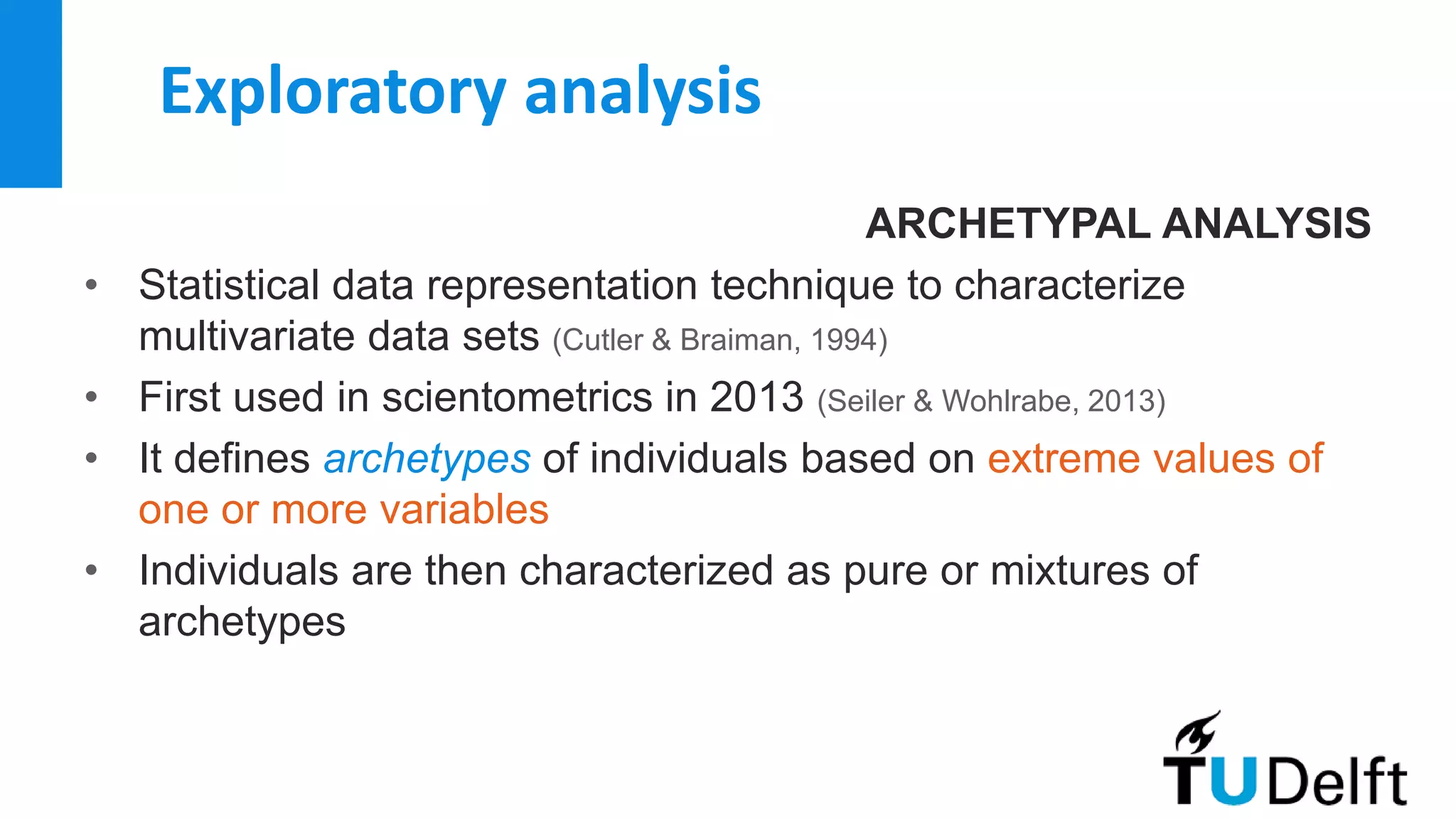

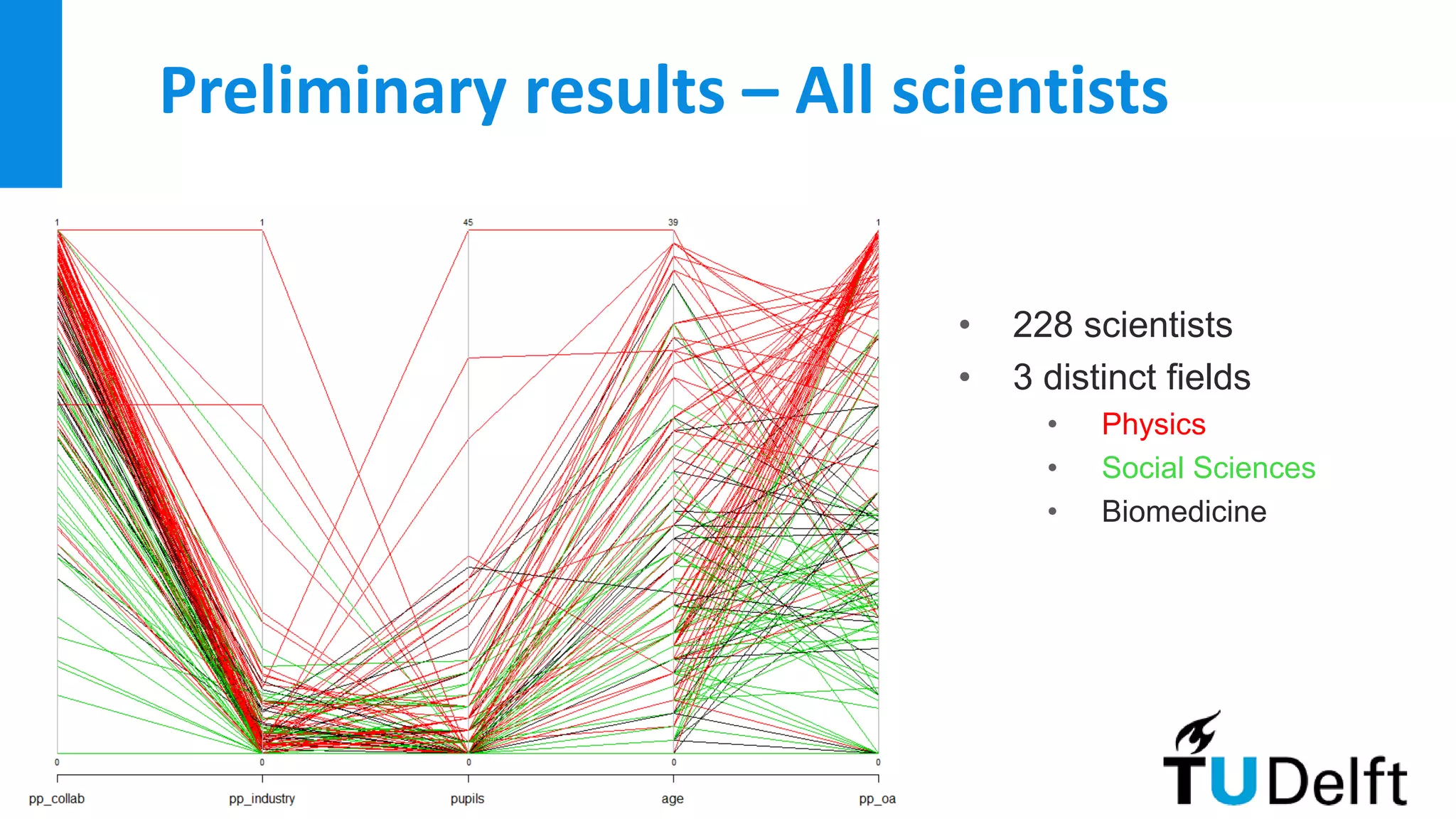

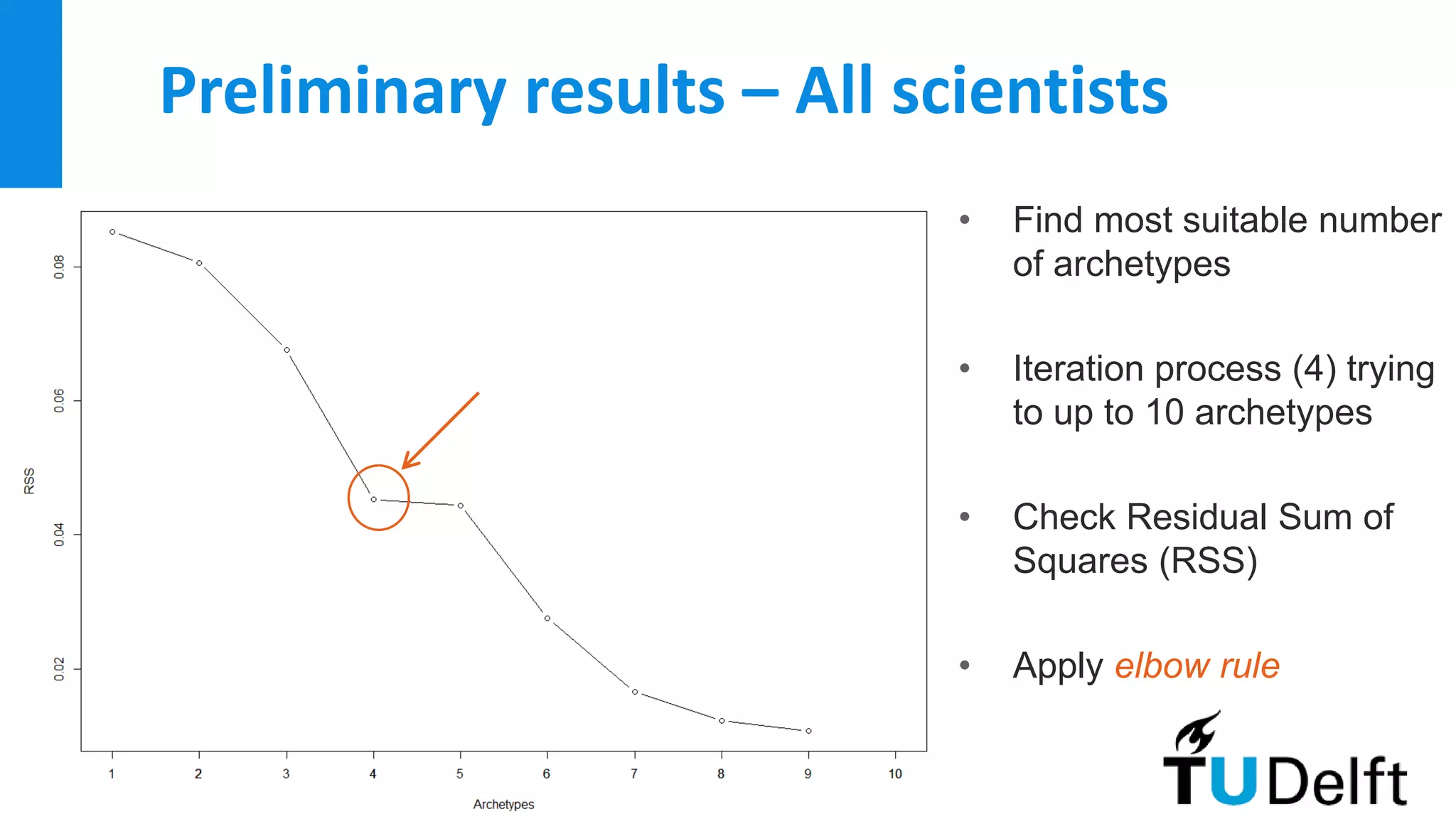

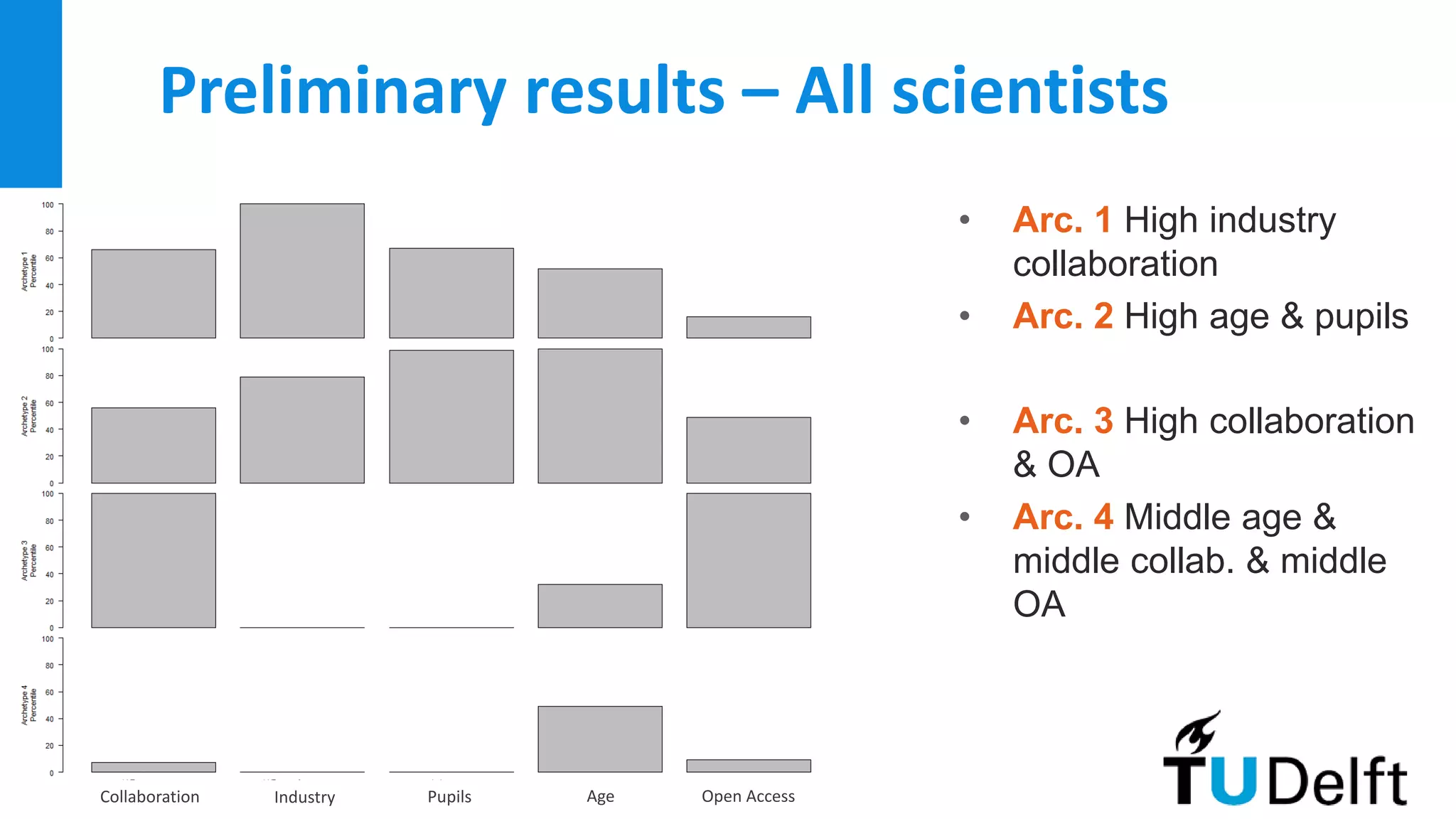

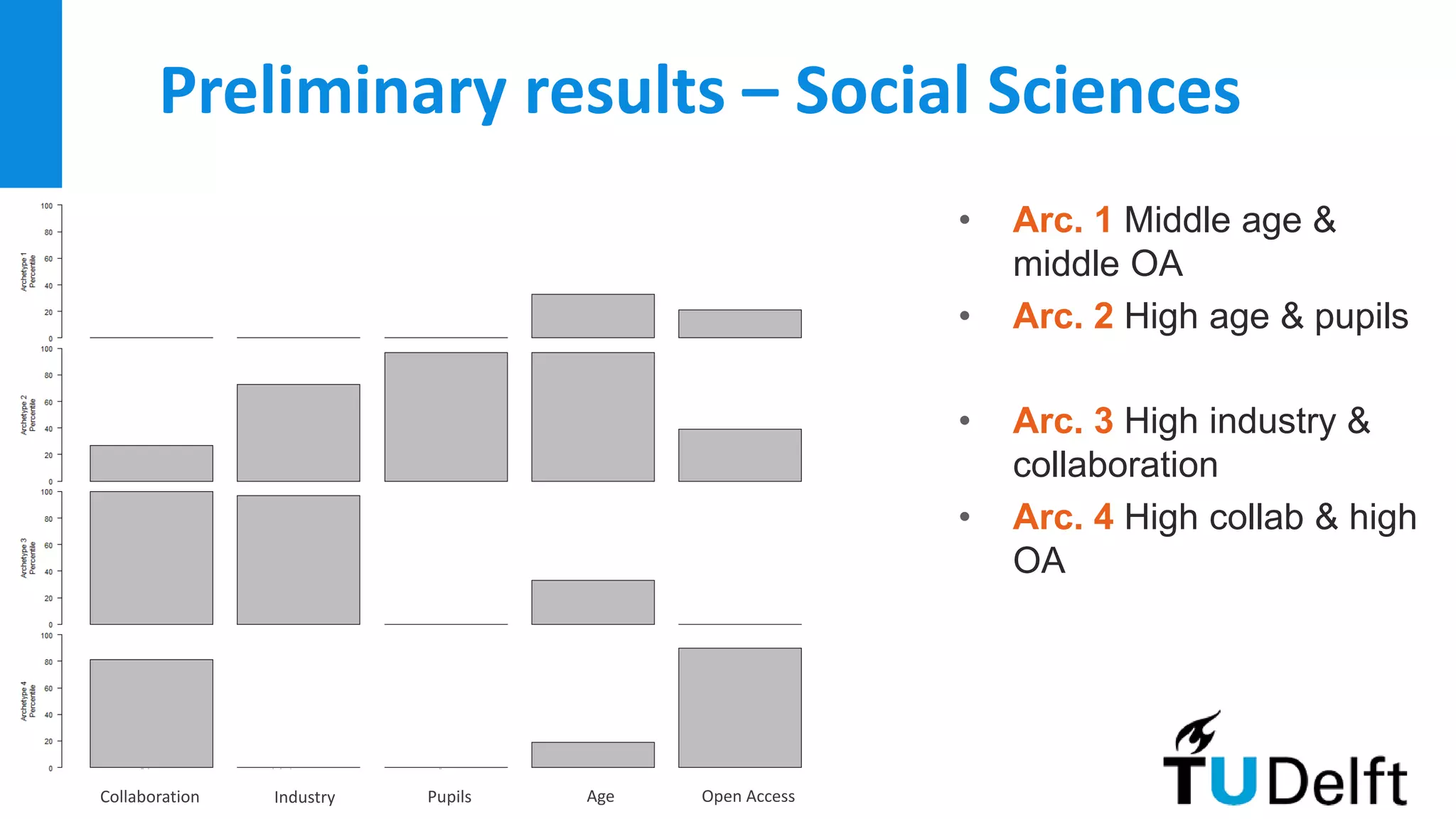

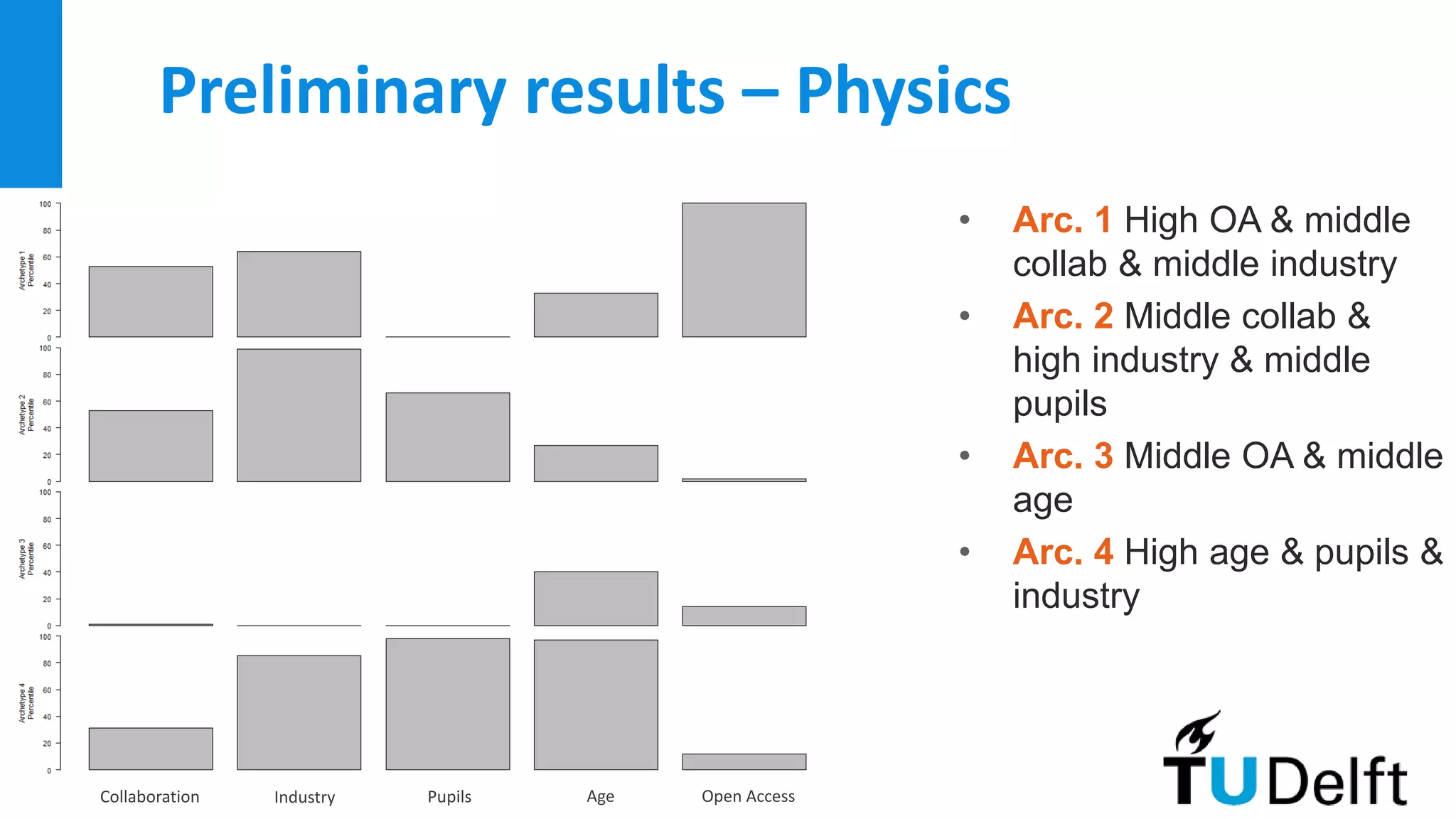

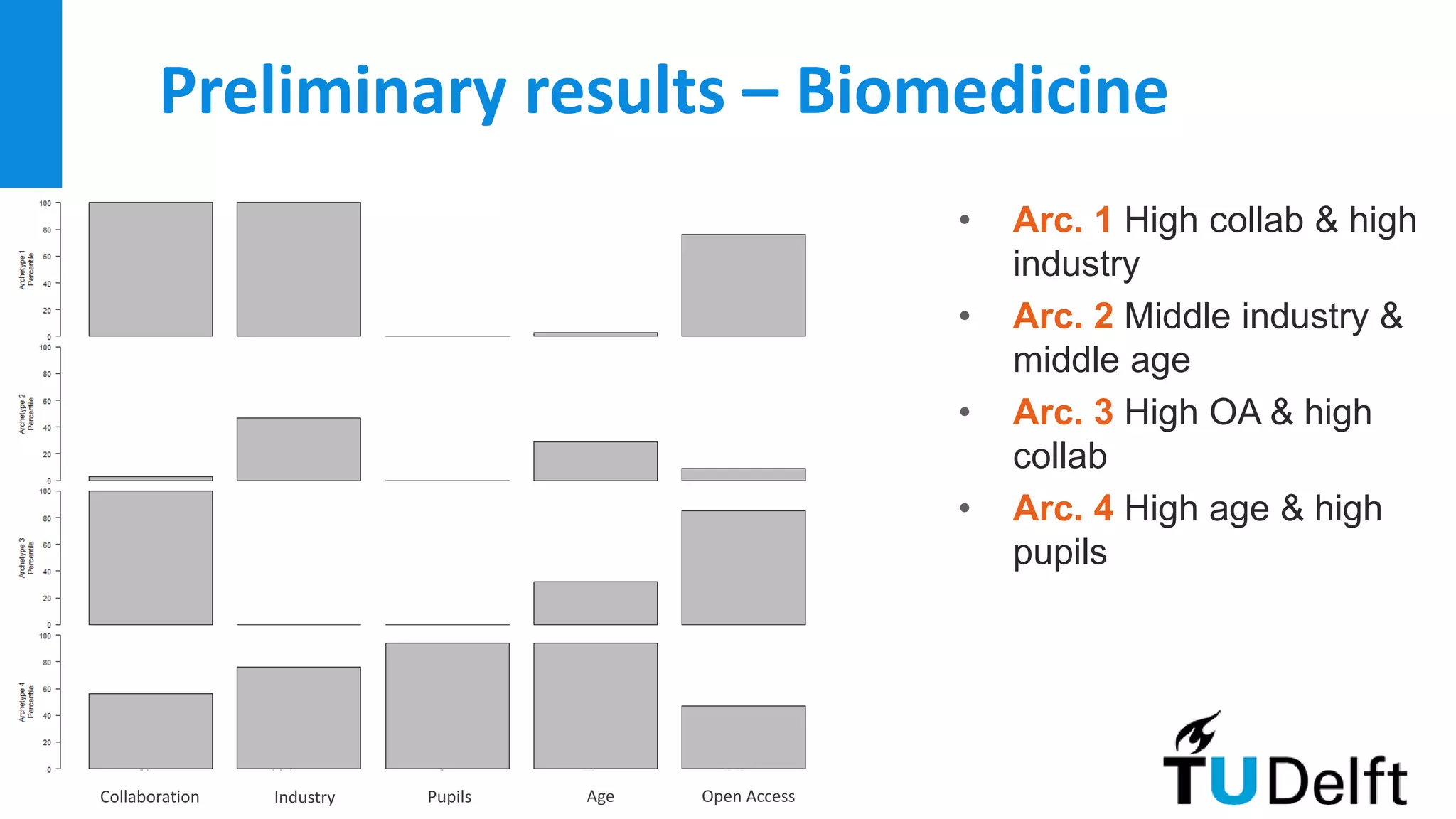

This document proposes a multidimensional model for evaluating scientists that moves beyond reliance on publications and citations. It discusses the limitations of current metrics-based evaluation systems and the threats they pose. It then presents a conceptual framework that incorporates dimensions such as scientific engagement, social impact, and open practices, and outlines a research design that uses archetypal analysis to characterize the profiles of 228 scientists across fields. Preliminary results identify four archetypes in each field, reflecting differences in collaboration, career stage, and open access. The study aims to develop a more balanced approach to research assessment that accounts for a diversity of contributions.
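
For readers unfamiliar with archetypal analysis, the following is a minimal sketch of the general technique, not the study's actual implementation. It approximates each profile as a convex mixture of a few "archetypes", which are themselves convex mixtures of observed profiles, and fits both sets of weights by alternating projected gradient steps. The function names, the five-column feature matrix, and the random data are all hypothetical; only the sample size of 228 and the choice of four archetypes echo the summary above.

```python
import numpy as np


def project_rows_to_simplex(M):
    """Euclidean projection of each row of M onto the probability simplex."""
    n, k = M.shape
    U = np.sort(M, axis=1)[:, ::-1]            # each row sorted in descending order
    css = np.cumsum(U, axis=1) - 1.0
    idx = np.arange(1, k + 1)
    rho = (U - css / idx > 0).sum(axis=1)      # size of the support of each projection
    theta = css[np.arange(n), rho - 1] / rho
    return np.maximum(M - theta[:, None], 0.0)


def archetypal_analysis(X, k, n_iter=200, seed=0):
    """Fit X ~ A @ (B @ X) with the rows of A and B constrained to the simplex.

    A (n x k): how strongly each observation expresses each archetype.
    B (k x n): how each archetype is built from the observations.
    Returns A, B, and the loss recorded before and after every iteration.
    """
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    A = rng.dirichlet(np.ones(k), size=n)
    B = rng.dirichlet(np.ones(n), size=k)
    losses = [0.5 * np.linalg.norm(X - A @ B @ X) ** 2]
    for _ in range(n_iter):
        # Update A with the archetypes Z held fixed (step = 1 / Lipschitz constant).
        Z = B @ X
        step_a = 1.0 / (np.linalg.norm(Z @ Z.T, 2) + 1e-12)
        A = project_rows_to_simplex(A - step_a * (A @ Z - X) @ Z.T)
        # Update B with the mixture weights A held fixed.
        residual = A @ B @ X - X
        lipschitz_b = np.linalg.norm(A.T @ A, 2) * np.linalg.norm(X @ X.T, 2)
        B = project_rows_to_simplex(B - (A.T @ residual @ X.T) / (lipschitz_b + 1e-12))
        losses.append(0.5 * np.linalg.norm(X - A @ B @ X) ** 2)
    return A, B, losses


# Hypothetical data: 228 profiles described by 5 made-up indicators.
X_demo = np.random.default_rng(1).random((228, 5))
A_demo, B_demo, loss_history = archetypal_analysis(X_demo, k=4, n_iter=50)
archetypes = B_demo @ X_demo                   # one row per archetype, in feature space
```

Each row of `A_demo` sums to one, so it can be read as the share of each archetype in a given profile, while `archetypes` expresses the archetypes on the original indicator scale. In practice the indicators would usually be standardized first, and a dedicated library would likely be preferable to this hand-rolled loop.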