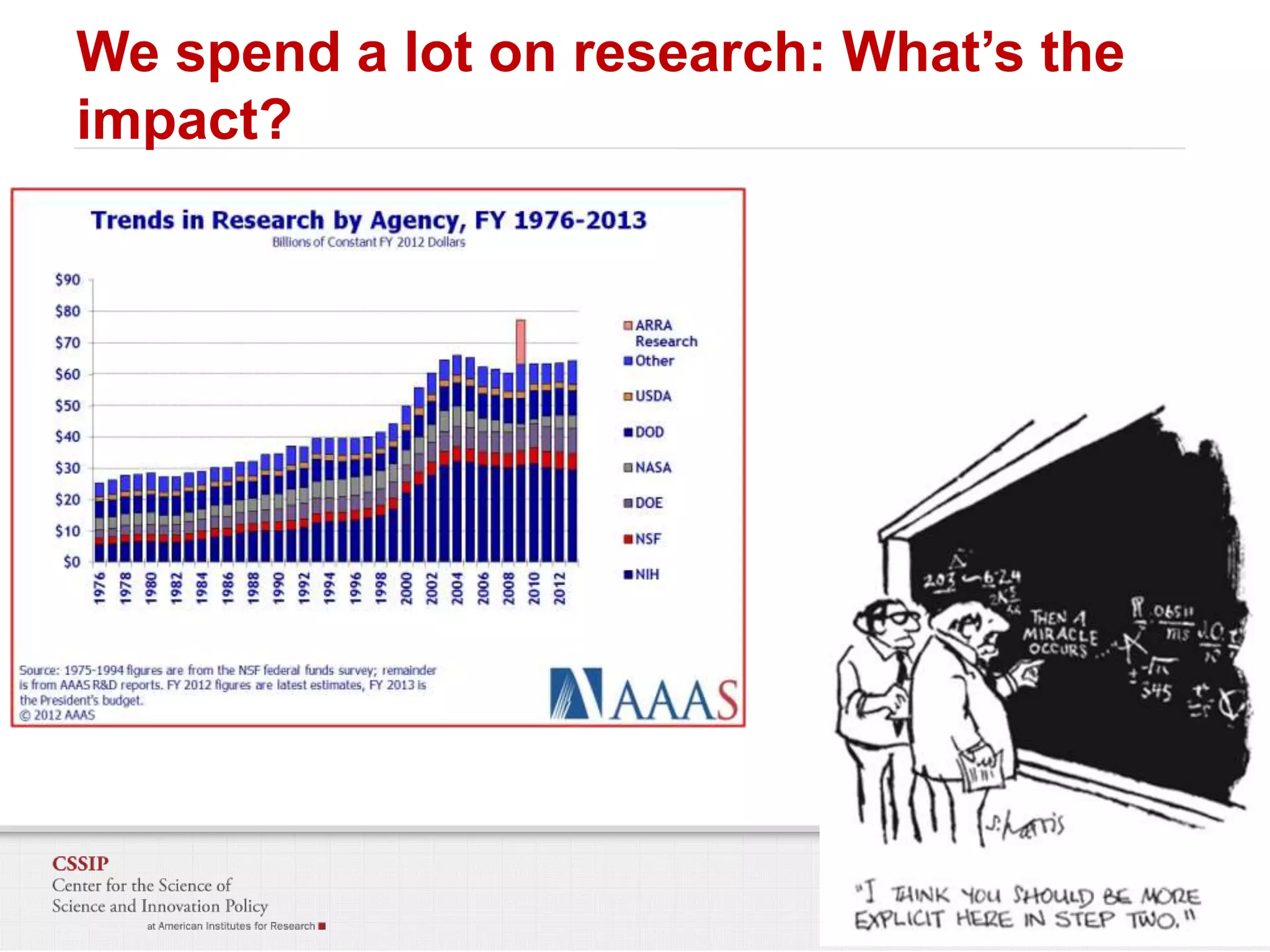

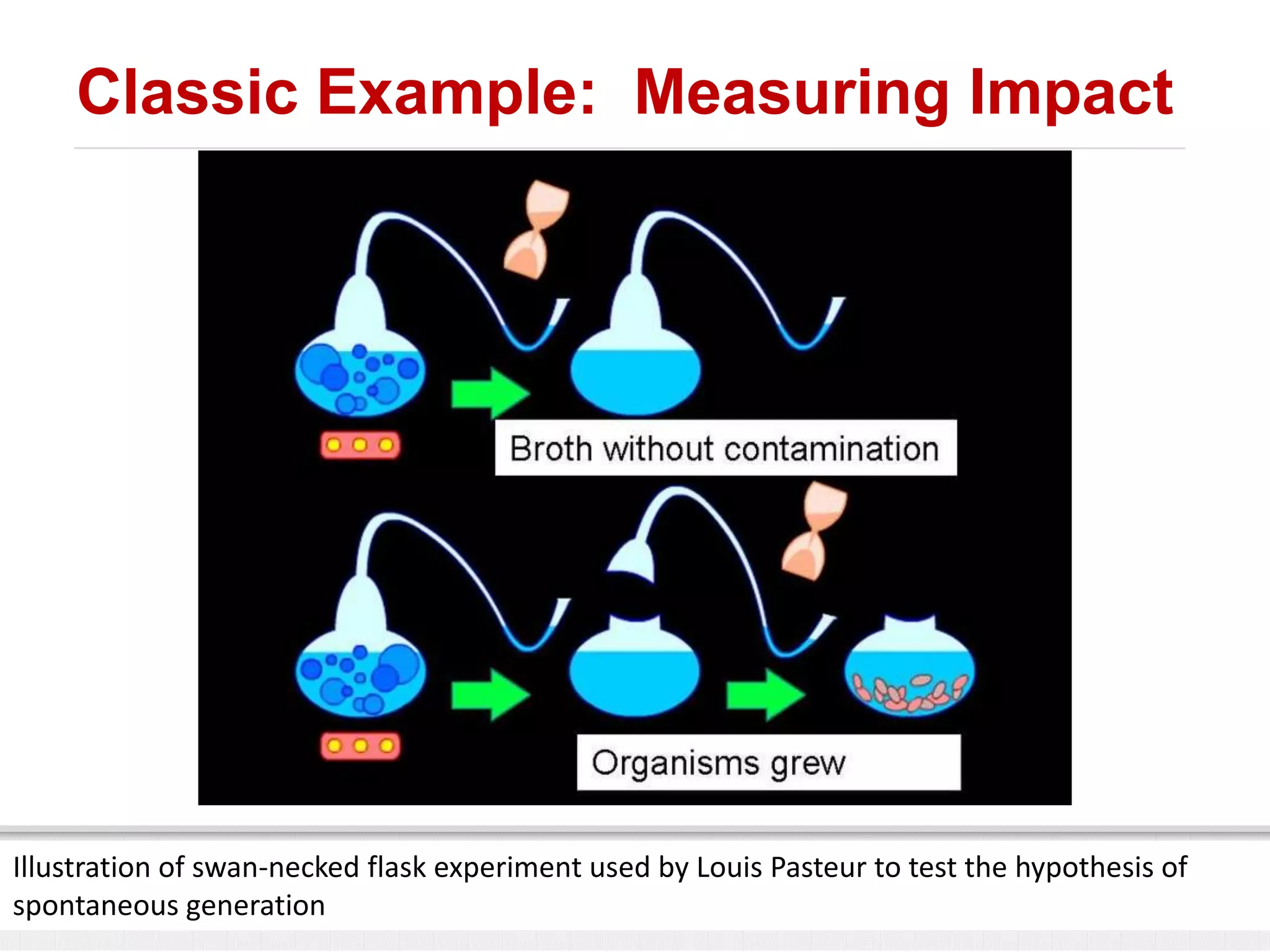

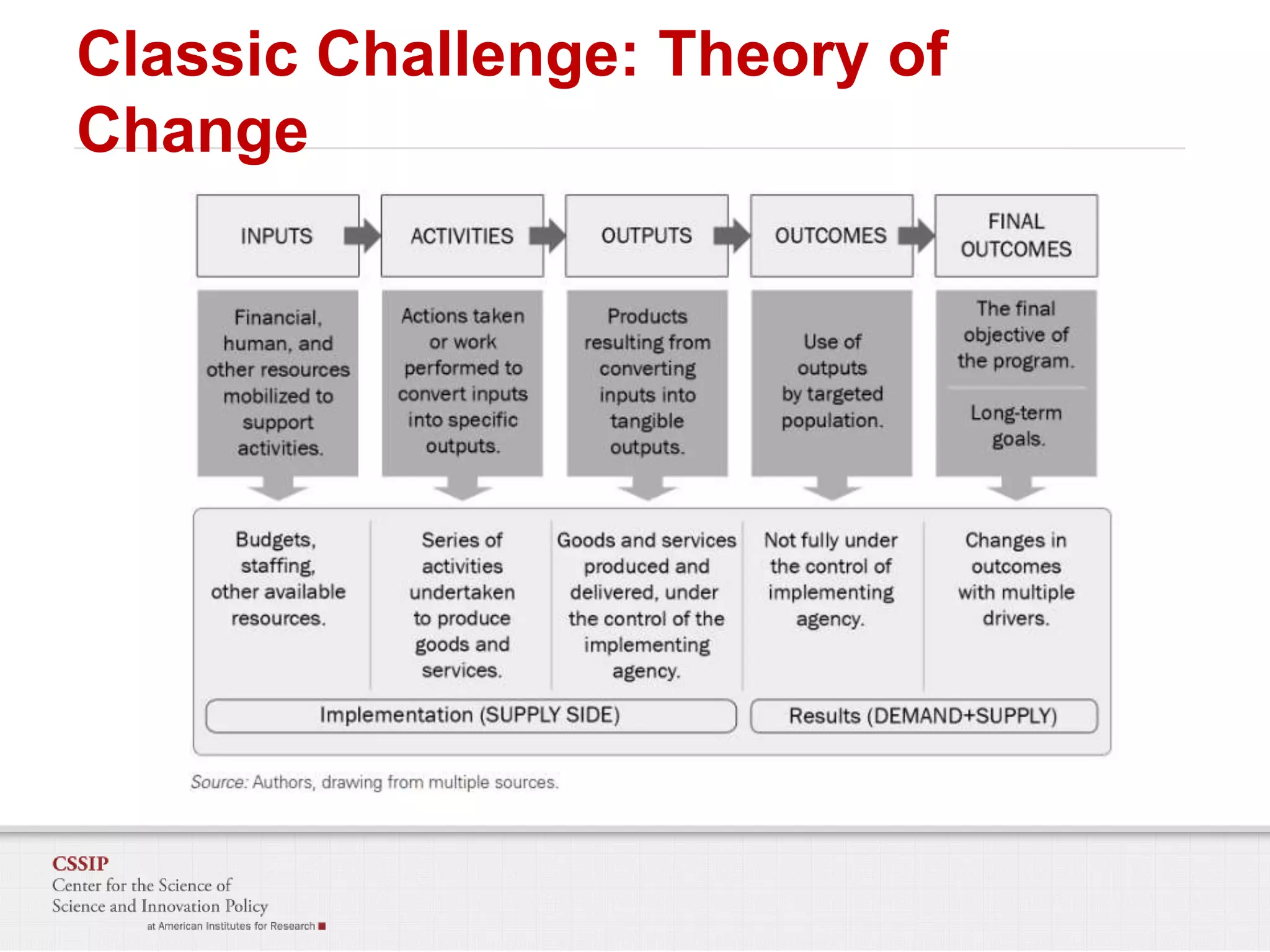

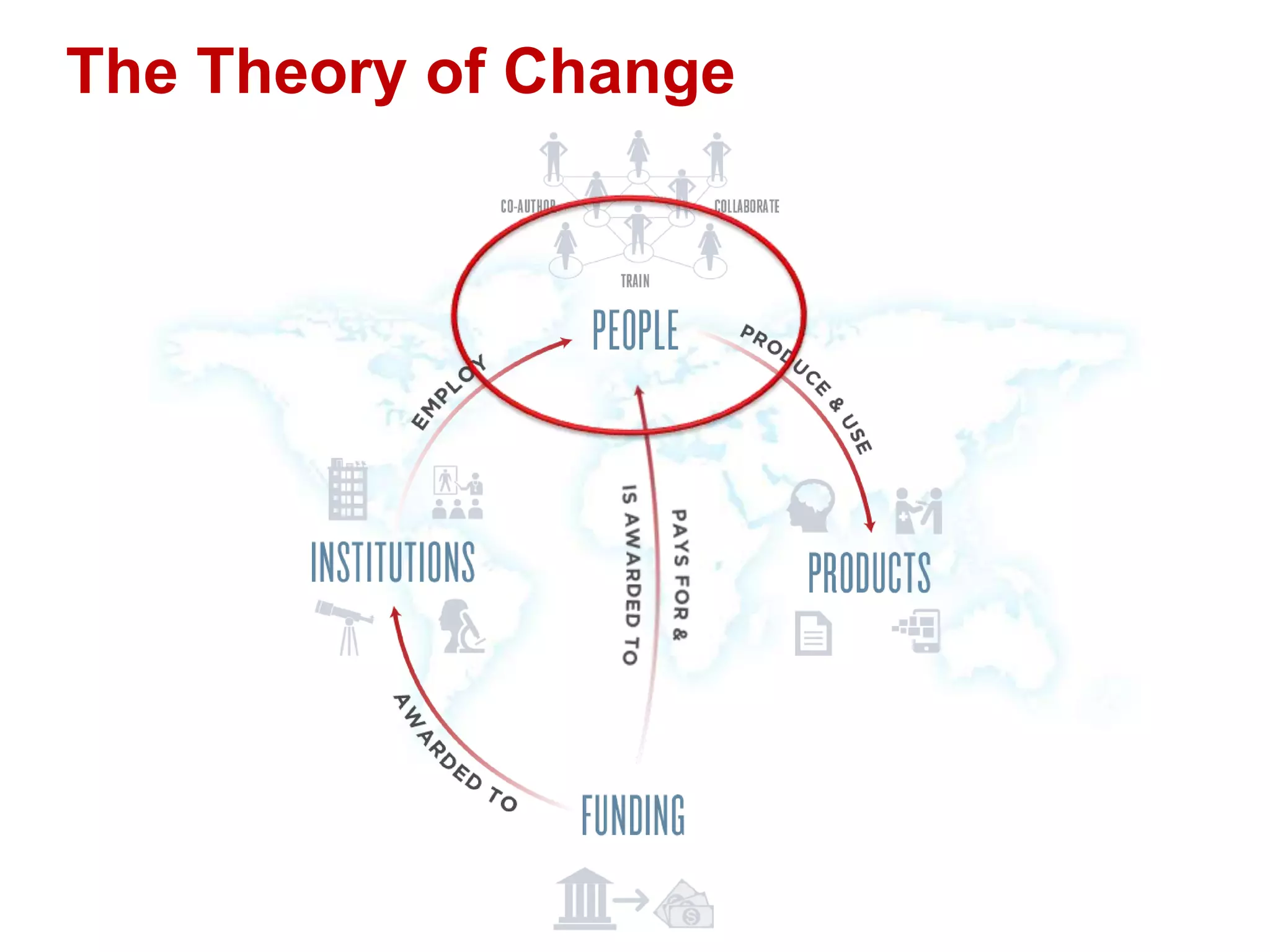

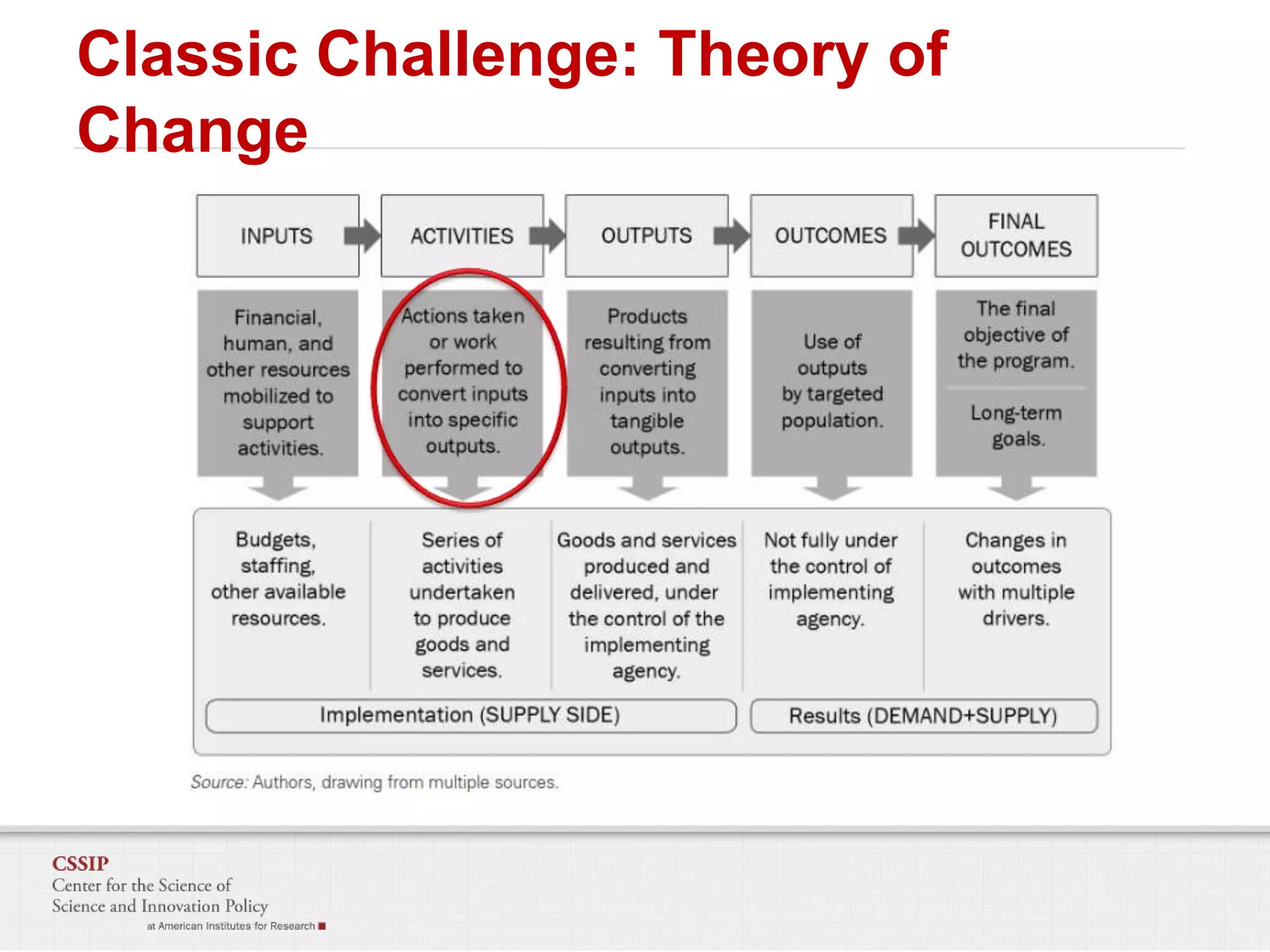

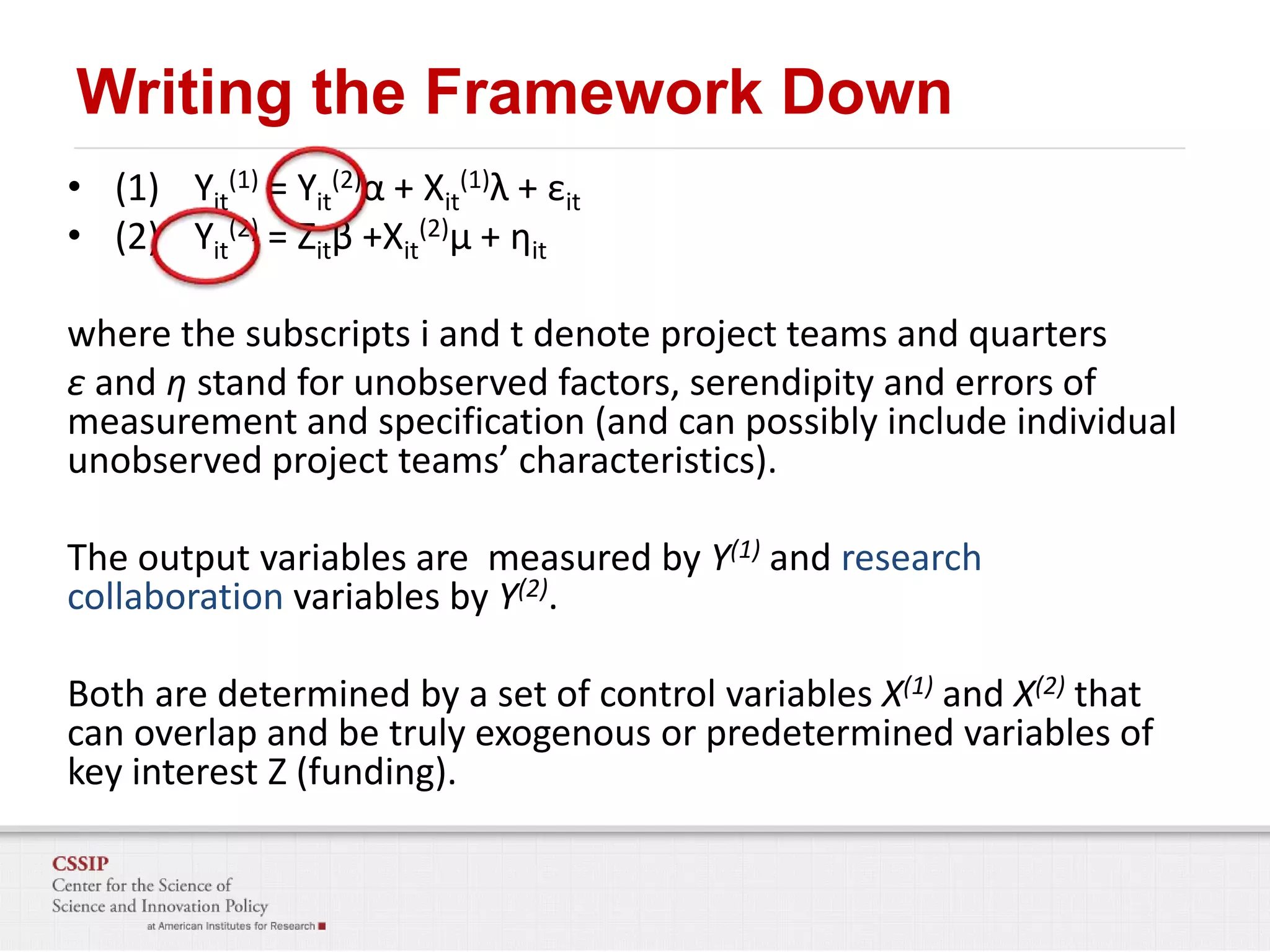

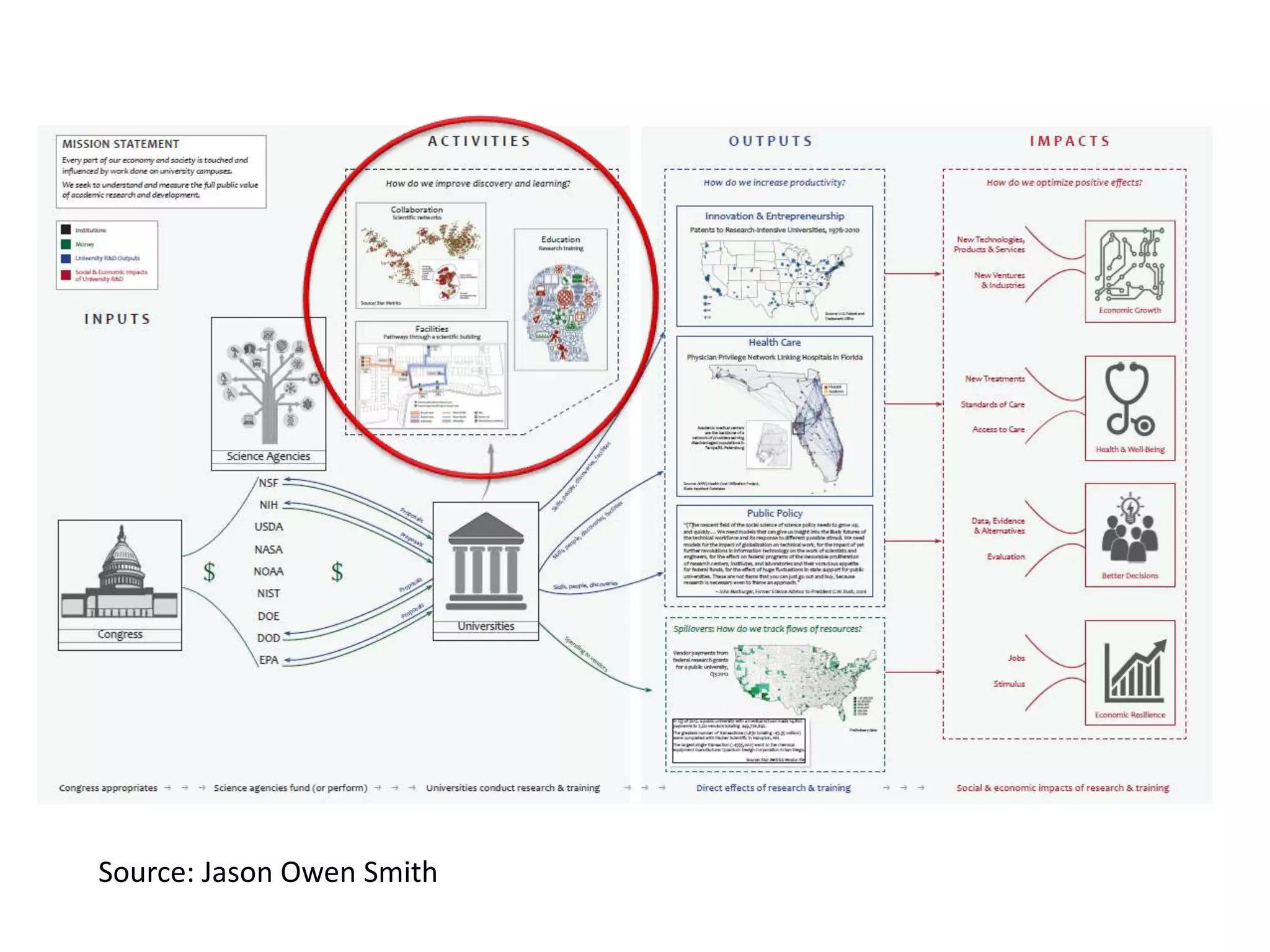

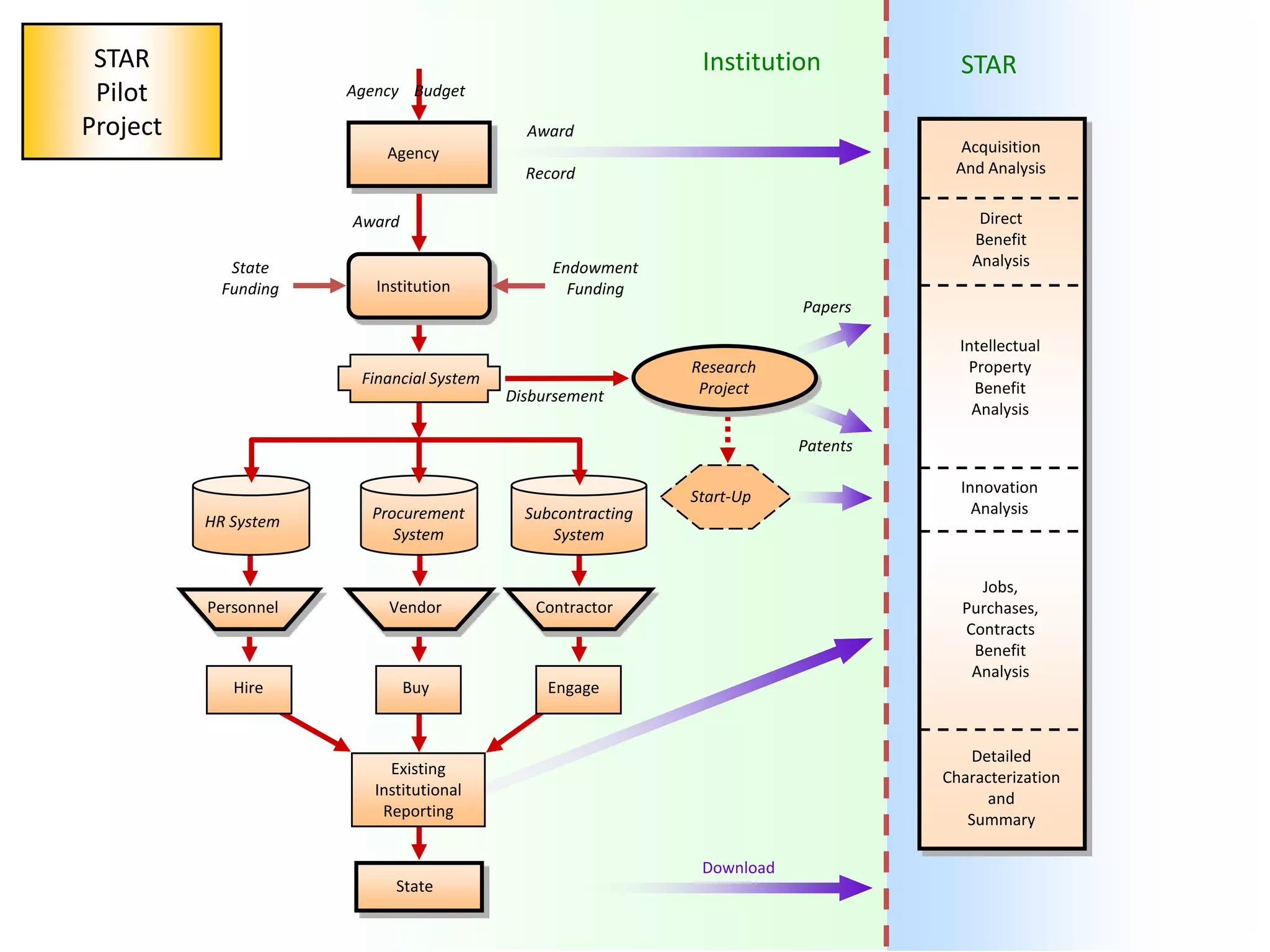

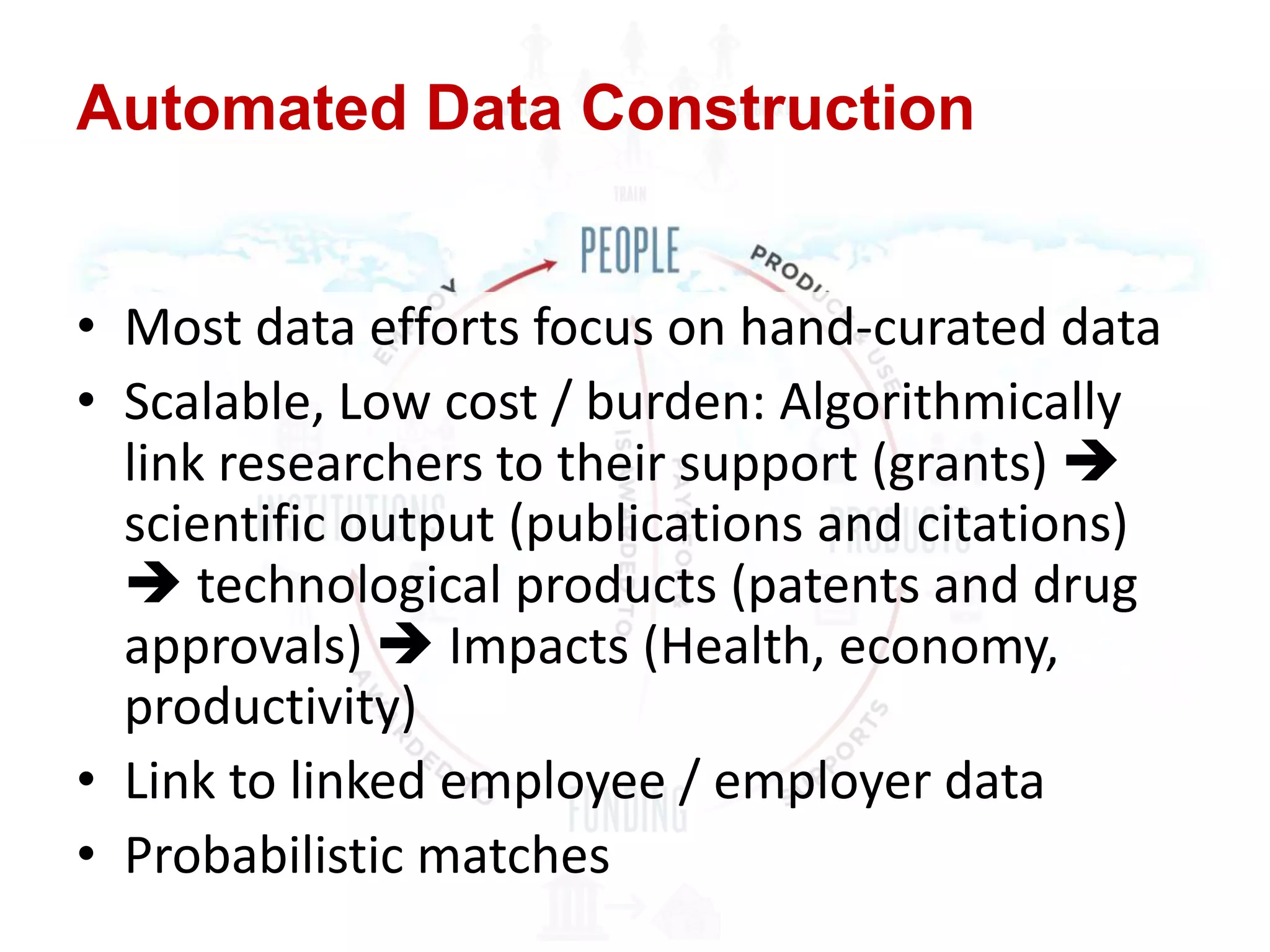

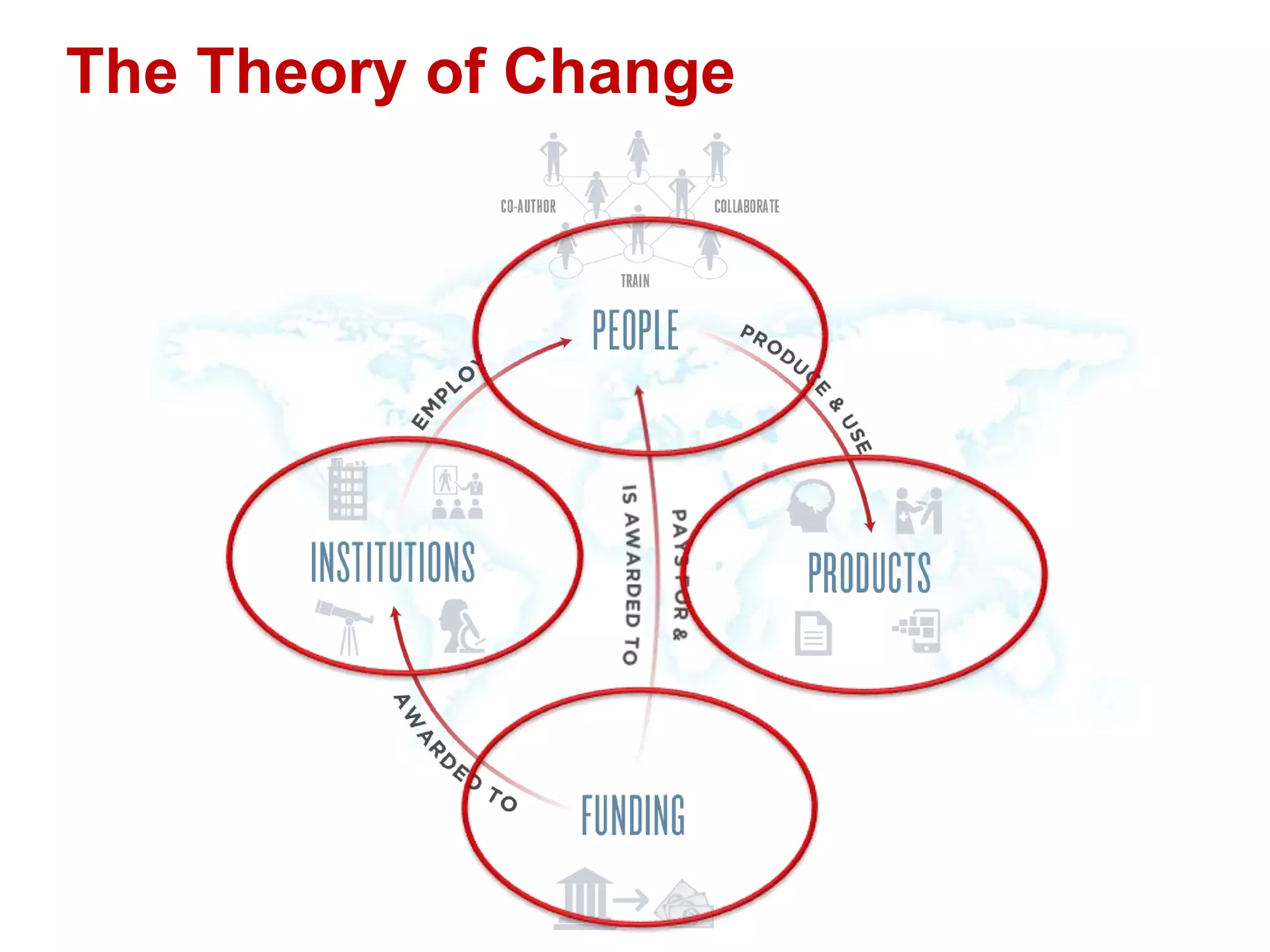

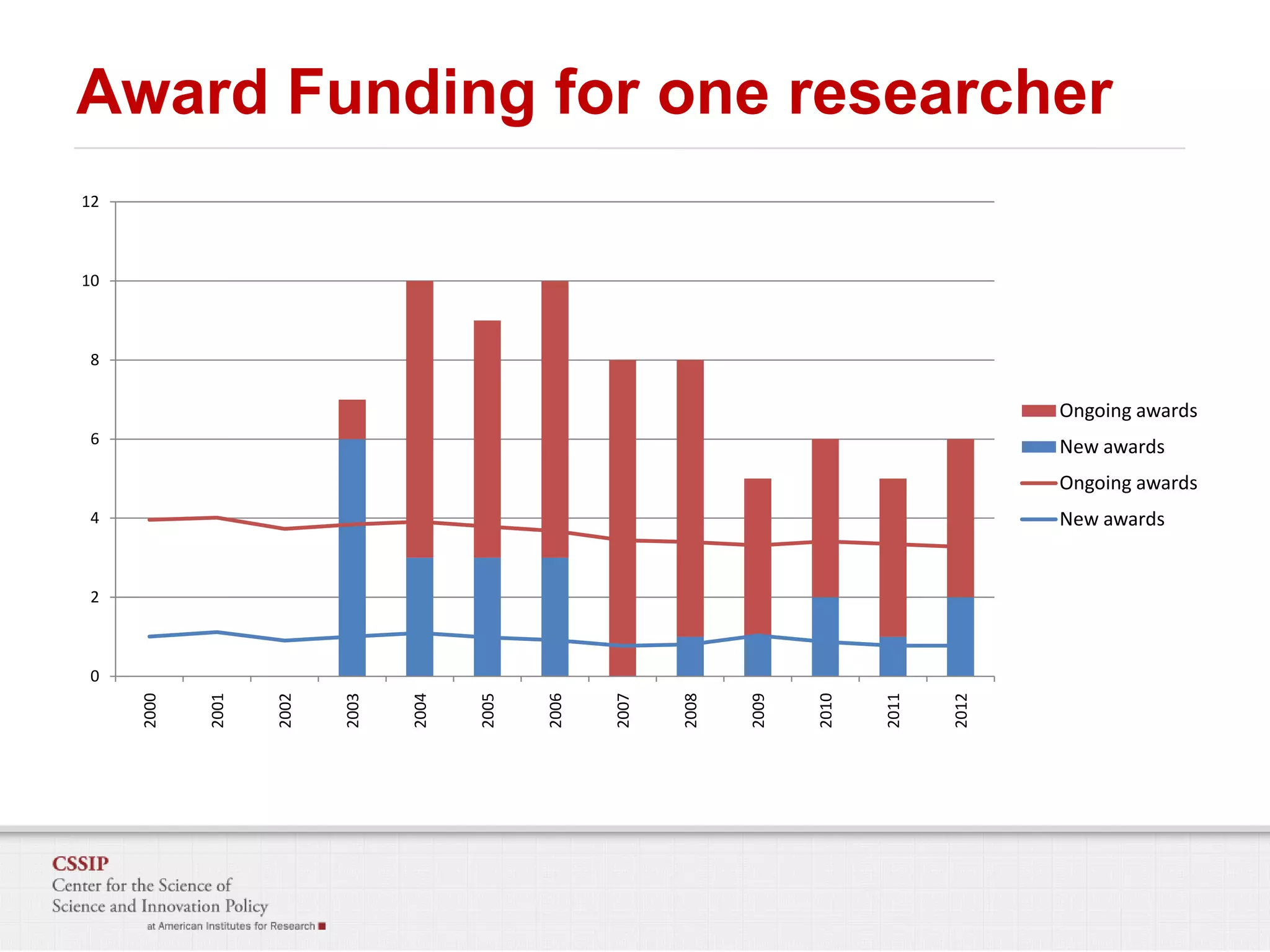

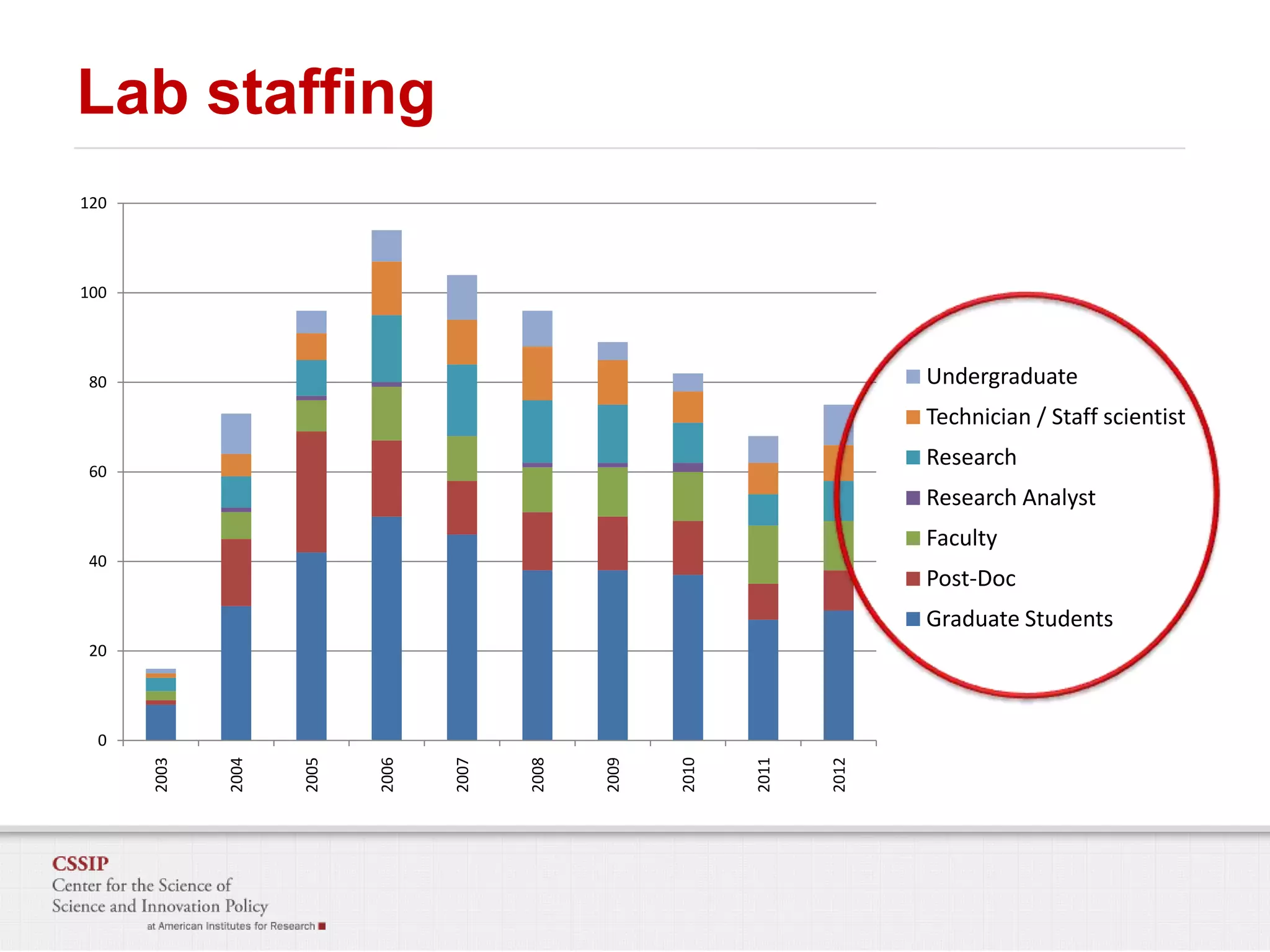

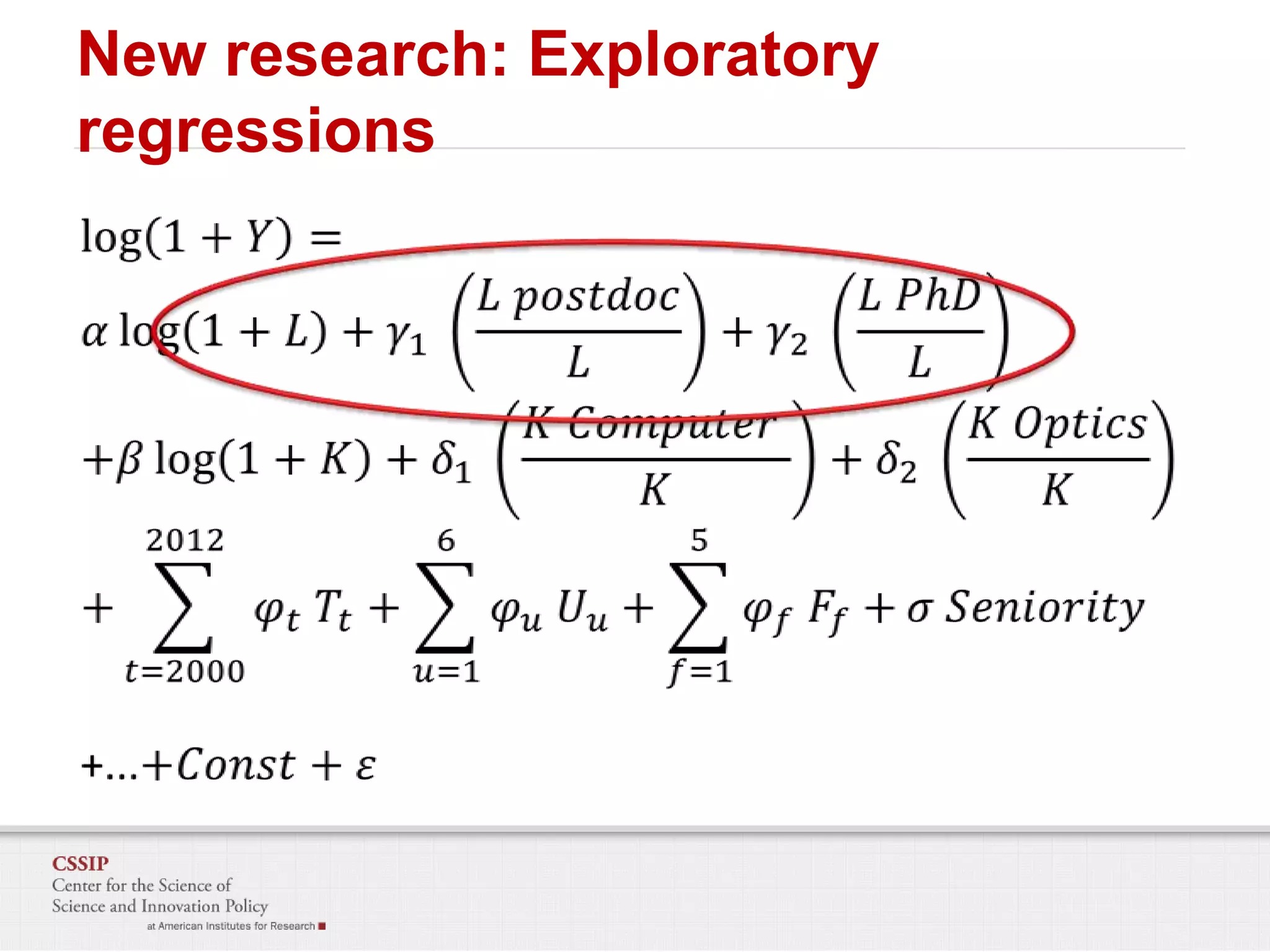

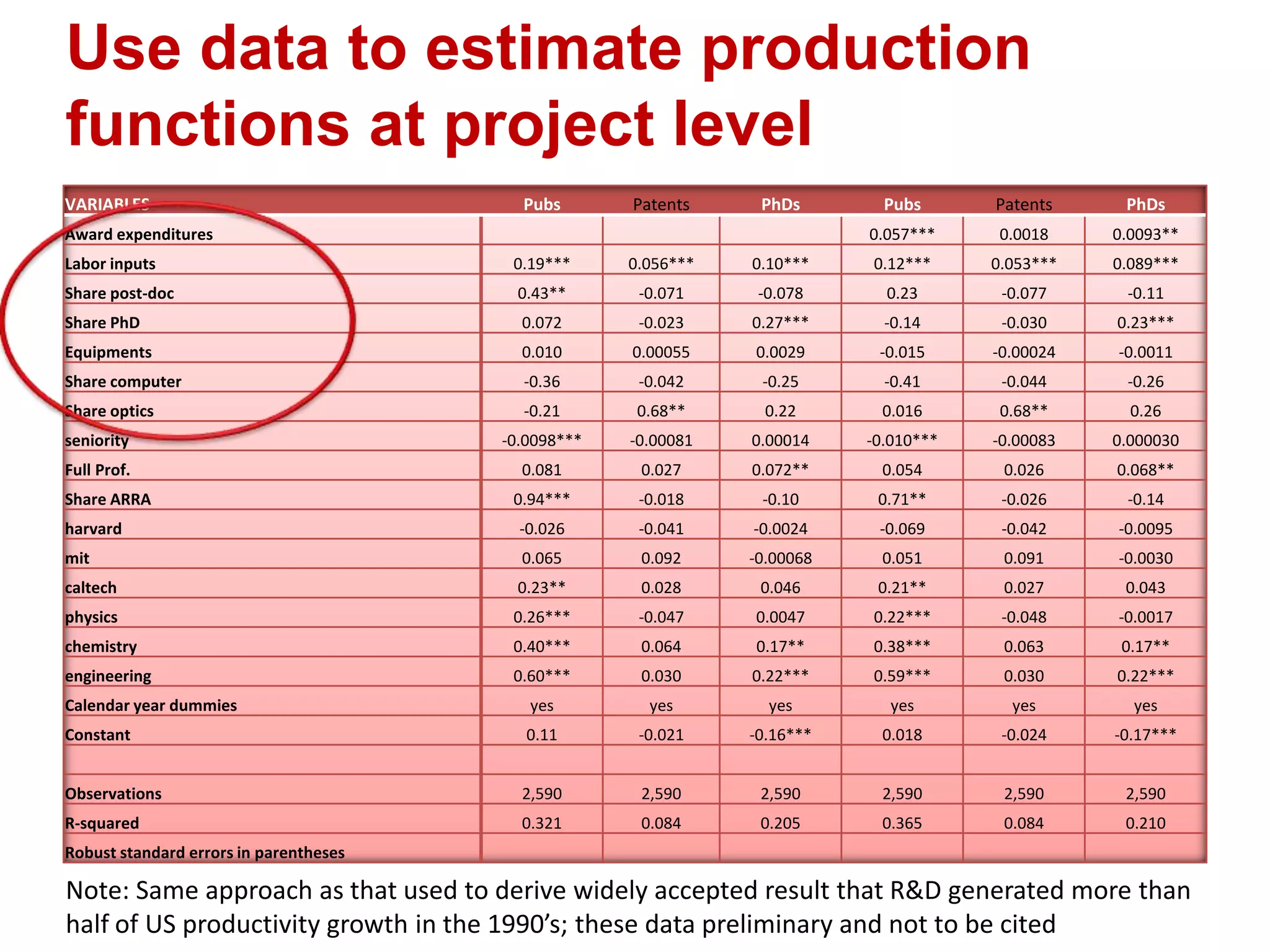

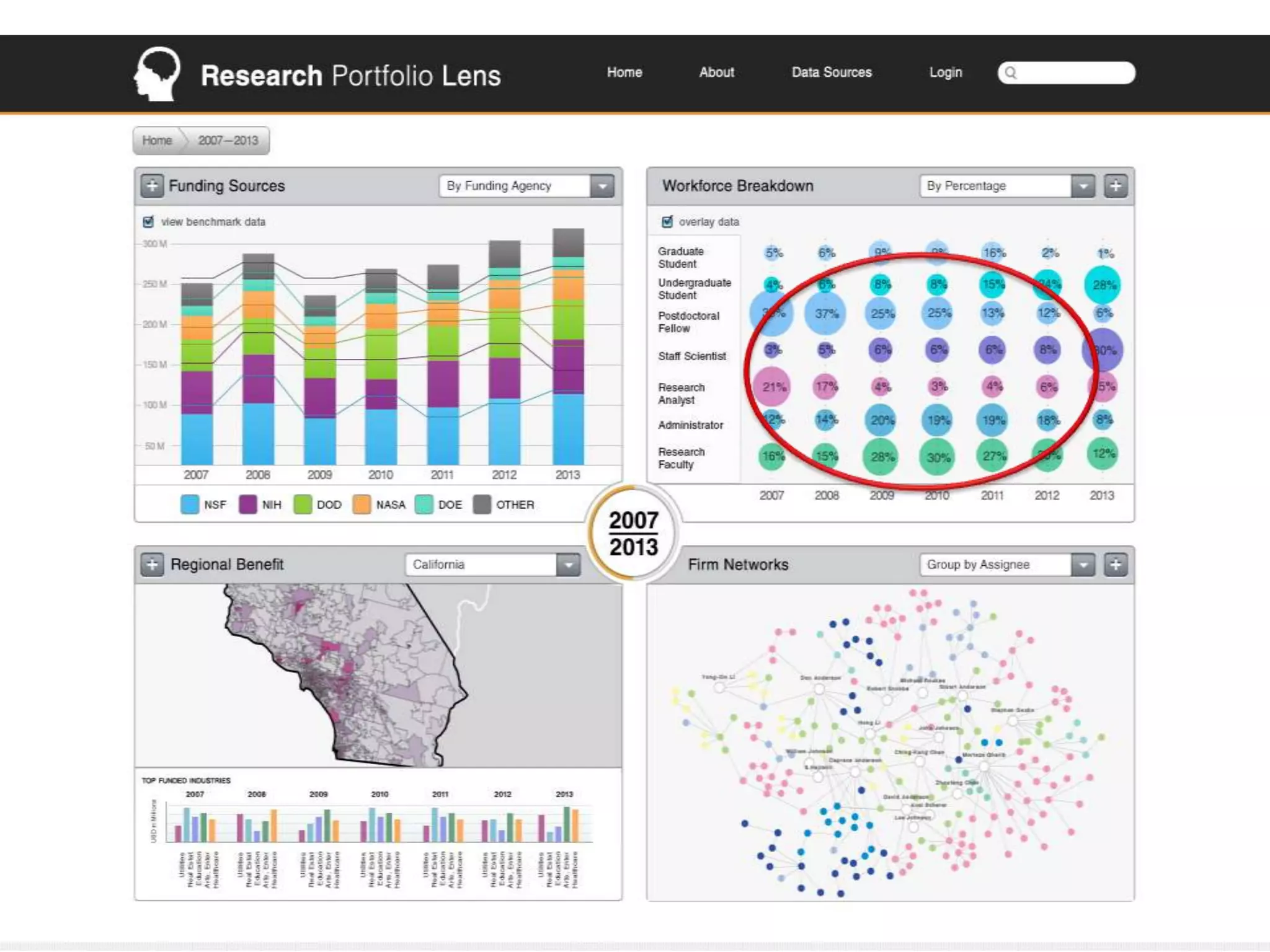

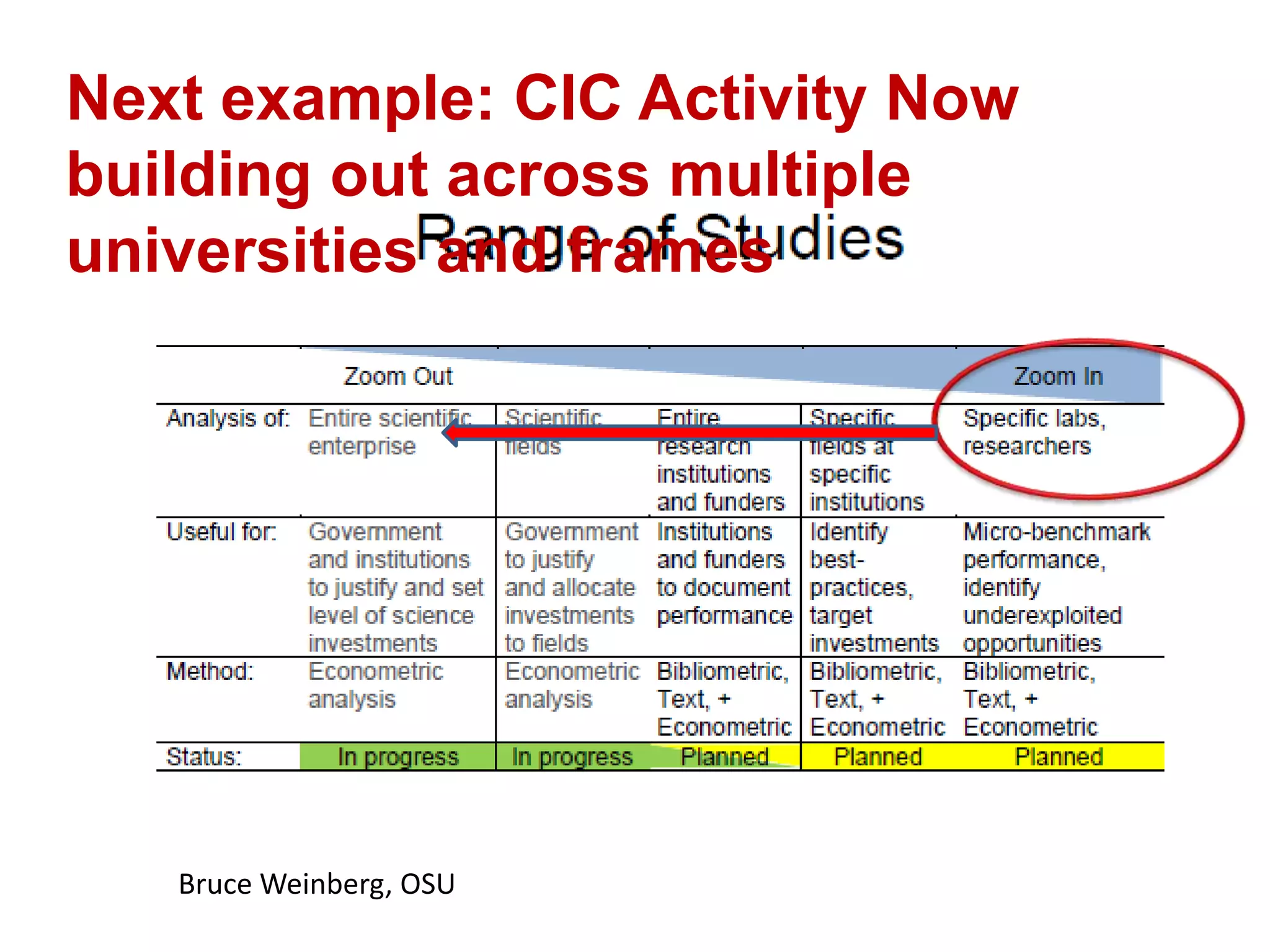

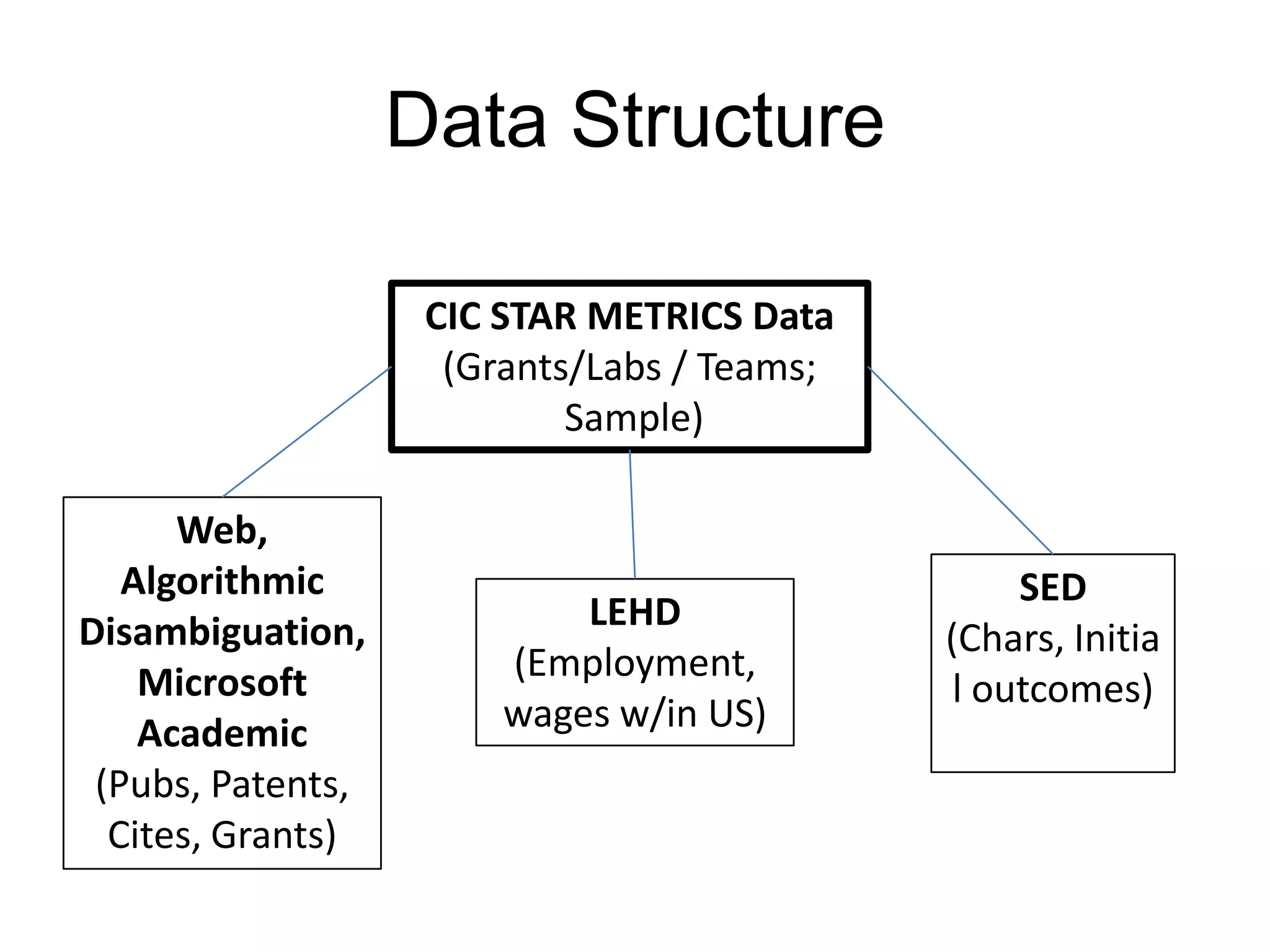

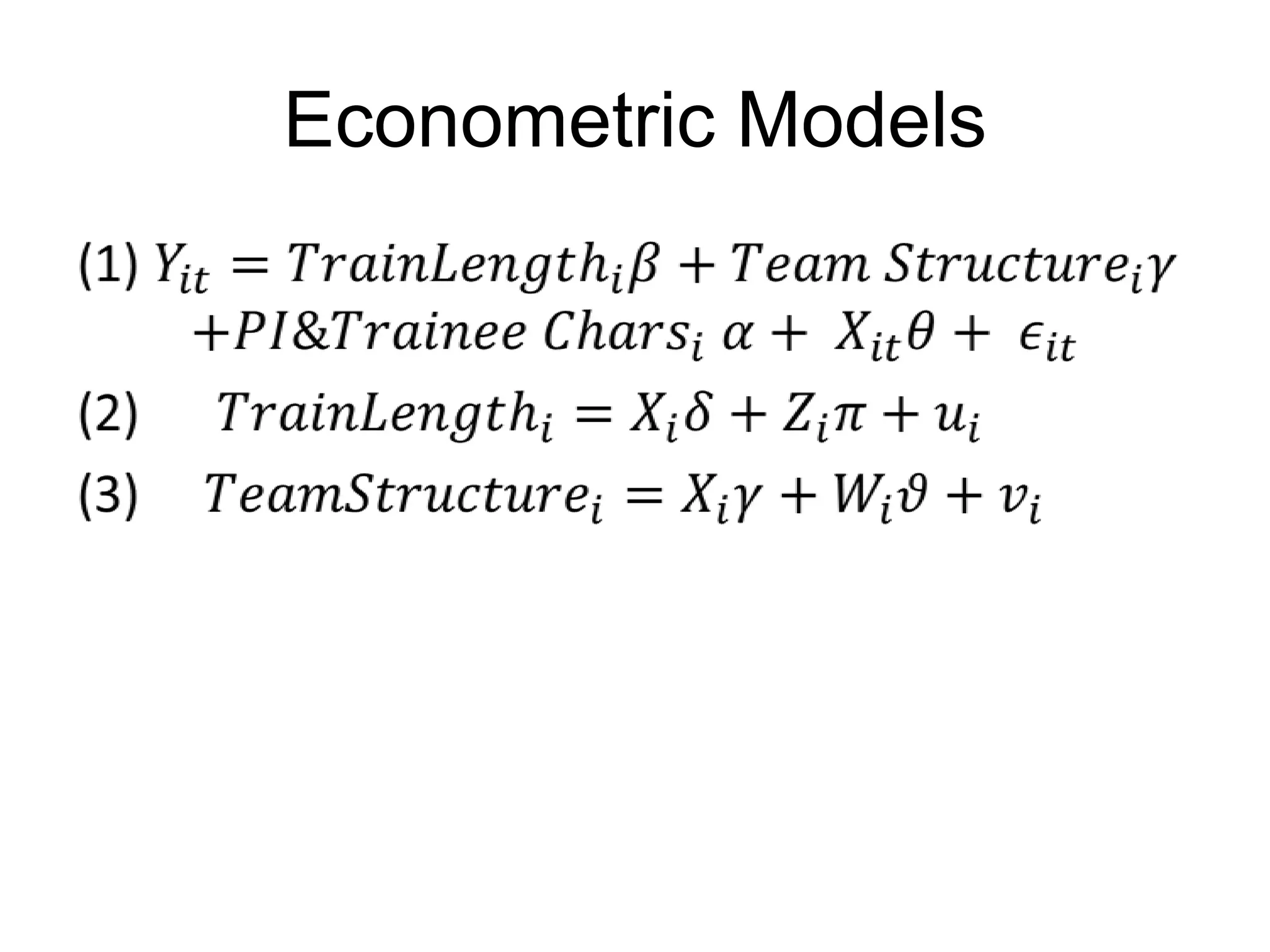

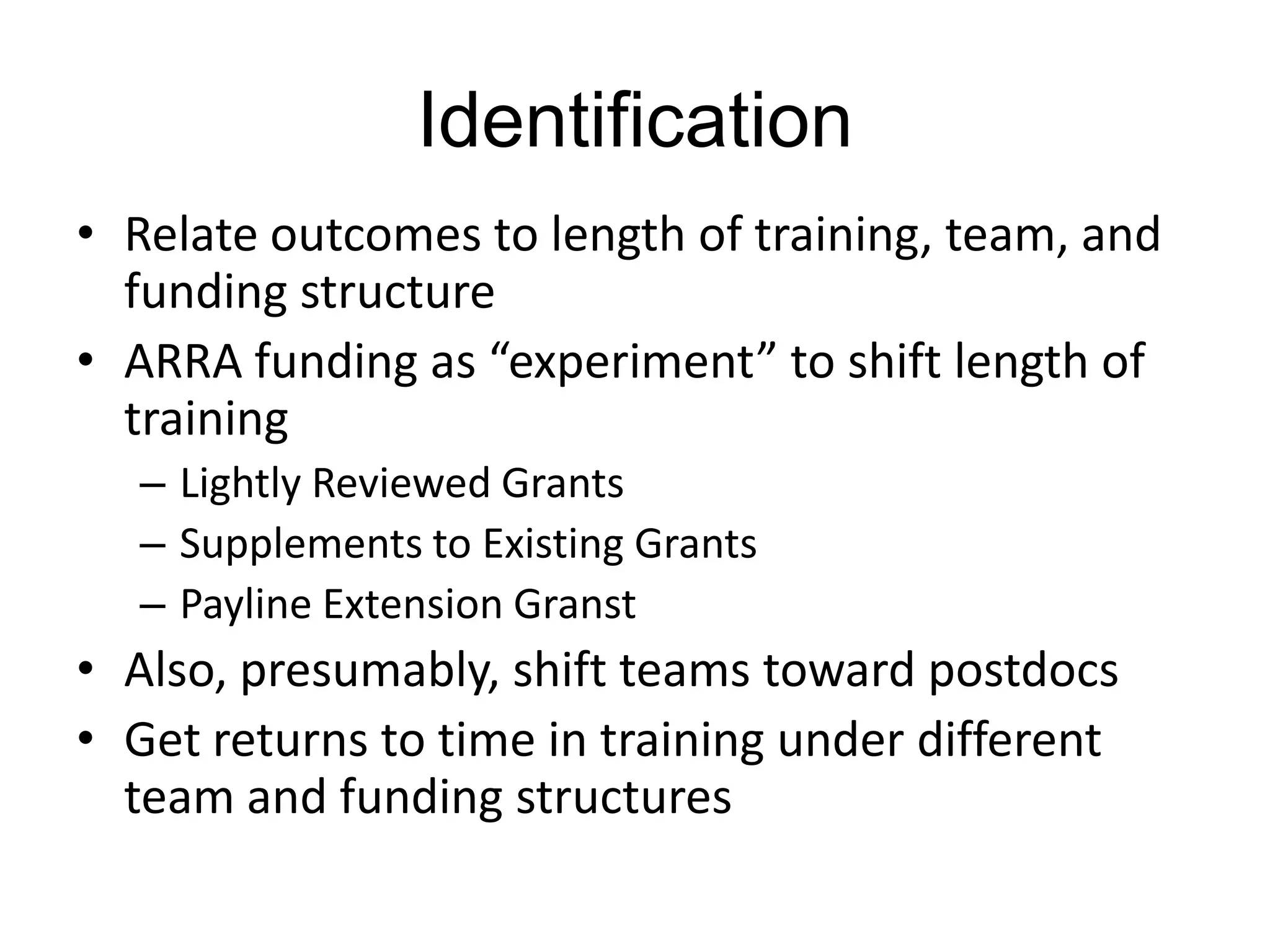

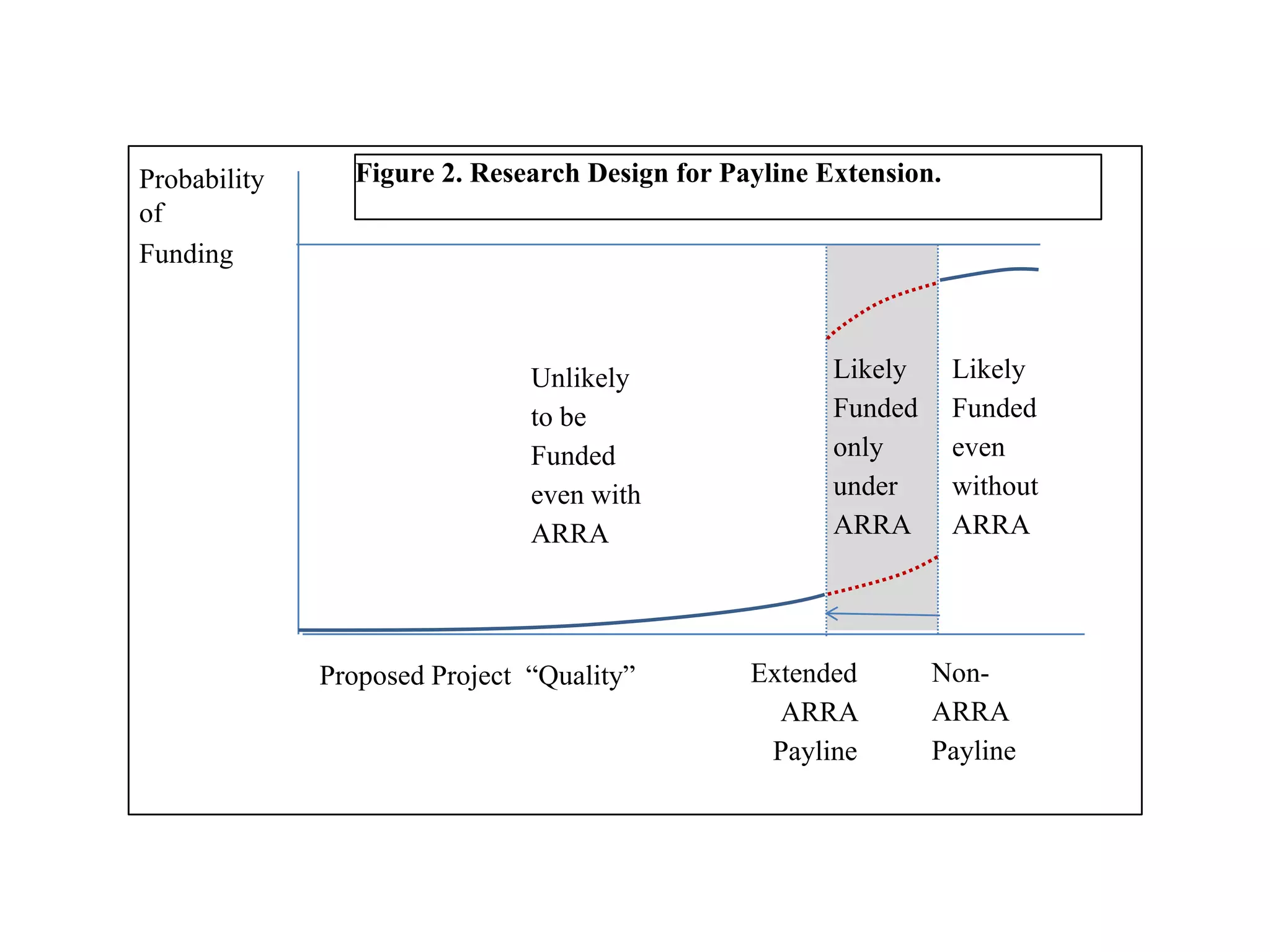

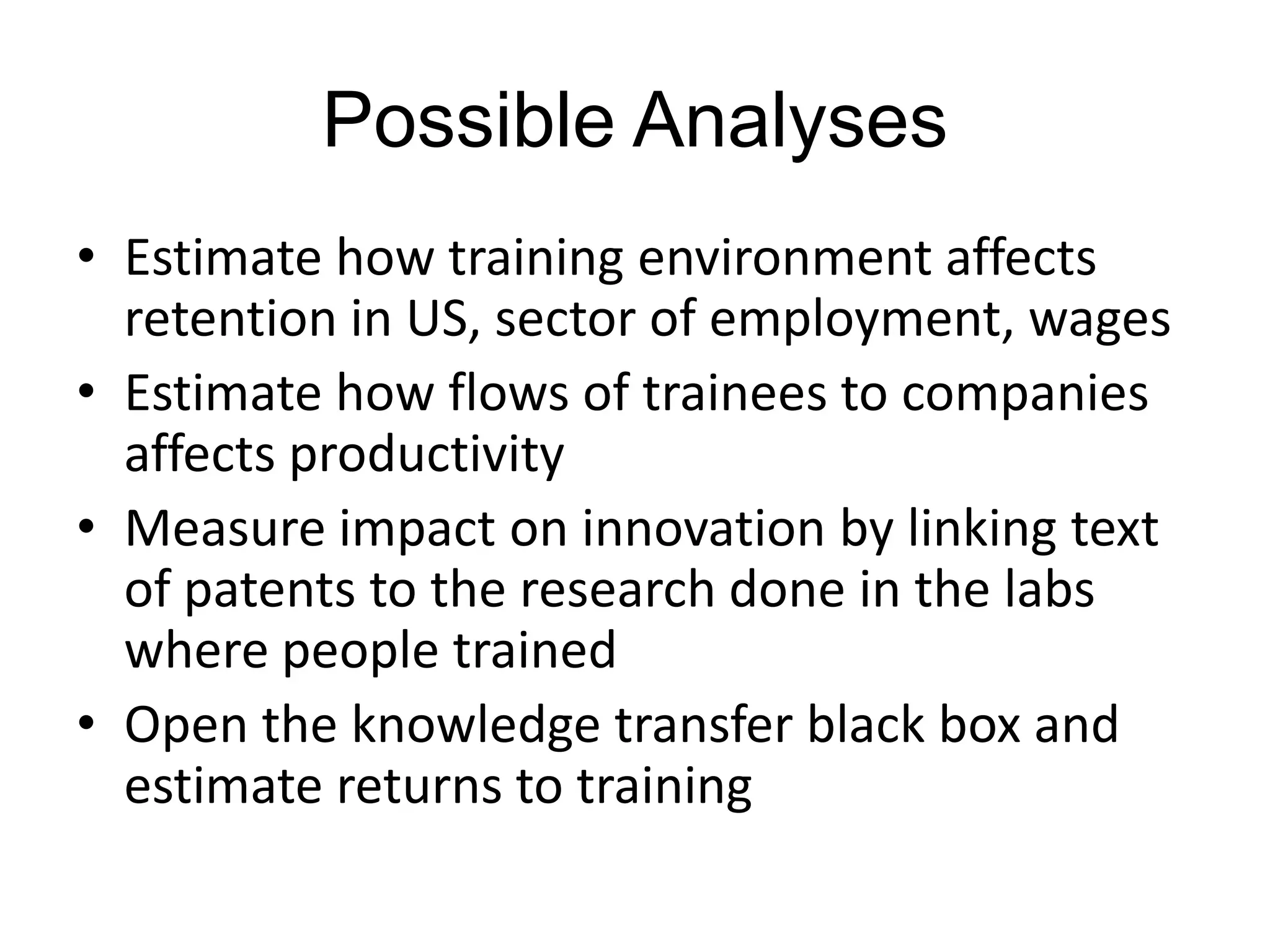

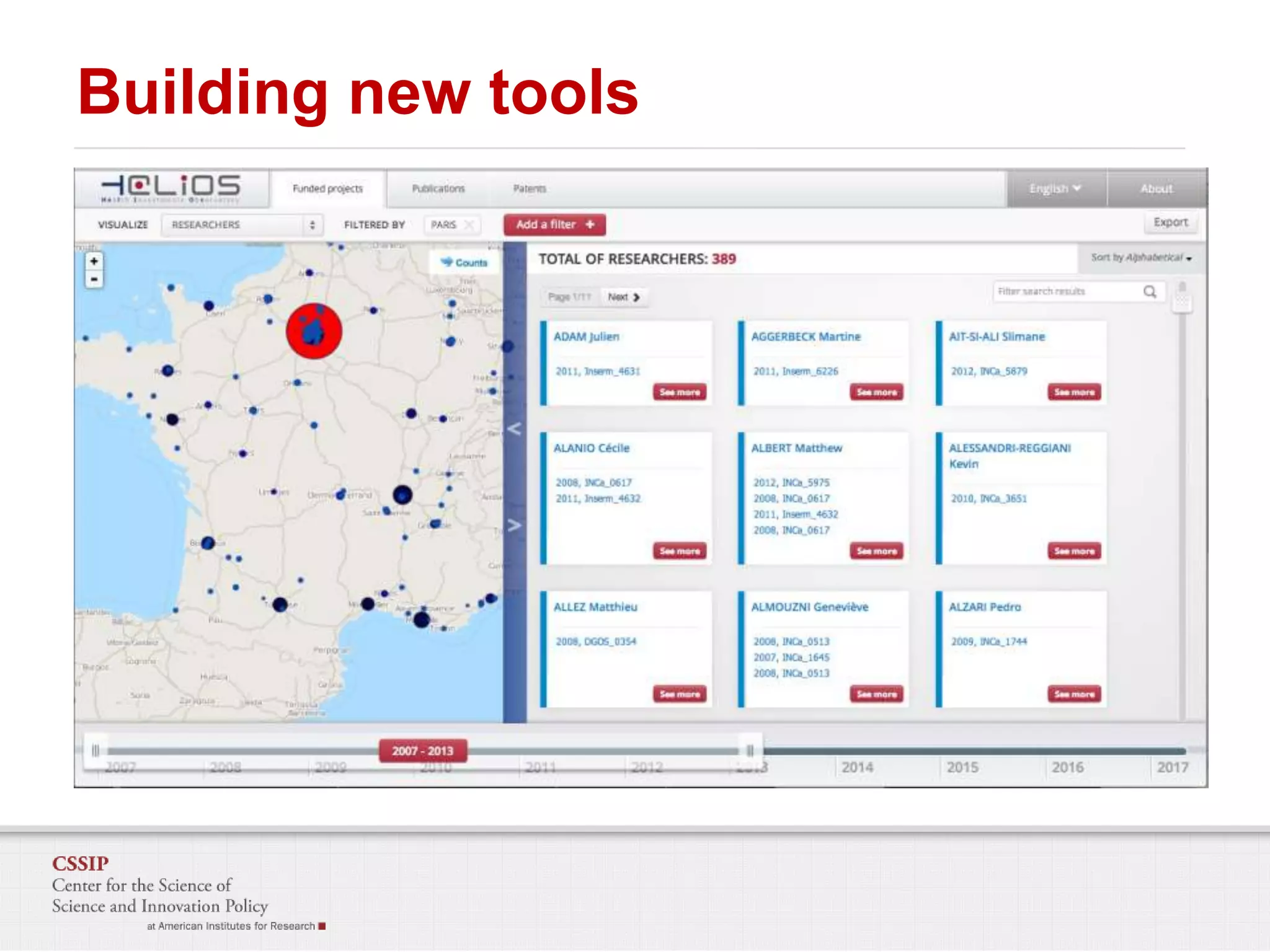

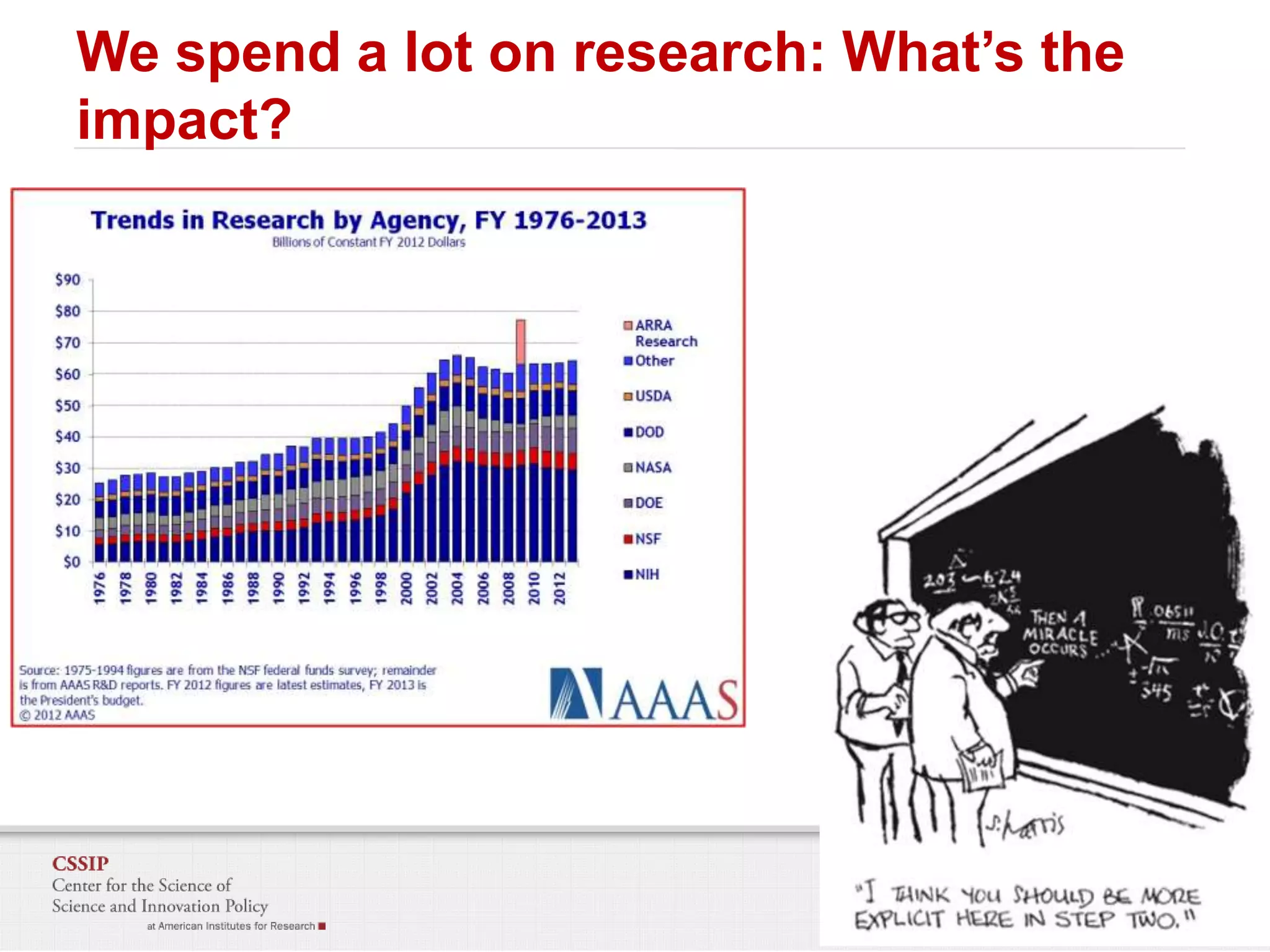

The document presents a framework for measuring the impact of research investments using a scientifically driven, internationally applicable approach. It emphasizes advanced technology for data collection and analysis, with the aim of improving program performance and thereby accountability in government-funded research. Key concepts include a theory of change, empirical frameworks, and collaboration across institutions to evaluate research outcomes effectively.