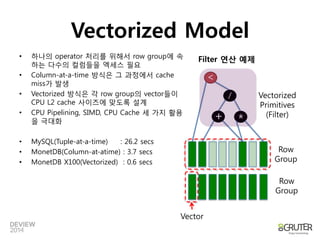

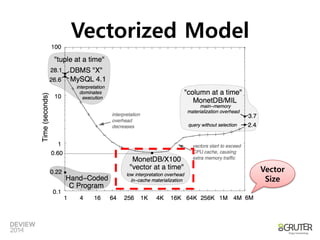

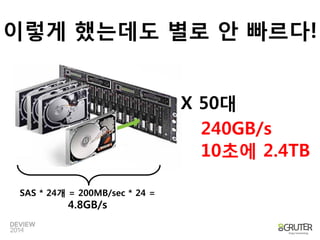

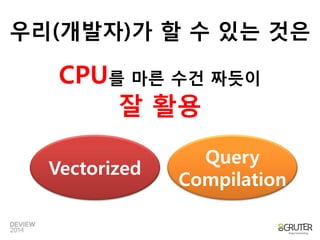

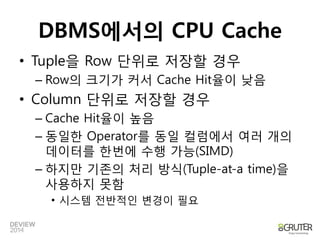

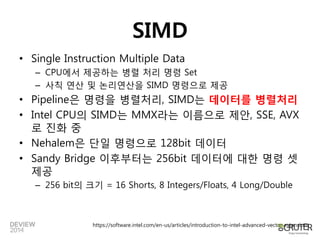

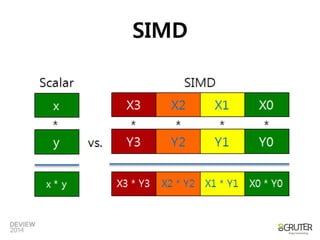

This document discusses techniques for improving database query performance by making better use of CPU resources. It introduces vectorized processing, which operates on multiple data values at once using SIMD instructions, improving CPU pipeline utilization and reducing cache misses. To make SIMD applicable, data is stored in columns rather than rows, and column values are processed together in vectors.
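As a rough illustration of processing a column in vectors, the sketch below adds two columns in fixed-size chunks. The names `VECTOR_SIZE`, `map_add_float_vec`, and `add_columns` are hypothetical, and the chunk size of 1024 is an assumption rather than a value from the slides. The inner loop is plain C that a compiler may auto-vectorize into SIMD instructions; it does not use hand-written intrinsics.

```c
#include <stddef.h>

/* Hypothetical chunk size, chosen so that a few vectors fit in the CPU cache. */
#define VECTOR_SIZE 1024

/* Primitive: element-wise add over one vector of at most VECTOR_SIZE values.
 * The loop body has no branches or function calls, so a compiler may
 * turn it into SIMD instructions (for example at -O3). */
void map_add_float_vec(float *restrict out, const float *restrict a,
                       const float *restrict b, size_t n) {
    for (size_t i = 0; i < n; i++) {
        out[i] = a[i] + b[i];
    }
}

/* Driver: walks two columns of length n one vector at a time.
 * The last vector may be shorter than VECTOR_SIZE. */
void add_columns(float *out, const float *a, const float *b, size_t n) {
    for (size_t start = 0; start < n; start += VECTOR_SIZE) {
        size_t len = (n - start < VECTOR_SIZE) ? (n - start) : VECTOR_SIZE;
        map_add_float_vec(out + start, a + start, b + start, len);
    }
}
```

Calling `add_columns(out, a, b, n)` requires that `out` does not overlap `a` or `b`, because the primitive declares its pointers `restrict`.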

![for (i= 0; i< 128; i++) {

a[i] = b[i] + c[i];

} for (i= 0; i< 128; i+=4) { a[i] = b[i] + c[i]; a[i+1] = b[i+1] + c[i+1]; a[i+2] = b[i+2] + c[i+2]; a[i+3] = b[i+3] + c[i+3]; }

Original Loop

Unrolled Loop

지시자: #pragmaGCC optimize ("unroll-loops")

컴파일옵션: -funroll-loops 등

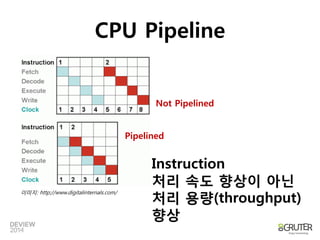

Loop unrolling

컴파일러옵션또는지시자로설정가능

Control Hazard 개선

조건비교](https://image.slidesharecdn.com/2a2vectorizedprocessinginanutshell-140929192632-phpapp02/85/2A2-Vectorized_processing_in_a_Nutshell-19-320.jpg)
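The unrolled loop on the slide works because 128 is a multiple of 4. A minimal sketch of the general case follows, using a hypothetical function `add_arrays_unrolled` that accepts any length `n` and finishes with a cleanup loop. It is meant to show the shape of the transformation; in practice the compiler usually performs it.

```c
#include <stddef.h>

/* Adds b and c element-wise into a, unrolled by a factor of 4.
 * add_arrays_unrolled is an illustrative name, not taken from the slides. */
void add_arrays_unrolled(int *a, const int *b, const int *c, size_t n) {
    size_t i = 0;

    /* Main loop: one loop-condition check per four additions. */
    for (; i + 4 <= n; i += 4) {
        a[i]     = b[i]     + c[i];
        a[i + 1] = b[i + 1] + c[i + 1];
        a[i + 2] = b[i + 2] + c[i + 2];
        a[i + 3] = b[i + 3] + c[i + 3];
    }

    /* Cleanup loop: handles the 0 to 3 elements left over
     * when n is not a multiple of 4. */
    for (; i < n; i++) {
        a[i] = b[i] + c[i];
    }
}
```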

![for (inti= 0; i< num; i++) {

if (data[i] >= 128) {

sum += data[i];

}

}

for (inti= 0; i< num; i++) {

int v = (data[i] -128) >> 31;

sum += ~v & data[i];

}

Branch version

Non branch version

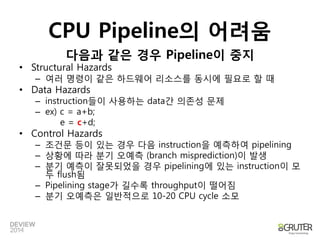

Branch avoidance

http://stackoverflow.com/questions/11227809/why-is-processing-a-sorted-array-faster-than-an-unsorted-array

Random data : 35,683 ms

Sorted data : 11,977 ms

11,977 ms10,402 ms

Control Hazard 개선](https://image.slidesharecdn.com/2a2vectorizedprocessinginanutshell-140929192632-phpapp02/85/2A2-Vectorized_processing_in_a_Nutshell-20-320.jpg)
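The non-branch version on the slide depends on `int` being 32 bits wide and on `>>` performing an arithmetic shift for negative values, which C leaves implementation-defined. The sketch below swaps in a different mask construction that avoids the shift: it negates the 0-or-1 result of the comparison. The function names are hypothetical, and the code assumes two's-complement integers, so that `-1` has all bits set.

```c
#include <stddef.h>
#include <stdint.h>

/* Branch version: the if inside the loop is hard to predict on random data. */
int64_t sum_branch(const int *data, size_t num) {
    int64_t sum = 0;
    for (size_t i = 0; i < num; i++) {
        if (data[i] >= 128) {
            sum += data[i];
        }
    }
    return sum;
}

/* Branch-free version: (data[i] >= 128) evaluates to 1 or 0, so negating it
 * gives a mask of -1 (all ones) or 0. ANDing the mask with the value keeps
 * the value or replaces it with 0. */
int64_t sum_branchless(const int *data, size_t num) {
    int64_t sum = 0;
    for (size_t i = 0; i < num; i++) {
        int mask = -(data[i] >= 128);
        sum += mask & data[i];
    }
    return sum;
}
```

Both functions return the same sum for any input. Whether the second one is faster depends on the data distribution and on what the compiler already does with the first.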

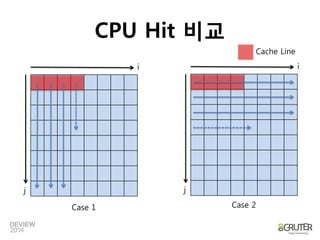

![for (i= 0 to size)

for (j = 0 to size)

do something with ary[j][i]

for (i= 0 to size)

for (j = 0 to size)

do something with ary[i][j]

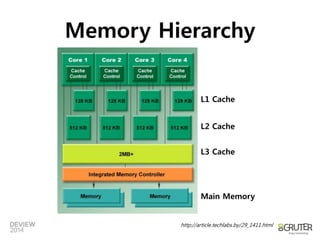

인접하지않은메모리를반복해서

읽기때문에비효율적

순차읽기로인한높은cache hit

Case 1

Case 2

CPU Hit 비교

Classic Example of Spatial Locality](https://image.slidesharecdn.com/2a2vectorizedprocessinginanutshell-140929192632-phpapp02/85/2A2-Vectorized_processing_in_a_Nutshell-23-320.jpg)
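The two traversal orders can be written out in C as follows. This is a minimal sketch: `SIZE` and the function names are hypothetical, and the comments assume C's row-major array layout and a cache line much smaller than one row.

```c
#include <stddef.h>

#define SIZE 512  /* hypothetical matrix dimension */

/* Case 1: the inner loop walks down a column, so consecutive accesses are
 * SIZE * sizeof(int) bytes apart and typically land on different cache lines. */
long sum_column_order(int ary[SIZE][SIZE]) {
    long sum = 0;
    for (size_t i = 0; i < SIZE; i++) {
        for (size_t j = 0; j < SIZE; j++) {
            sum += ary[j][i];
        }
    }
    return sum;
}

/* Case 2: the inner loop walks along a row, so consecutive accesses are
 * adjacent in memory (C stores 2D arrays row by row). */
long sum_row_order(int ary[SIZE][SIZE]) {
    long sum = 0;
    for (size_t i = 0; i < SIZE; i++) {
        for (size_t j = 0; j < SIZE; j++) {
            sum += ary[i][j];
        }
    }
    return sum;
}
```

Both functions compute the same result. Only the order of memory accesses differs, which is what a timing comparison of the two would measure.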

![OR

출처:[Marcin2009]

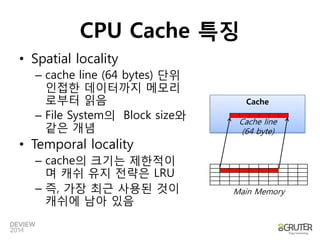

TupleMemory Model

N-array Storage Model

Decomposed Storage

Model](https://image.slidesharecdn.com/2a2vectorizedprocessinginanutshell-140929192632-phpapp02/85/2A2-Vectorized_processing_in_a_Nutshell-30-320.jpg)
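In C terms, the two storage models correspond roughly to an array of structs (N-ary, row-oriented) and a struct of arrays (decomposed, column-oriented). The sketch below uses a hypothetical three-attribute table; the type names, field names, and `NUM_ROWS` are all illustrative assumptions.

```c
#include <stddef.h>

#define NUM_ROWS 4  /* hypothetical table size */

/* N-ary storage (row store): all attributes of one tuple sit together. */
struct order_row {
    int    id;
    double price;
    int    quantity;
};

/* Decomposed storage (column store): each attribute has its own array. */
struct order_columns {
    int    id[NUM_ROWS];
    double price[NUM_ROWS];
    int    quantity[NUM_ROWS];
};

/* Scanning one attribute in the row store strides over the unused
 * id and quantity fields of every tuple. */
double total_price_rows(const struct order_row *rows, size_t n) {
    double total = 0.0;
    for (size_t i = 0; i < n; i++) {
        total += rows[i].price;
    }
    return total;
}

/* The same scan in the column store reads only the dense price array. */
double total_price_columns(const struct order_columns *cols, size_t n) {
    double total = 0.0;
    for (size_t i = 0; i < n; i++) {
        total += cols->price[i];
    }
    return total;
}
```

The column layout is also the one that lets a loop like `total_price_columns` load several adjacent values into one SIMD register.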

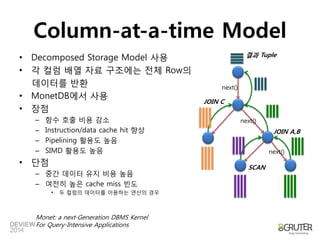

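![Column-at-a-time Model

• Performance comparison

– MySQL: 26.2 sec, MonetDB: 3.7 sec

– TPC-H Q1 (single-table GROUP BY and ORDER BY; the result has 4 rows)

– Results of a comparison experiment on in-memory data

• A separate primitive operator is needed for each logical and arithmetic operation

Example of a greater-than primitive operator

/* Returns a vector of row IDs satisfying the comparison
 * and the count of entries in the vector. */
int select_gt_float(int* res, float* column, float val, int n) {
    int idx = 0;
    for (int i = 0; i < n; i++) {
        res[idx] = i;             /* store the candidate row ID */
        idx += column[i] > val;   /* keep it only if the comparison holds */
    }
    return idx;
}

About 5,000 primitives are needed to cover the basic data types, arithmetic operations, and logical operations](https://image.slidesharecdn.com/2a2vectorizedprocessinginanutshell-140929192632-phpapp02/85/2A2-Vectorized_processing_in_a_Nutshell-36-320.jpg)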
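The count of roughly 5,000 primitives comes from multiplying data types by operations, since each combination needs its own tight loop. One way to picture this is a macro that stamps out one selection primitive per combination. The macro `DEFINE_SELECT` and the generated names below are assumptions for illustration; the slides do not say how MonetDB actually produces its primitives.

```c
/* Generates a selection primitive NAME for element type TYPE and
 * comparison operator OP. Each generated function writes the positions of
 * qualifying values into res and returns how many there are. res needs
 * room for n entries, because every iteration stores a candidate position
 * before the comparison decides whether to keep it. */
#define DEFINE_SELECT(NAME, TYPE, OP)                        \
    int NAME(int *res, const TYPE *column, TYPE val, int n) { \
        int idx = 0;                                         \
        for (int i = 0; i < n; i++) {                        \
            res[idx] = i;                                    \
            idx += column[i] OP val;                         \
        }                                                    \
        return idx;                                          \
    }

/* Three of the many type/operator combinations. */
DEFINE_SELECT(select_gt_float, float, >)
DEFINE_SELECT(select_lt_int, int, <)
DEFINE_SELECT(select_ge_double, double, >=)
```

For example, `select_lt_int(sel, values, 5, n)` fills `sel` with the positions of all entries of `values` that are smaller than 5, and a later primitive can then process only those positions.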