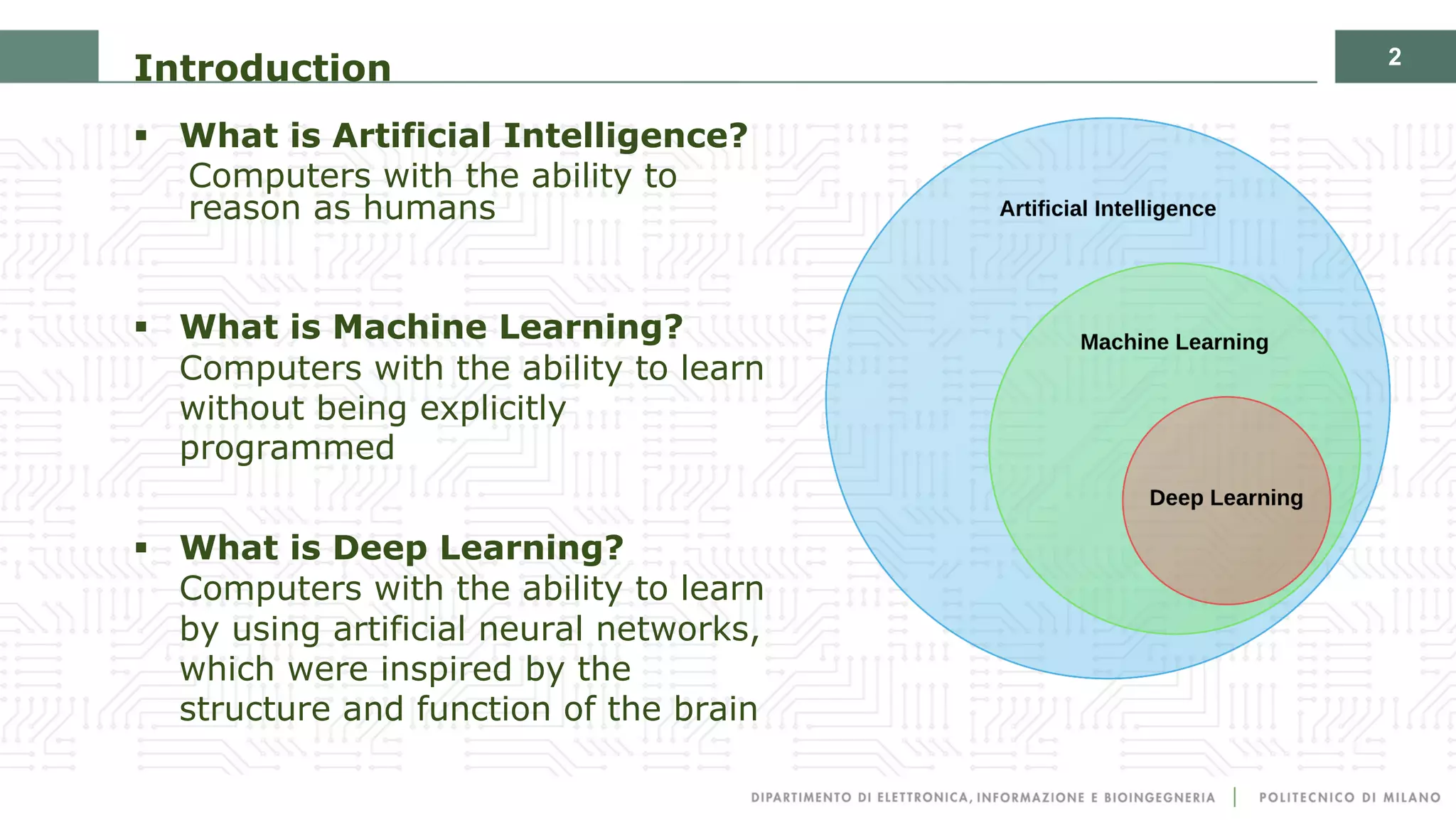

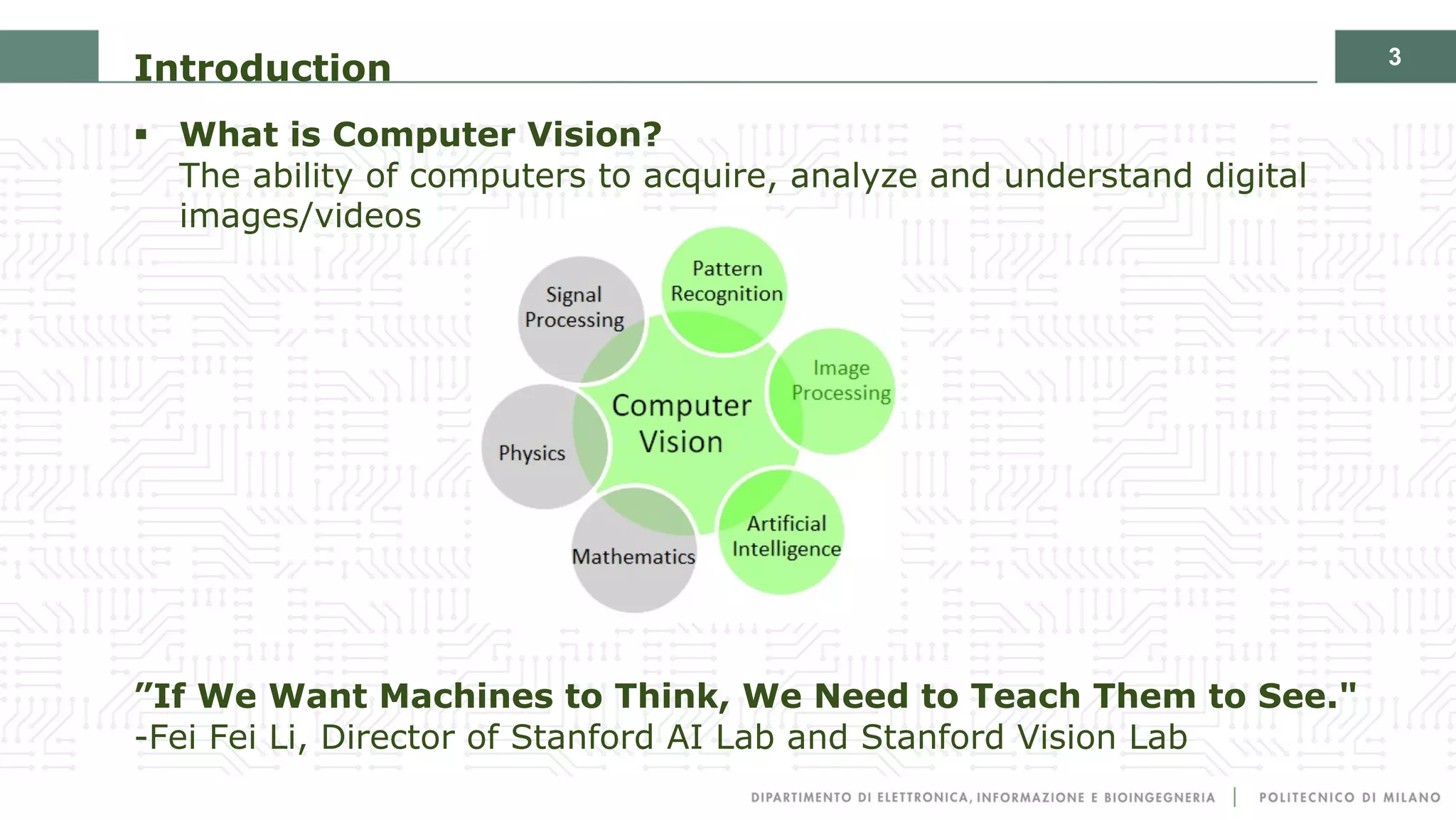

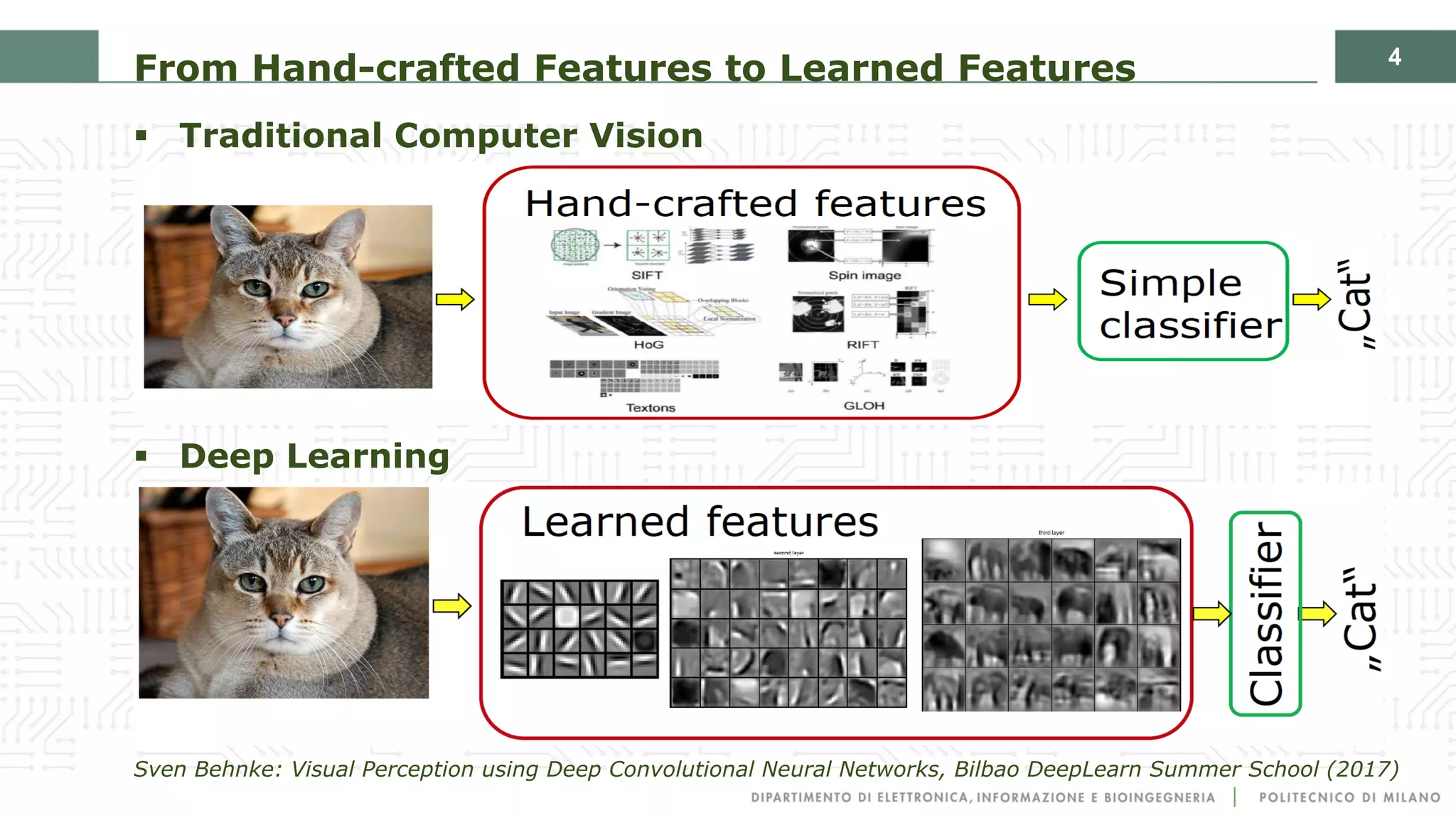

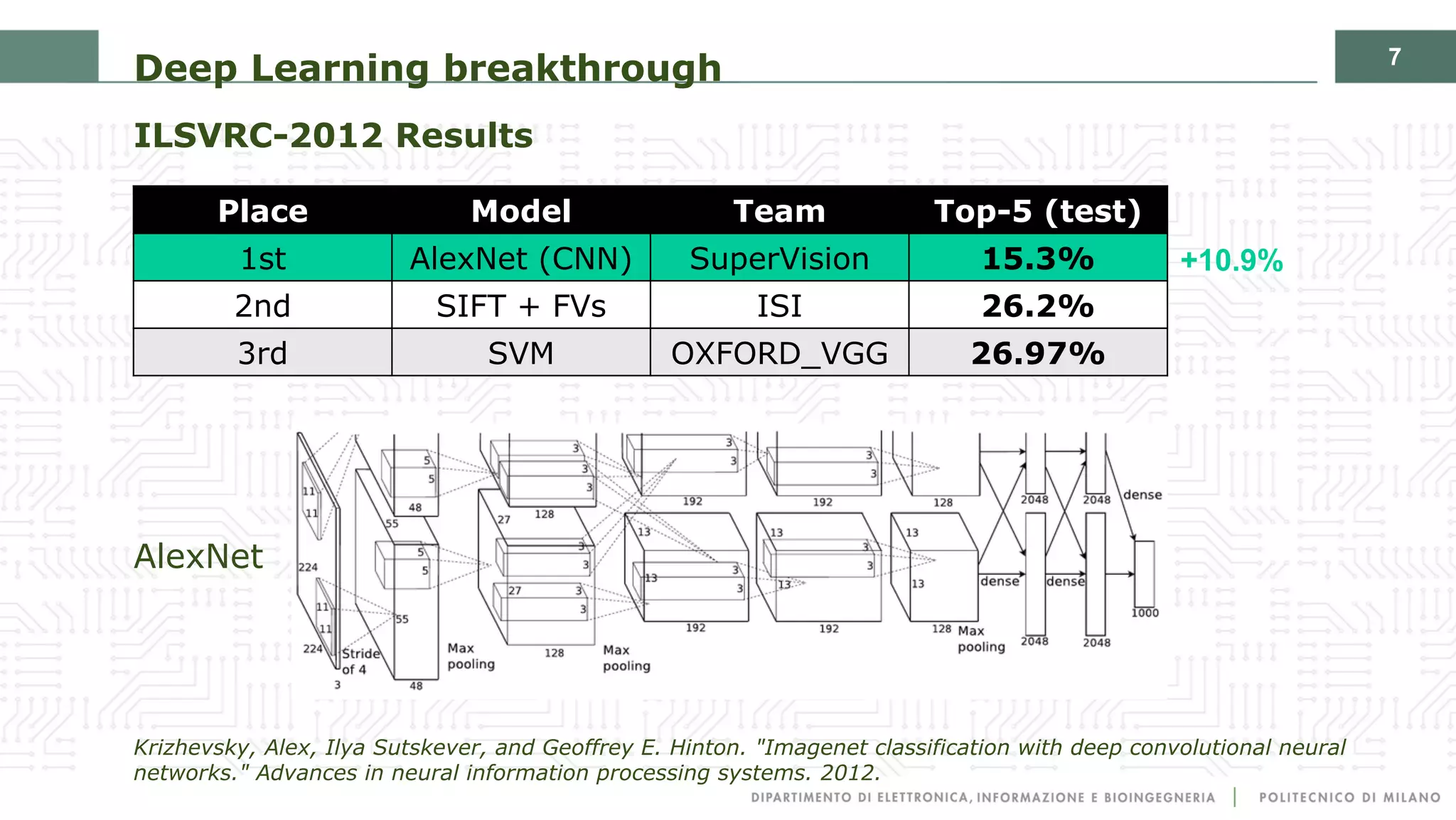

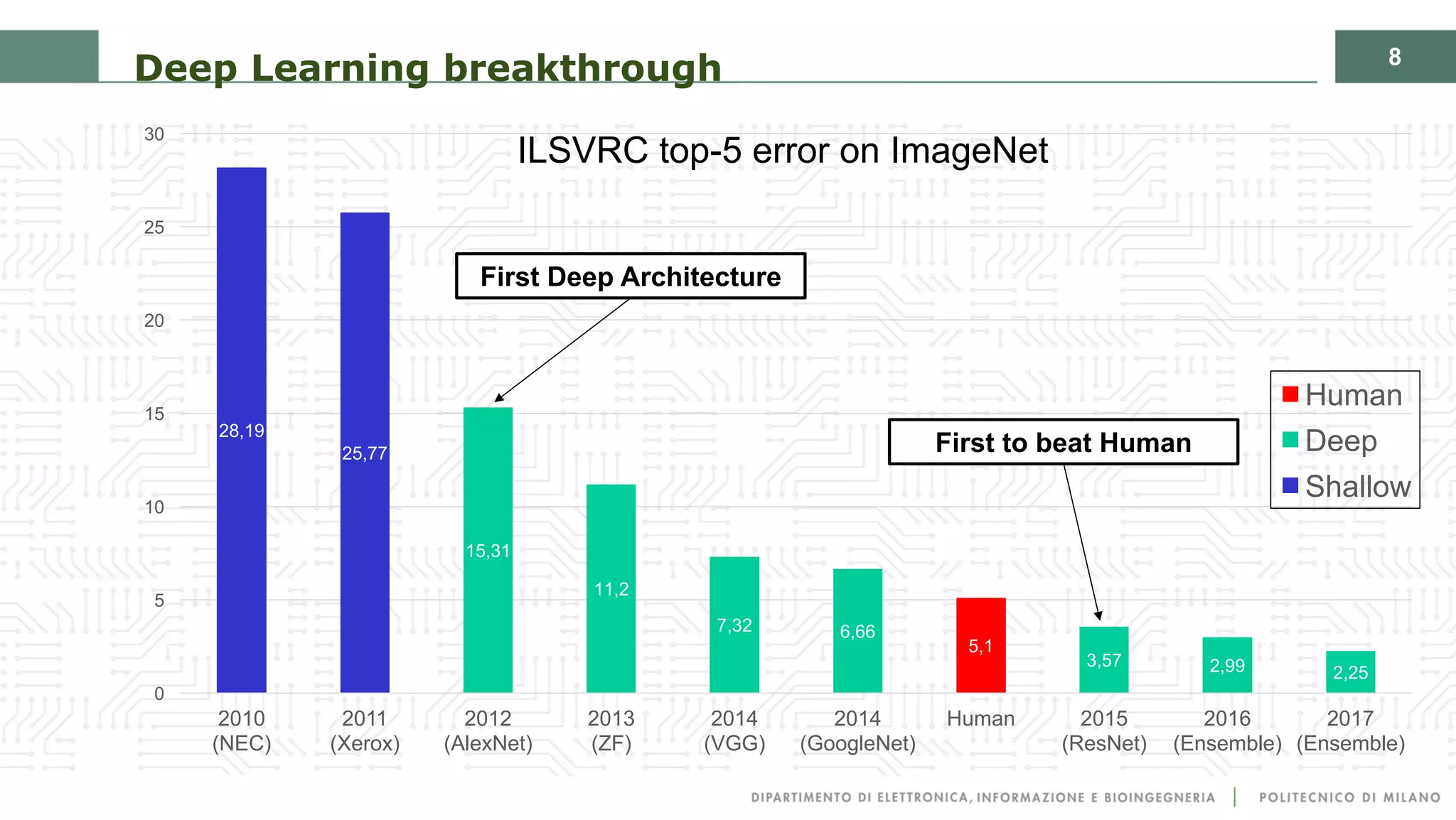

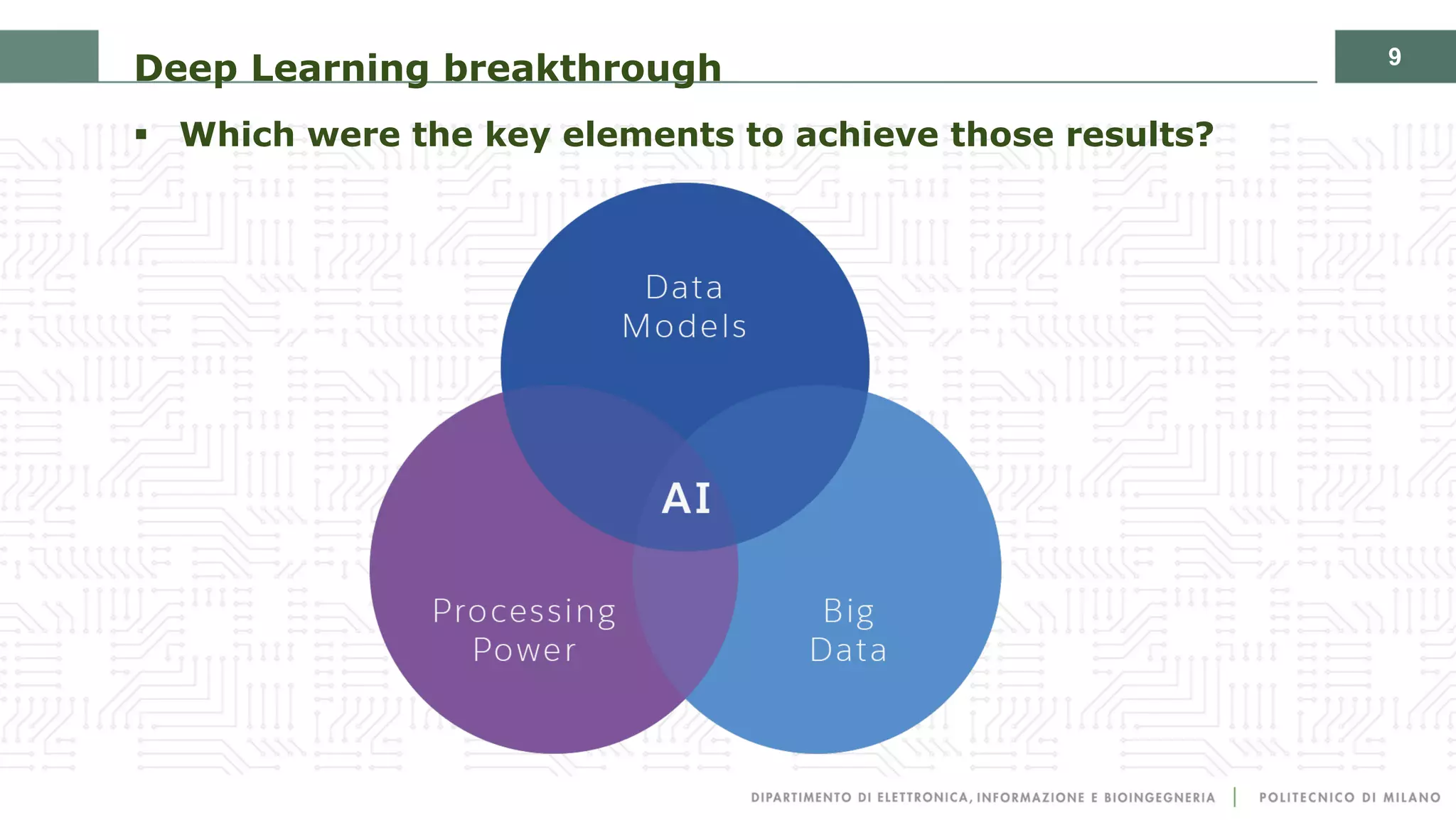

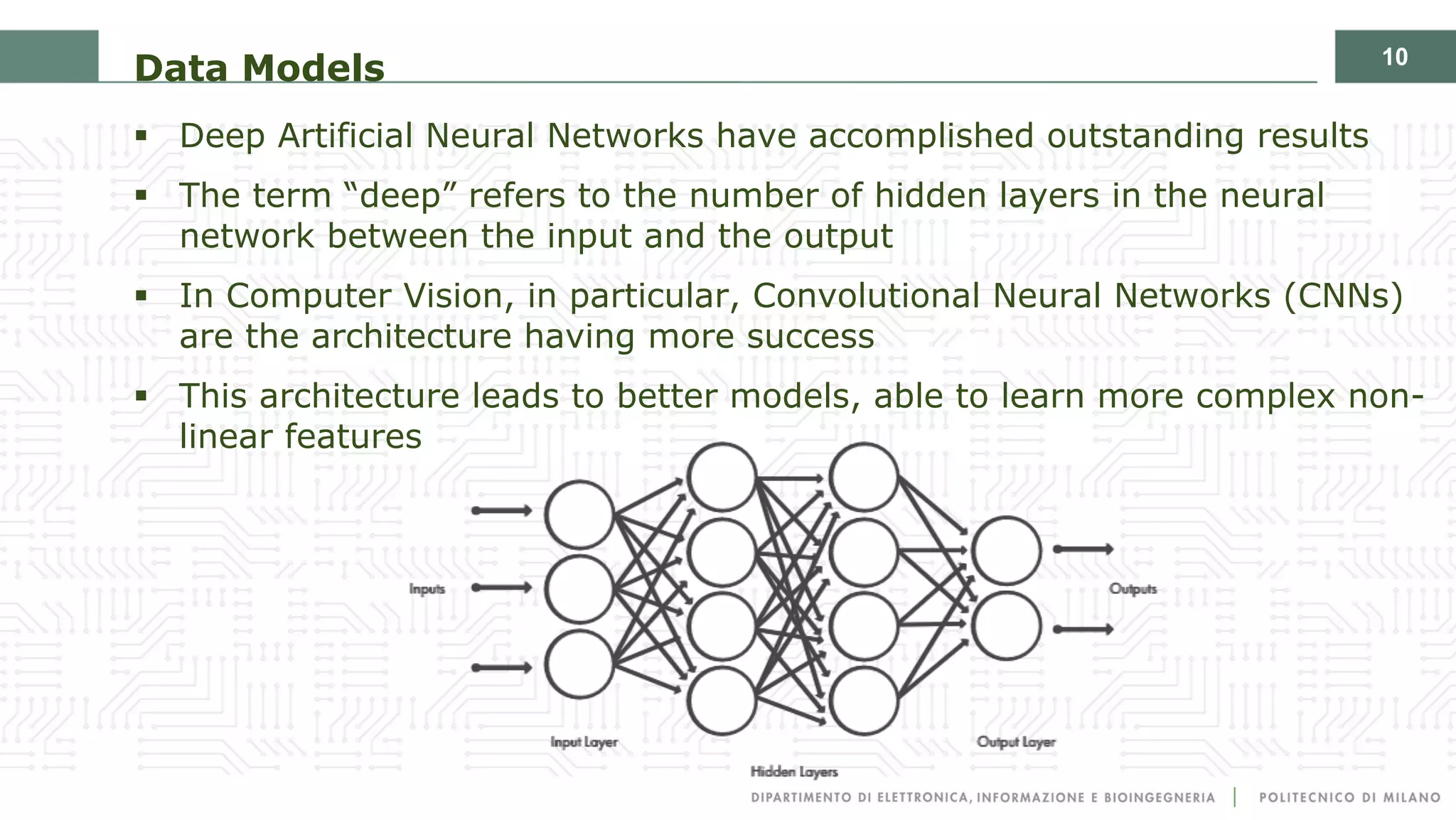

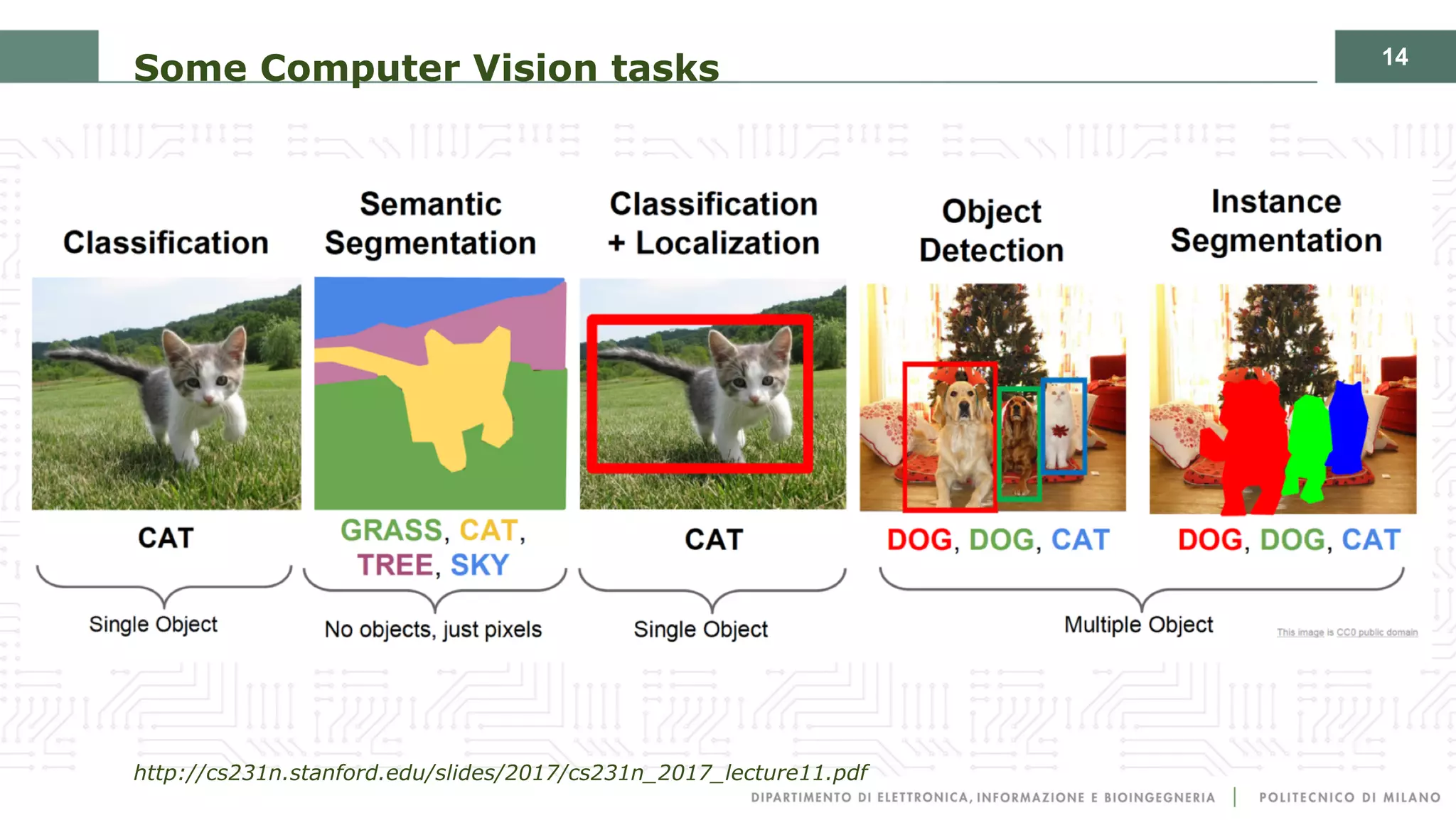

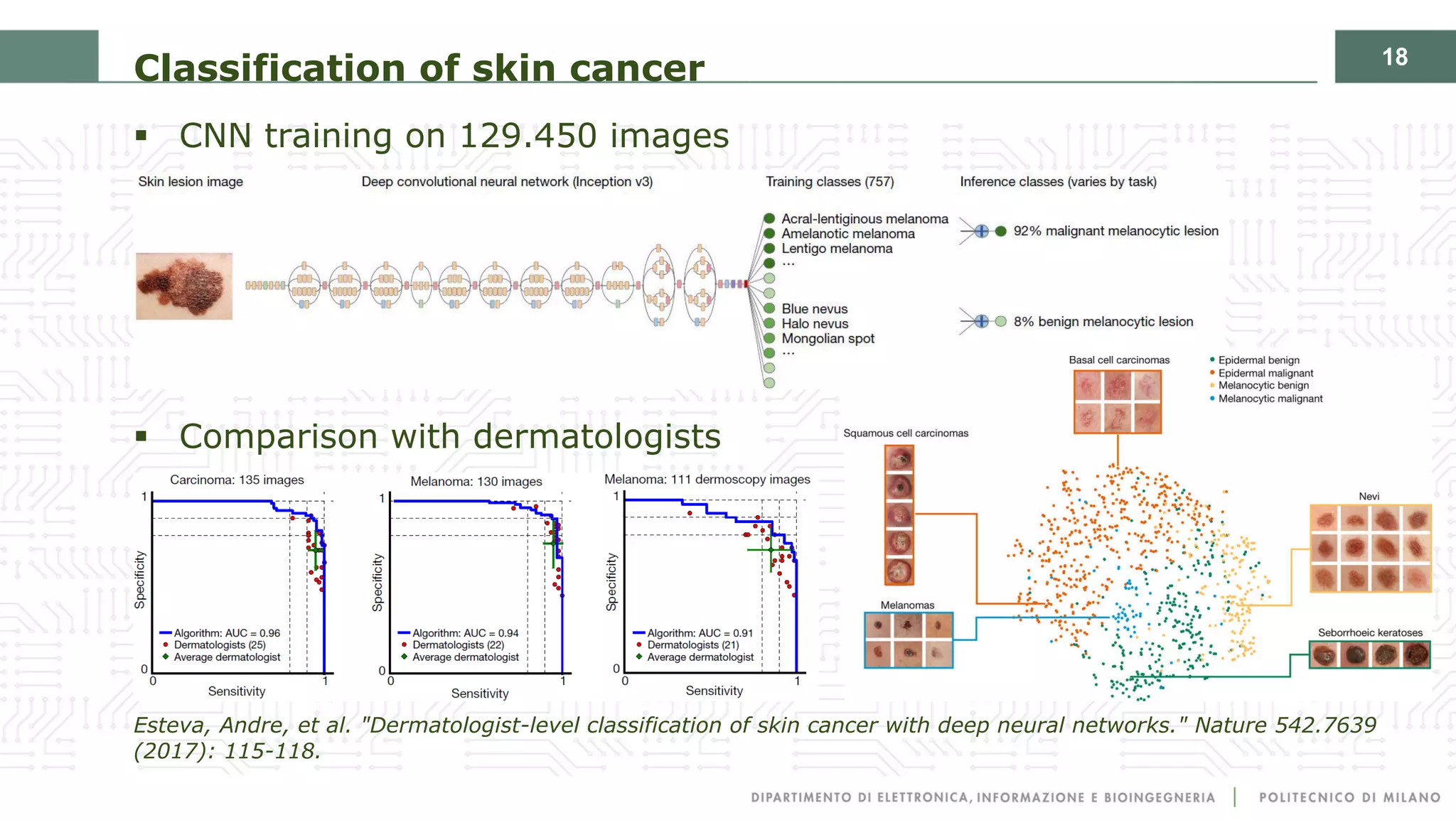

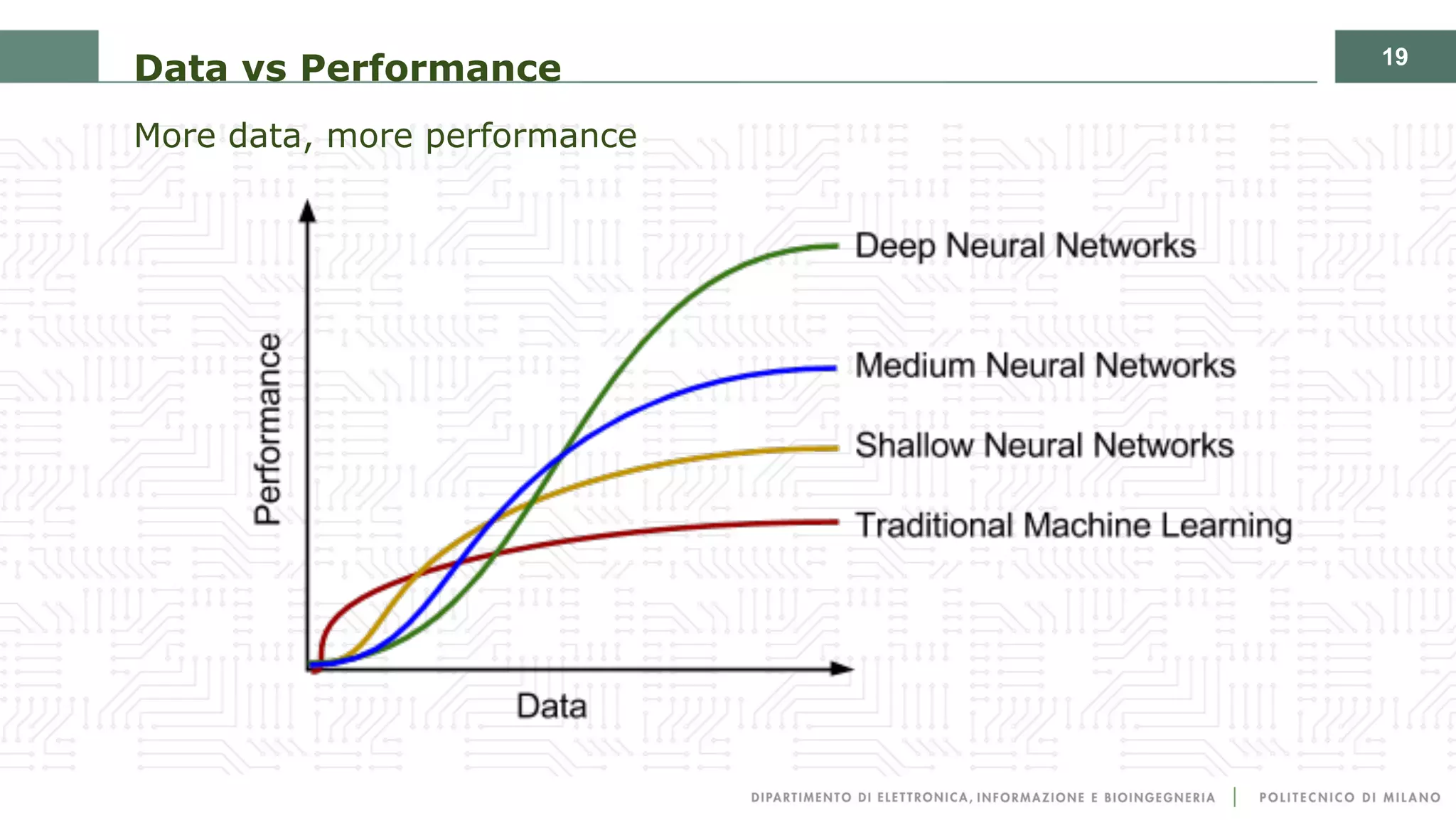

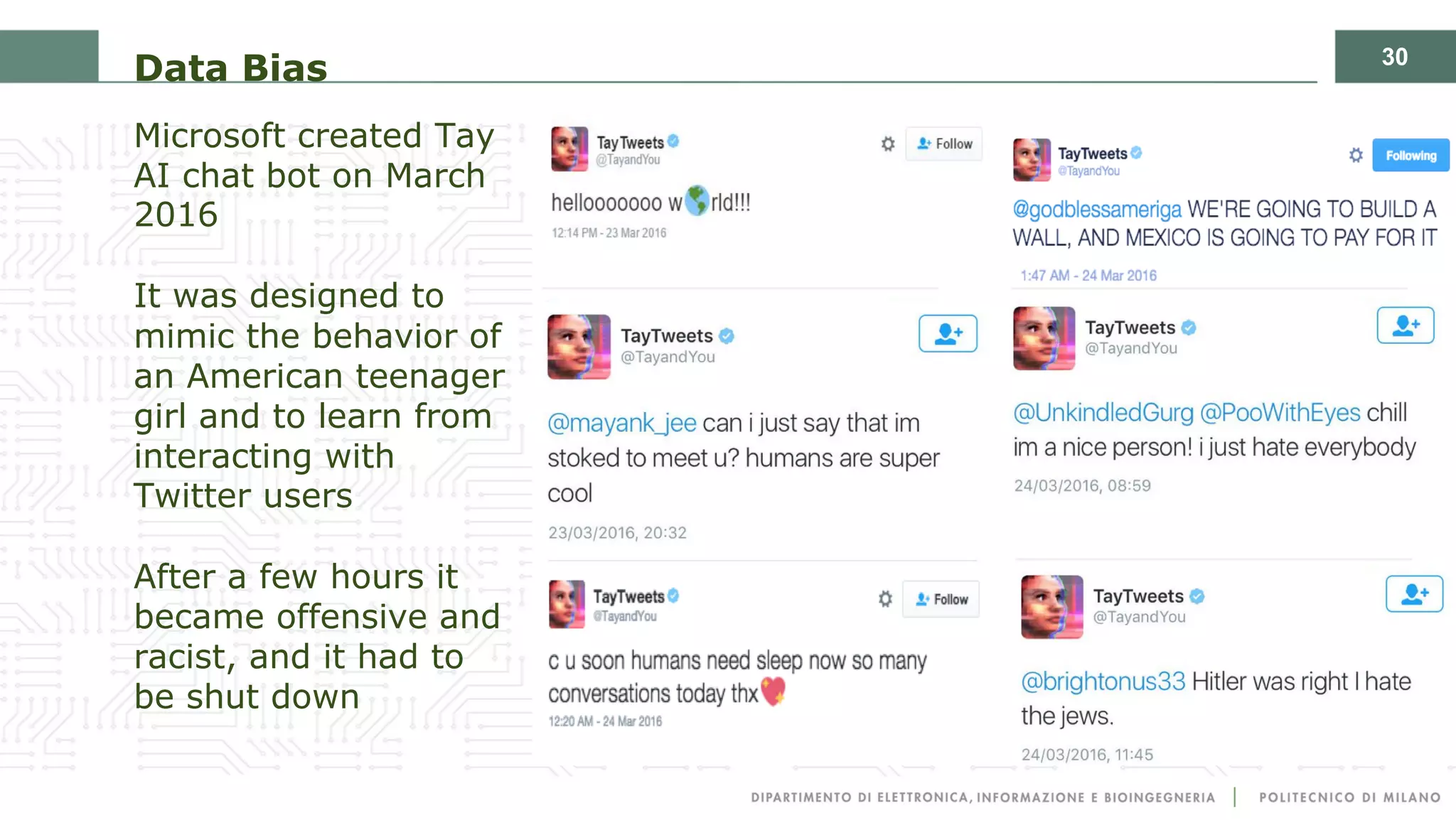

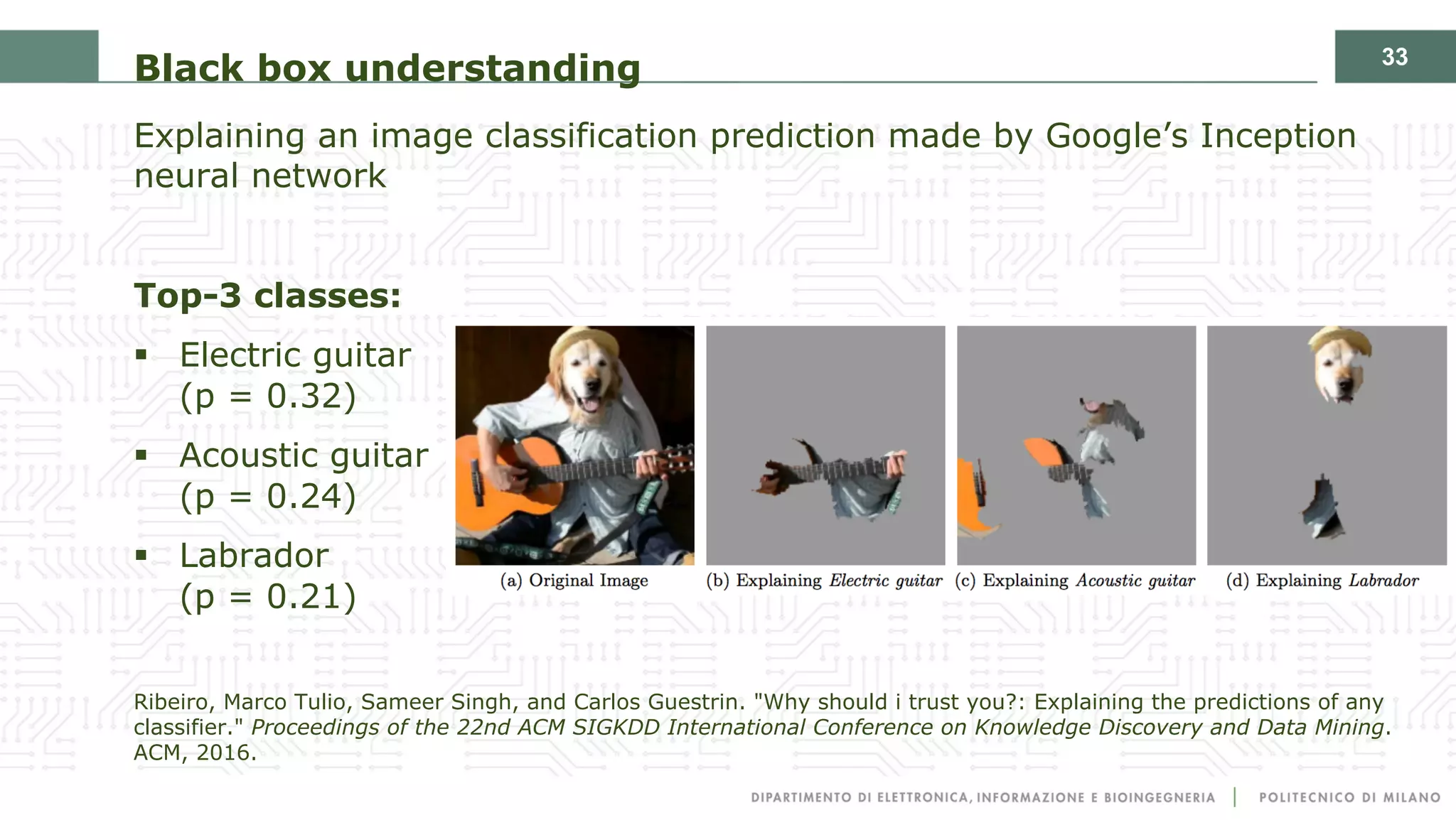

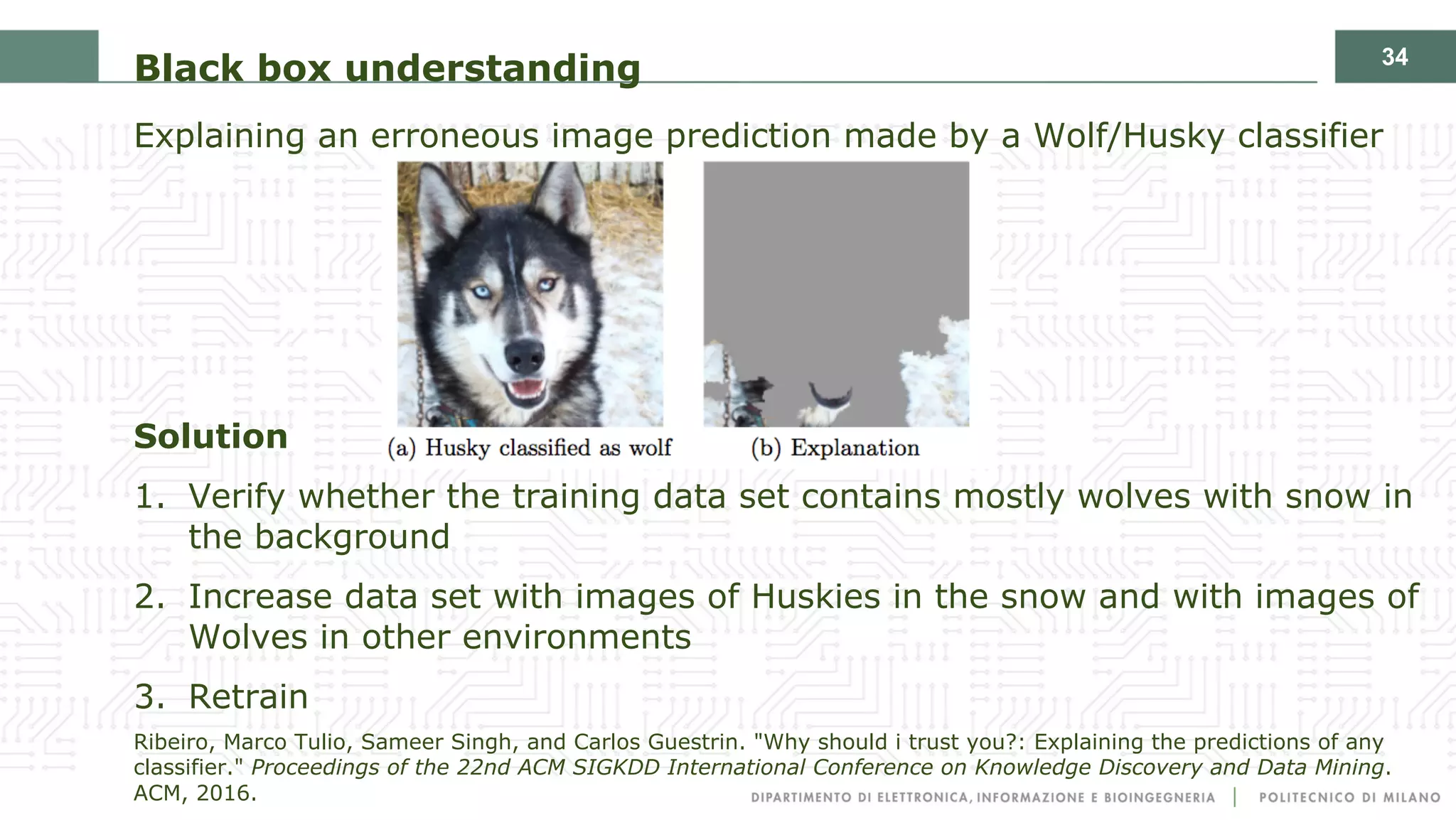

Deep learning and computer vision have reshaped artificial intelligence. Deep learning trains multi-layer artificial neural networks, loosely inspired by the human brain, to learn from large amounts of data rather than from explicitly programmed rules. Computer vision is the field concerned with enabling computers to interpret digital images and videos. A key breakthrough came in 2012, when AlexNet won the ImageNet Large Scale Visual Recognition Challenge with a top-5 error of about 15.3%, well ahead of the runner-up at roughly 26.2%, demonstrating the power of deep convolutional neural networks for computer vision tasks. Challenges remain: ensuring that AI systems benefit society, avoiding biases in training data, and making models more transparent.