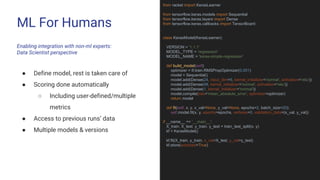

This presentation covers building machine learning products with Flask and TensorFlow Serving, focusing on the challenges of deployment, iteration, and model management. It introduces Racket, a minimalistic framework for model deployment that automates model versioning and exposes a rich RESTful interface, making integration easier for people who are not ML experts. The key takeaways are to reduce friction between research and development and to give data scientists the right tools, which benefits the entire organization.

![Takeaways](https://image.slidesharecdn.com/restfulmachinelearningwithflaskandtensorflowserving-190104023422/85/RESTful-Machine-Learning-with-Flask-and-TensorFlow-Serving-Carlo-Mazzaferro-7-320.jpg)

Takeaways

- To make great products
  - Do machine learning like the great engineer you are, not like the great machine learning expert you aren’t. [Google’s best practices for ML]
  - Provide DS with the right tooling, and the entire organization will benefit