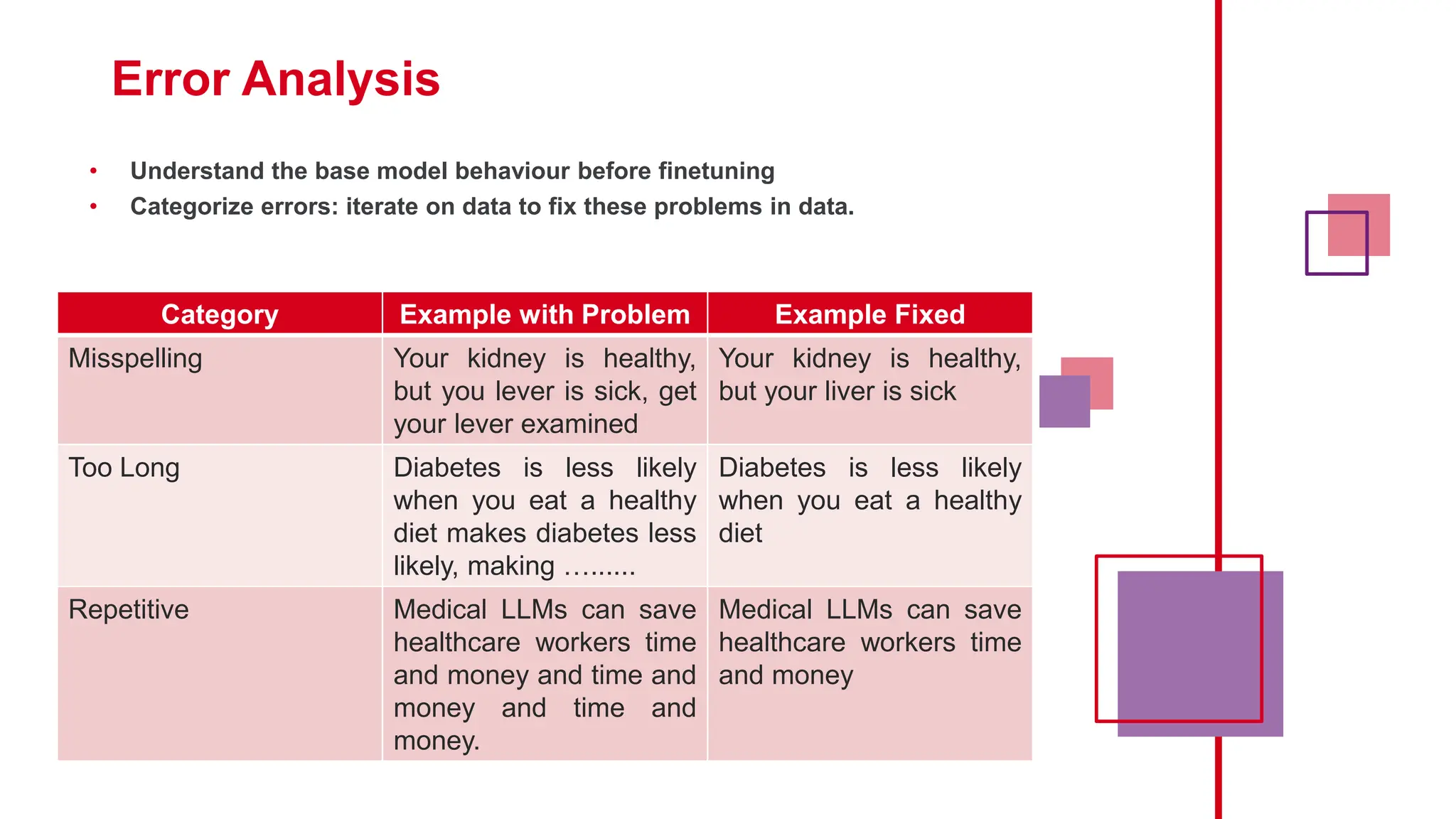

The document details the process of fine-tuning a large language model (LLM), including the differences between pre-trained and fine-tuned models, and highlights the importance of data preparation and error analysis. It discusses techniques such as instruction fine-tuning and parameter-efficient fine-tuning (PEFT) to optimize model performance while minimizing computational costs. Key steps involved include defining tasks, collecting data, and evaluating the model's behavior to ensure successful adaptation to specific use cases.

![Tokenization

This is an input text.

[CLS] This is an input text . [SEP]

101 2023 2003 1037 7953 2058 1012 102

ENCODING](https://image.slidesharecdn.com/howtofine-tuneanddevelopyourownlargelanguagemodel-240209084606-a04703b5/75/How-to-fine-tune-and-develop-your-own-large-language-model-pptx-23-2048.jpg)