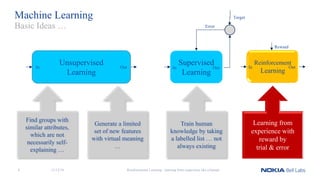

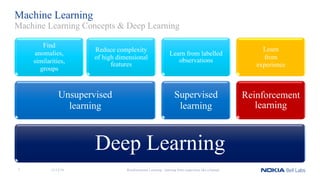

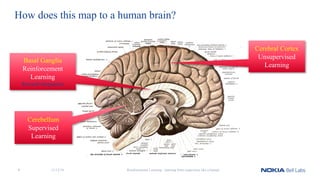

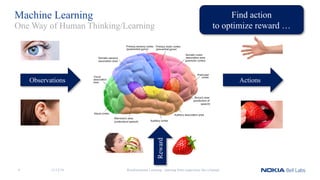

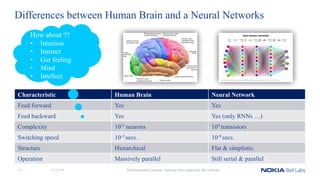

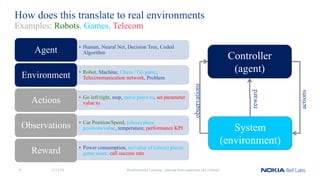

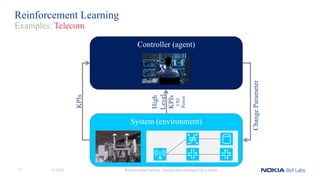

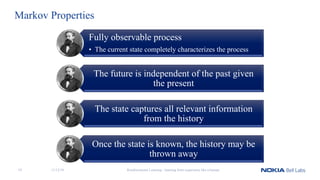

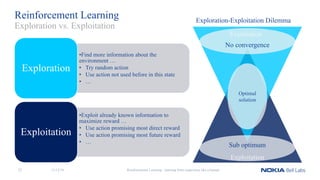

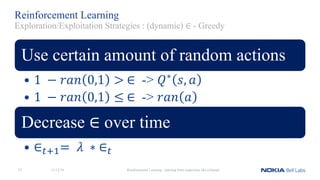

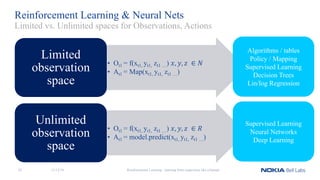

The document explores the concepts of reinforcement learning, comparing it to human learning through experience and trial-and-error methods. It discusses various types of machine learning, including supervised and unsupervised learning, as well as applications of reinforcement learning in robotics, optimization, and decision-making processes. Key components, agent-environment interactions, and various strategies for exploration and exploitation are also outlined, emphasizing the importance of feedback and iterative learning.
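The summary mentions strategies for balancing exploration and exploitation. ε-greedy is one widely used strategy, though not necessarily the one the slides use. The sketch below assumes action-value estimates are already available as a list; `epsilon_greedy` is a hypothetical helper name.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index: explore with probability epsilon, otherwise exploit."""
    if rng.random() < epsilon:
        # Explore: try any action uniformly at random
        return rng.randrange(len(q_values))
    # Exploit: take the action with the highest current estimate (first one on ties)
    return max(range(len(q_values)), key=lambda a: q_values[a])

if __name__ == "__main__":
    rng = random.Random(0)
    estimates = [0.1, 0.5, 0.2]  # hypothetical value estimates for three actions
    picks = [epsilon_greedy(estimates, 0.1, rng) for _ in range(1000)]
    print("share of greedy action 1:", picks.count(1) / len(picks))
```

With ε = 0.1, the greedy action is chosen about 93% of the time in expectation (90% exploitation plus its share of the random picks). Decaying ε as the estimates improve is a common refinement.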

![Reinforcement Learning

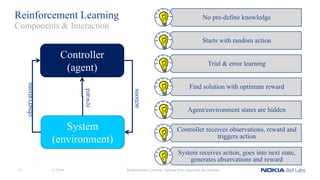

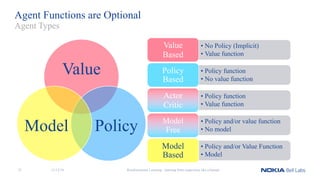

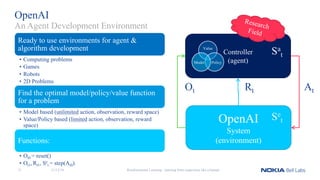

Observability

• Full: Sa

t = Se

t

• Partial: Sa

t ≠ Se

t

Agent functions

• Policy (predicted action based on state)

• a = 𝜋(𝑠)

• Value (pred. of future reward)

• vπ(s, a) = 𝐸 𝜋[𝑅𝑡 + 1,

𝑅 𝑡 + 2 …

]

• Model

• Build transition model of the system

Reinforcement Learning - learning from experience like a human

Formalisms

Controller

(agent)

System

(environment)

Ot Rt At

Sa

t

Se

t

Value

PolicyModel

11/12/1820](https://image.slidesharecdn.com/reinforcementlearning-learningfromexperiencelikeahuman-181129091017/85/Reinforcement-Learning-Learning-from-Experience-like-a-Human-20-320.jpg)
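The agent functions on the Formalisms slide can be made concrete with a small simulation. This sketch assumes a hypothetical five-cell corridor environment (not from the slides) in which each move slips the opposite way with probability 0.2; `env_step`, `policy`, `sampled_return`, and `estimate_value` are illustrative names. It runs the agent-environment loop (action in, reward and next state out) with full observability, follows a deterministic policy $a = \pi(s)$, and estimates $v_\pi(s, a)$ by averaging sampled undiscounted returns $R_{t+1} + R_{t+2} + \dots$ (Monte Carlo estimation).

```python
import random

# Hypothetical toy environment (not from the slides): a corridor of cells 0..4.
# Reaching cell 4 (goal) pays +1, reaching cell 0 (pit) pays 0; both end the episode.
# A move succeeds with probability 0.8; otherwise the agent slips the other way.
GOAL, PIT = 4, 0

def env_step(state, action, rng):
    """Environment side of the loop: action A_t in, (R_{t+1}, S_{t+1}, done) out."""
    move = action if rng.random() < 0.8 else -action
    next_state = state + move
    reward = 1.0 if next_state == GOAL else 0.0
    return reward, next_state, next_state in (GOAL, PIT)

def policy(state):
    """Deterministic policy a = pi(s): always step right (+1)."""
    return +1

def sampled_return(state, action, rng, max_steps=100):
    """One sample of R_{t+1} + R_{t+2} + ...: take `action` first, then follow pi."""
    total = 0.0
    for _ in range(max_steps):
        reward, state, done = env_step(state, action, rng)
        total += reward
        if done:
            break
        # Full observability: the agent acts on the true environment state
        action = policy(state)
    return total

def estimate_value(state, action, episodes=10_000, seed=0):
    """Monte Carlo estimate of v_pi(s, a): average the sampled returns."""
    rng = random.Random(seed)
    return sum(sampled_return(state, action, rng) for _ in range(episodes)) / episodes

if __name__ == "__main__":
    for a in (+1, -1):
        print(f"v_pi(3, {a:+d}) ~ {estimate_value(3, a):.3f}")
```

For this toy corridor the exact values are about 0.988 for stepping right from cell 3 and about 0.953 for stepping left, so the Monte Carlo estimates should land close to those and rank "right" higher.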
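The Model component ("build transition model of the system") can be sketched as a tabular estimate learned from experience: count how often each state-action pair $(s, a)$ led to each next state $s'$, and average the rewards observed. `TabularModel` is a hypothetical helper, and the transitions in the usage block are illustrative inputs, not data from the slides.

```python
from collections import Counter, defaultdict

class TabularModel:
    """Hypothetical helper: estimate P(s' | s, a) and mean reward R(s, a) by counting."""

    def __init__(self):
        self.next_counts = defaultdict(Counter)  # (s, a) -> Counter of next states s'
        self.reward_sums = defaultdict(float)    # (s, a) -> sum of observed rewards

    def update(self, s, a, r, s_next):
        """Record one observed transition (s, a, r, s')."""
        self.next_counts[(s, a)][s_next] += 1
        self.reward_sums[(s, a)] += r

    def visits(self, s, a):
        """How many times (s, a) has been observed."""
        return sum(self.next_counts[(s, a)].values())

    def transition_probs(self, s, a):
        """Empirical distribution over next states; empty if (s, a) was never tried."""
        n = self.visits(s, a)
        if not n:
            return {}
        return {s_next: c / n for s_next, c in self.next_counts[(s, a)].items()}

    def expected_reward(self, s, a):
        """Average observed reward for (s, a); 0.0 if never tried."""
        n = self.visits(s, a)
        return self.reward_sums[(s, a)] / n if n else 0.0

if __name__ == "__main__":
    model = TabularModel()
    # Illustrative transitions: from state 3, action +1 led to state 4 three times
    # (reward 1) and to state 2 once (reward 0).
    for s, a, r, s_next in [(3, +1, 1.0, 4), (3, +1, 1.0, 4), (3, +1, 0.0, 2), (3, +1, 1.0, 4)]:
        model.update(s, a, r, s_next)
    print(model.transition_probs(3, +1))  # {4: 0.75, 2: 0.25}
    print(model.expected_reward(3, +1))   # 0.75
```

With such a model the agent can simulate steps without touching the real system; the estimates are only as reliable as the number of visits behind each $(s, a)$.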

Example: CartPole (balance an inverted pendulum, simplified to one dimension)

State

• Cart position: [-2.4, 2.4]

• Cart velocity: [-inf, inf]

• Pole angle: [-24°, 24°]

• Pole velocity at tip: [-inf, inf]

Actions (each push changes the cart's direction and velocity)

• Push cart to the left

• Push cart to the right

Termination

• Cart position reaches the boundary (failure)

• Pole angle outside [-12°, 12°] (failure)

• More than 200 steps (successful termination)

Reward

• +1 for every step that does not end the episode

With random actions, the pole returns to its stable state.

![Reinforcement Learning: Example CartPole (slide 33)](https://image.slidesharecdn.com/reinforcementlearning-learningfromexperiencelikeahuman-181129091017/85/Reinforcement-Learning-Learning-from-Experience-like-a-Human-33-320.jpg)
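The CartPole example can be reproduced with a small self-contained simulation. This sketch assumes the physical constants and Euler integration of the classic CartPole model used by OpenAI Gym (cart mass 1.0, pole mass 0.1, half pole length 0.5, push force 10, time step 0.02 s); the slide itself does not state them. It applies the slide's termination rules (cart beyond ±2.4, pole beyond ±12°, 200 steps) and reward (+1 per non-failing step), and runs a random policy. `step`, `failed`, `run_episode`, and `random_policy` are hypothetical names.

```python
import math
import random

# Constants assumed from the classic (Gym-style) CartPole model
GRAVITY = 9.8
CART_MASS = 1.0
POLE_MASS = 0.1
TOTAL_MASS = CART_MASS + POLE_MASS
HALF_POLE_LENGTH = 0.5
POLE_MASS_LENGTH = POLE_MASS * HALF_POLE_LENGTH
FORCE_MAG = 10.0
TAU = 0.02                      # seconds per step
X_LIMIT = 2.4                   # cart position boundary
ANGLE_LIMIT = math.radians(12)  # failure angle
MAX_STEPS = 200                 # success after this many steps

def step(state, action):
    """Advance (x, x_dot, theta, theta_dot) one step; action 0 = push left, 1 = push right."""
    x, x_dot, theta, theta_dot = state
    force = FORCE_MAG if action == 1 else -FORCE_MAG
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    temp = (force + POLE_MASS_LENGTH * theta_dot ** 2 * sin_t) / TOTAL_MASS
    theta_acc = (GRAVITY * sin_t - cos_t * temp) / (
        HALF_POLE_LENGTH * (4.0 / 3.0 - POLE_MASS * cos_t ** 2 / TOTAL_MASS))
    x_acc = temp - POLE_MASS_LENGTH * theta_acc * cos_t / TOTAL_MASS
    # Explicit Euler integration (positions use the old velocities)
    x, x_dot = x + TAU * x_dot, x_dot + TAU * x_acc
    theta, theta_dot = theta + TAU * theta_dot, theta_dot + TAU * theta_acc
    return (x, x_dot, theta, theta_dot)

def failed(state):
    """Failure: cart past the boundary or pole tilted beyond 12 degrees."""
    x, _, theta, _ = state
    return abs(x) > X_LIMIT or abs(theta) > ANGLE_LIMIT

def run_episode(policy, rng):
    """Return the total reward: +1 for every step that does not end in failure."""
    state = tuple(rng.uniform(-0.05, 0.05) for _ in range(4))
    total = 0
    for _ in range(MAX_STEPS):
        state = step(state, policy(state, rng))
        if failed(state):
            break
        total += 1
    return total

def random_policy(state, rng):
    """Ignore the state and push left or right with equal probability."""
    return rng.randrange(2)

if __name__ == "__main__":
    rng = random.Random(0)
    scores = [run_episode(random_policy, rng) for _ in range(100)]
    print("mean reward with random actions:", sum(scores) / len(scores))
```

In this sketch, random actions typically end in failure long before the 200-step limit; a learning agent's task is to find a policy that raises that score.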