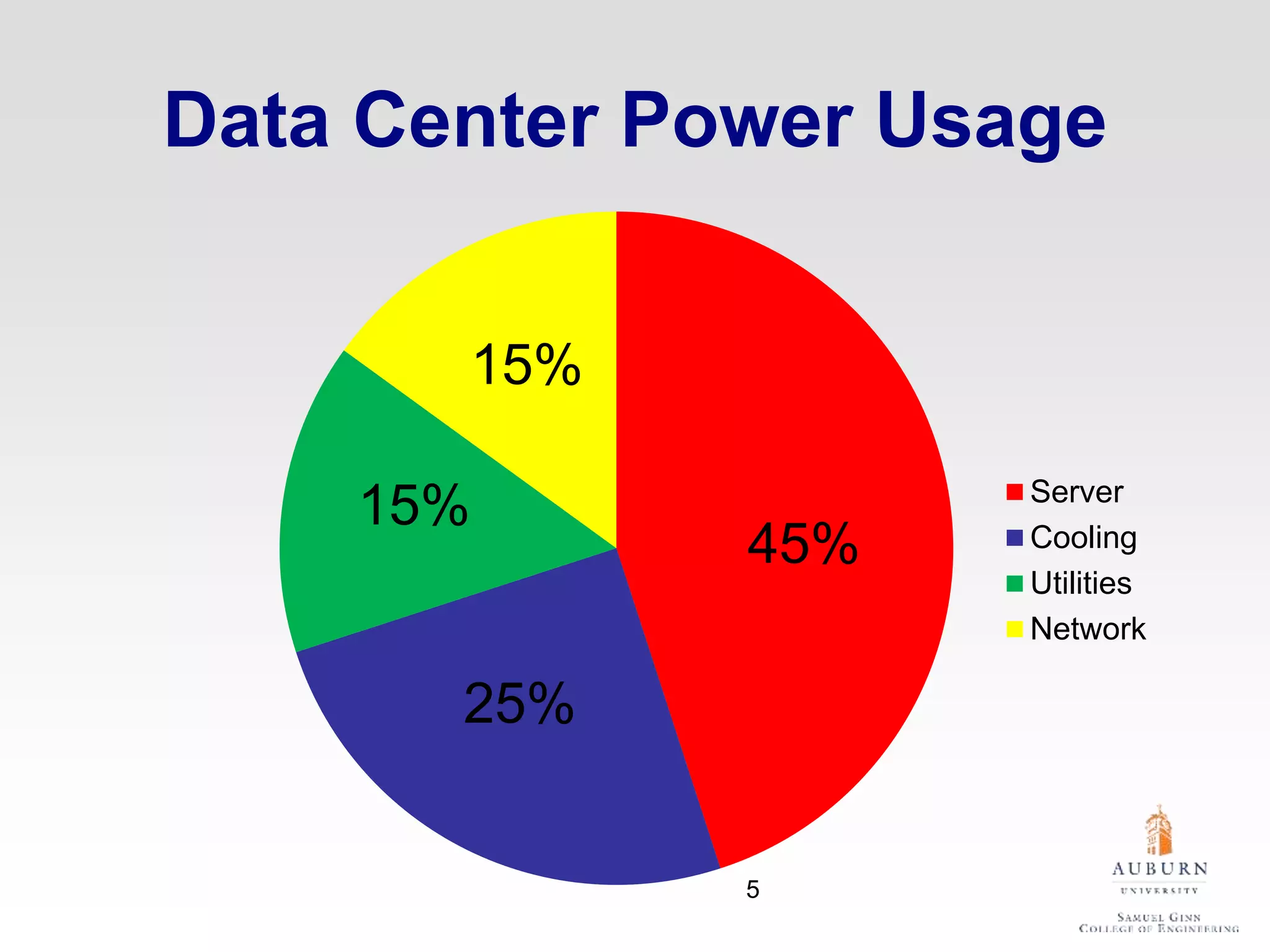

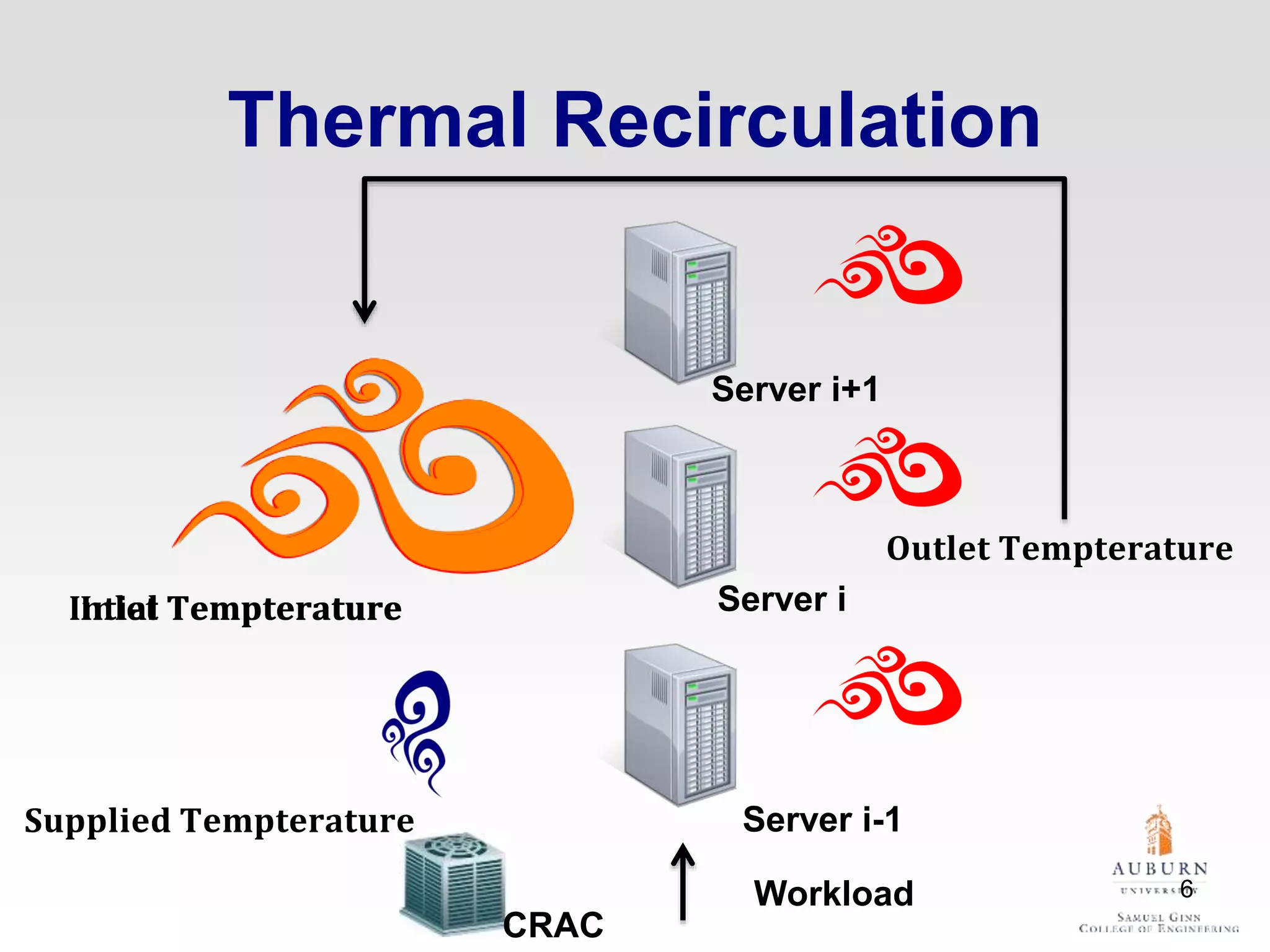

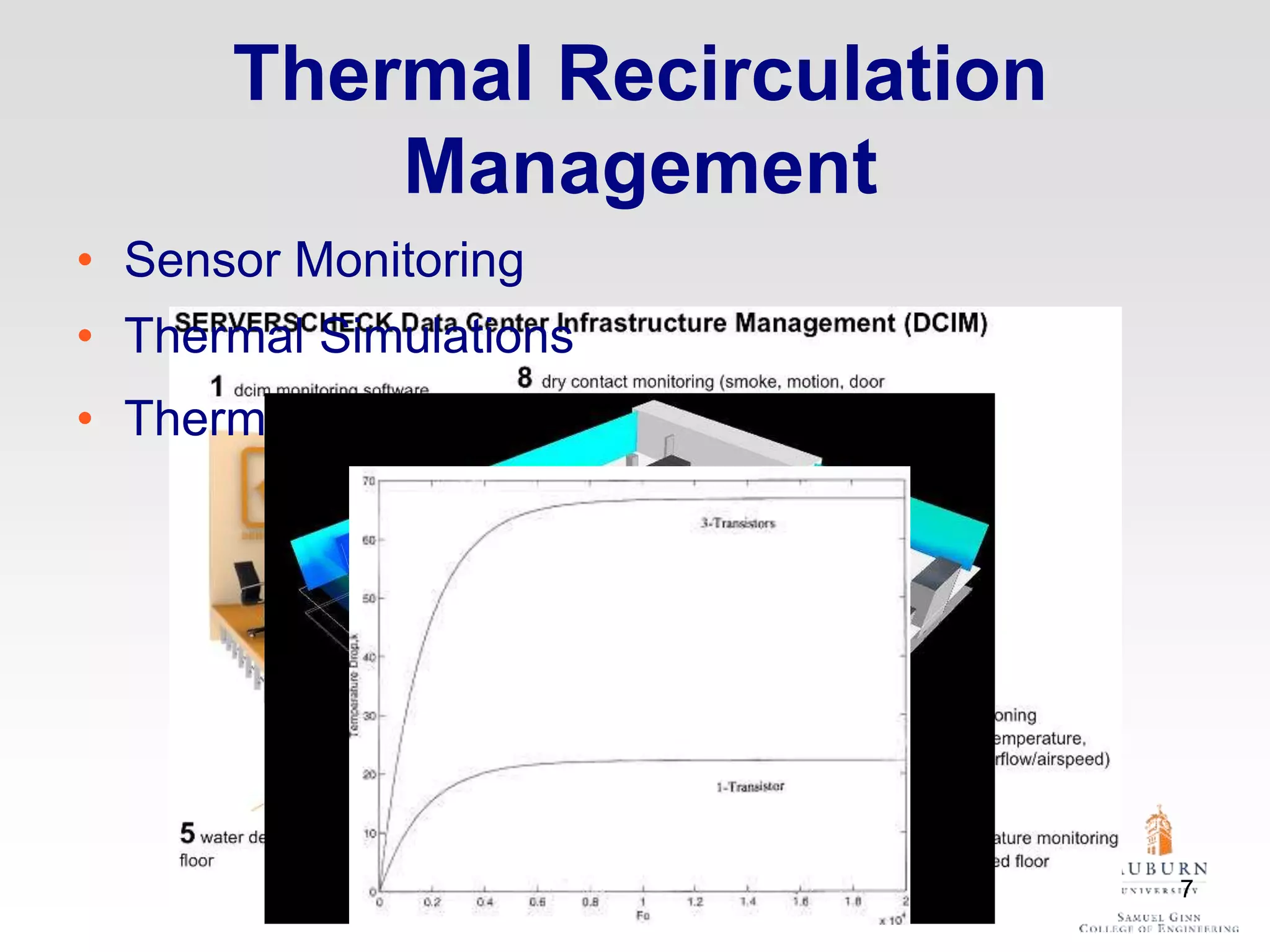

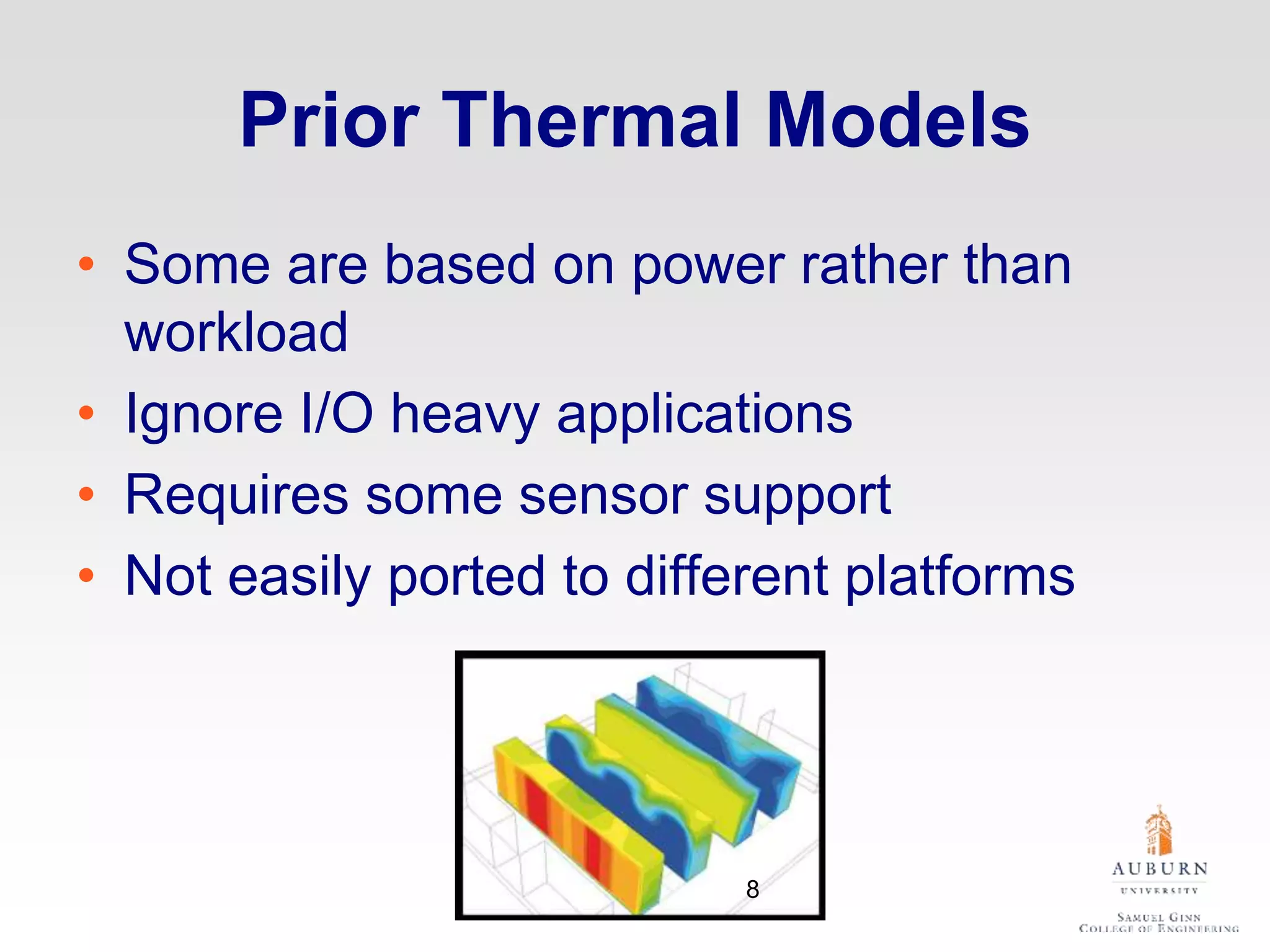

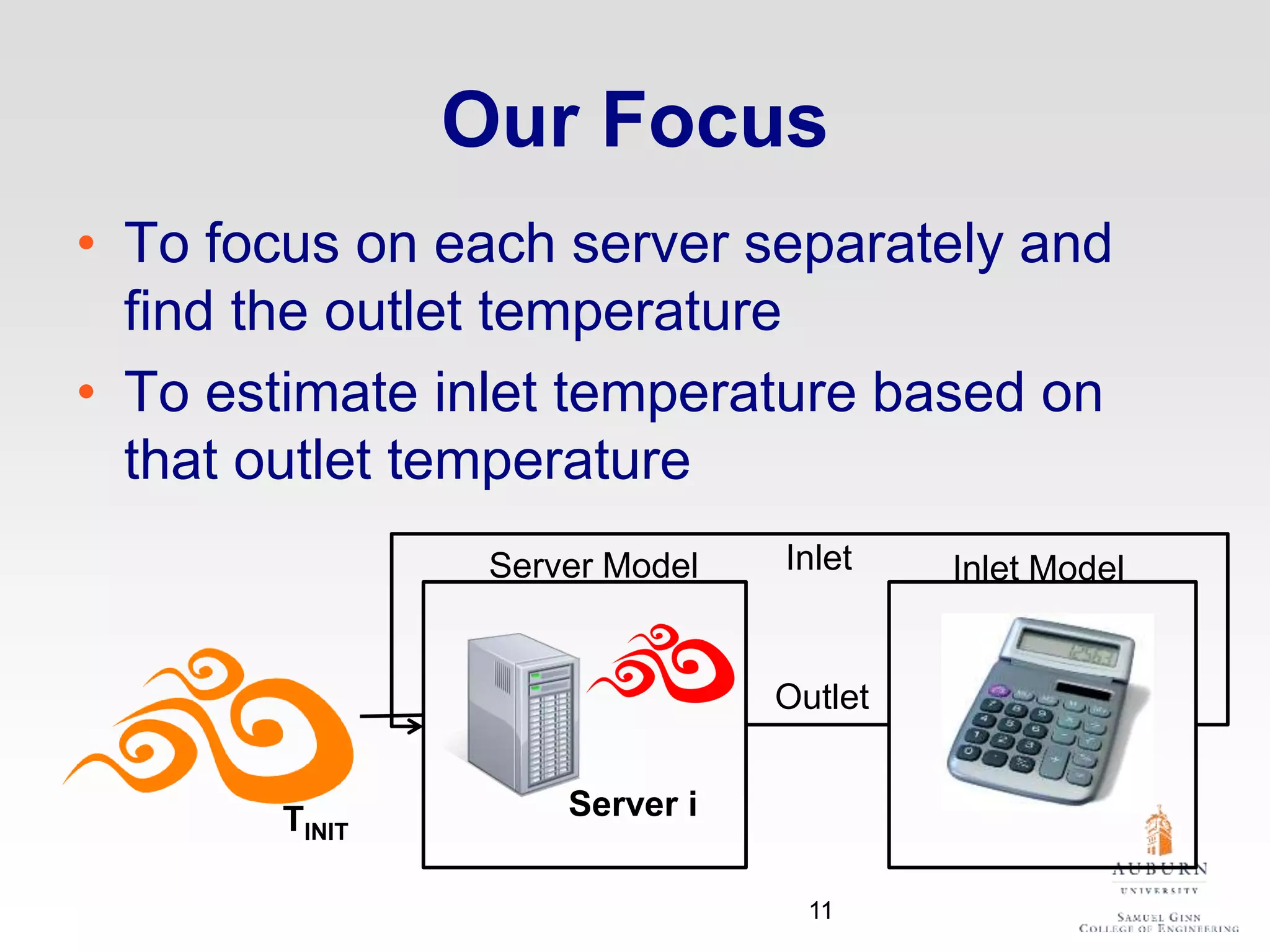

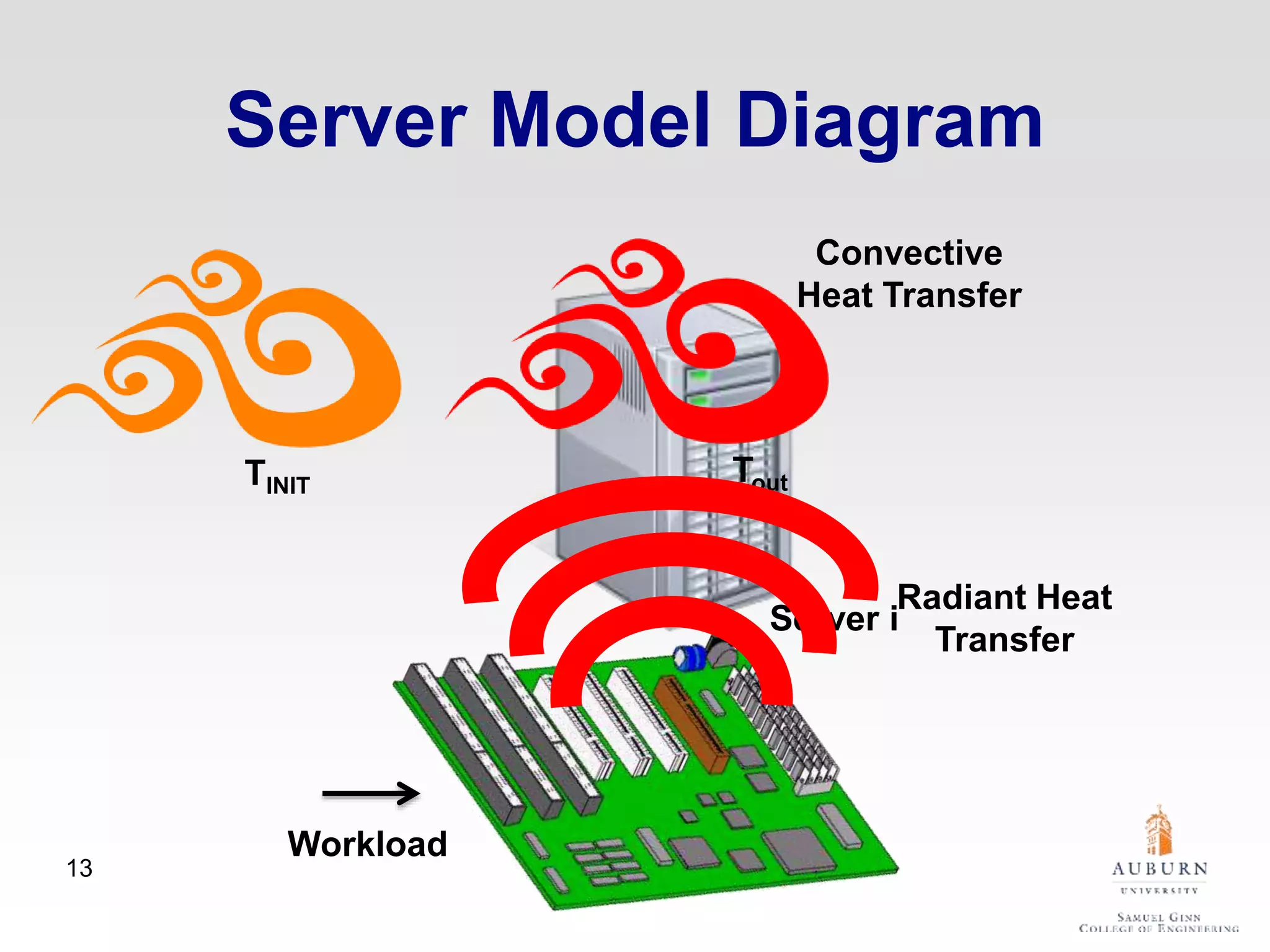

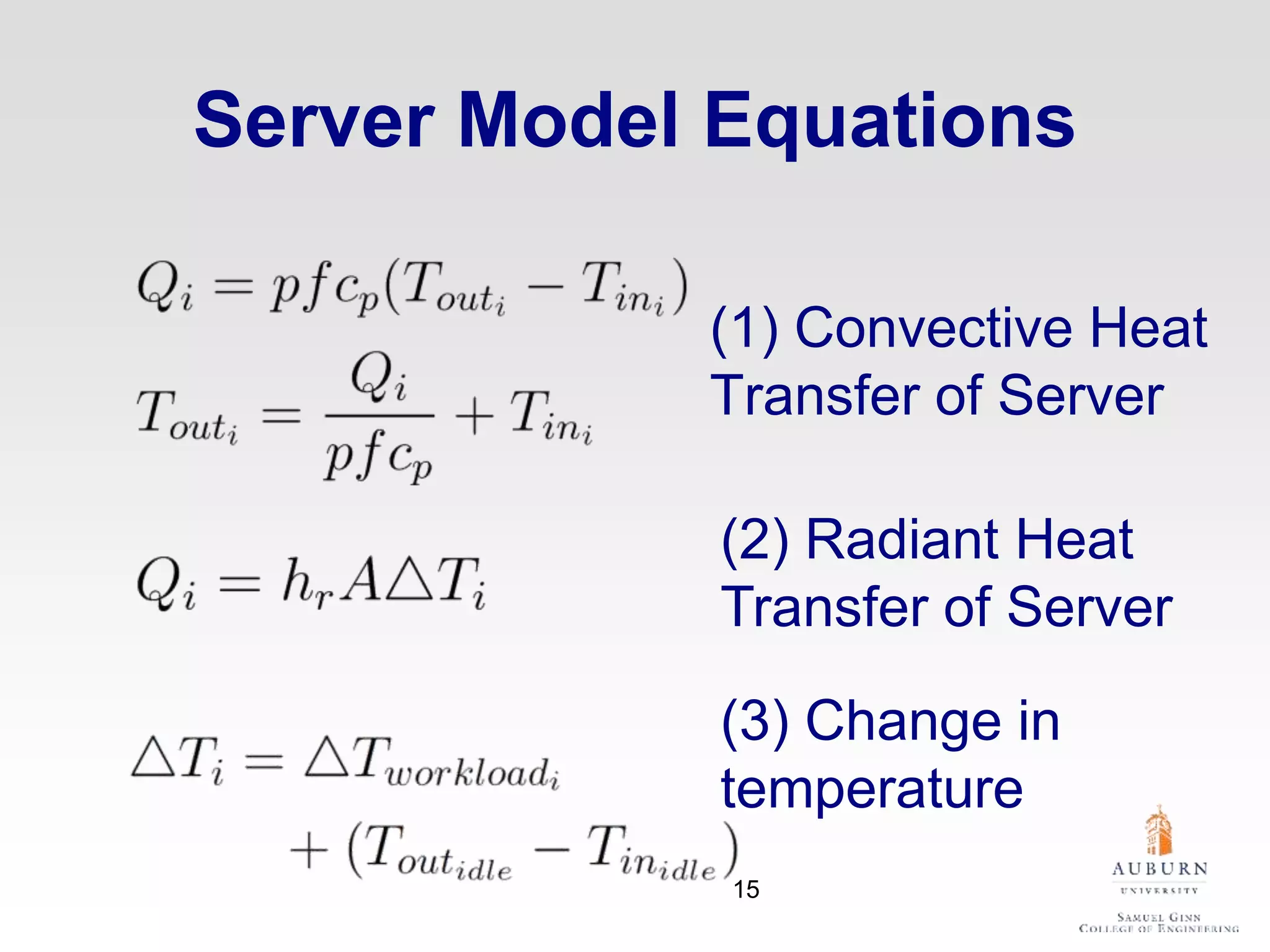

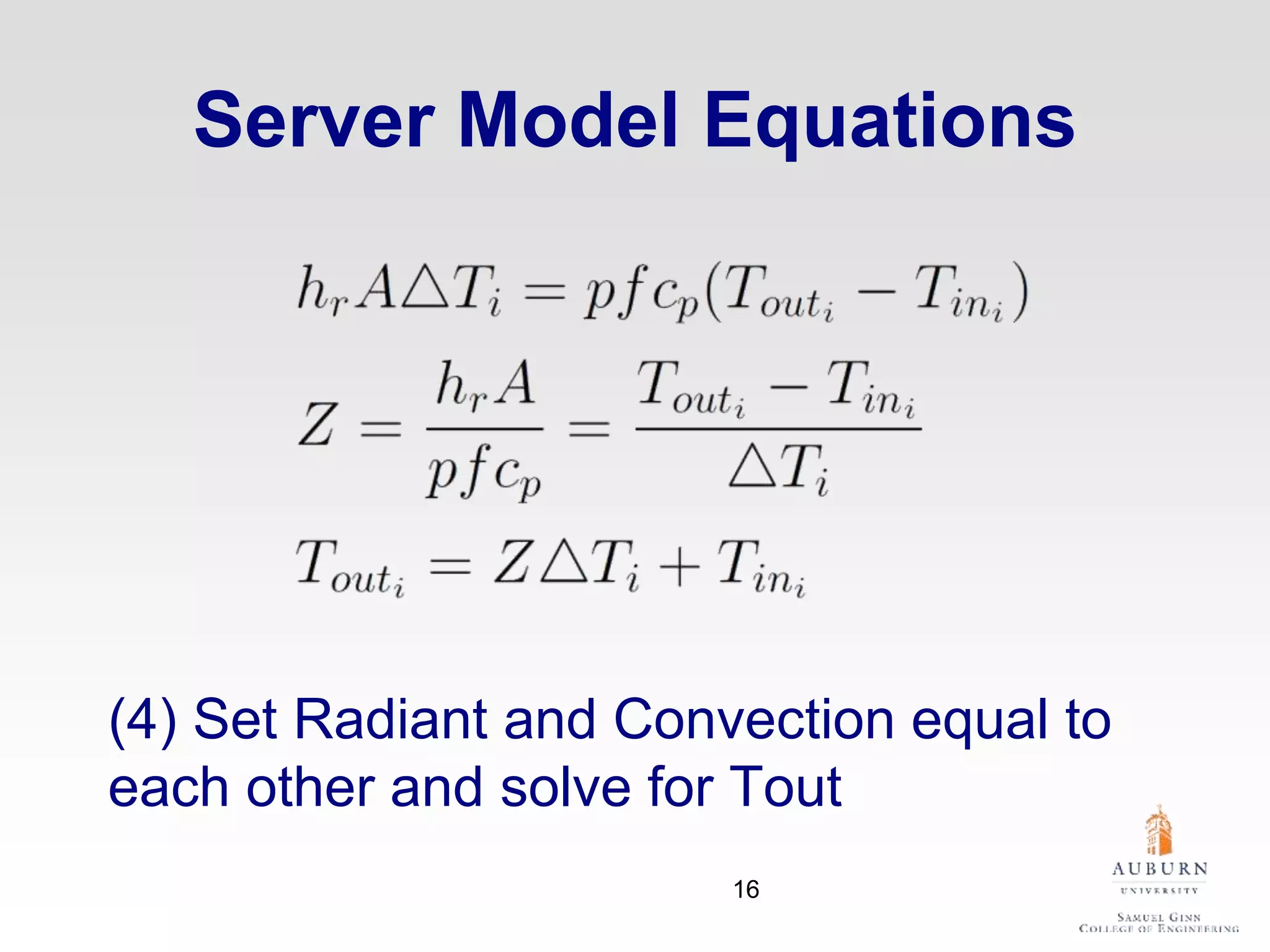

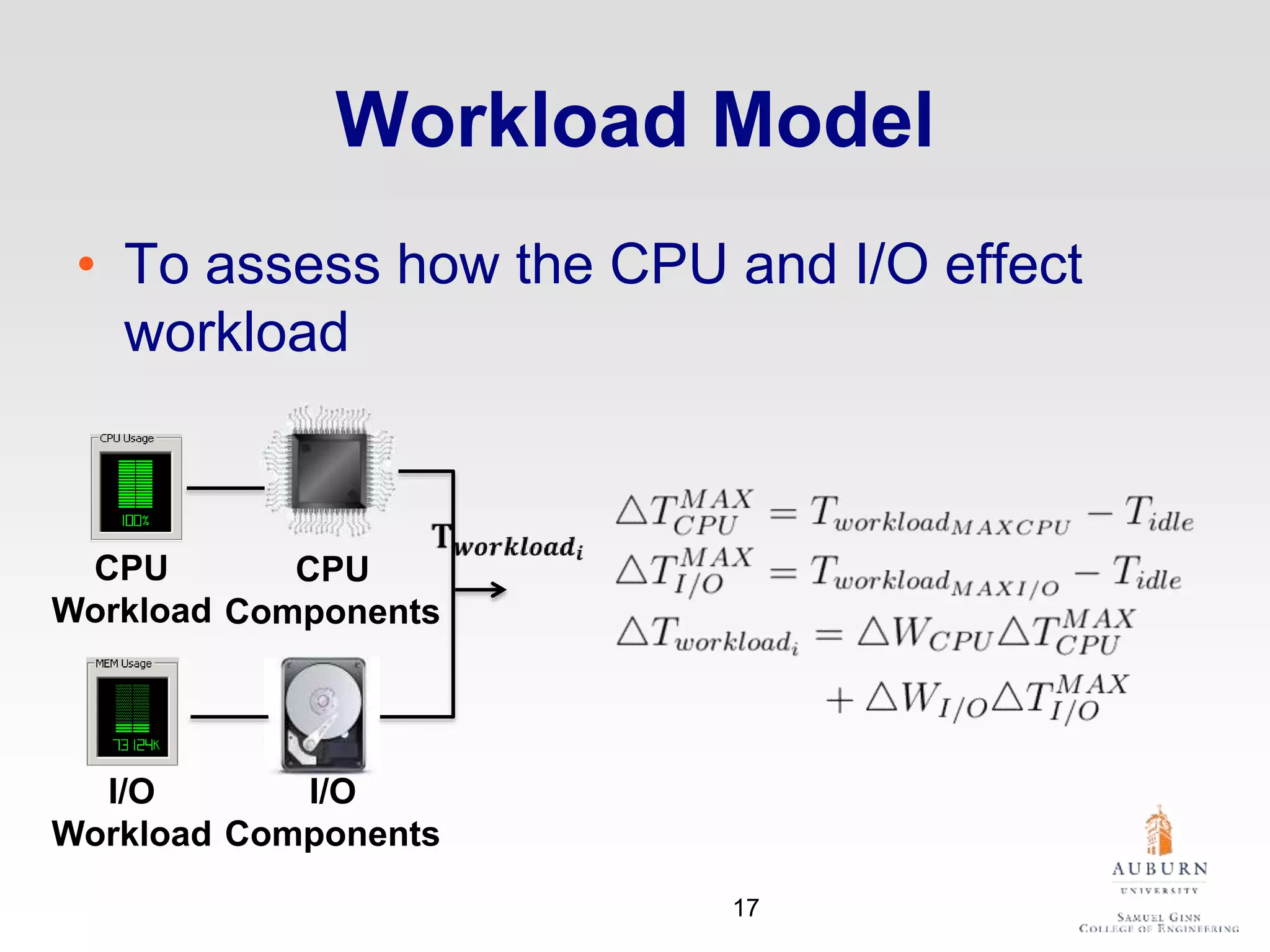

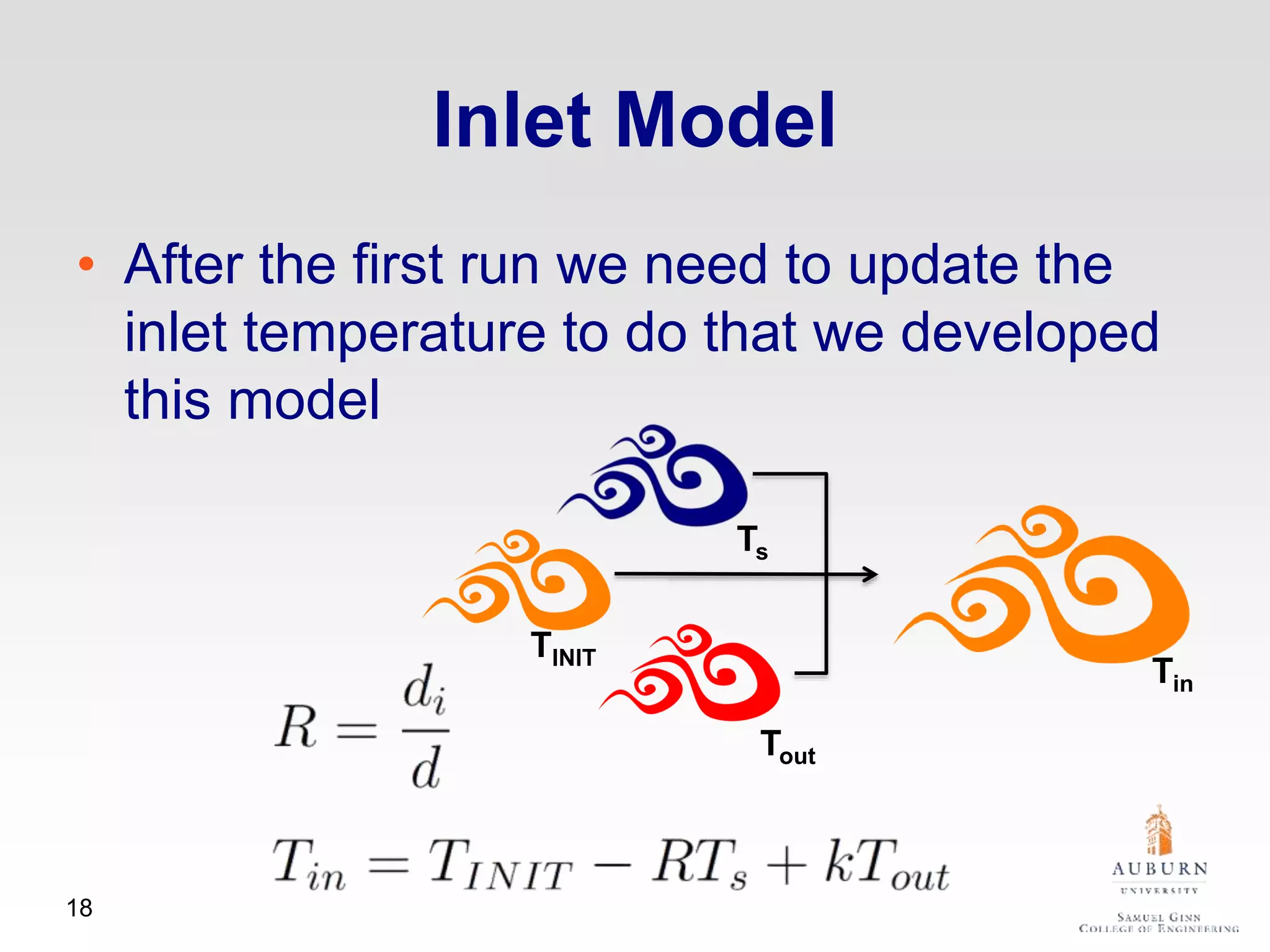

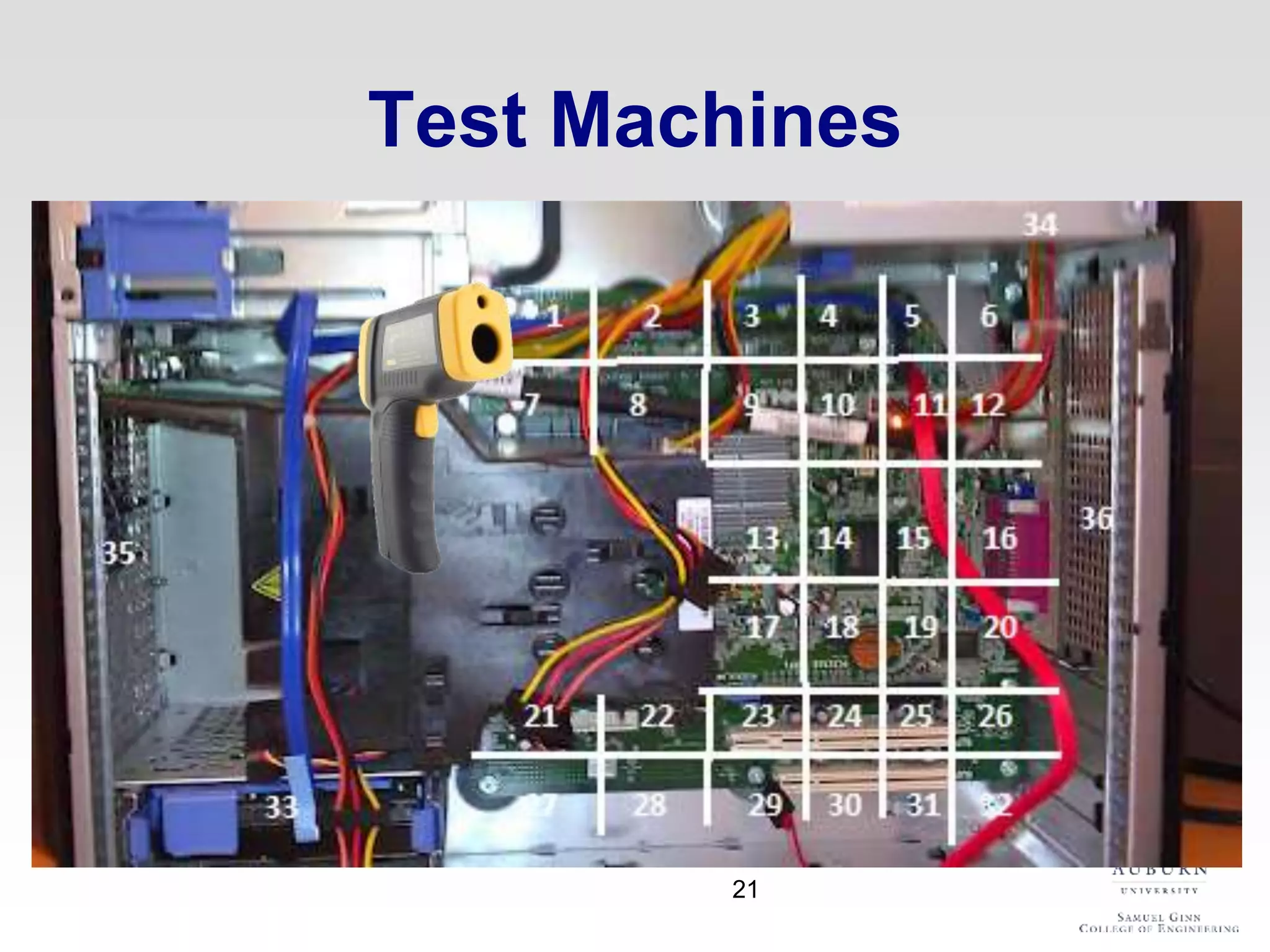

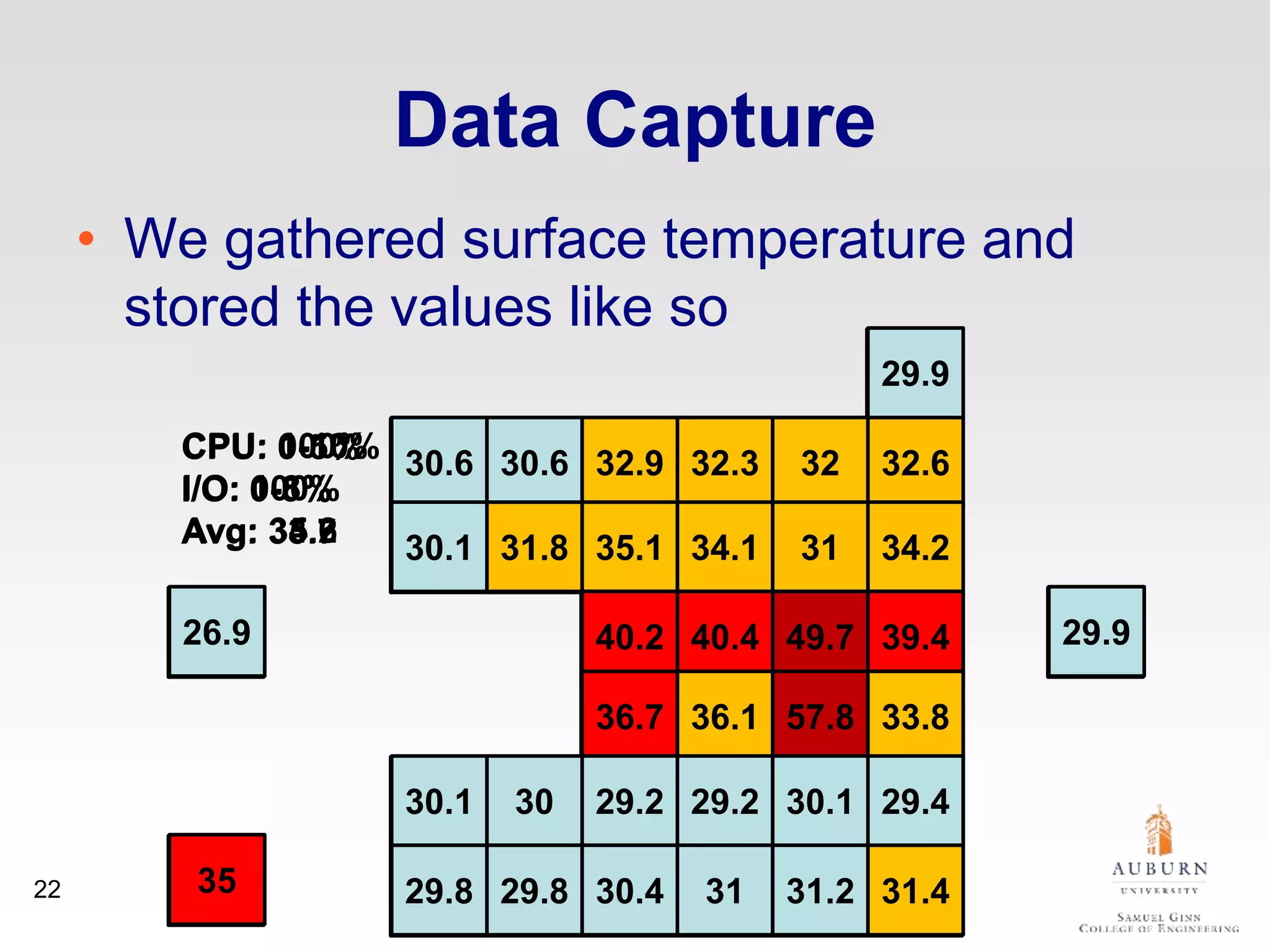

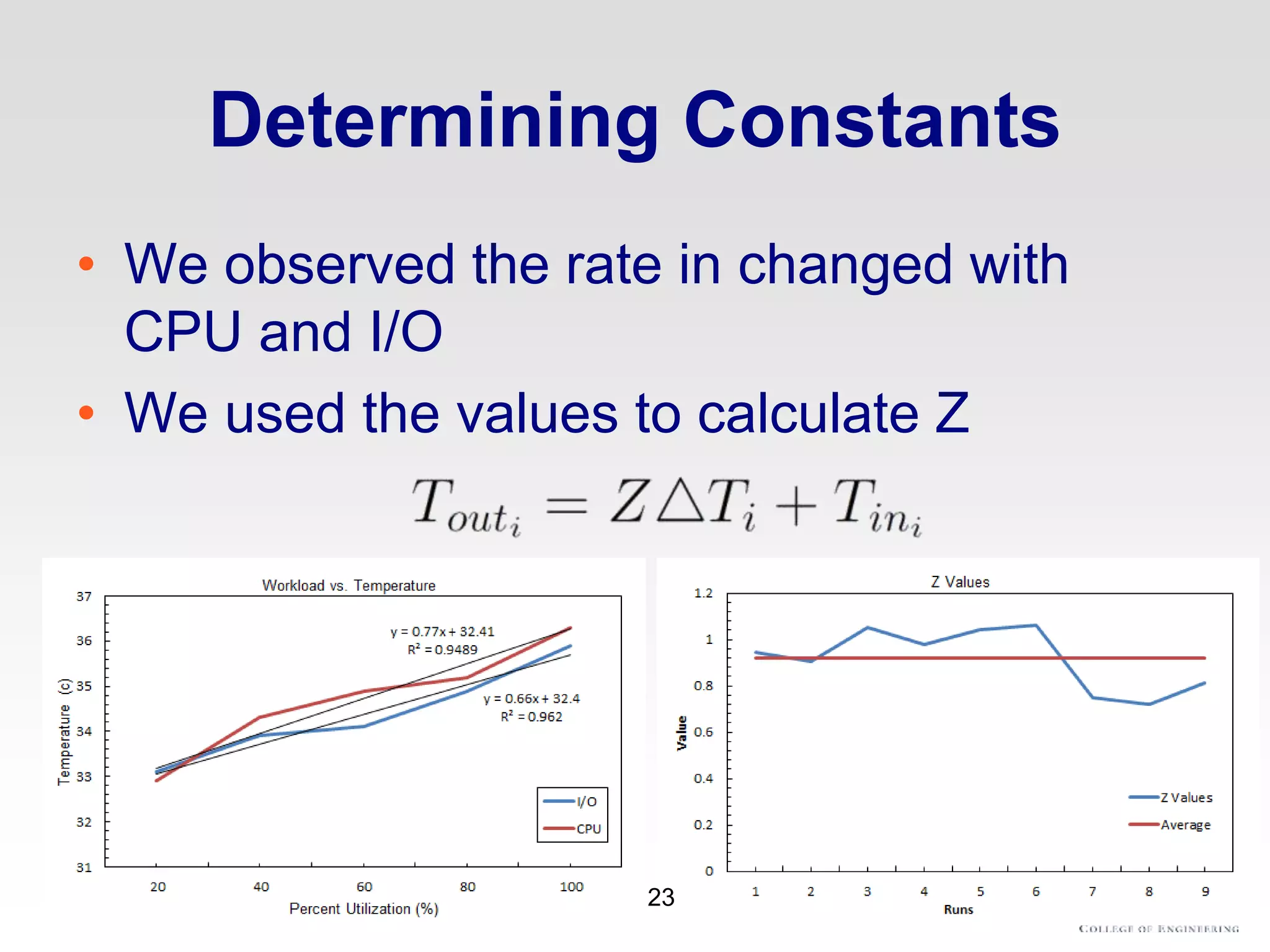

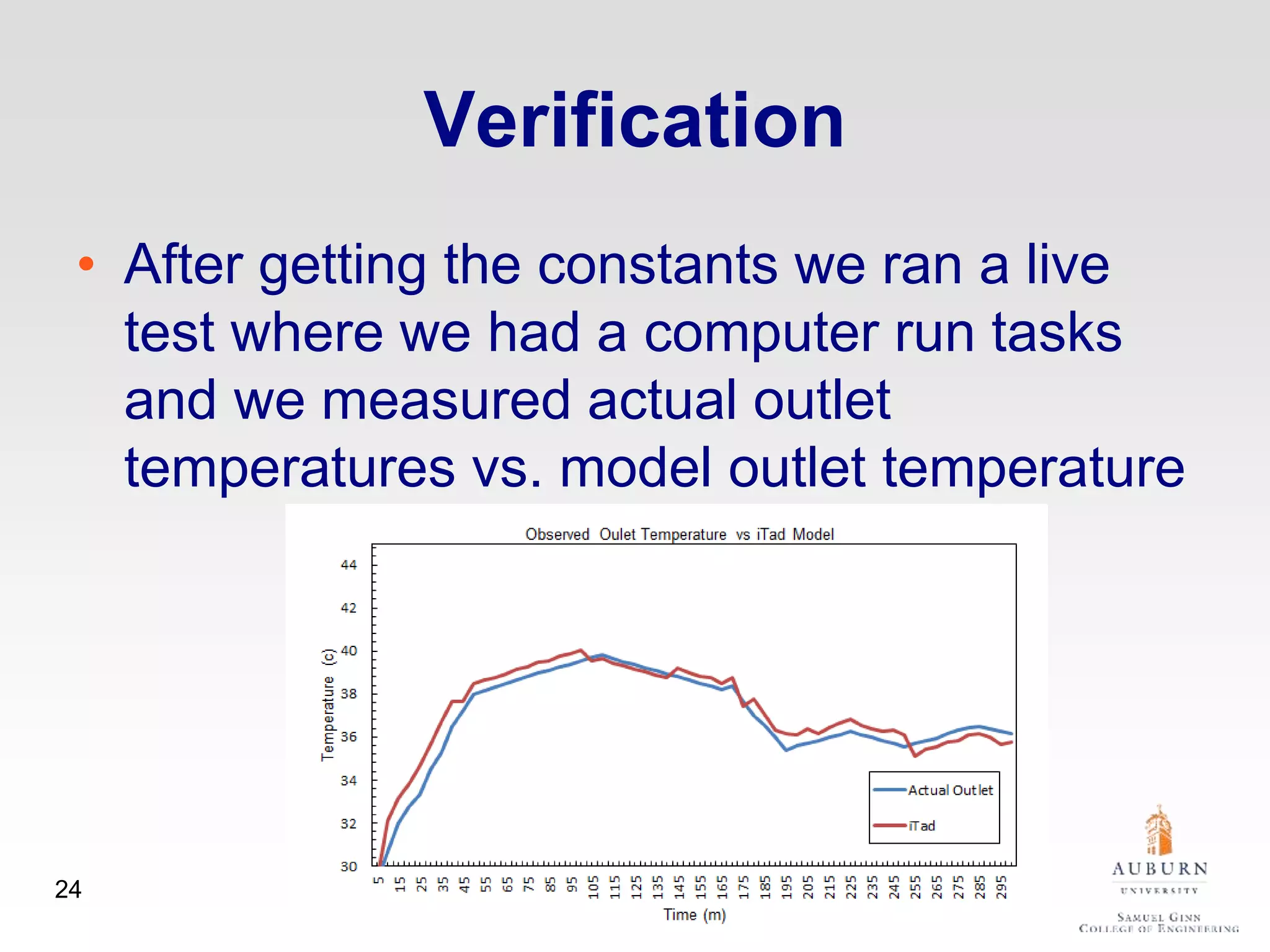

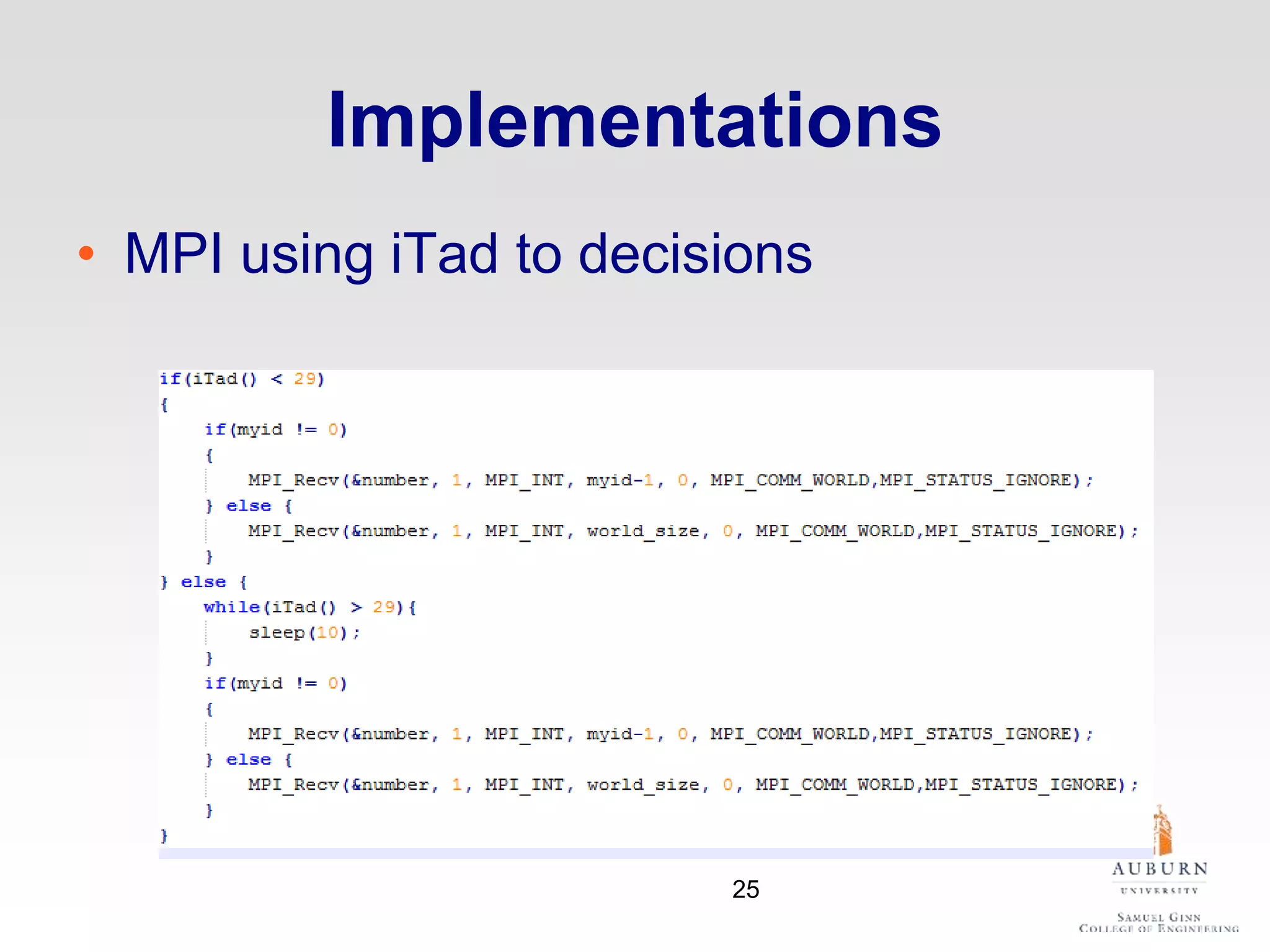

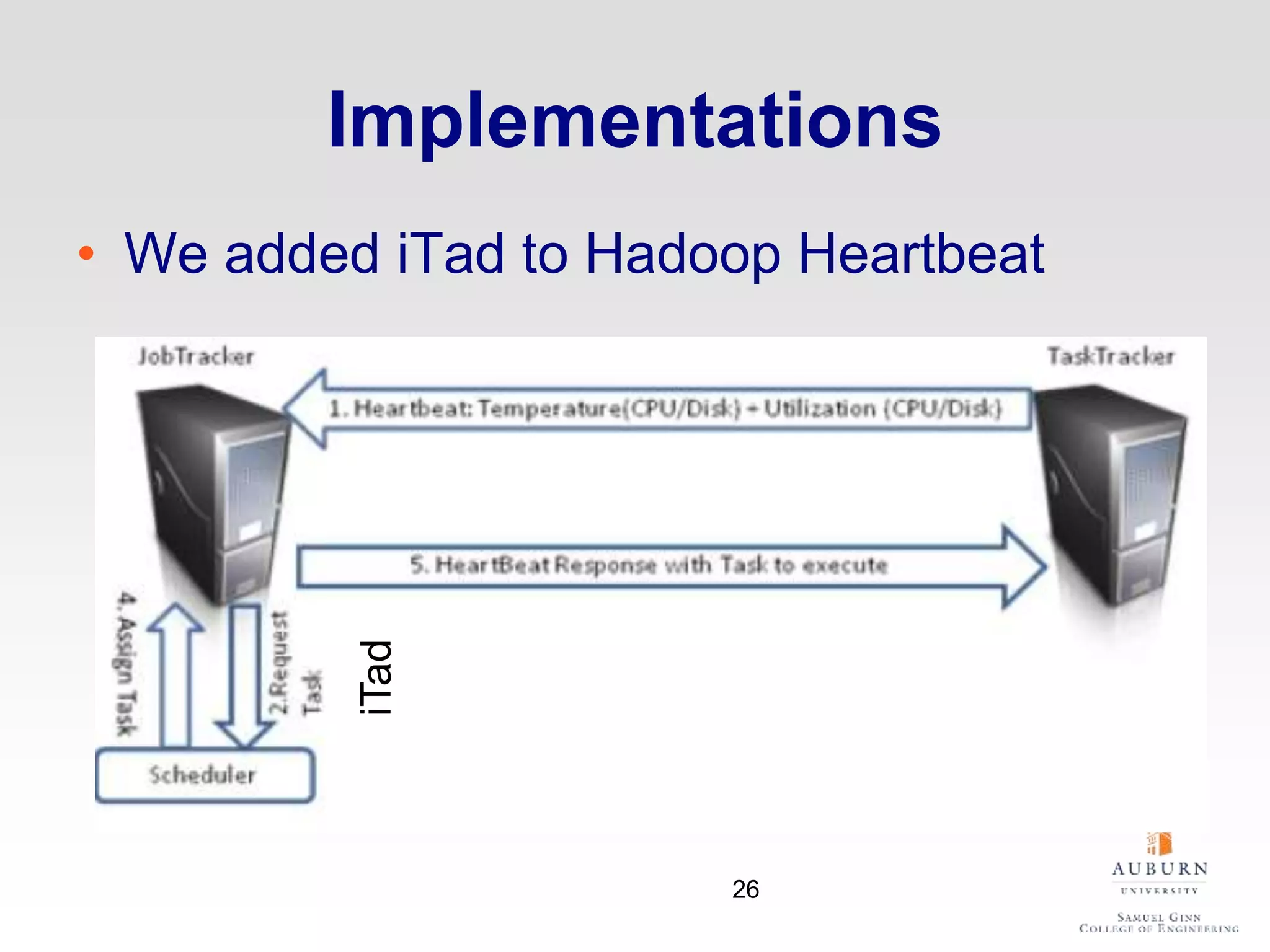

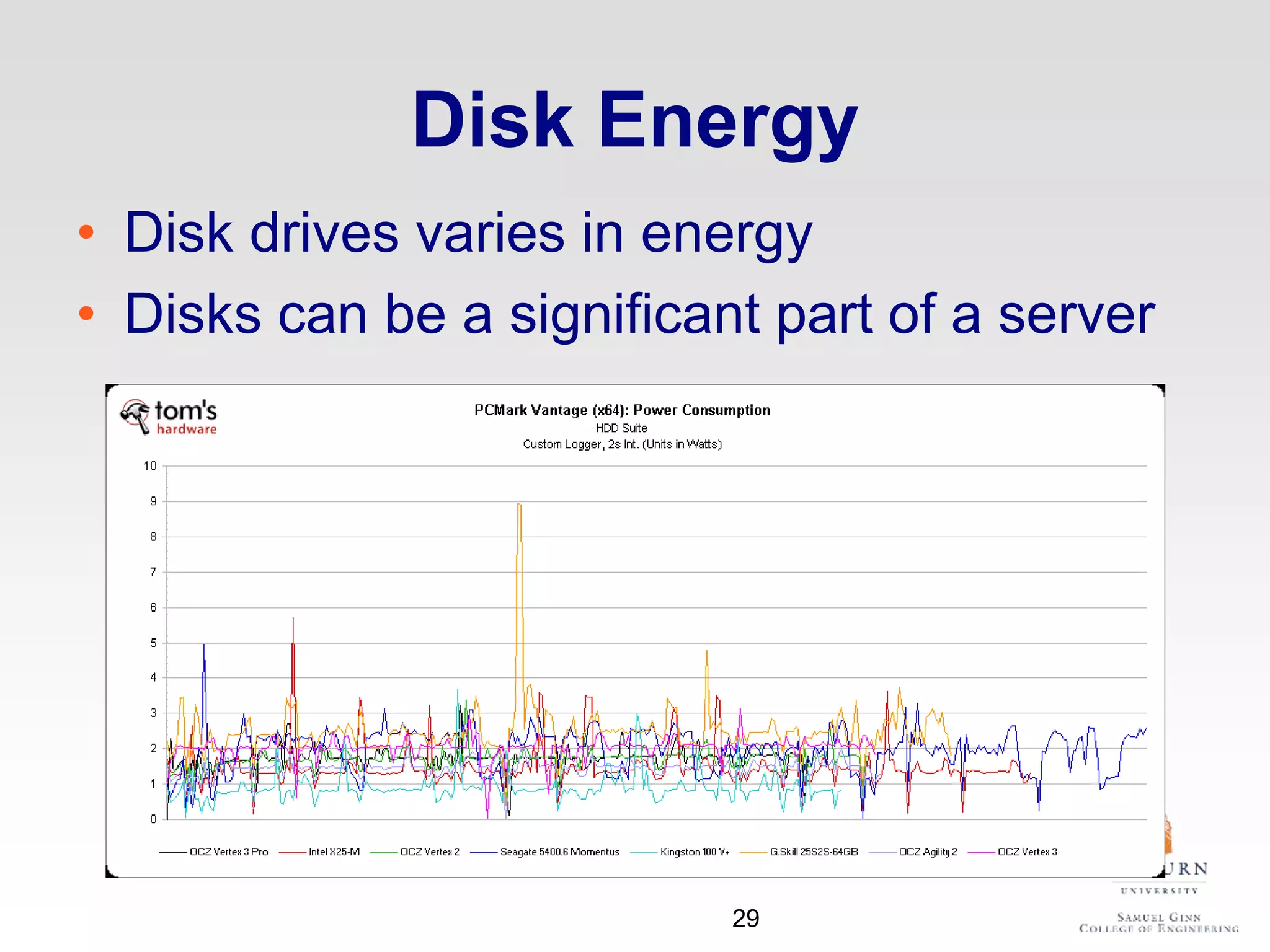

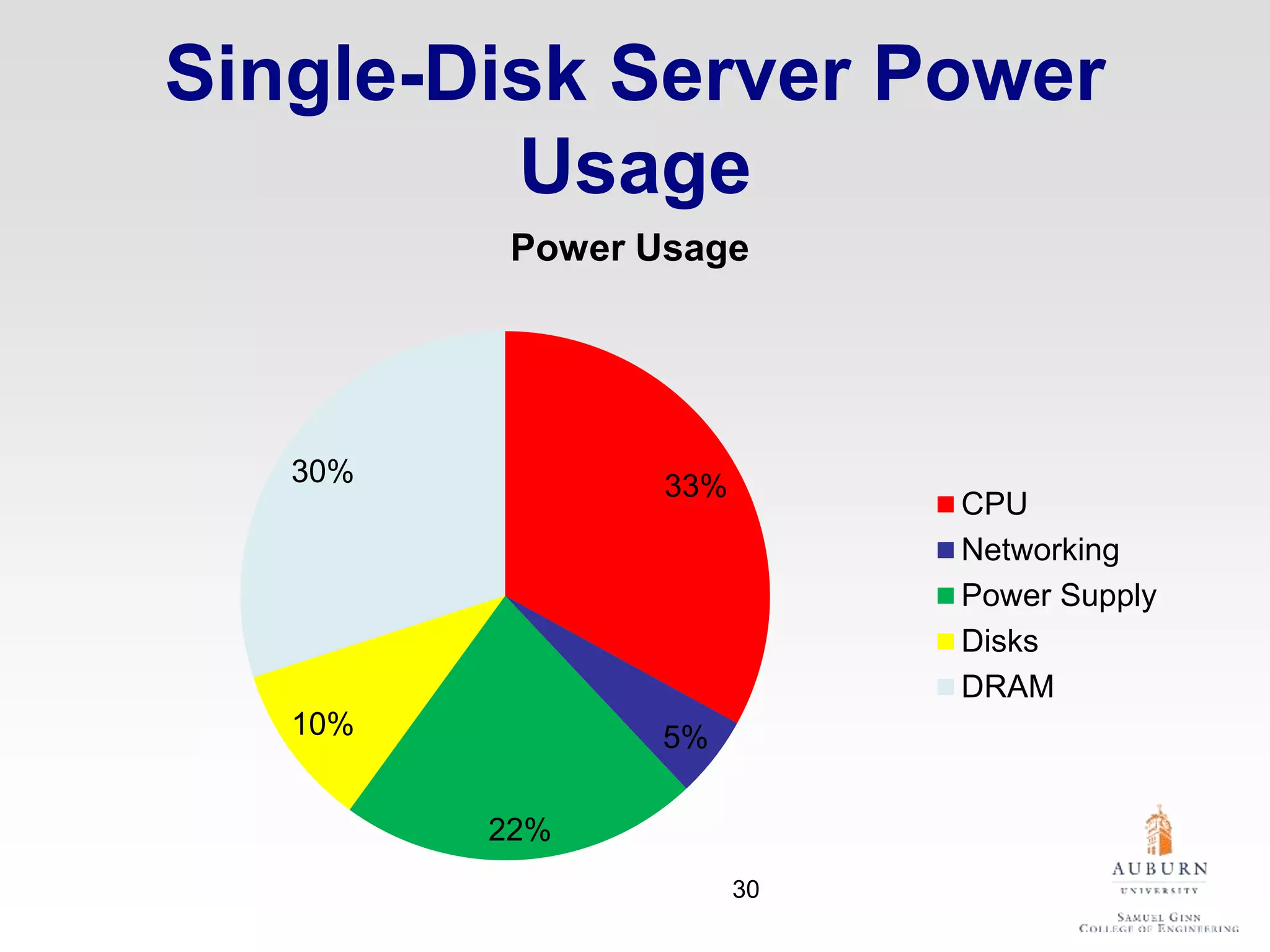

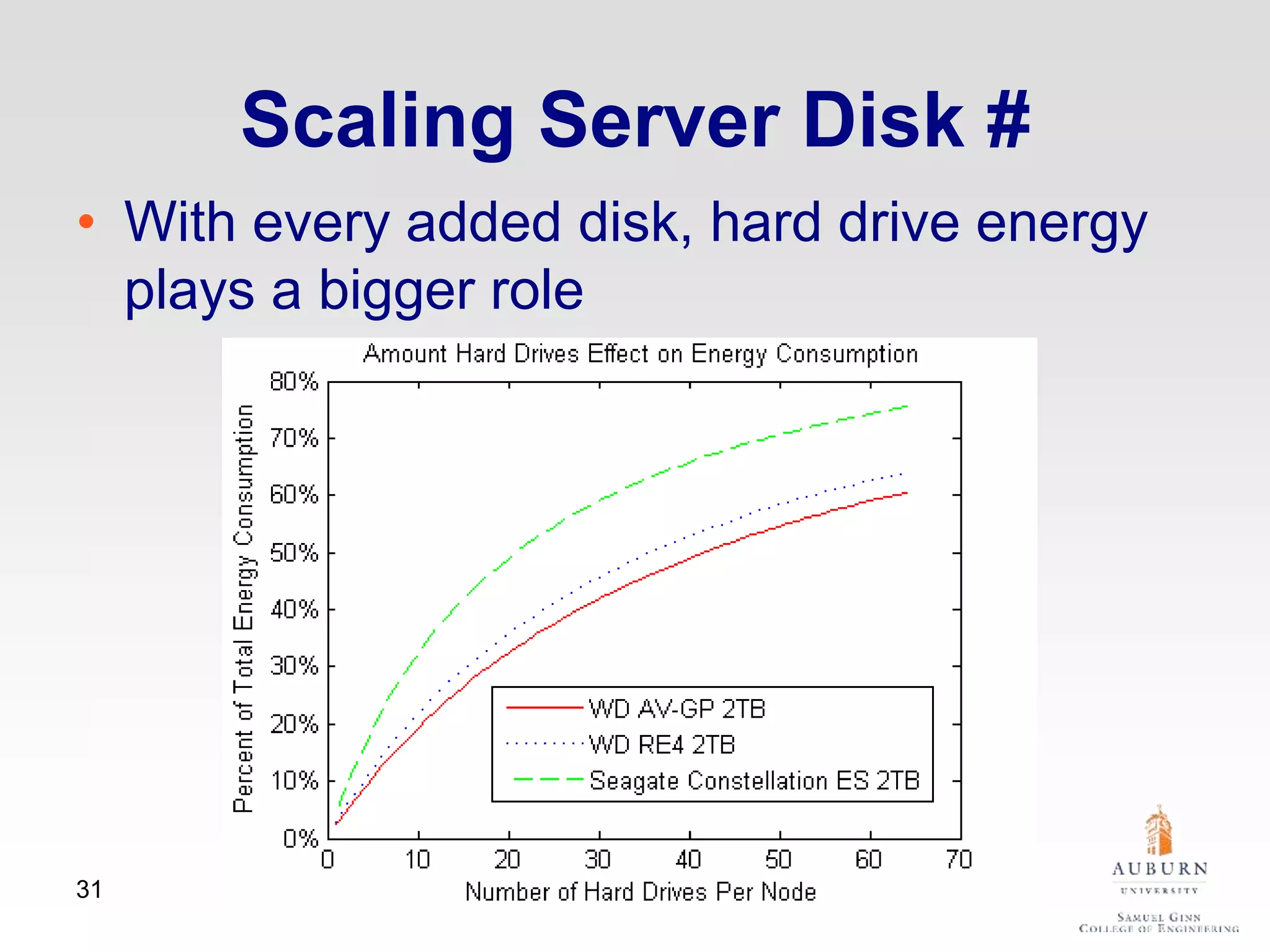

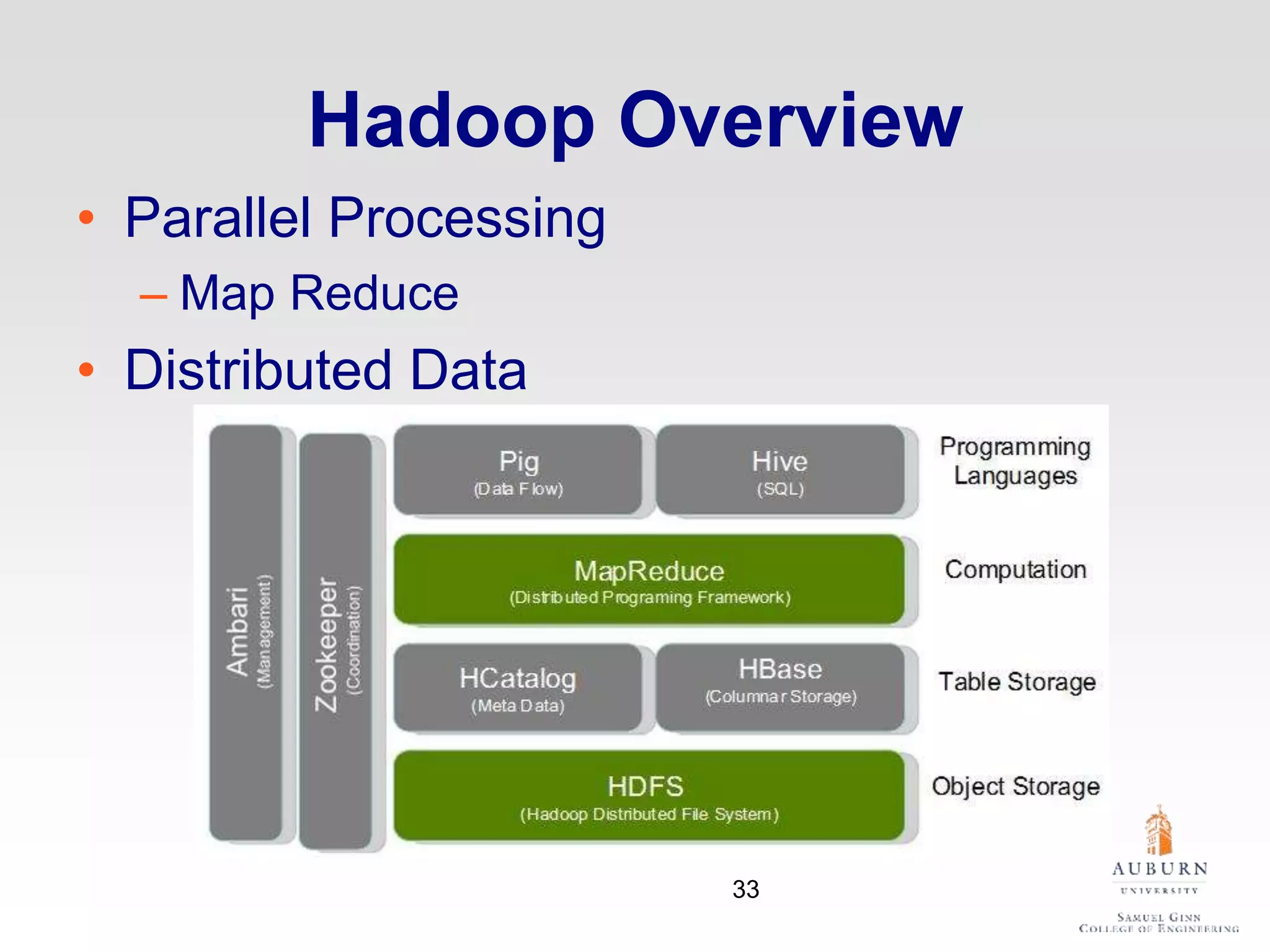

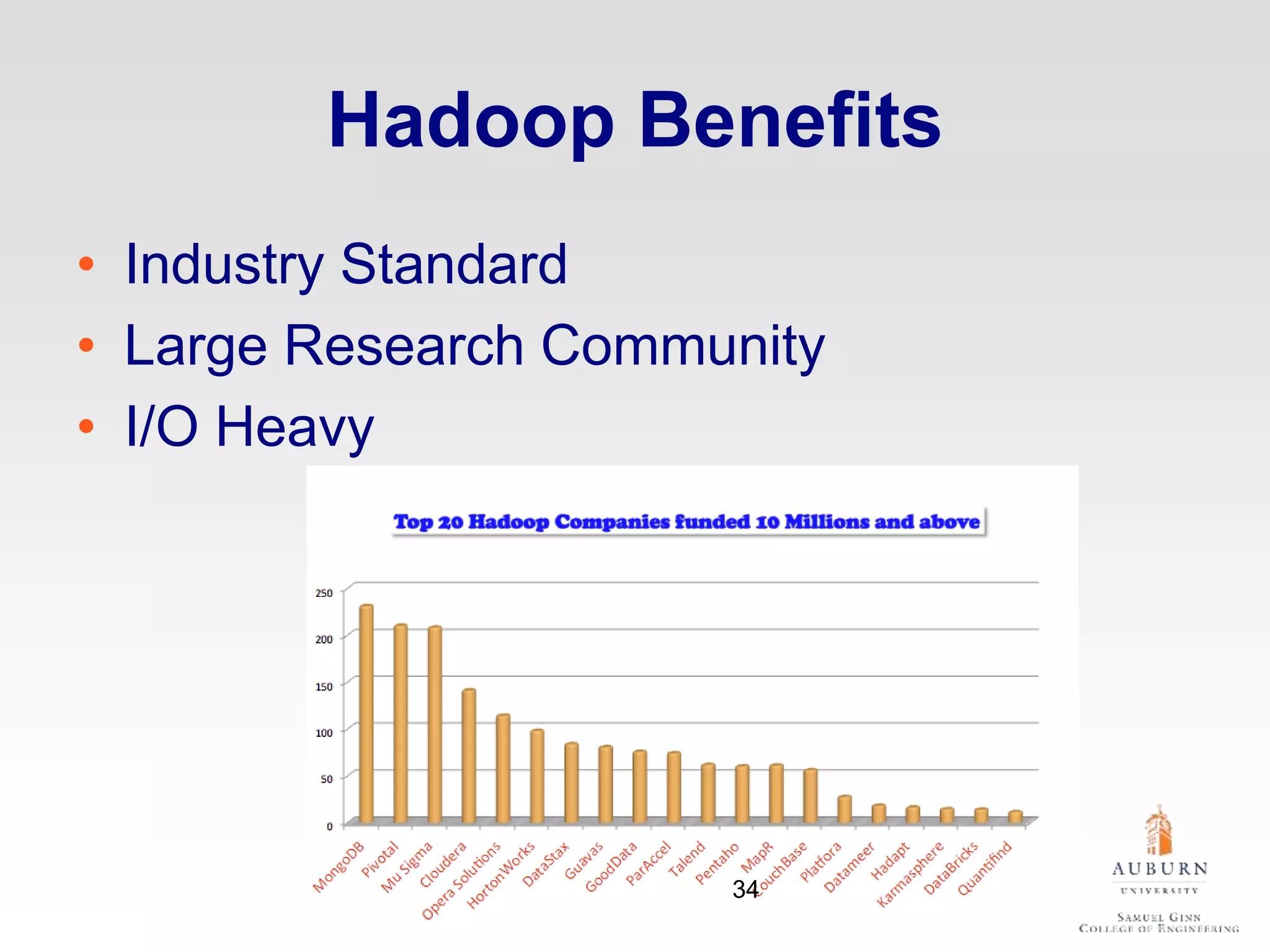

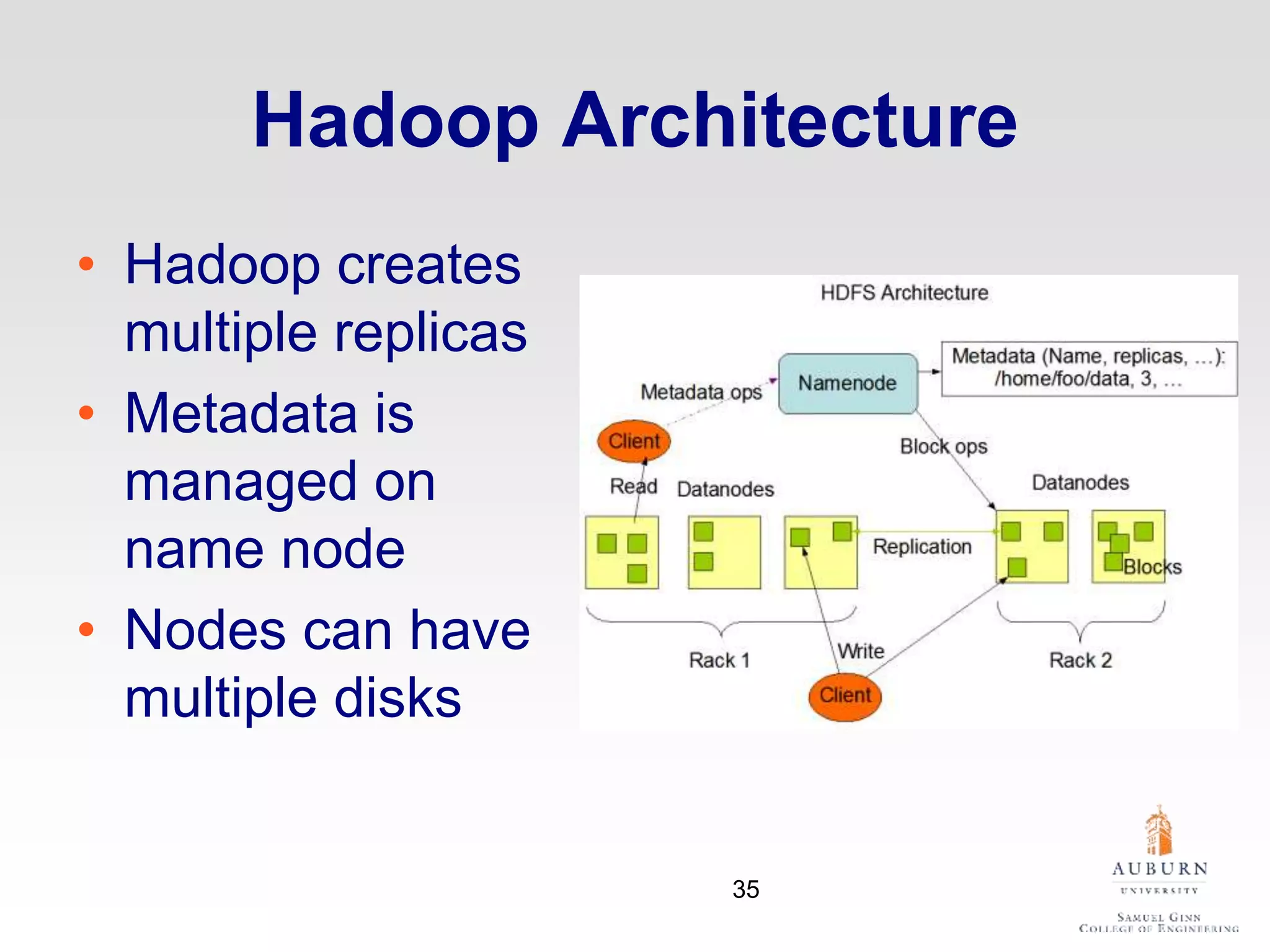

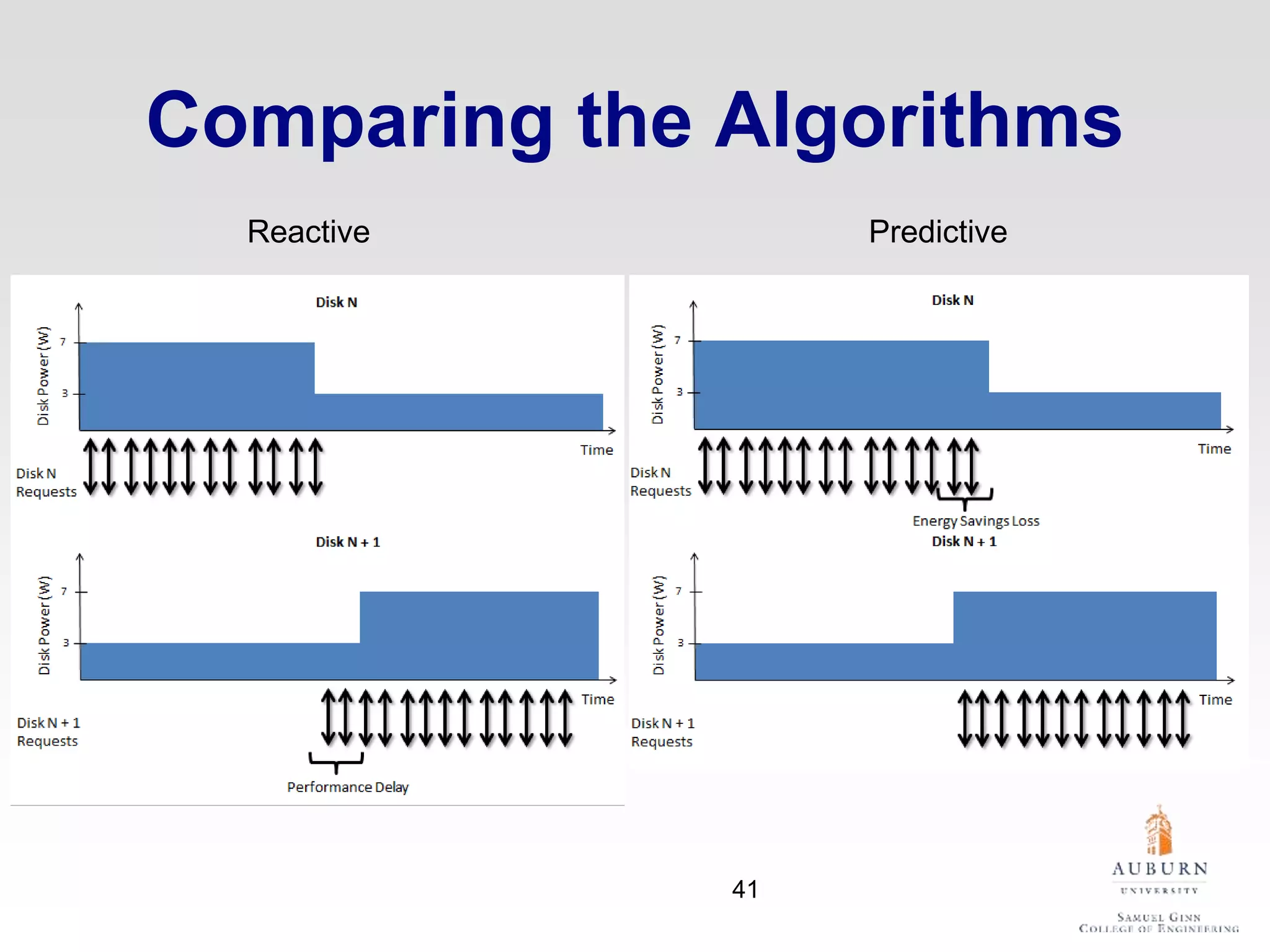

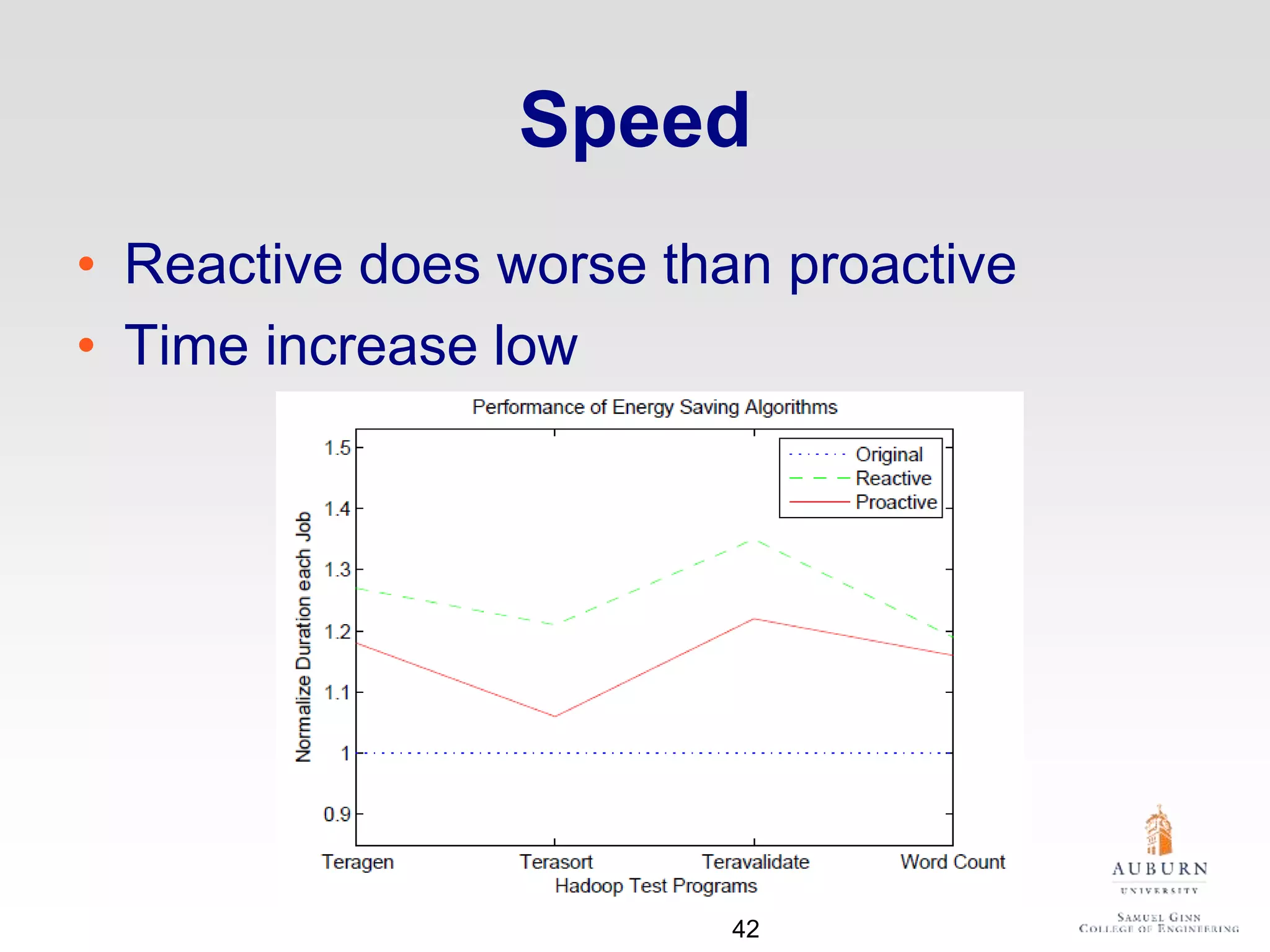

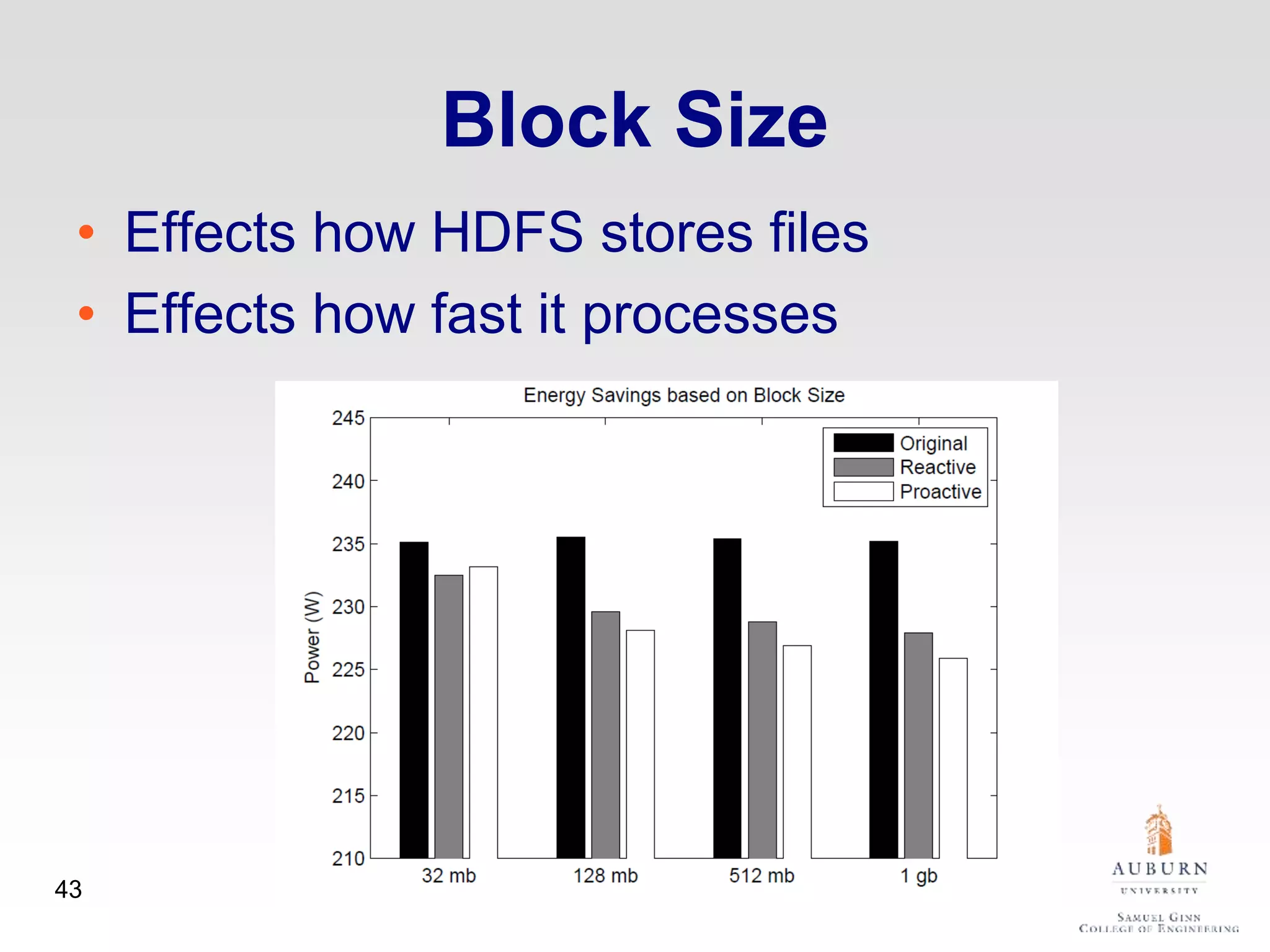

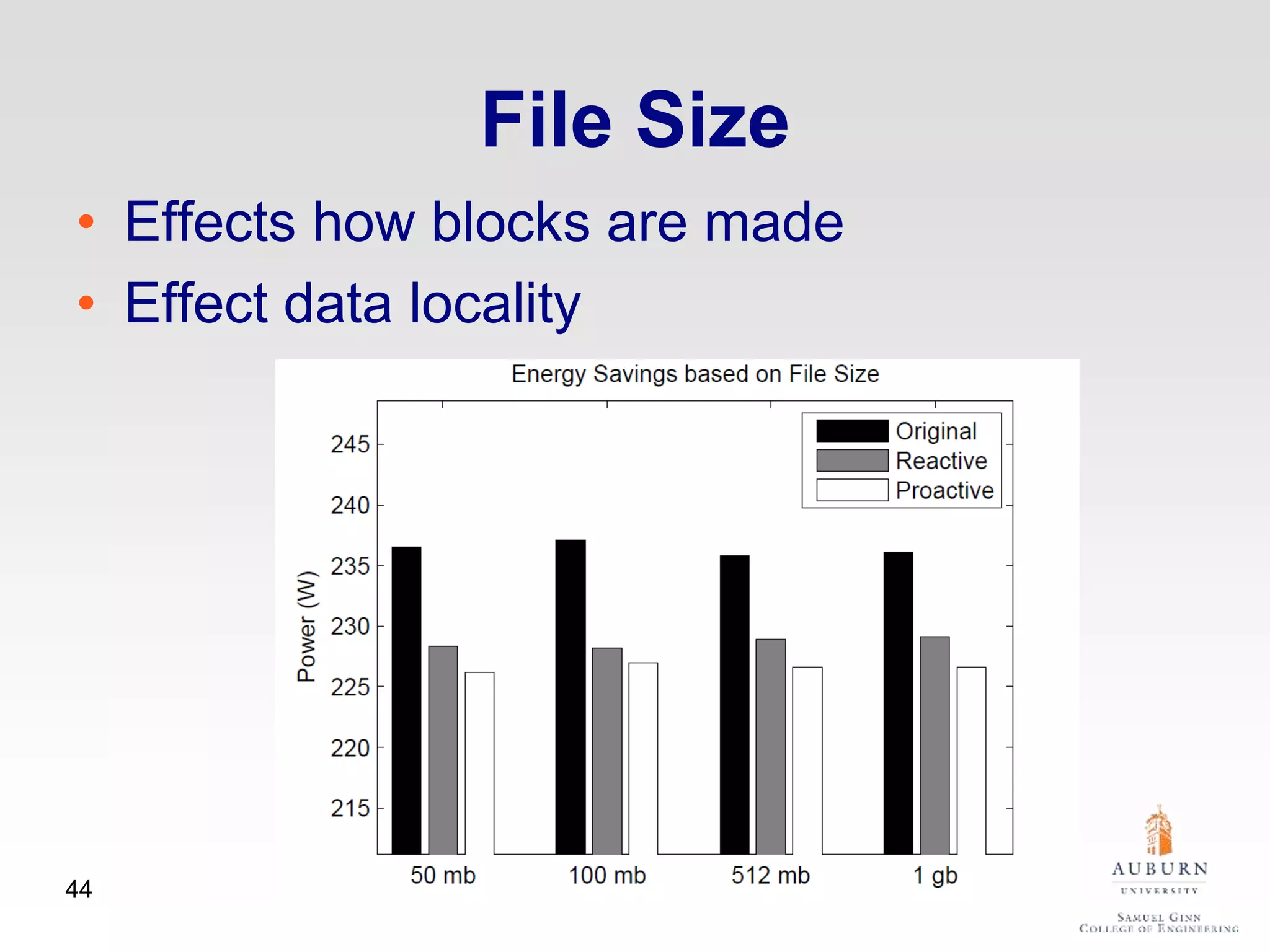

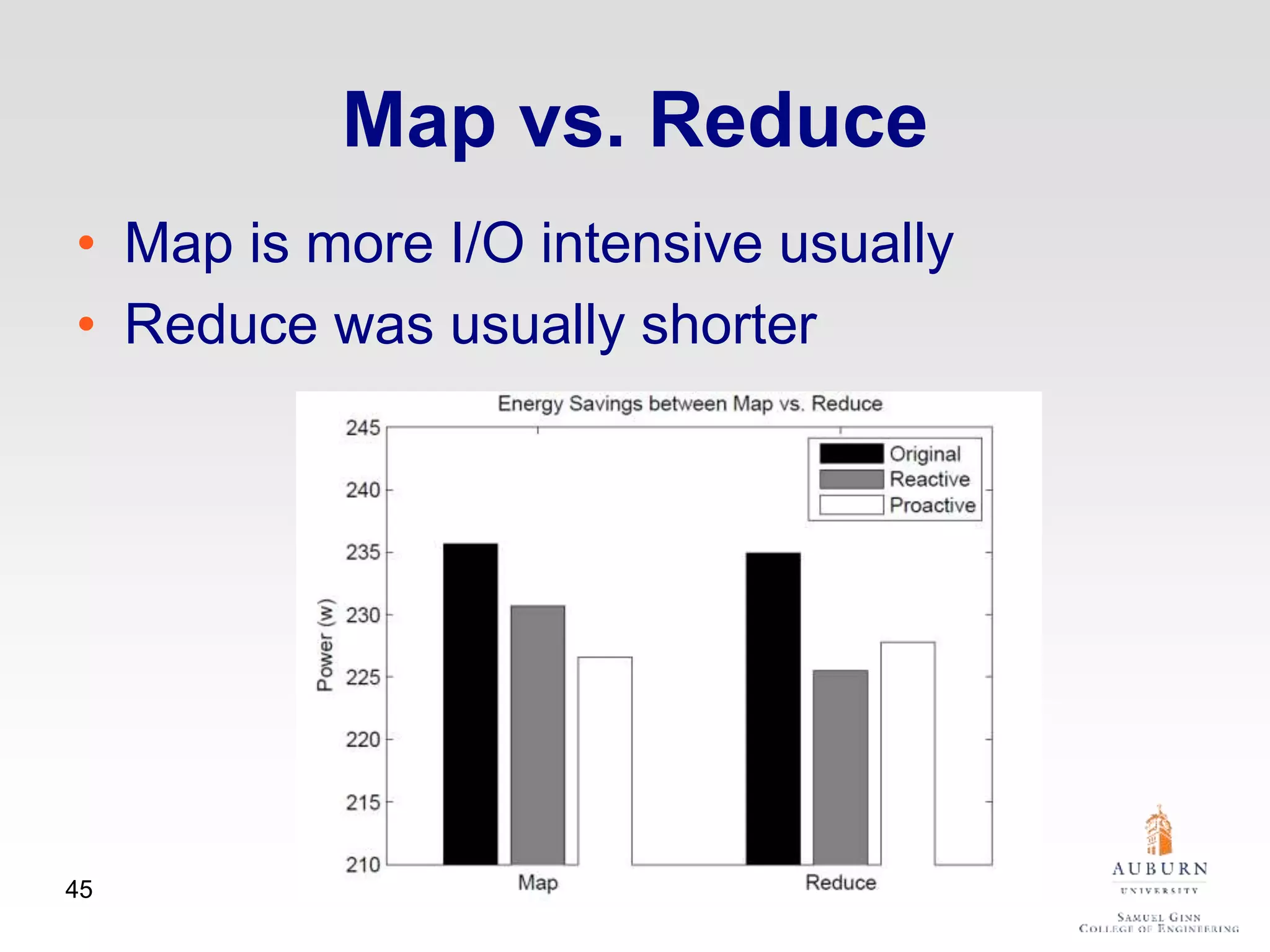

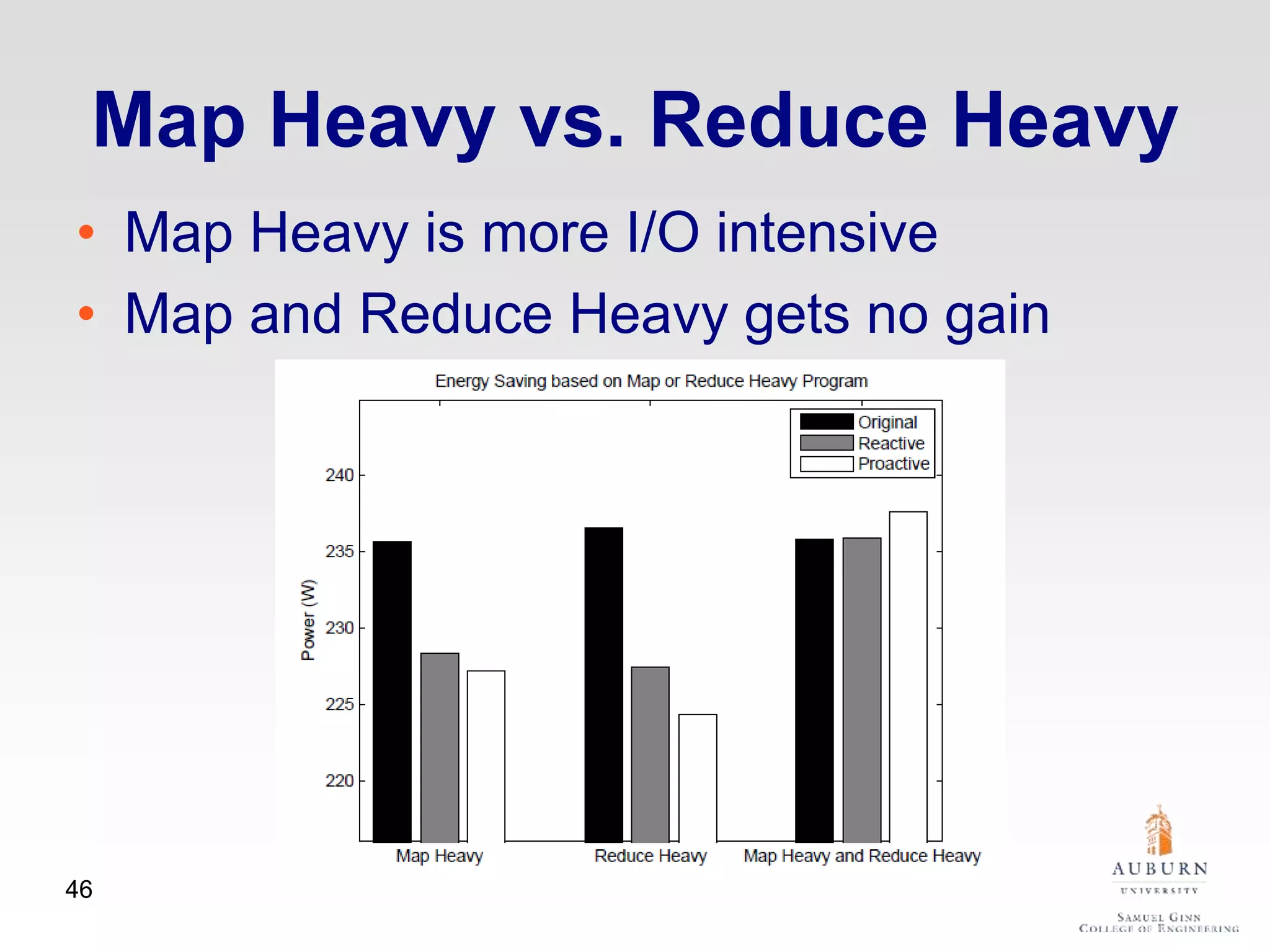

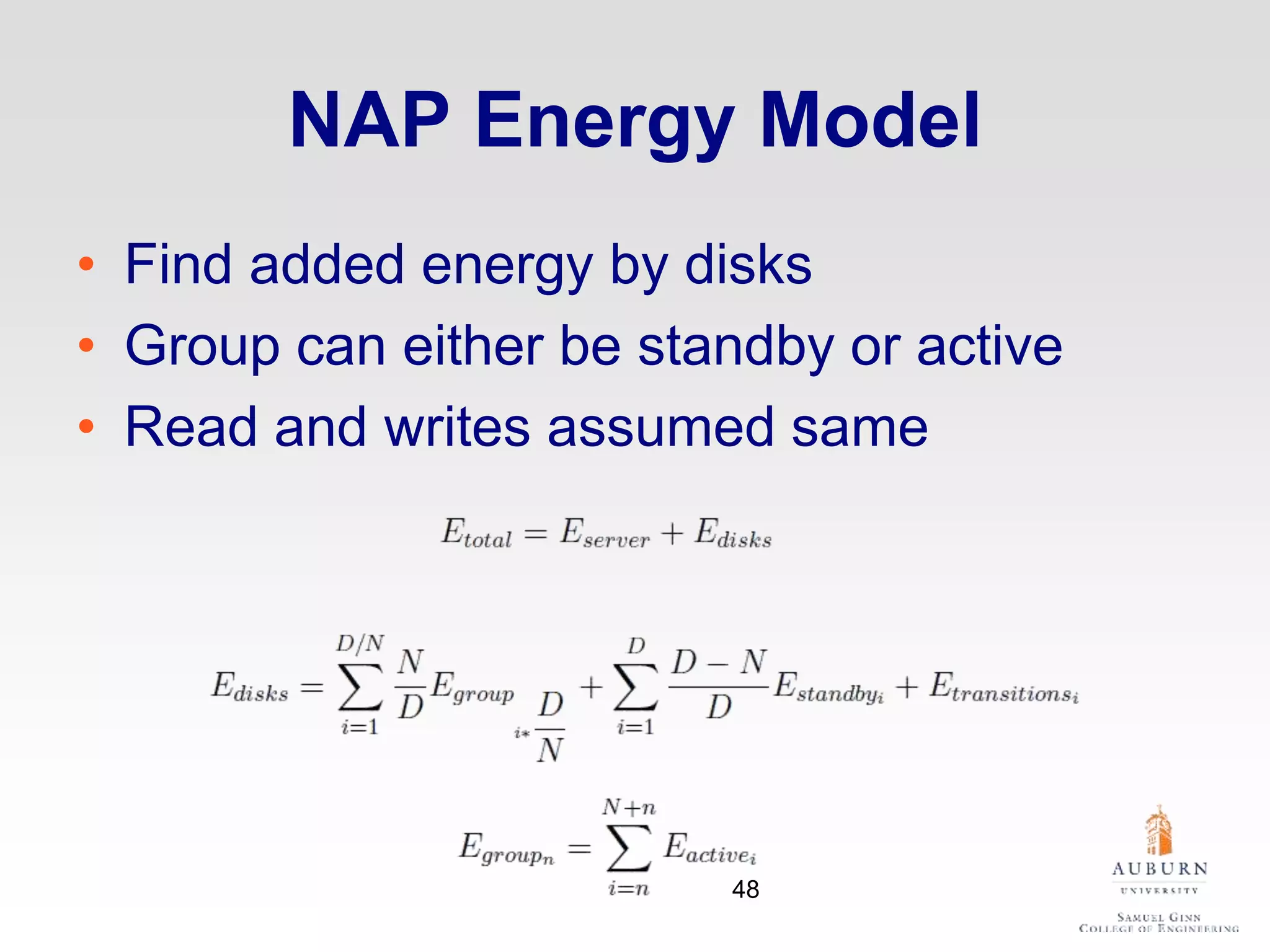

This document covers thermal-management and energy-saving techniques for data centers. Its stated research goal is to estimate the temperature of data nodes from their CPU and I/O utilization. It introduces ITAD as a method for measuring temperature and NAP as an energy-saving technique for Hadoop clusters, and it presents several models and algorithms for improving the energy efficiency of server operations.
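
To make the goal of estimating node temperature from CPU and I/O utilization concrete, the sketch below fits a simple linear model by ordinary least squares. This is only an illustration under stated assumptions: the summary does not say which model form the document uses, so the linear form, the function names (`fit_temperature_model`, `estimate_temperature`), and the sample values are all hypothetical. The samples are synthetic, generated from made-up coefficients, and are not measurements.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (cpu_util, io_util, temperature_celsius)
Model = Tuple[float, float, float]   # (base, k_cpu, k_io)


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: samples do not vary enough")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_temperature_model(samples: Sequence[Sample]) -> Model:
    """Fit temp ~ base + k_cpu * cpu_util + k_io * io_util (assumed linear form)."""
    rows = [(1.0, cpu, io) for cpu, io, _ in samples]
    temps = [t for _, _, t in samples]
    # Normal equations: (X^T X) w = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * t for r, t in zip(rows, temps)) for i in range(3)]
    base, k_cpu, k_io = solve_linear_system(xtx, xty)
    return base, k_cpu, k_io


def estimate_temperature(model: Model, cpu_util: float, io_util: float) -> float:
    """Predict a node temperature from utilization fractions in [0, 1]."""
    base, k_cpu, k_io = model
    return base + k_cpu * cpu_util + k_io * io_util


# Synthetic samples generated from temp = 30 + 20*cpu + 5*io (made-up coefficients).
SAMPLES: List[Sample] = [
    (0.0, 0.0, 30.0),
    (1.0, 0.0, 50.0),
    (0.0, 1.0, 35.0),
    (0.5, 0.5, 42.5),
    (1.0, 1.0, 55.0),
]

model = fit_temperature_model(SAMPLES)
predicted = estimate_temperature(model, cpu_util=0.8, io_util=0.2)
```

In practice the samples would come from real utilization counters and temperature readings on each data node, and a linear fit may be too simple if temperature lags behind load or depends on a node's position in the rack.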