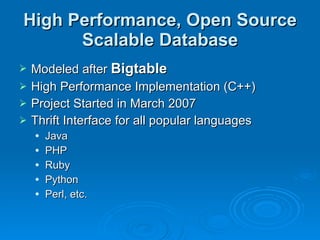

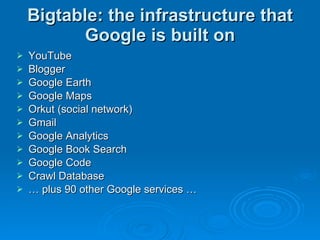

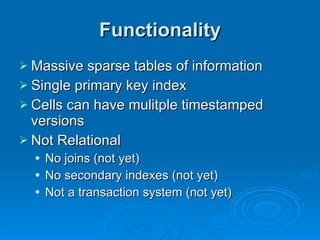

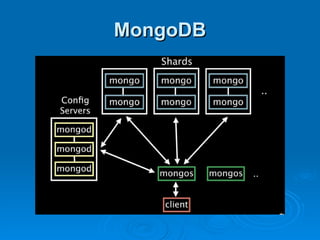

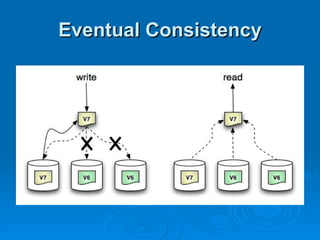

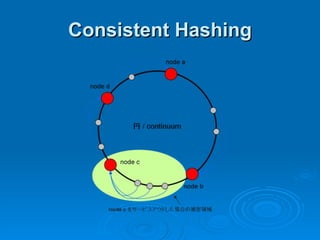

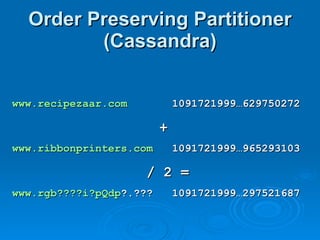

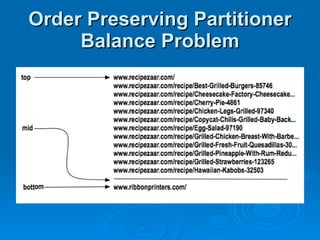

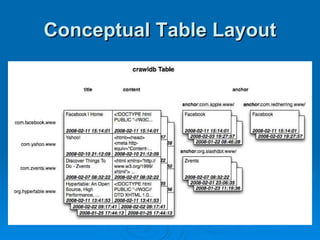

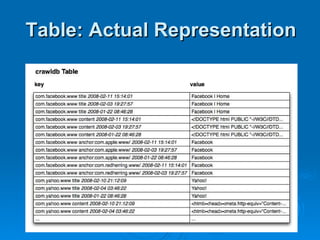

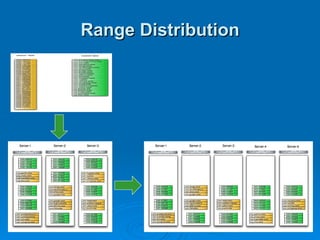

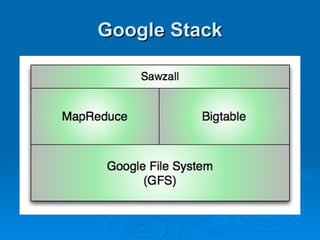

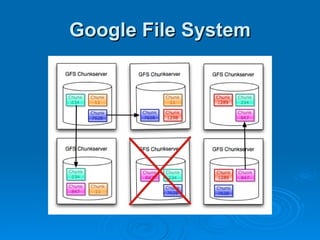

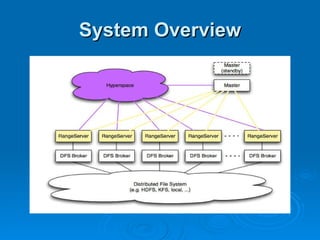

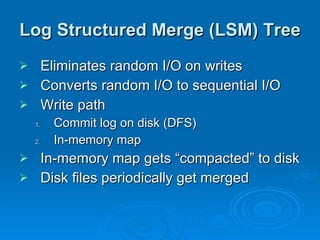

Hypertable is an open source, scalable database modeled after Google's Bigtable. It is designed for high performance on massive, sparse tables indexed by a single primary key. Key features include automatic sharding of data and support for popular programming languages through a Thrift interface. The material also touches on production deployments at companies and on how architectures such as MongoDB, Cassandra, and Dynamo compare, including techniques like consistent hashing, order-preserving partitioning, and log-structured merge (LSM) trees.
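Two of the techniques named above, consistent hashing and order-preserving partitioning, place keys on nodes in different ways. The following is a minimal Python sketch of that contrast, not Hypertable's actual implementation or API: the class names `ConsistentHashRing` and `RangePartitioner`, the `vnodes` parameter, the choice of MD5 as the ring hash, and the node names are all hypothetical and chosen only for illustration.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Map a string to a point on the ring (a 128-bit integer via MD5)."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class ConsistentHashRing:
    """Hash partitioning: a key goes to the first node point clockwise from its hash."""

    def __init__(self, nodes, vnodes=8):
        # Each node is placed on the ring several times (virtual nodes)
        # to spread keys more evenly. The value 8 is an arbitrary assumption.
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def lookup(self, key: str) -> str:
        # Wrap around to the start of the ring when the hash is past the last point.
        idx = bisect.bisect_right(self._points, _hash(key)) % len(self._ring)
        return self._ring[idx][1]


class RangePartitioner:
    """Order-preserving partitioning: each node owns a contiguous range of keys."""

    def __init__(self, split_points, nodes):
        # split_points must be sorted; split_points[i] is the first key owned by nodes[i + 1].
        if len(nodes) != len(split_points) + 1:
            raise ValueError("need exactly one more node than split points")
        self._splits = list(split_points)
        self._nodes = list(nodes)

    def lookup(self, key: str) -> str:
        return self._nodes[bisect.bisect_right(self._splits, key)]


if __name__ == "__main__":
    keys = ["user:001", "user:002", "user:003"]
    ring = ConsistentHashRing(["node-a", "node-b", "node-c"])
    ranges = RangePartitioner(["h", "p"], ["node-a", "node-b", "node-c"])
    for key in keys:
        print(key, "hash ->", ring.lookup(key), "| range ->", ranges.lookup(key))
```

With range partitioning, keys that sort next to each other (here, everything starting with `user:`) fall on the same node, so a scan over a key range touches few nodes. With hashing, neighbouring keys are generally scattered, which balances load but gives up cheap ordered scans.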