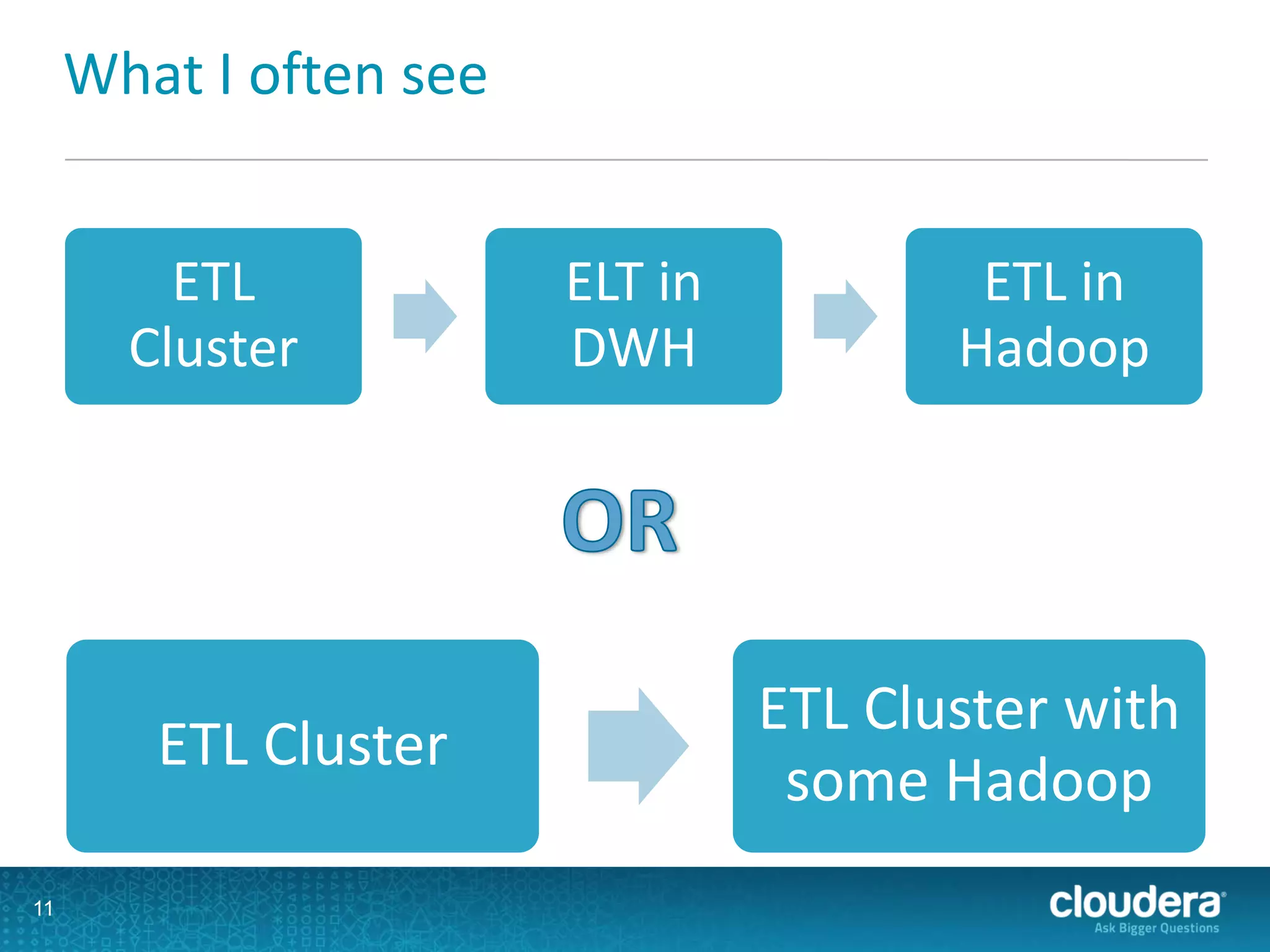

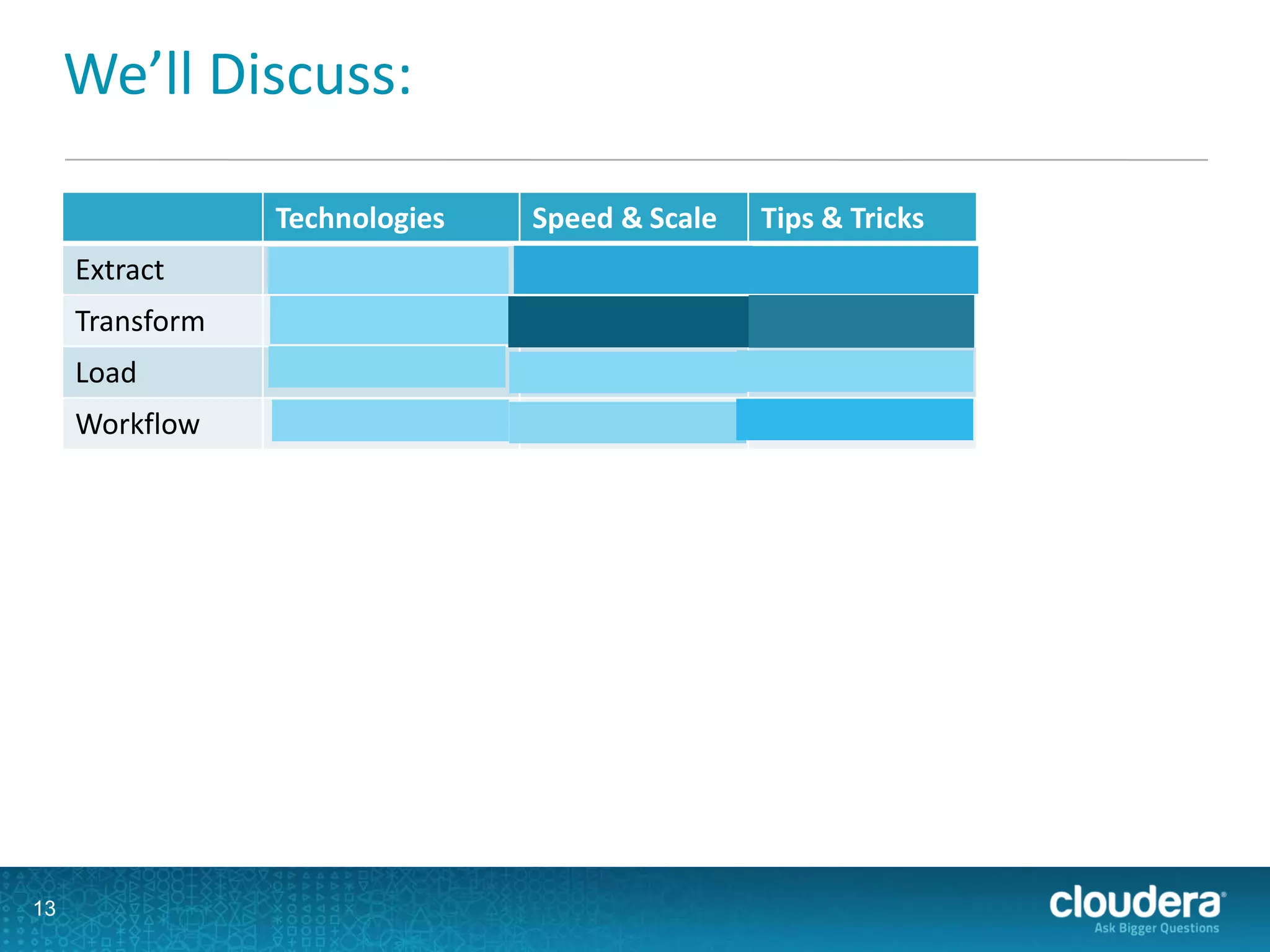

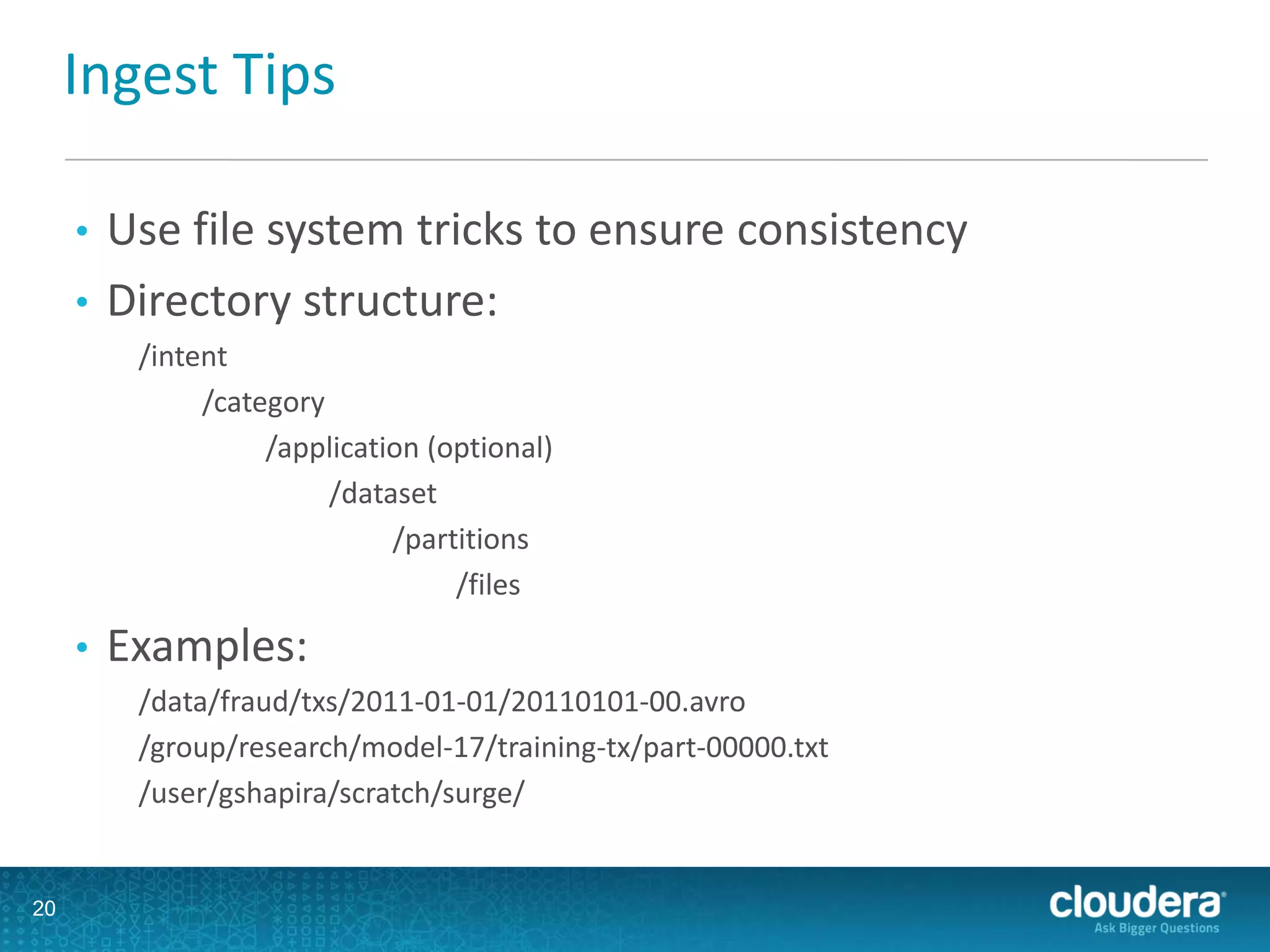

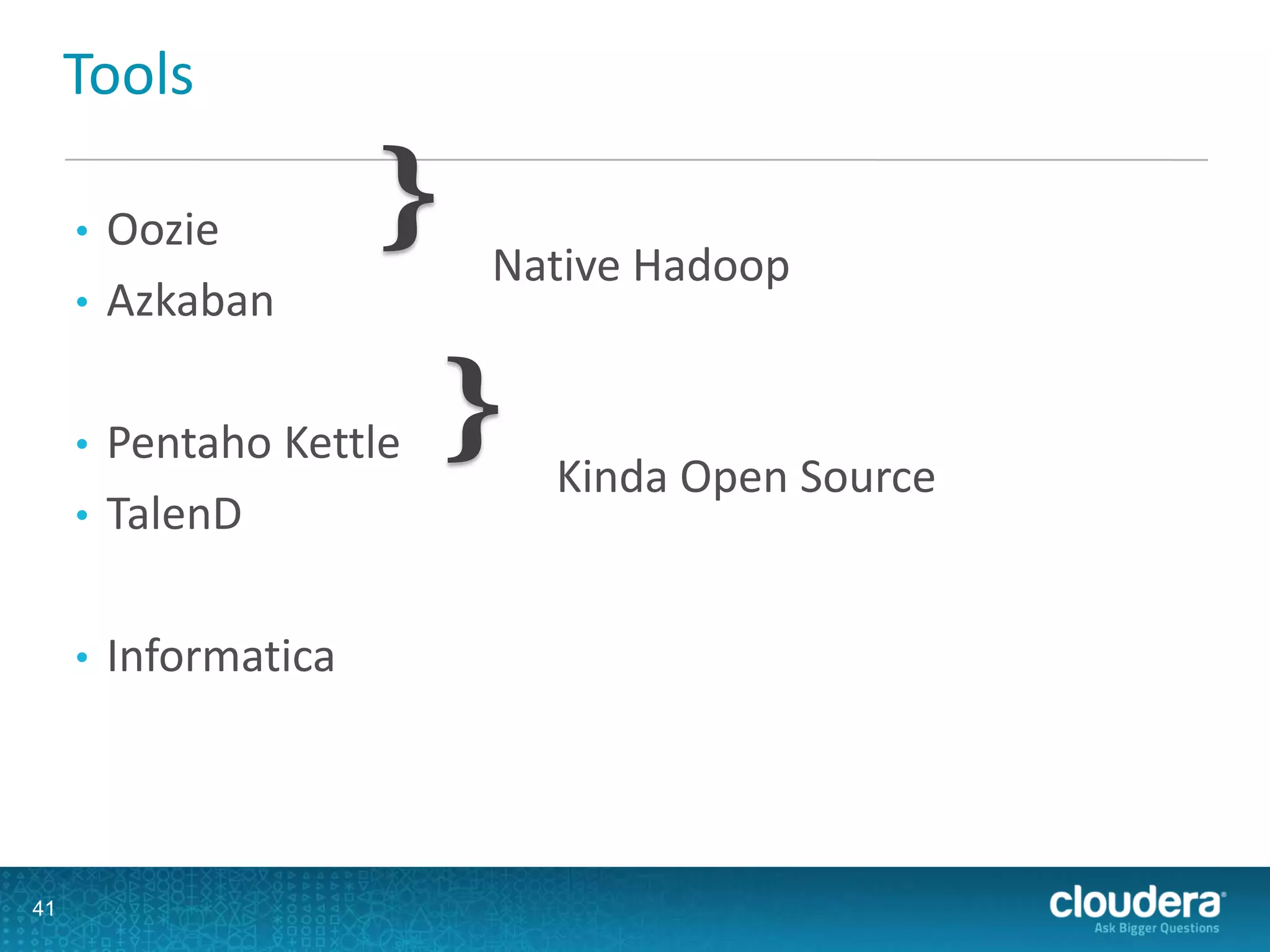

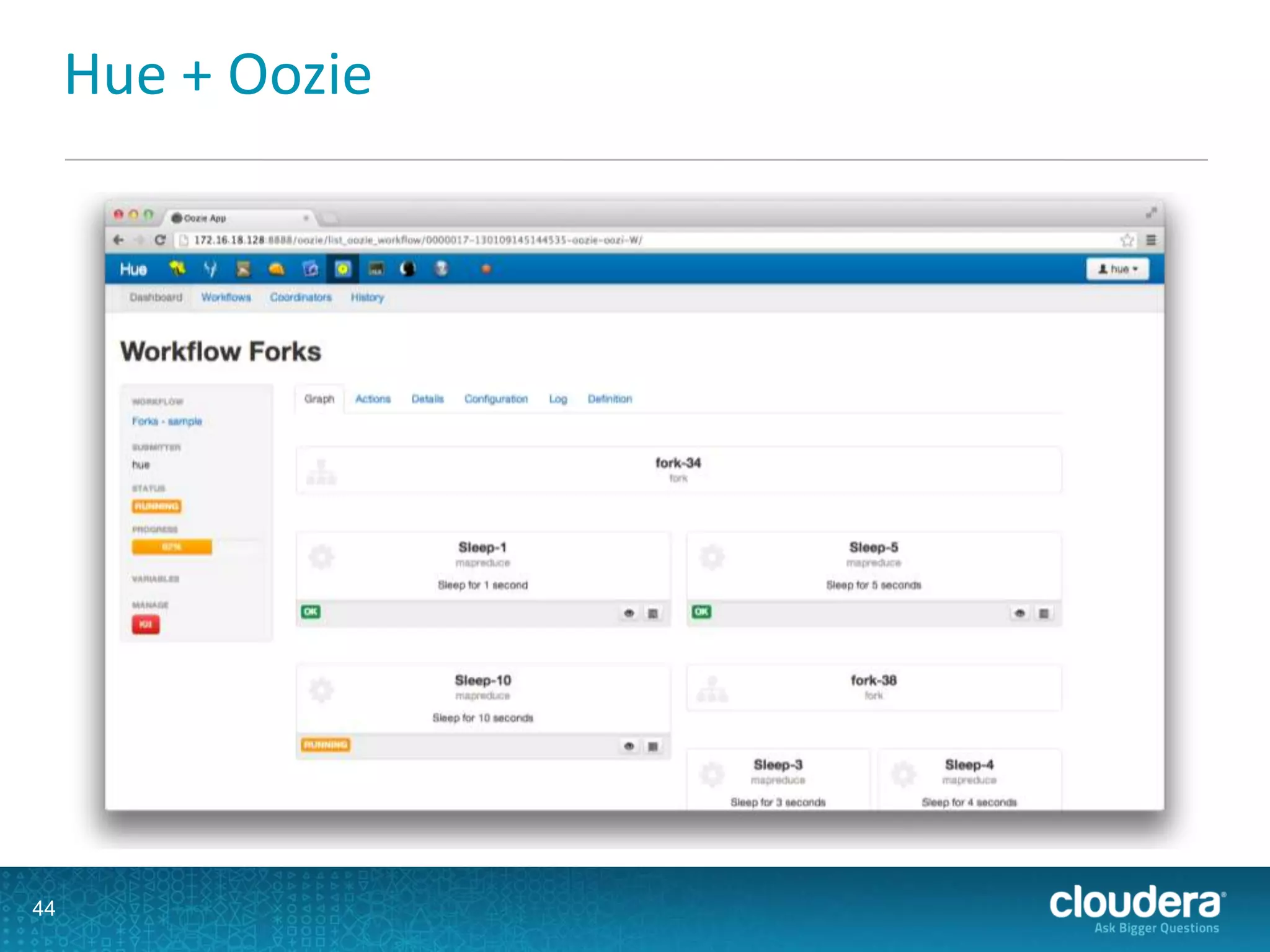

This document discusses scaling ETL (extract, transform, load) processes with Hadoop. It describes extracting data from structured and unstructured sources, transforming it with MapReduce and other tools, and loading it into data warehouses or other targets. Specific techniques include using Sqoop and Flume for extraction, partitioning and tuning data structures for transformation, and loading data in parallel to scale. It also covers workflow management with Oozie and monitoring with Cloudera Manager.
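
The idea of partitioning data and then loading the partitions in parallel can be illustrated with a minimal sketch. It uses only the Python standard library, not Hadoop, Sqoop, or any warehouse API, and it is not taken from the slides. The function names, the `day` partition key, and the sample records are all assumptions made for illustration; `load_partition` is a hypothetical stand-in for a real bulk load.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def partition_by_key(records, key):
    """Group records into partitions by the value of records[key]."""
    partitions = defaultdict(list)
    for record in records:
        partitions[record[key]].append(record)
    return dict(partitions)


def load_partition(name, rows):
    """Hypothetical loader: stands in for a bulk insert into a target table.

    Here it only reports how many rows it would have loaded.
    """
    return name, len(rows)


def parallel_load(partitions, max_workers=4):
    """Load each partition independently, using a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [
            pool.submit(load_partition, name, rows)
            for name, rows in partitions.items()
        ]
        # Collect (partition name, row count) pairs once every load finishes.
        return dict(future.result() for future in futures)


# Made-up sample data, partitioned by an assumed "day" column.
records = [
    {"day": "2013-01-01", "amount": 10},
    {"day": "2013-01-01", "amount": 25},
    {"day": "2013-01-02", "amount": 7},
]

partitions = partition_by_key(records, "day")
loaded = parallel_load(partitions)
```

Because each partition is loaded independently, the work can be spread across as many workers as the target system tolerates. The same reasoning applies when the workers are cluster tasks rather than threads.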