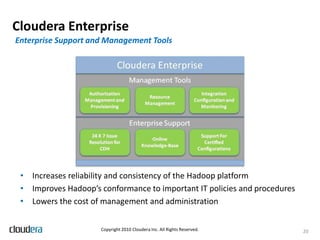

This document presents lessons learned from taking Hadoop clusters into production. It emphasizes proper planning, data ingestion infrastructure, ETL processing tools, authentication and authorization, monitoring, and filling the gaps that the Hadoop core software does not address. It offers best practices and considerations for setting up a complete, enterprise-ready Hadoop system.