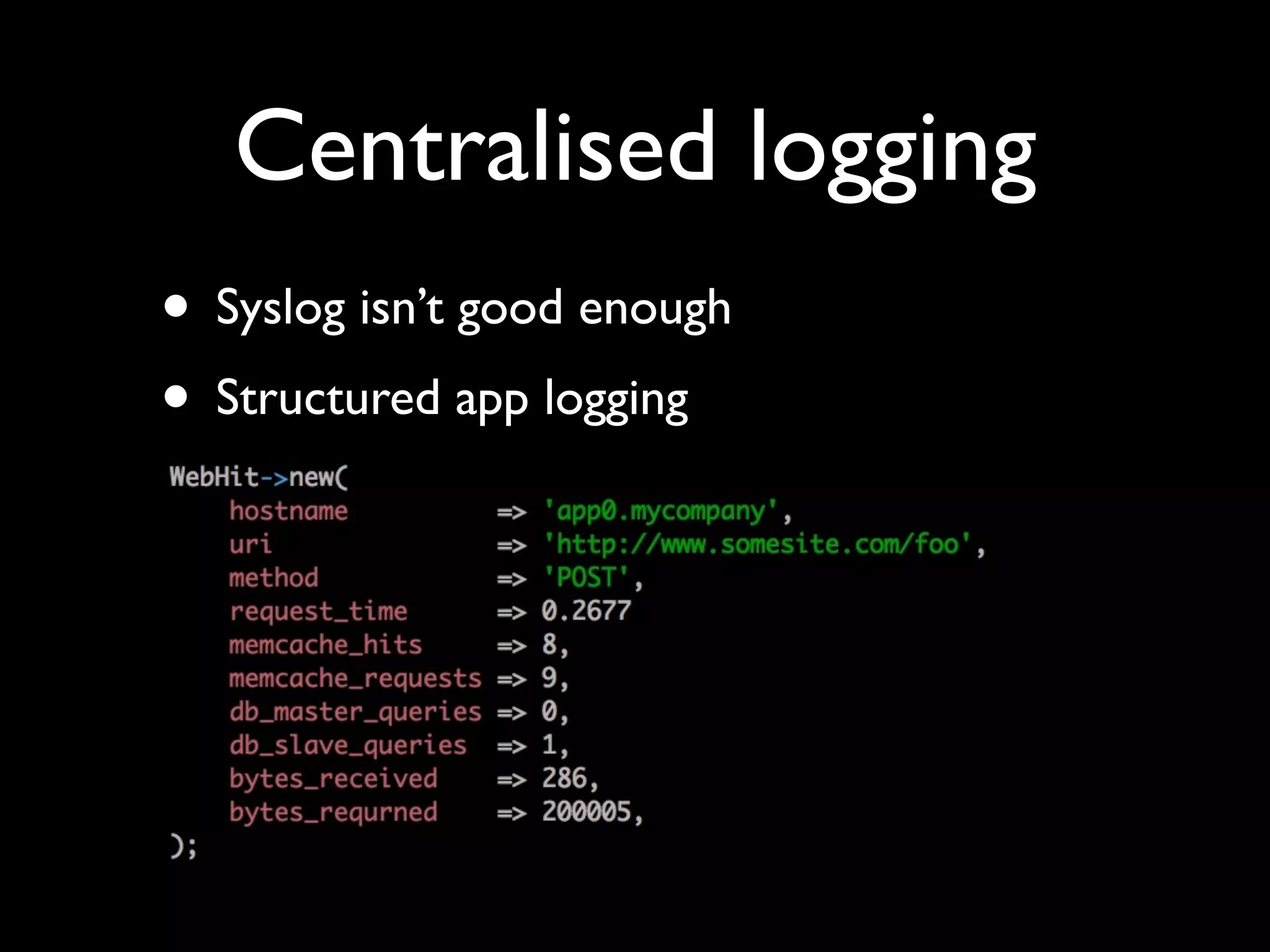

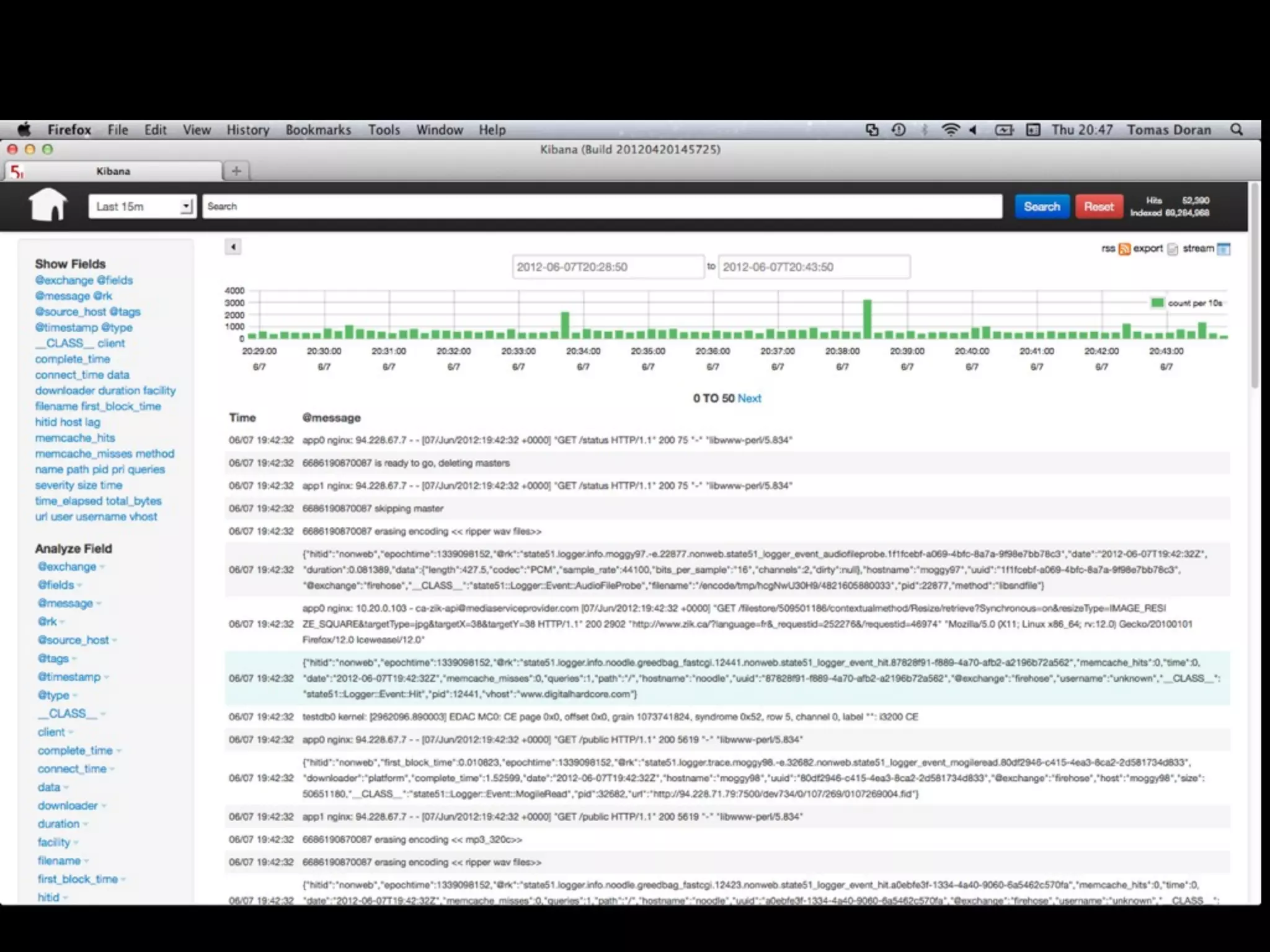

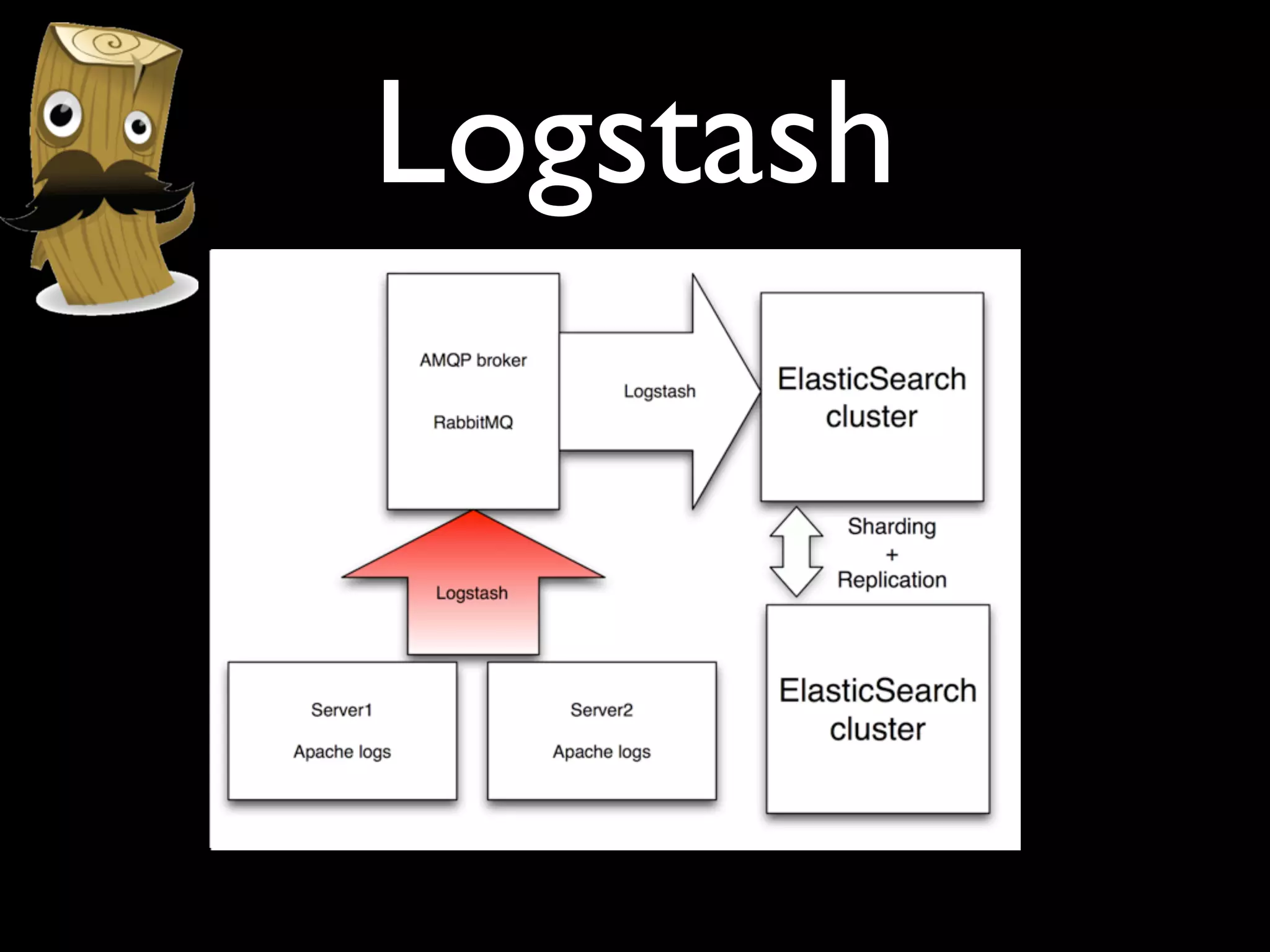

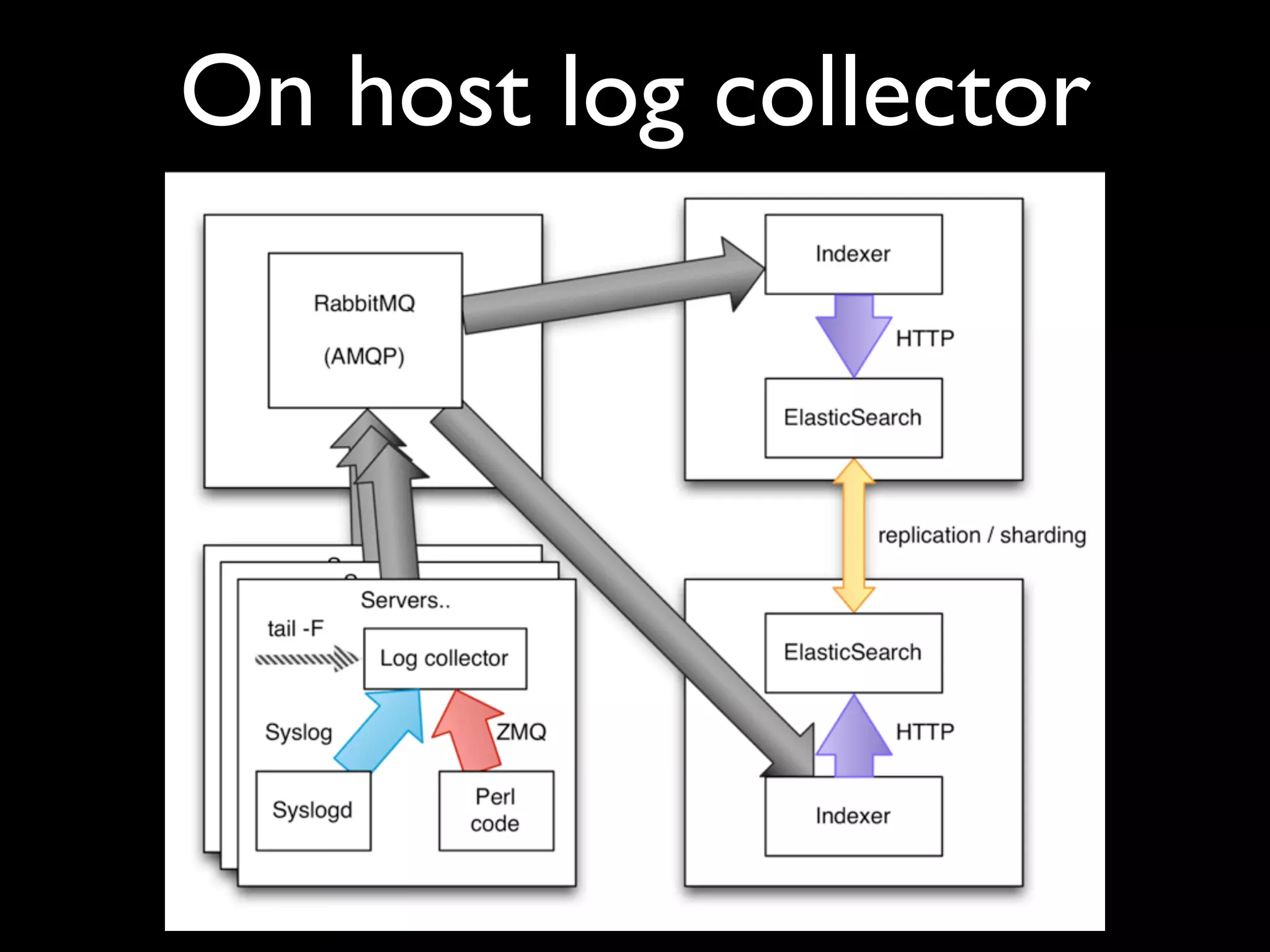

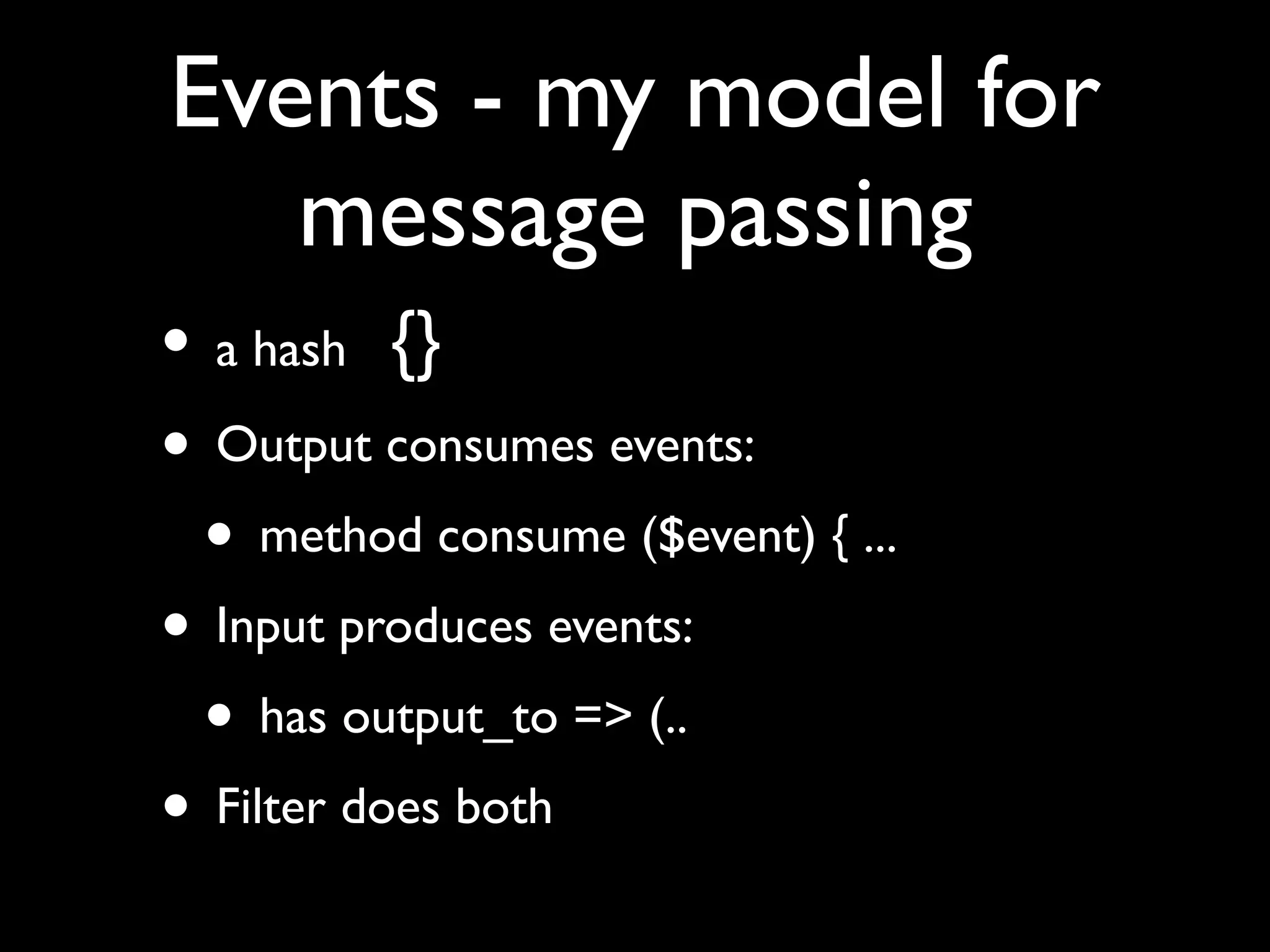

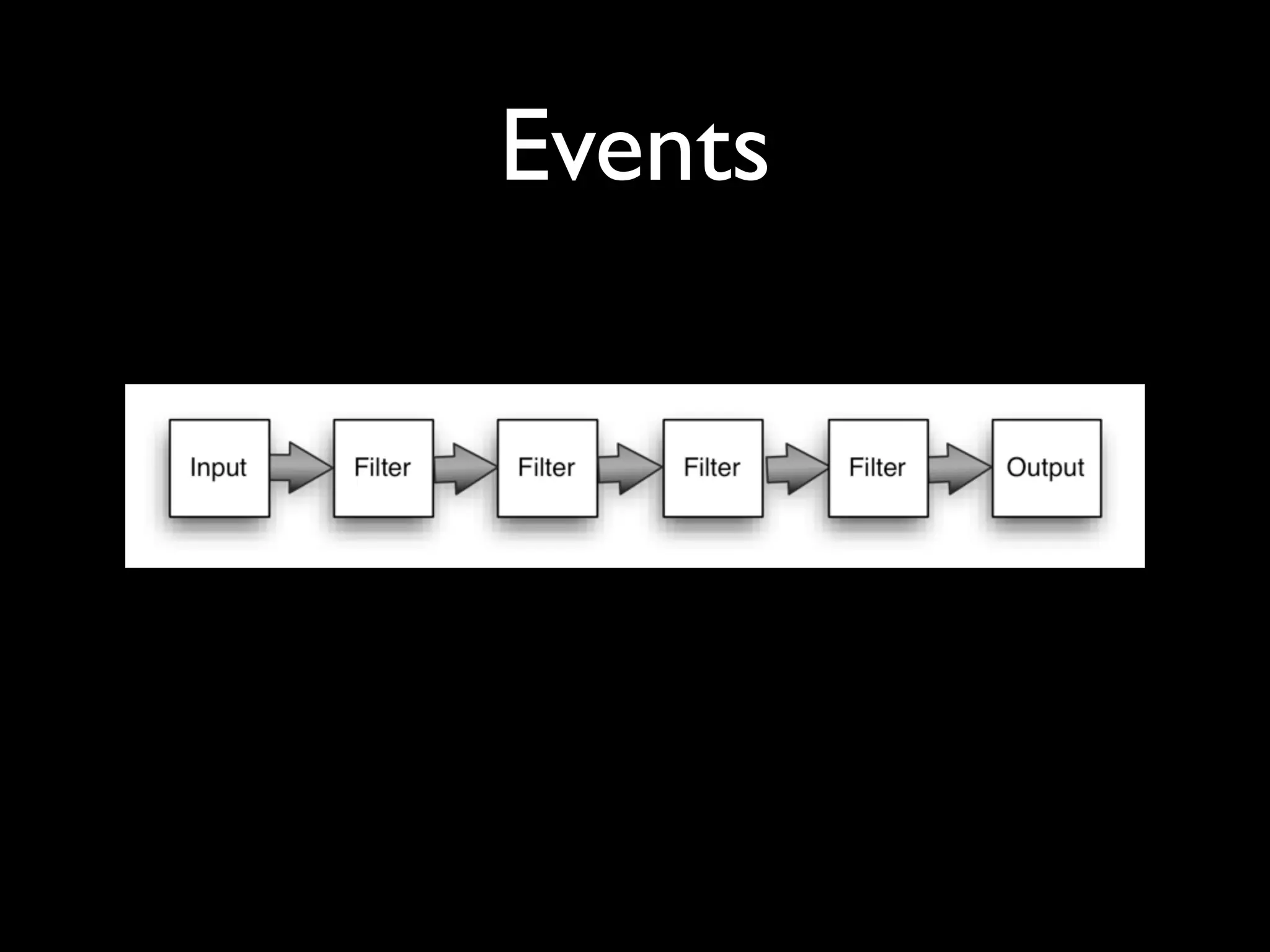

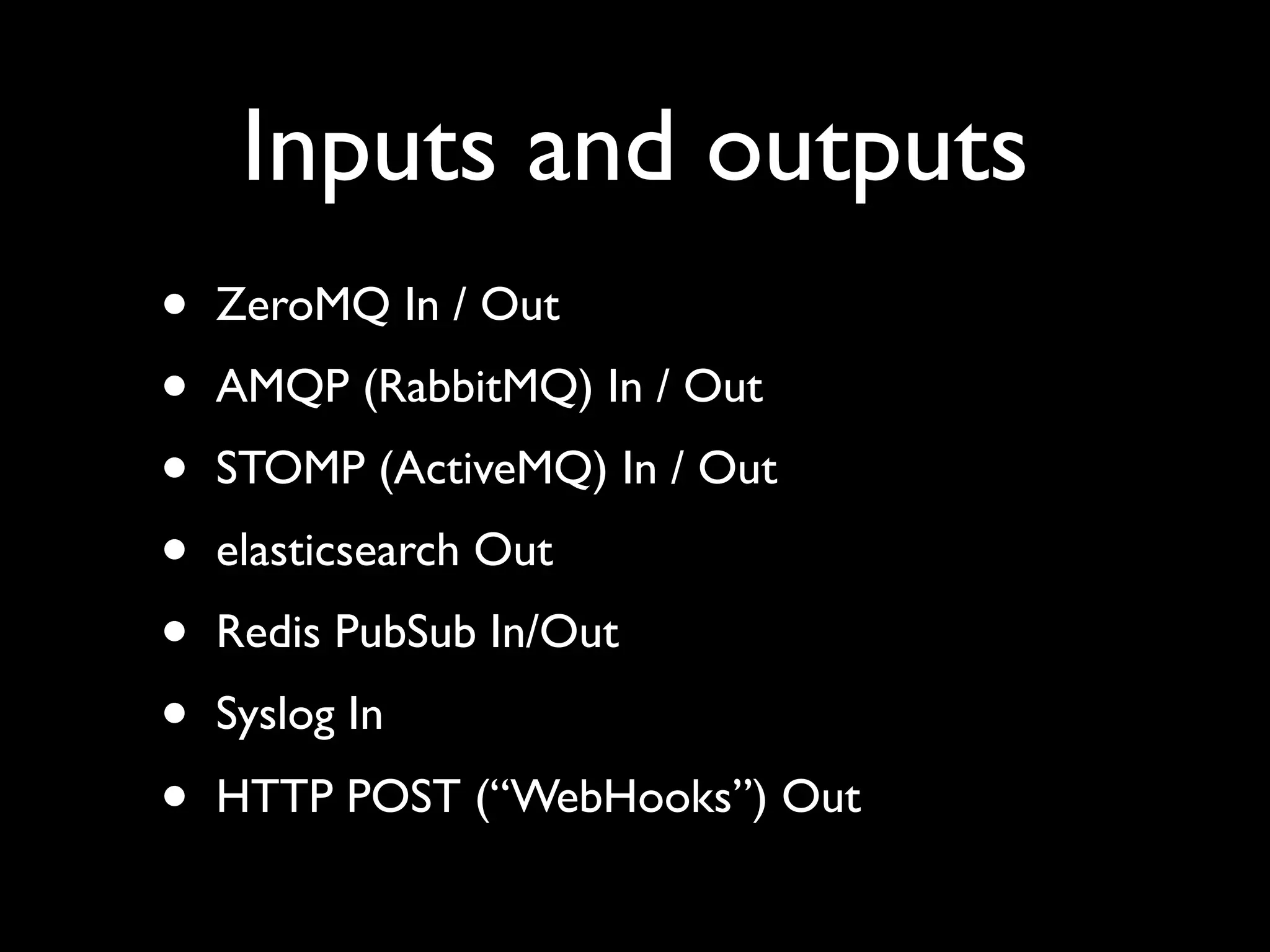

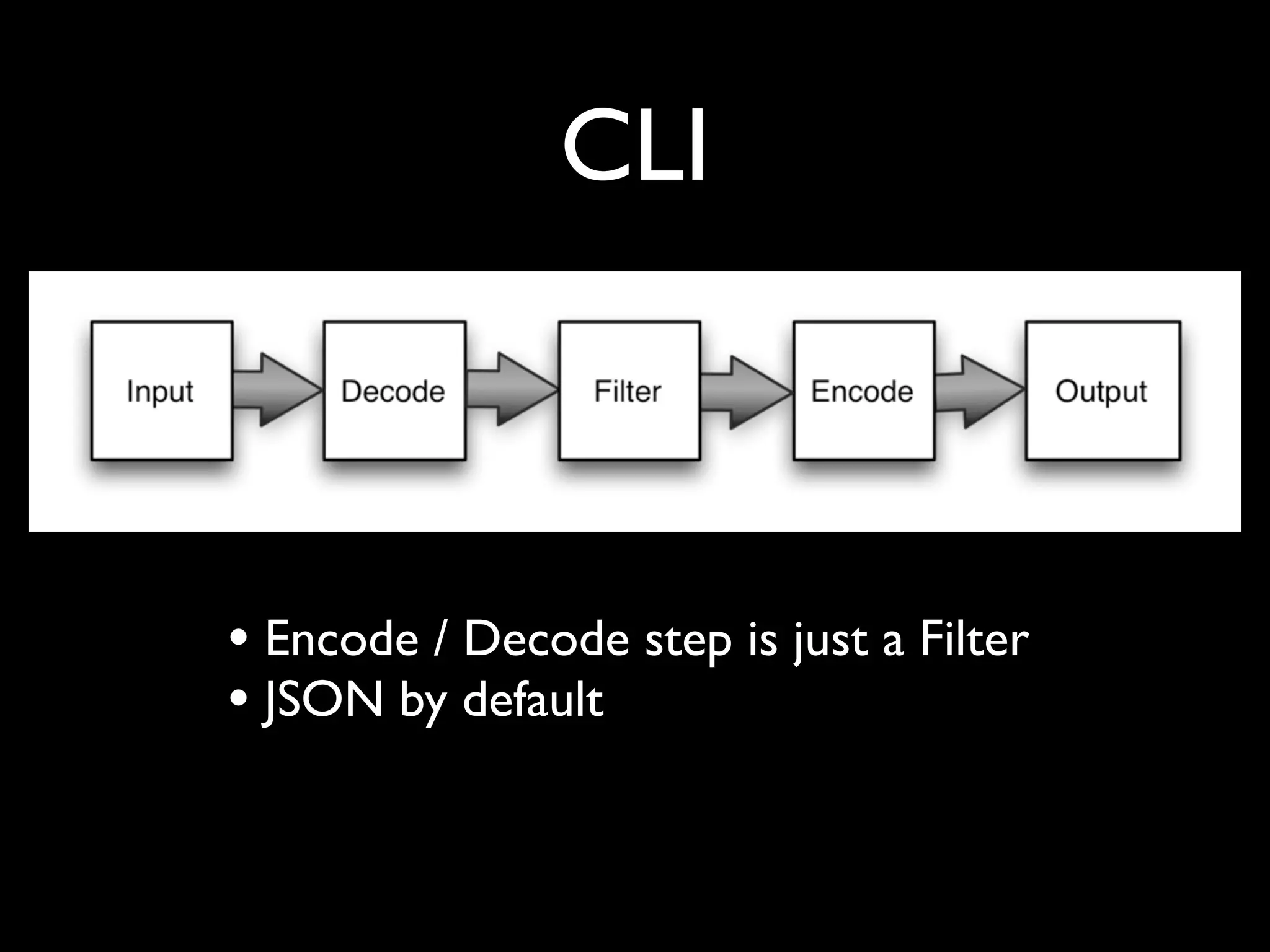

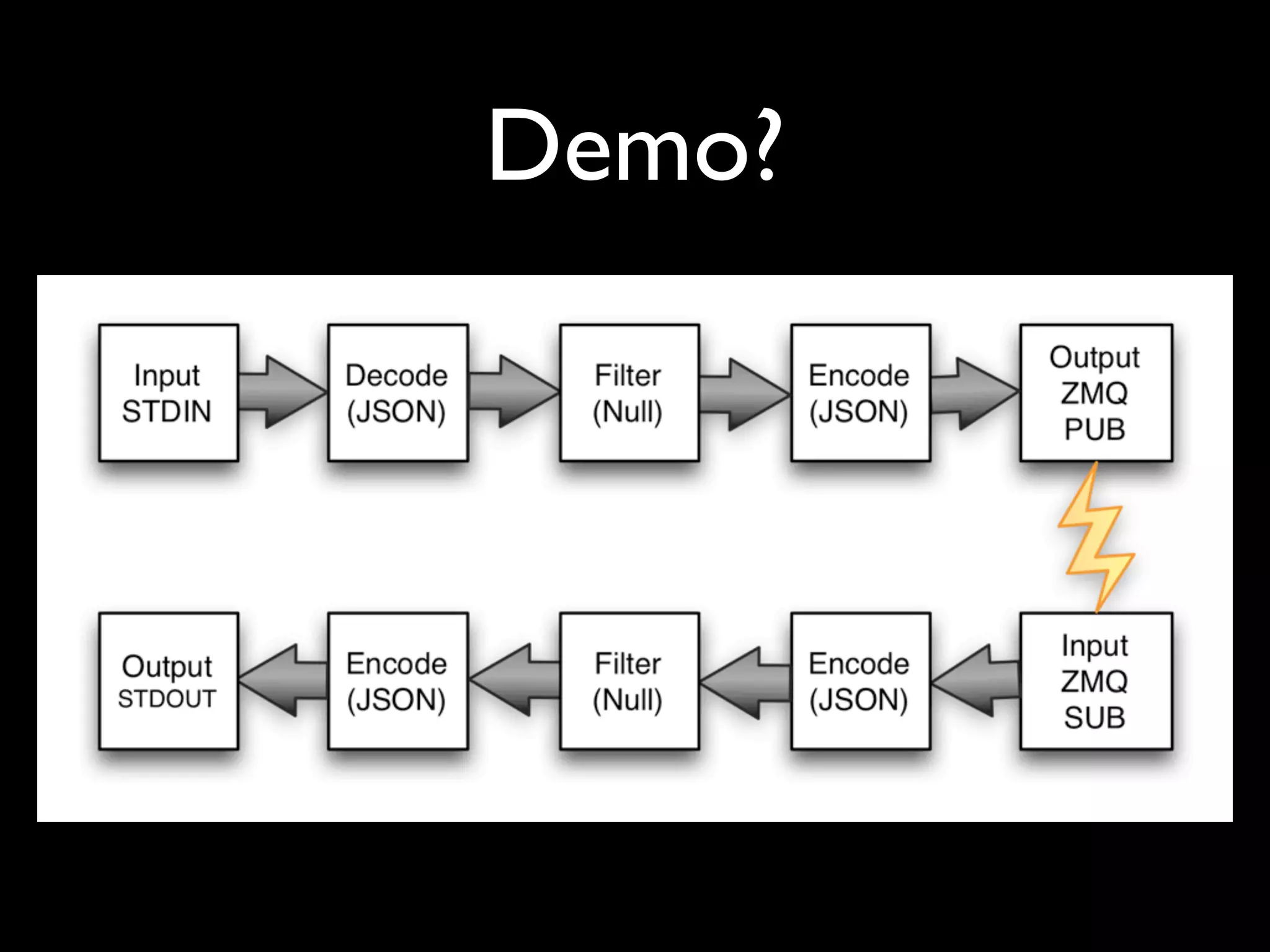

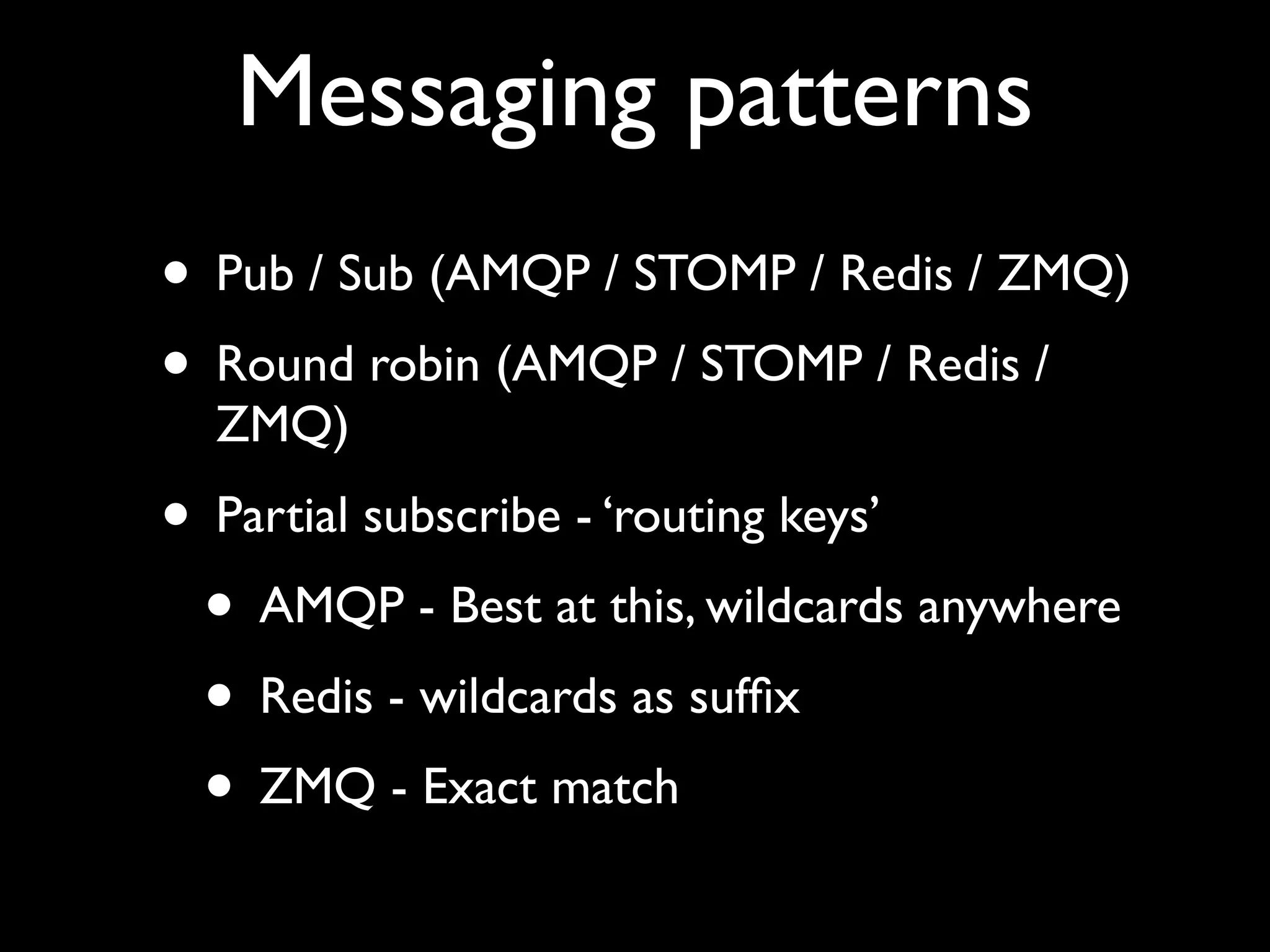

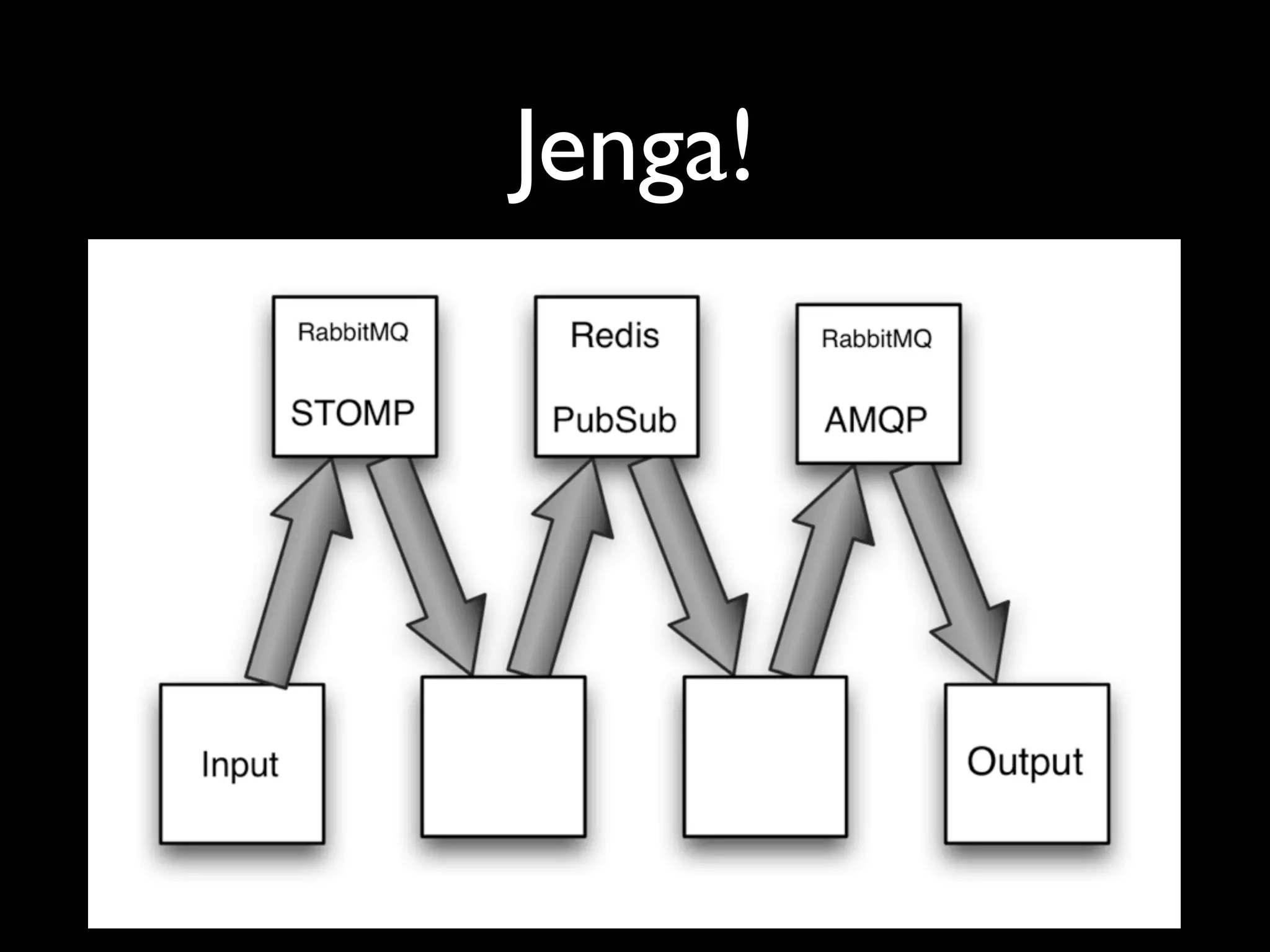

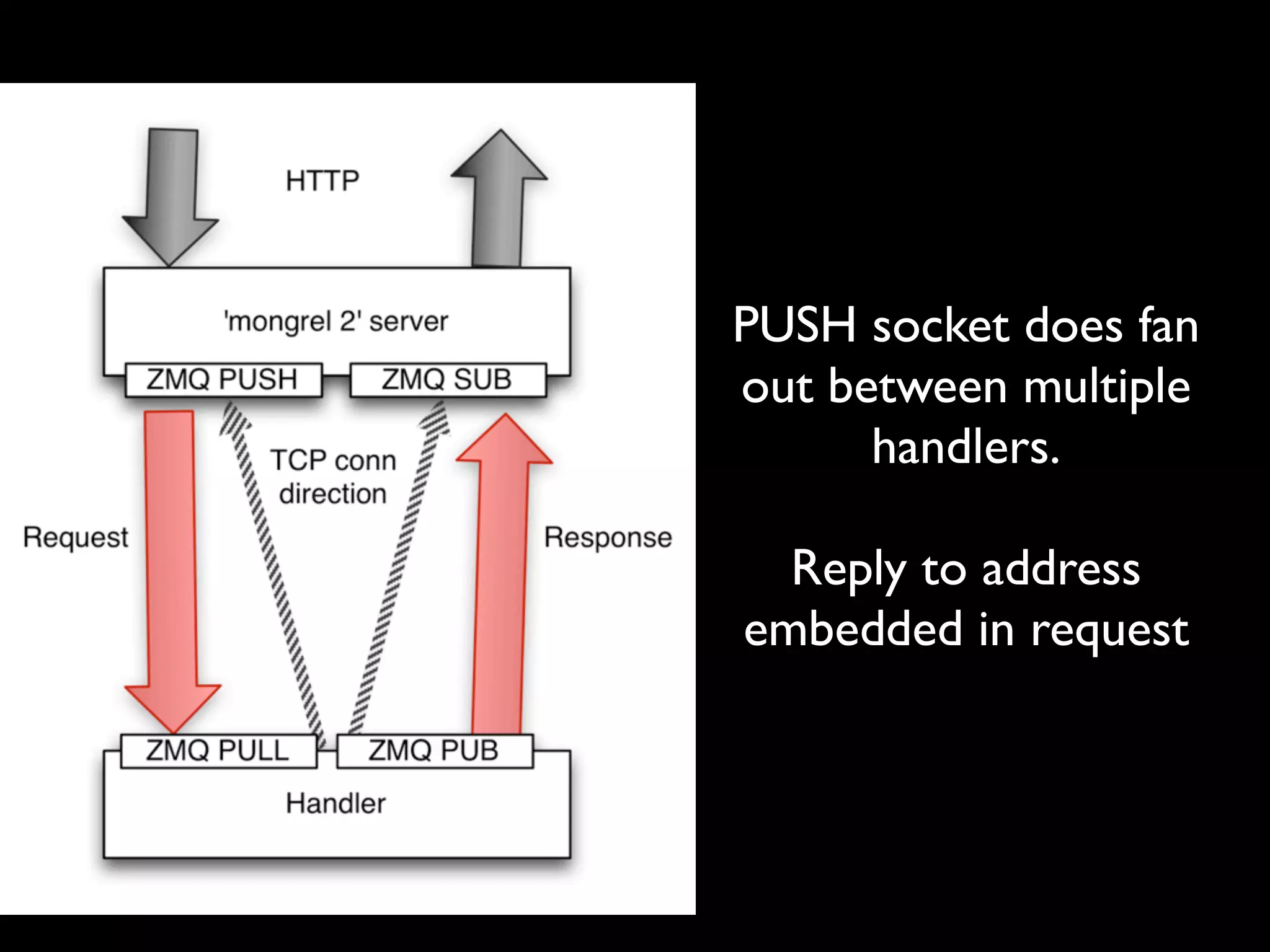

The document introduces Message::Passing, a new Perl library designed to improve interoperability between logging and messaging systems. It outlines the shortcomings of existing logging tools such as syslog and logstash, and proposes a simpler solution that combines structured application logging with ZeroMQ for efficient message passing. The framework supports a range of messaging patterns and has been validated through production use by other developers.