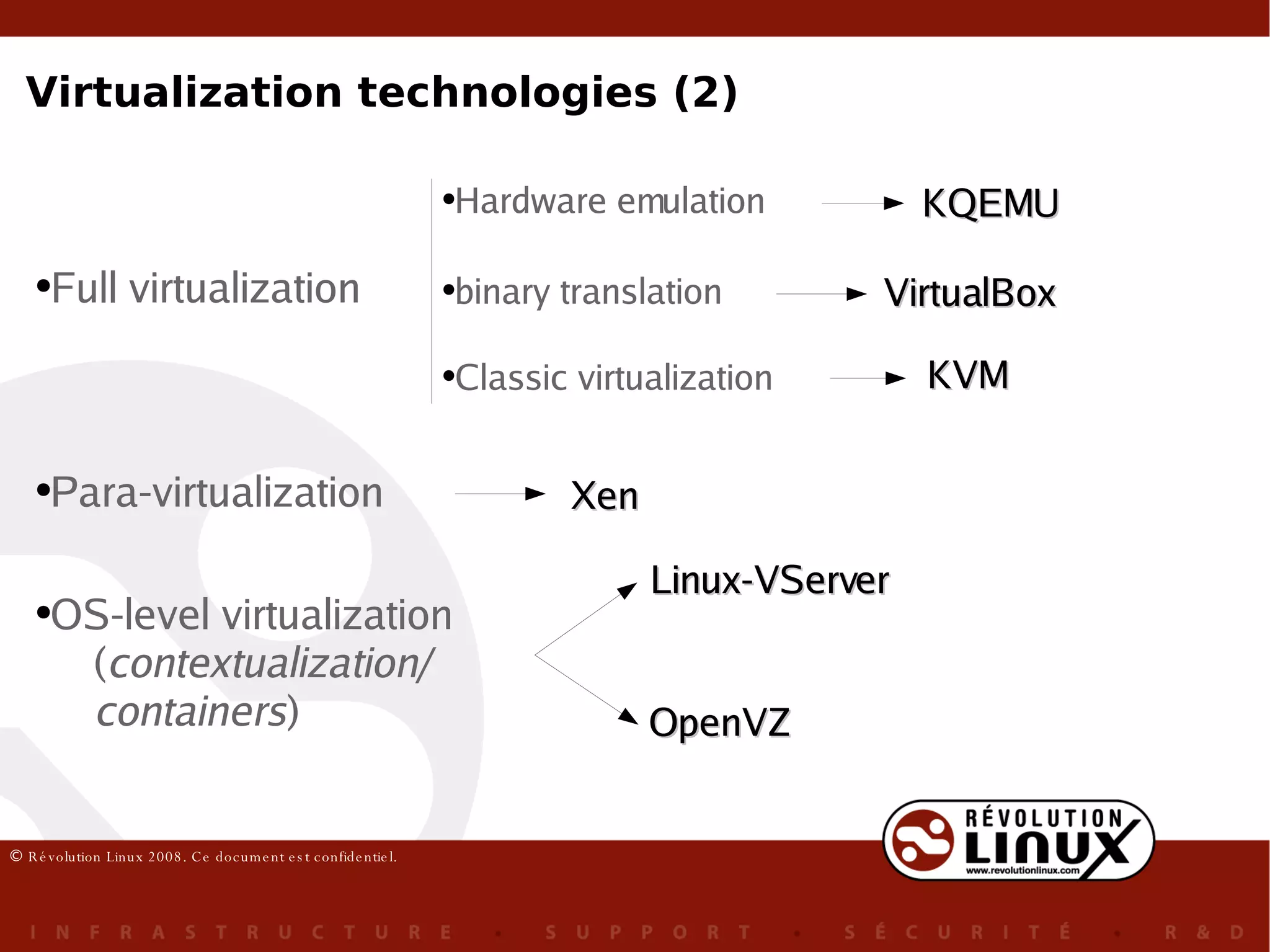

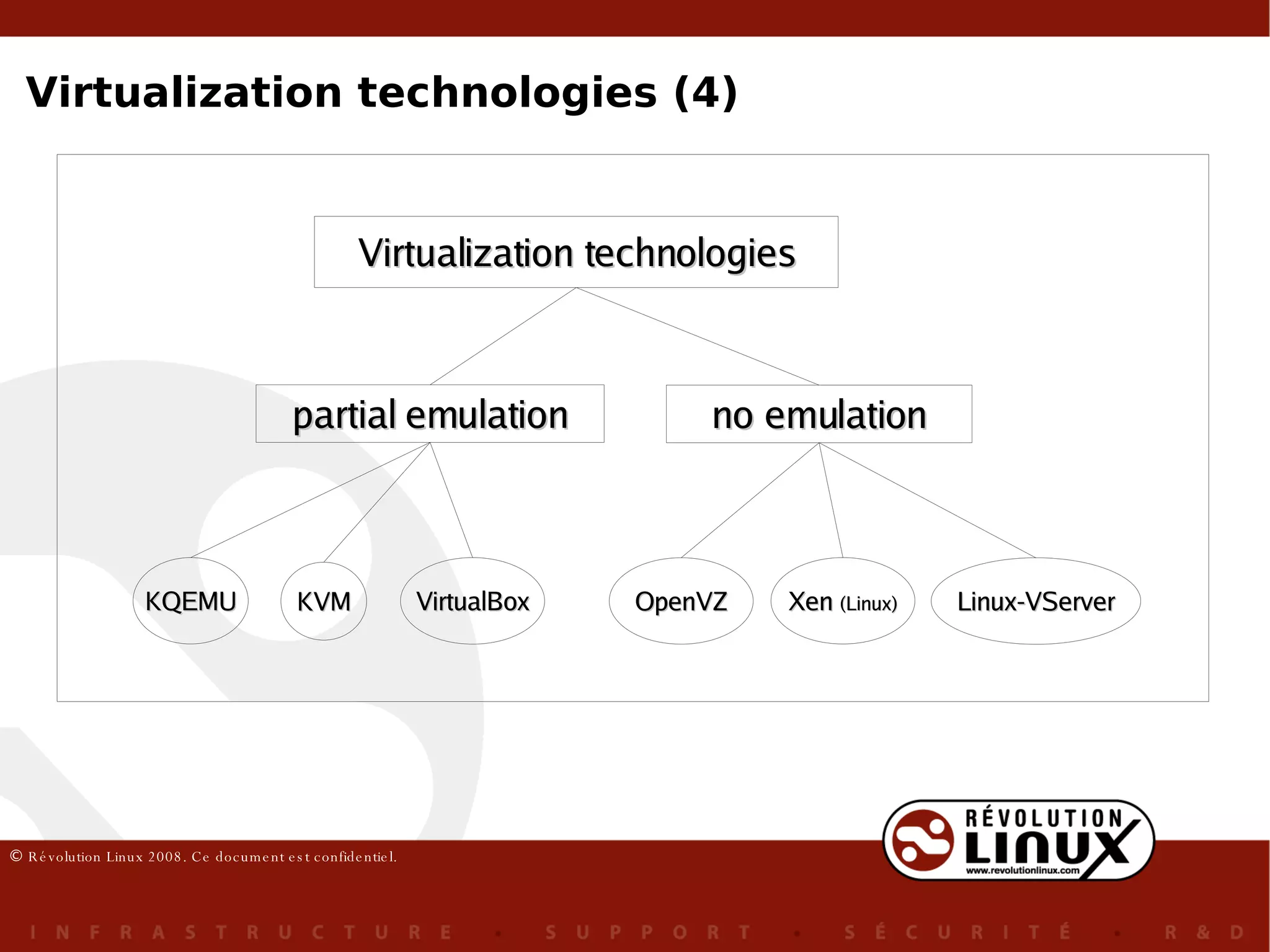

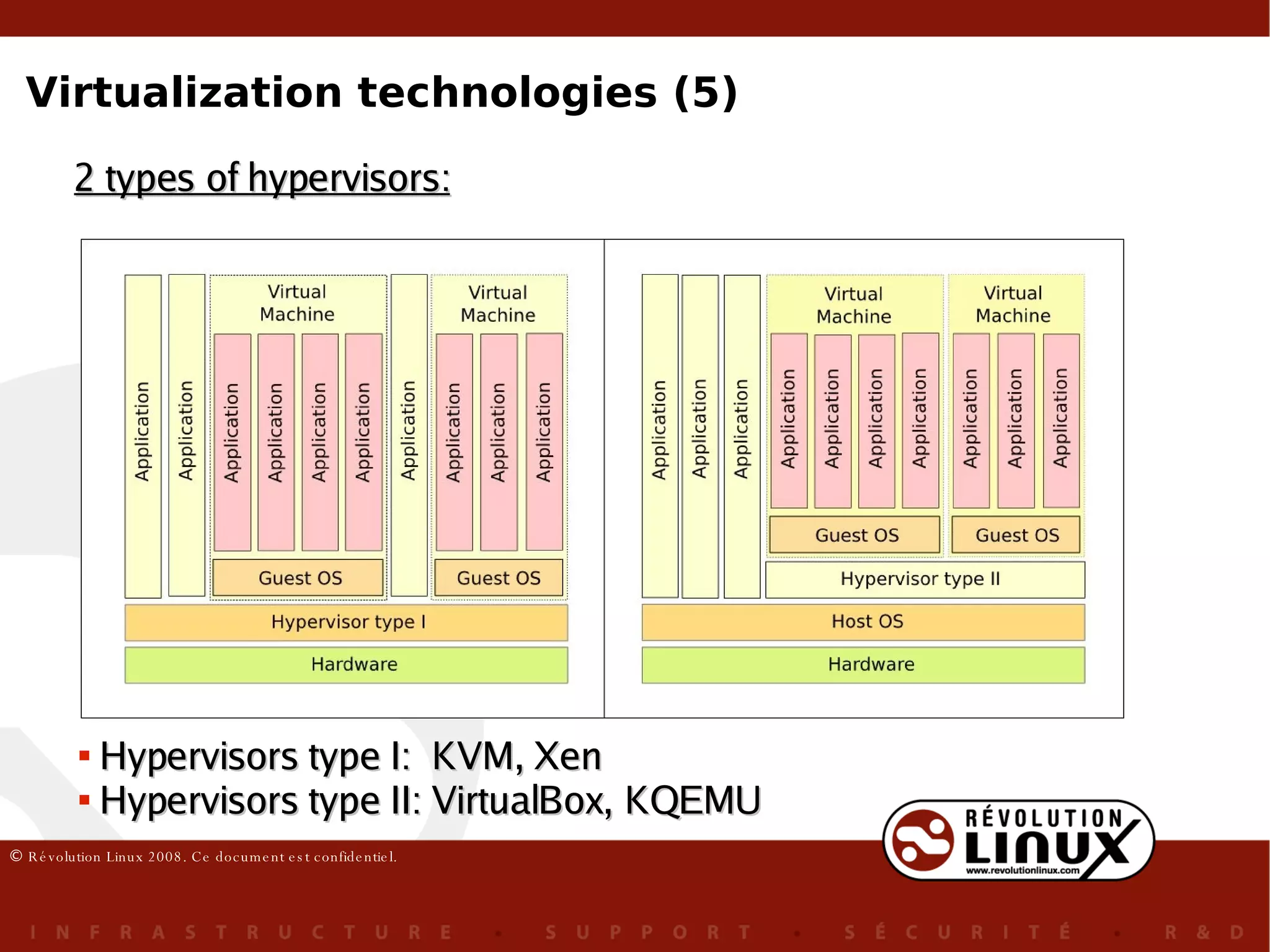

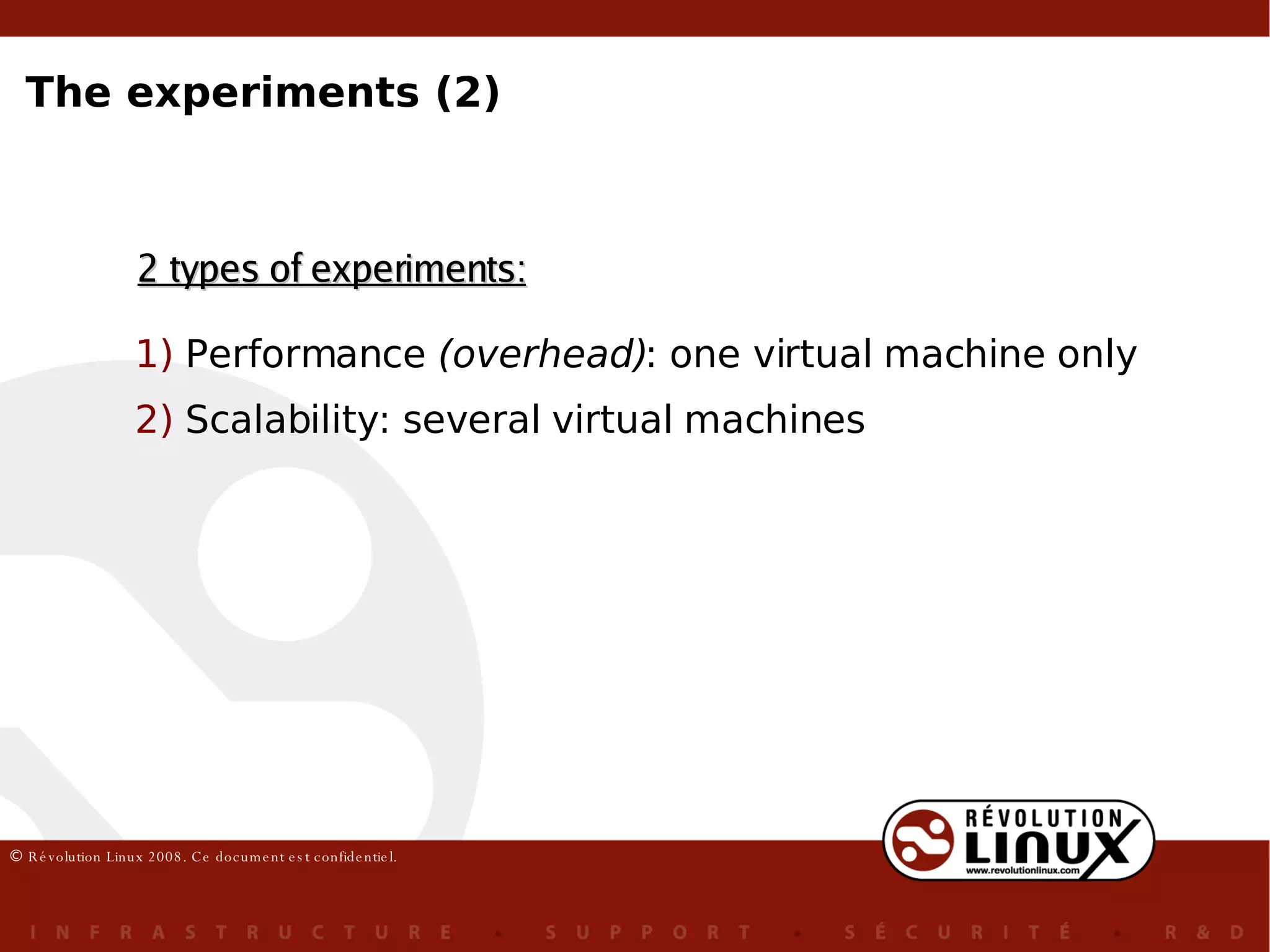

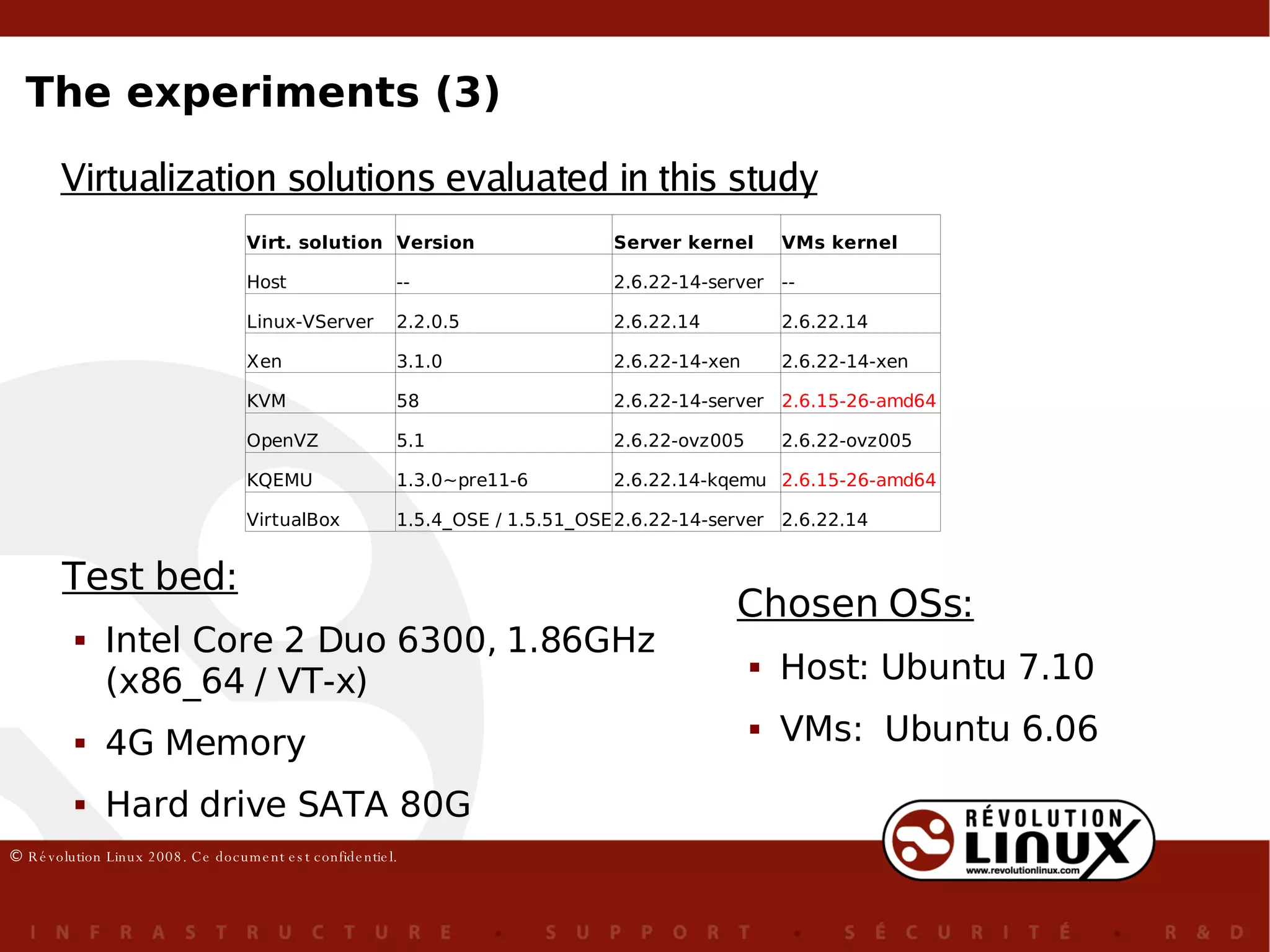

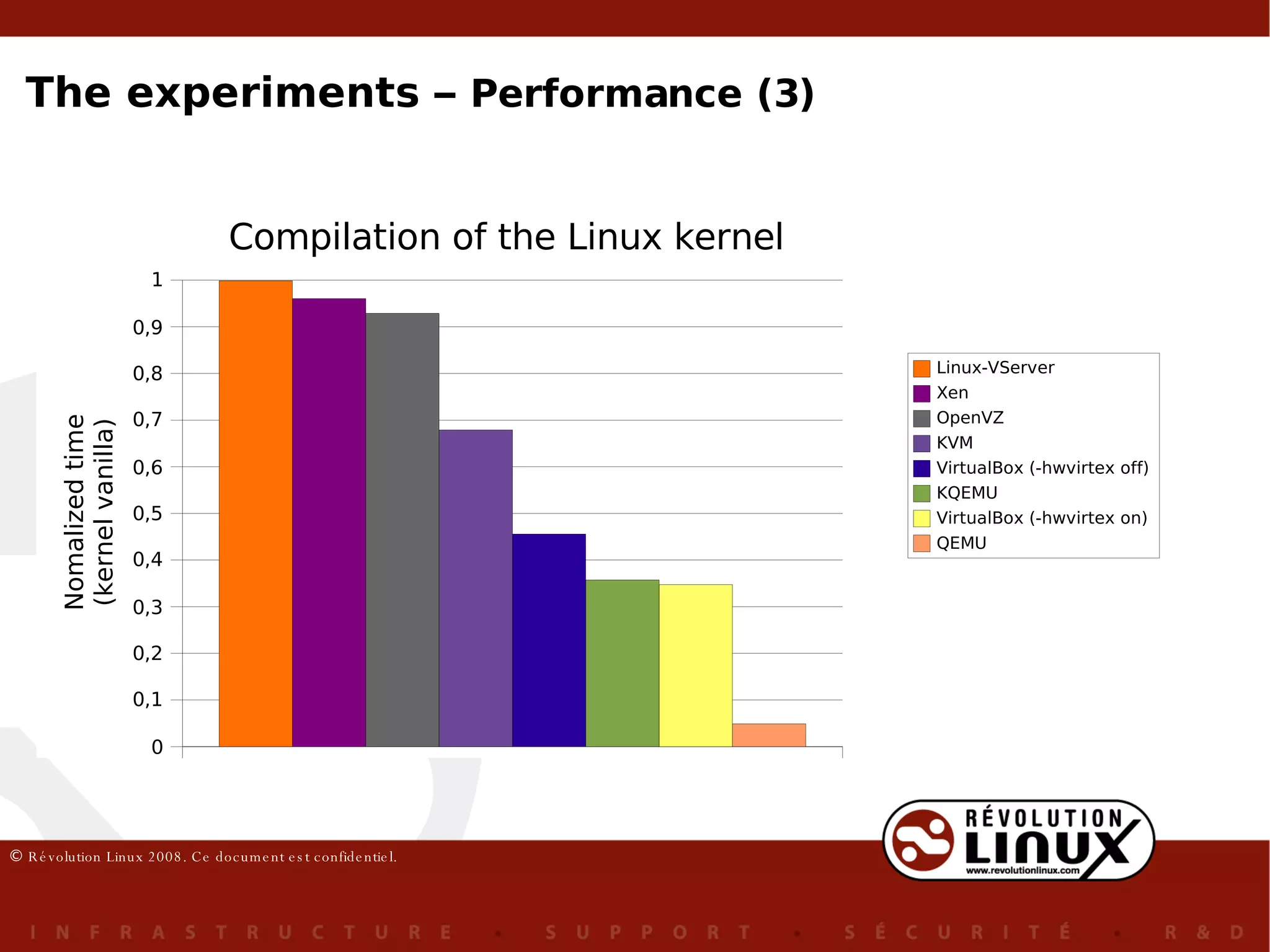

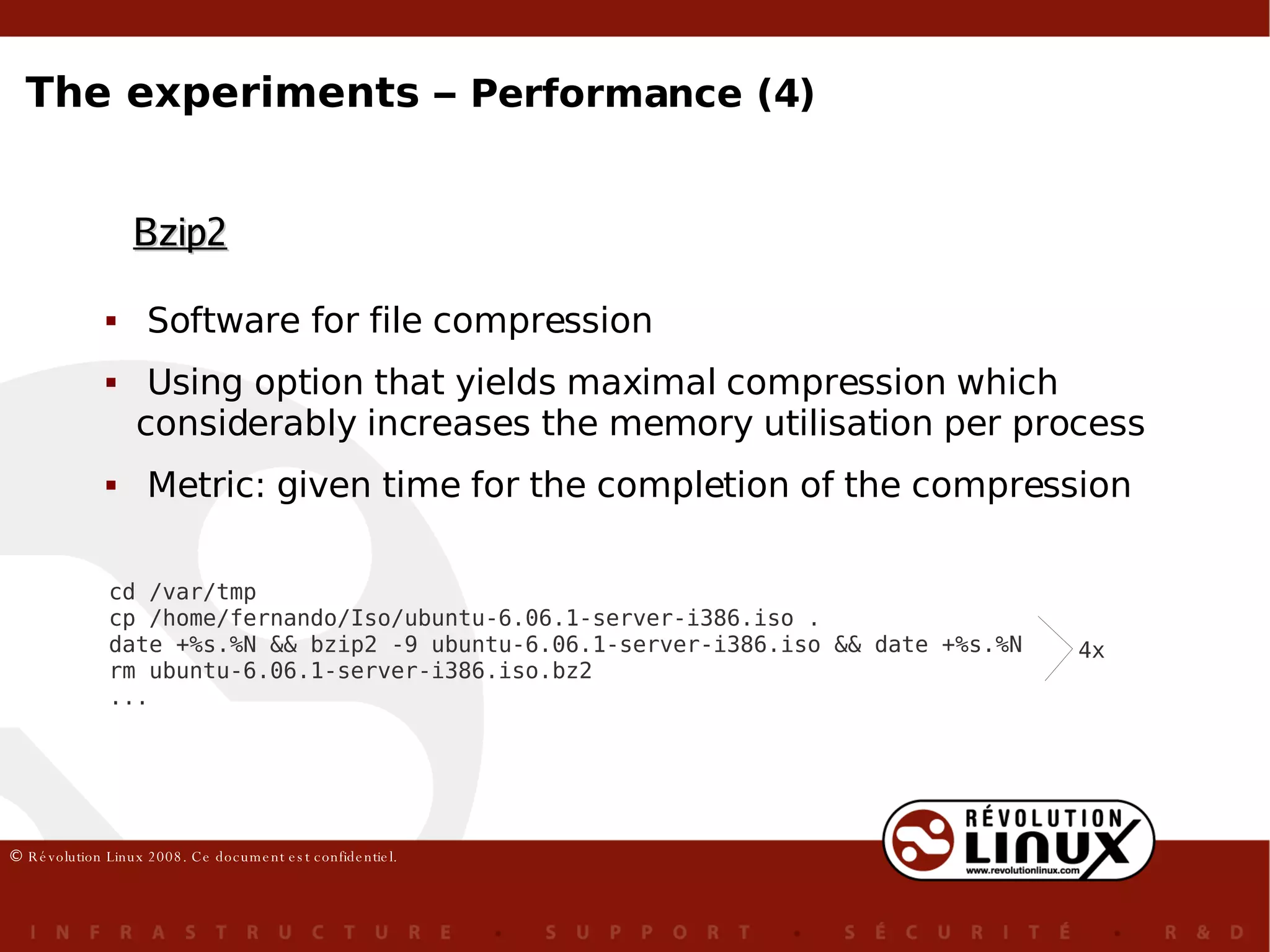

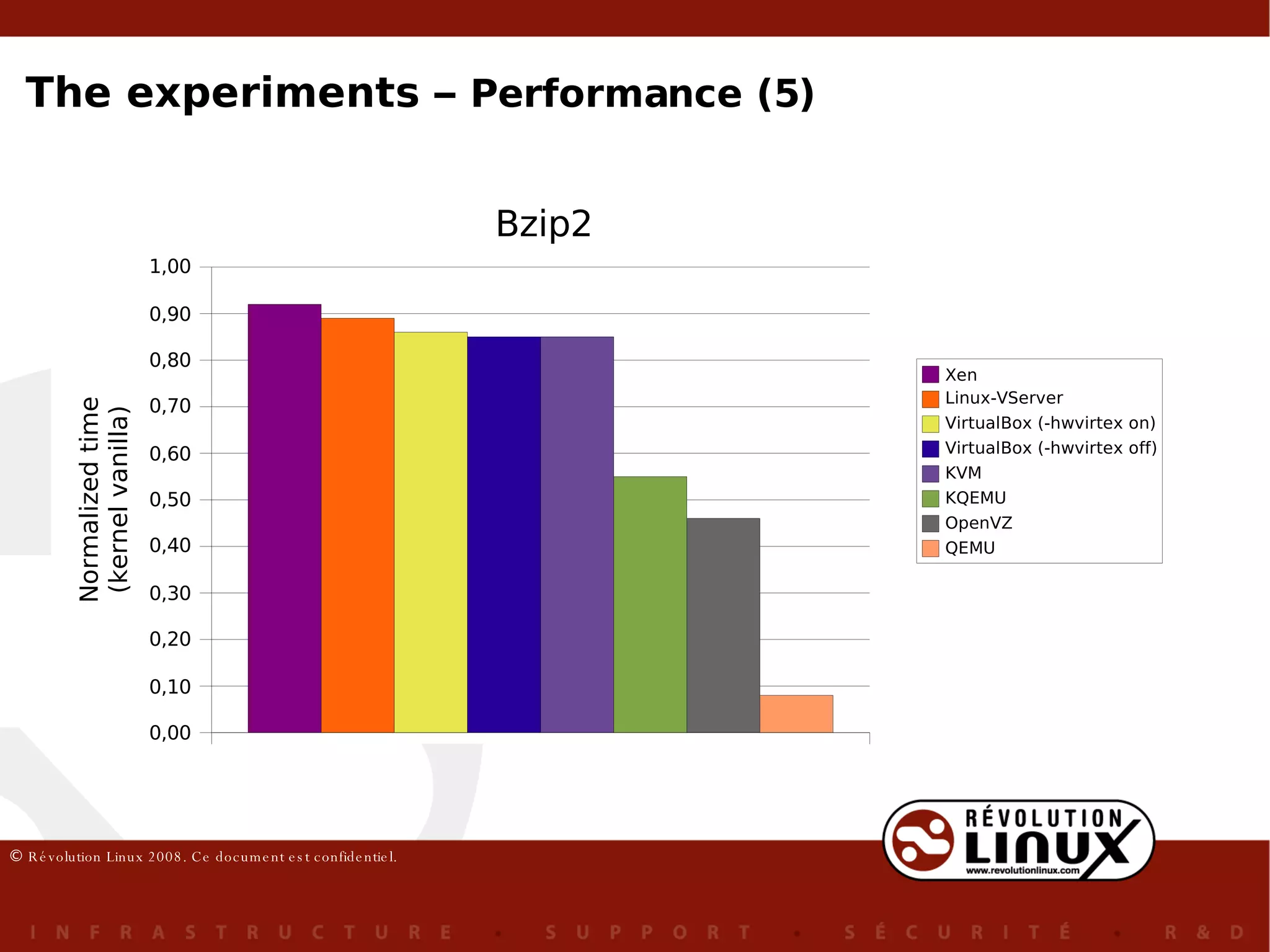

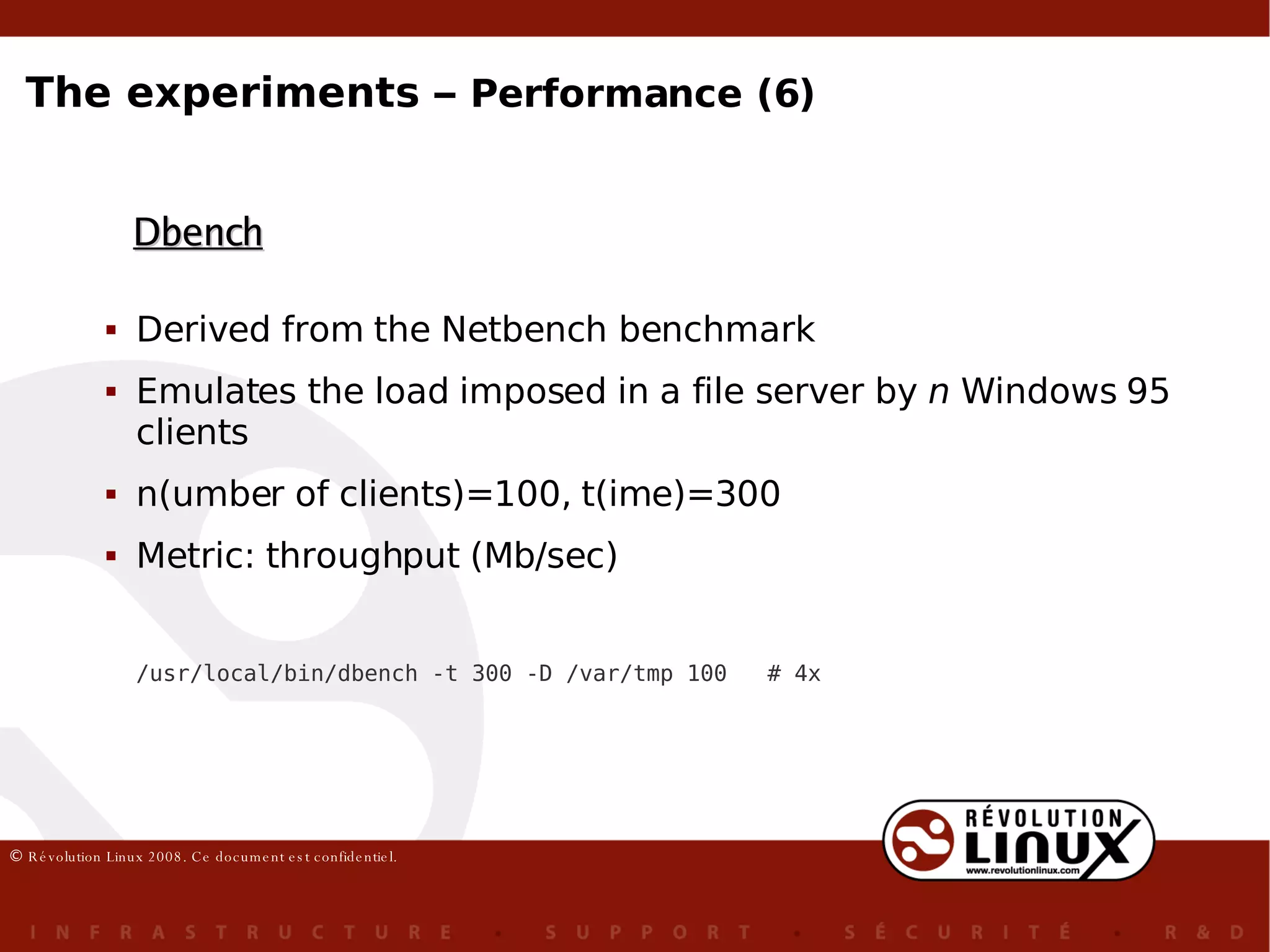

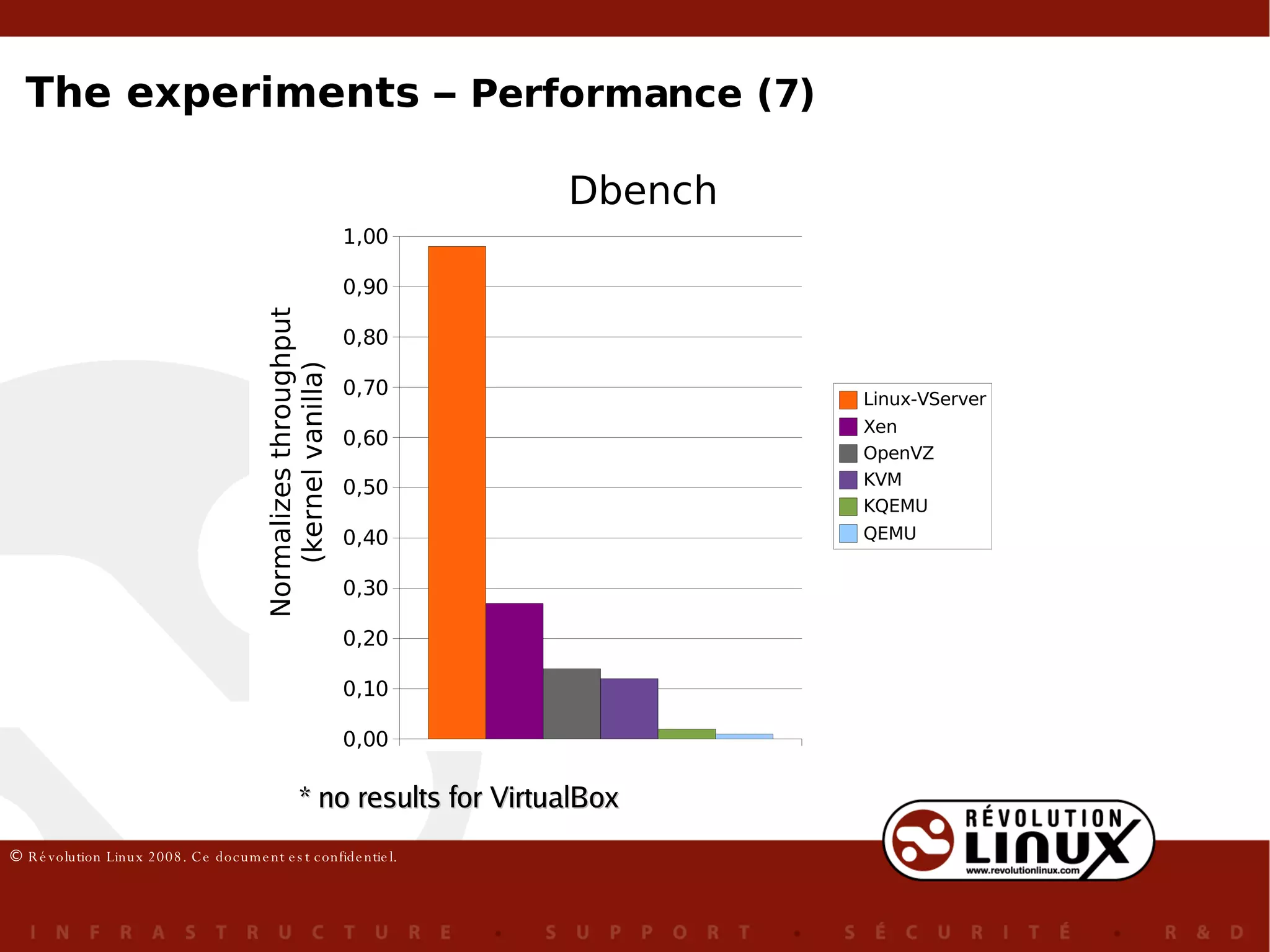

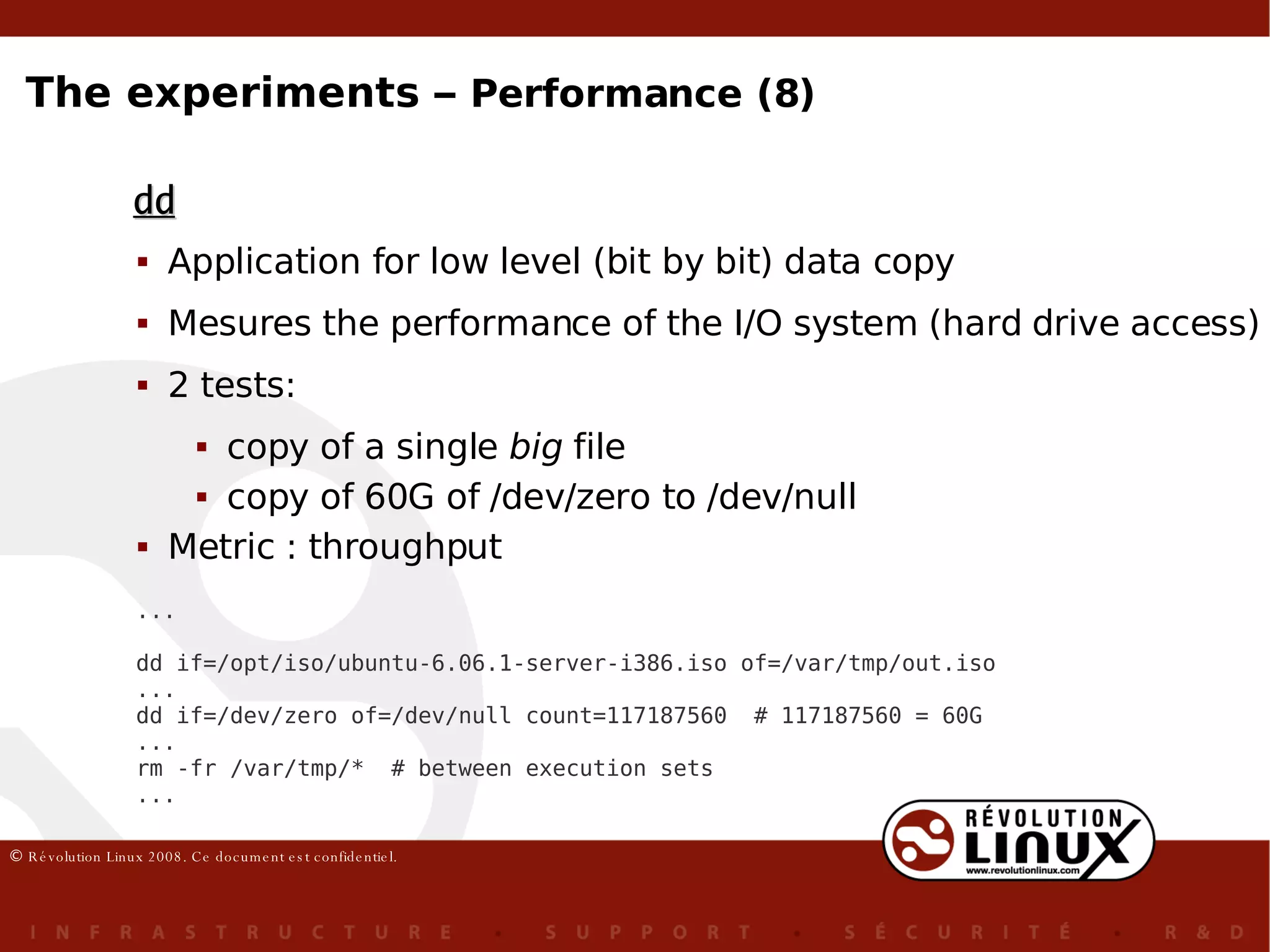

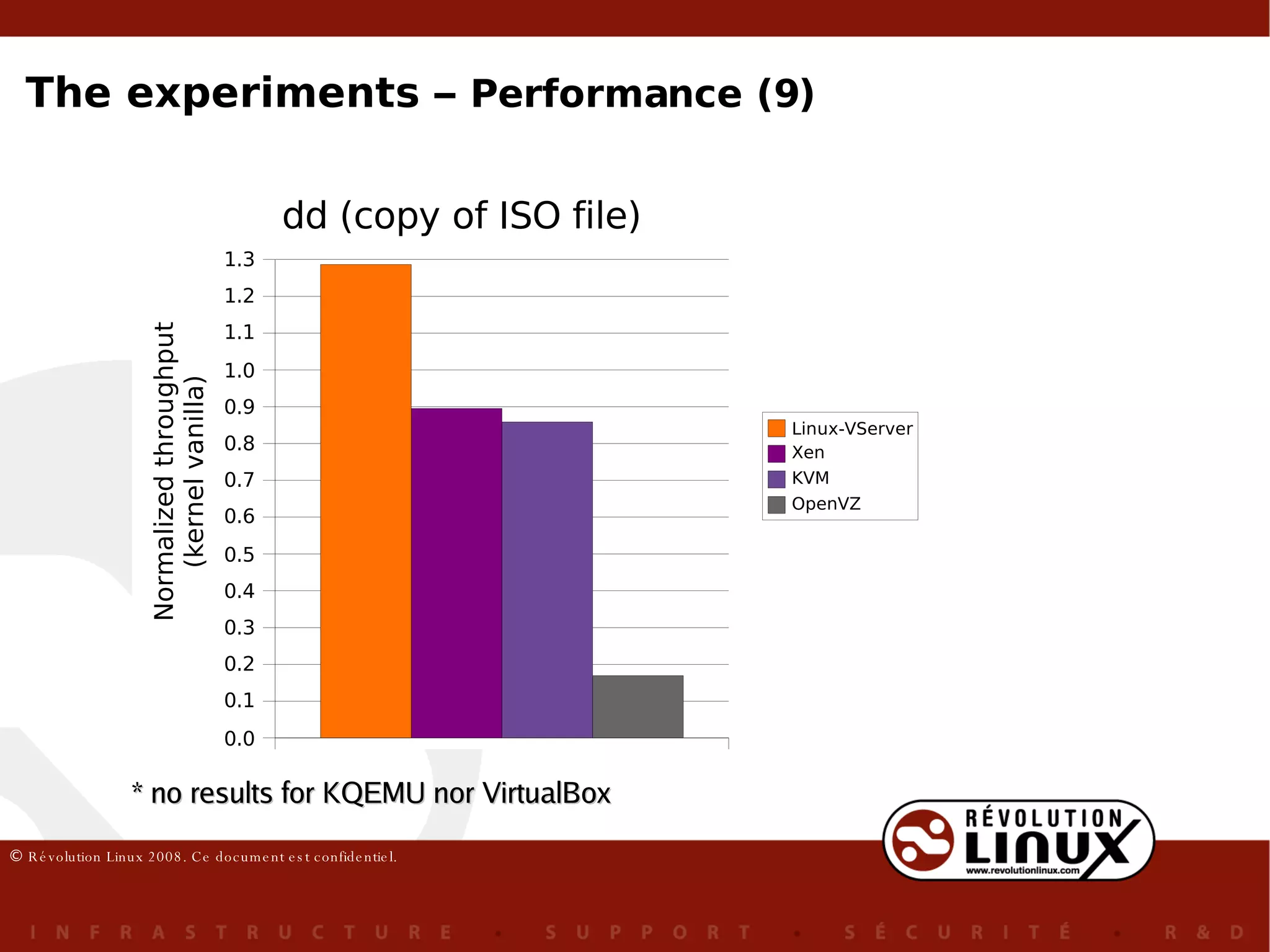

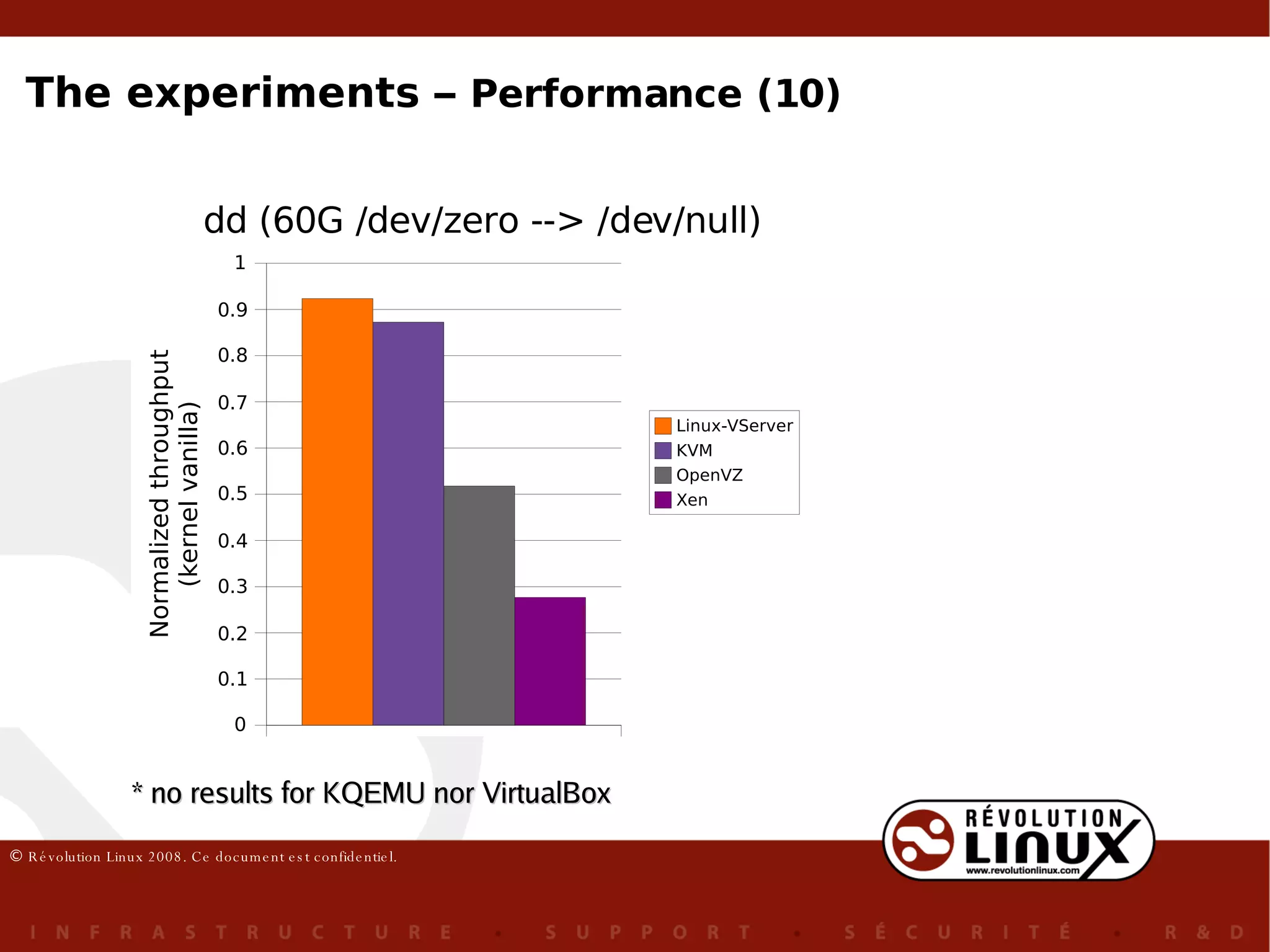

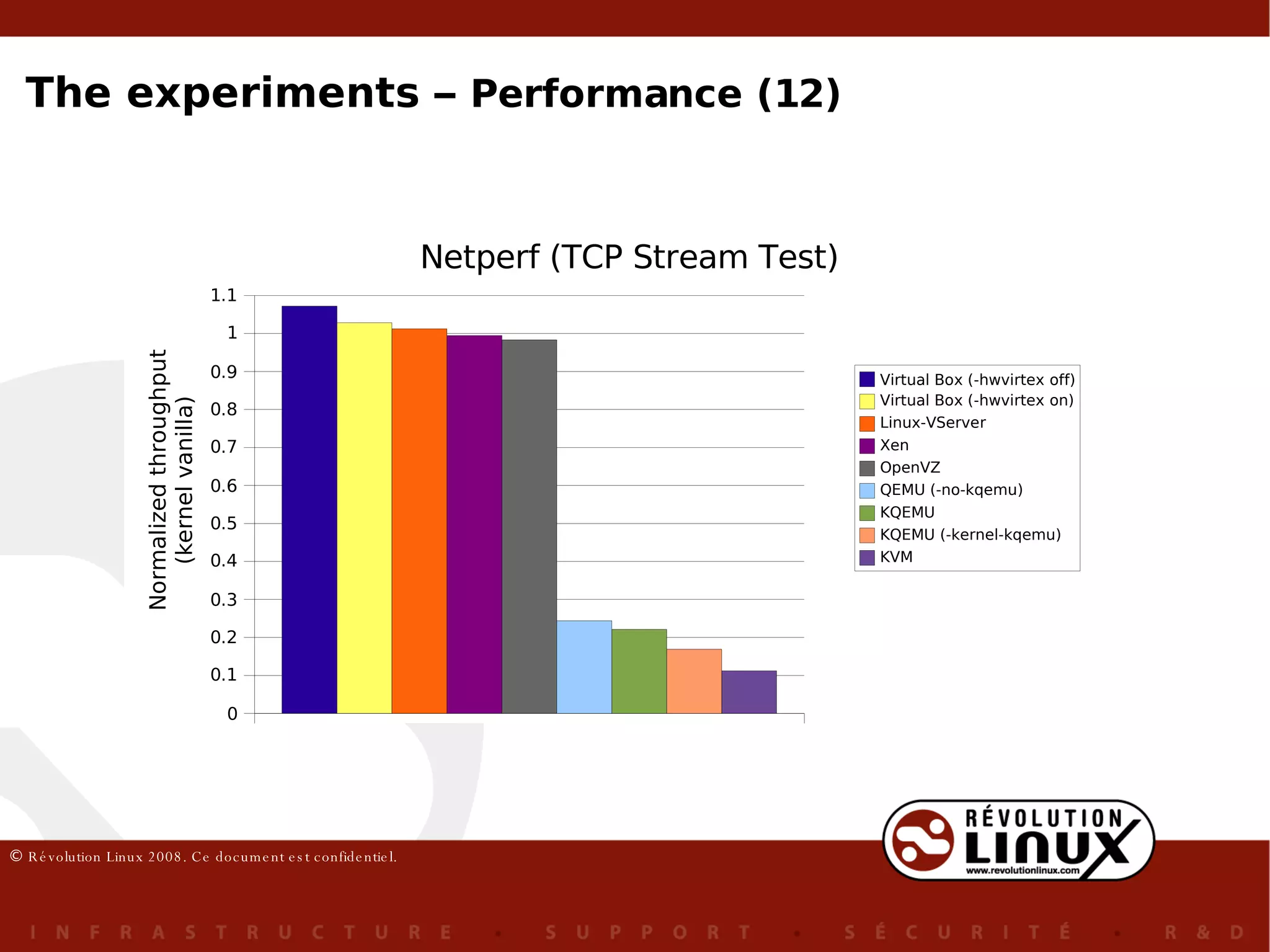

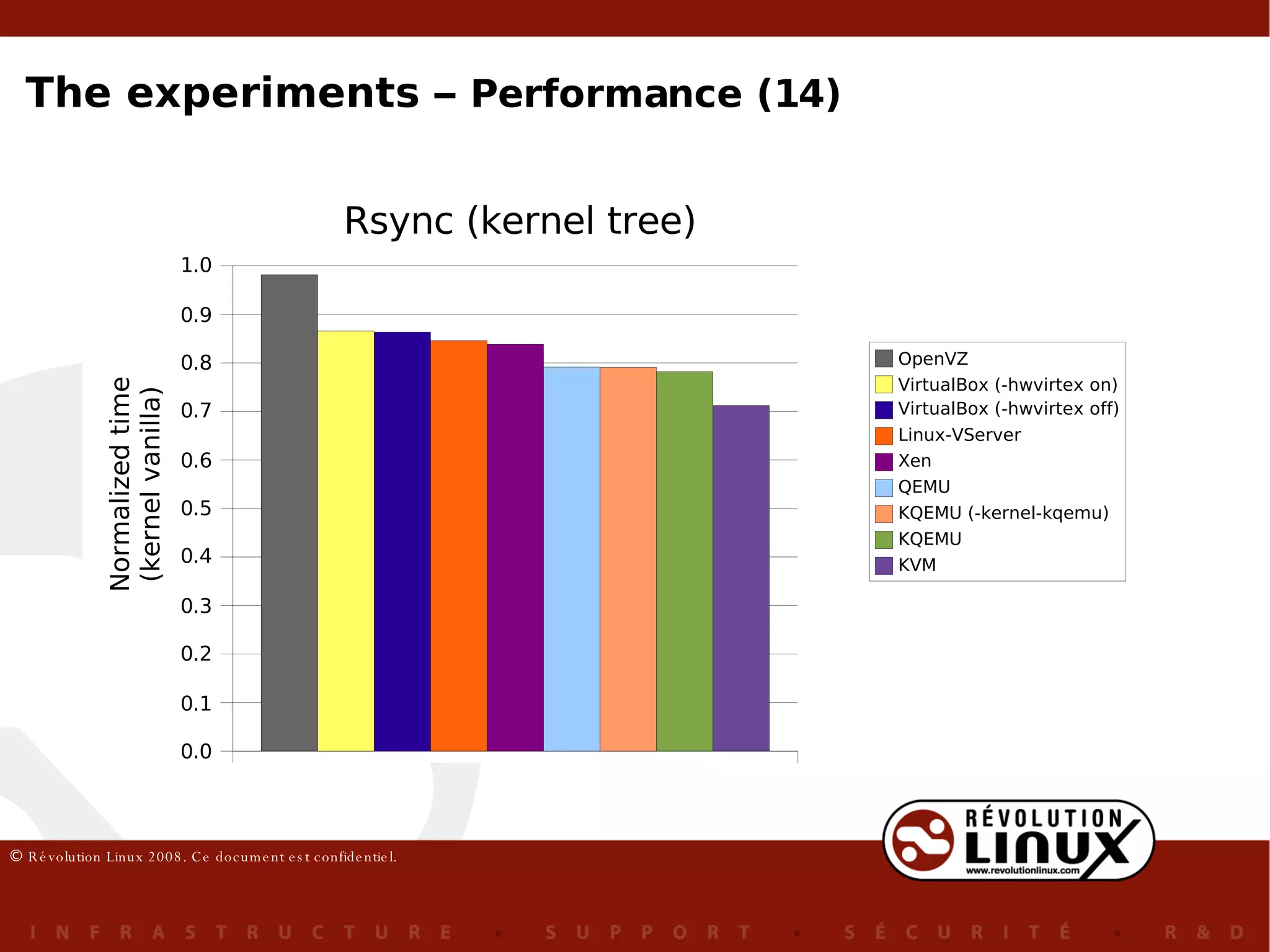

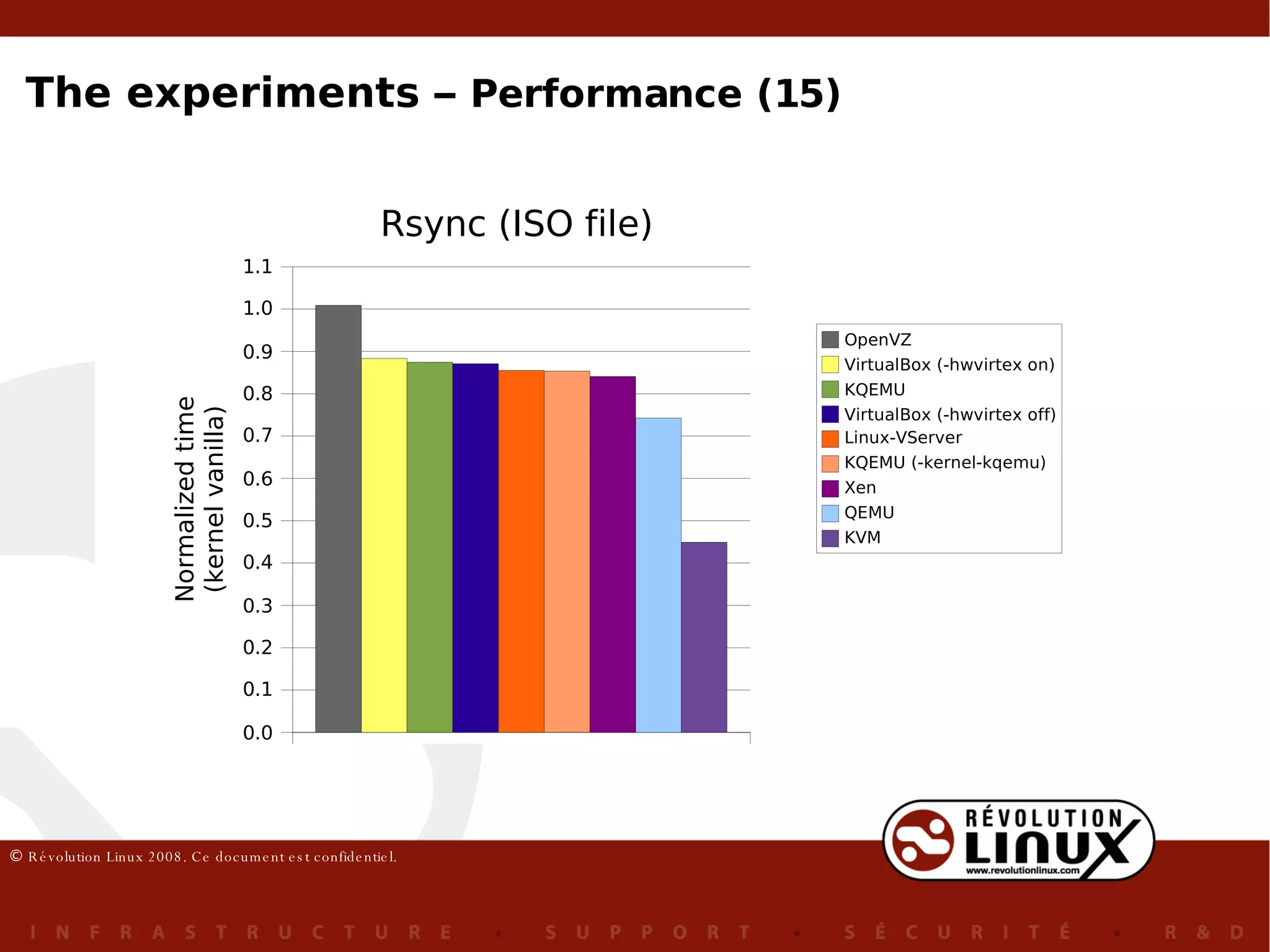

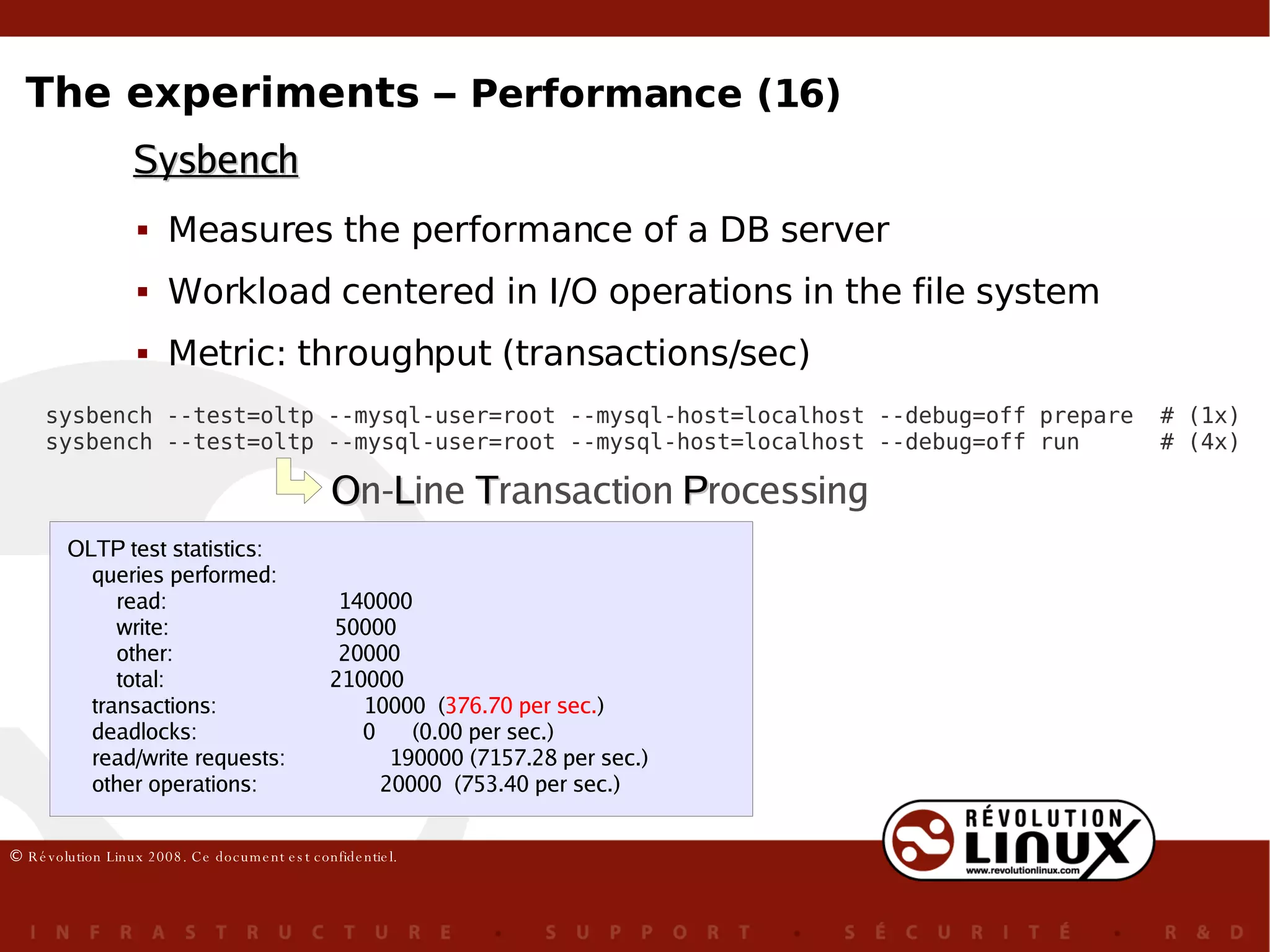

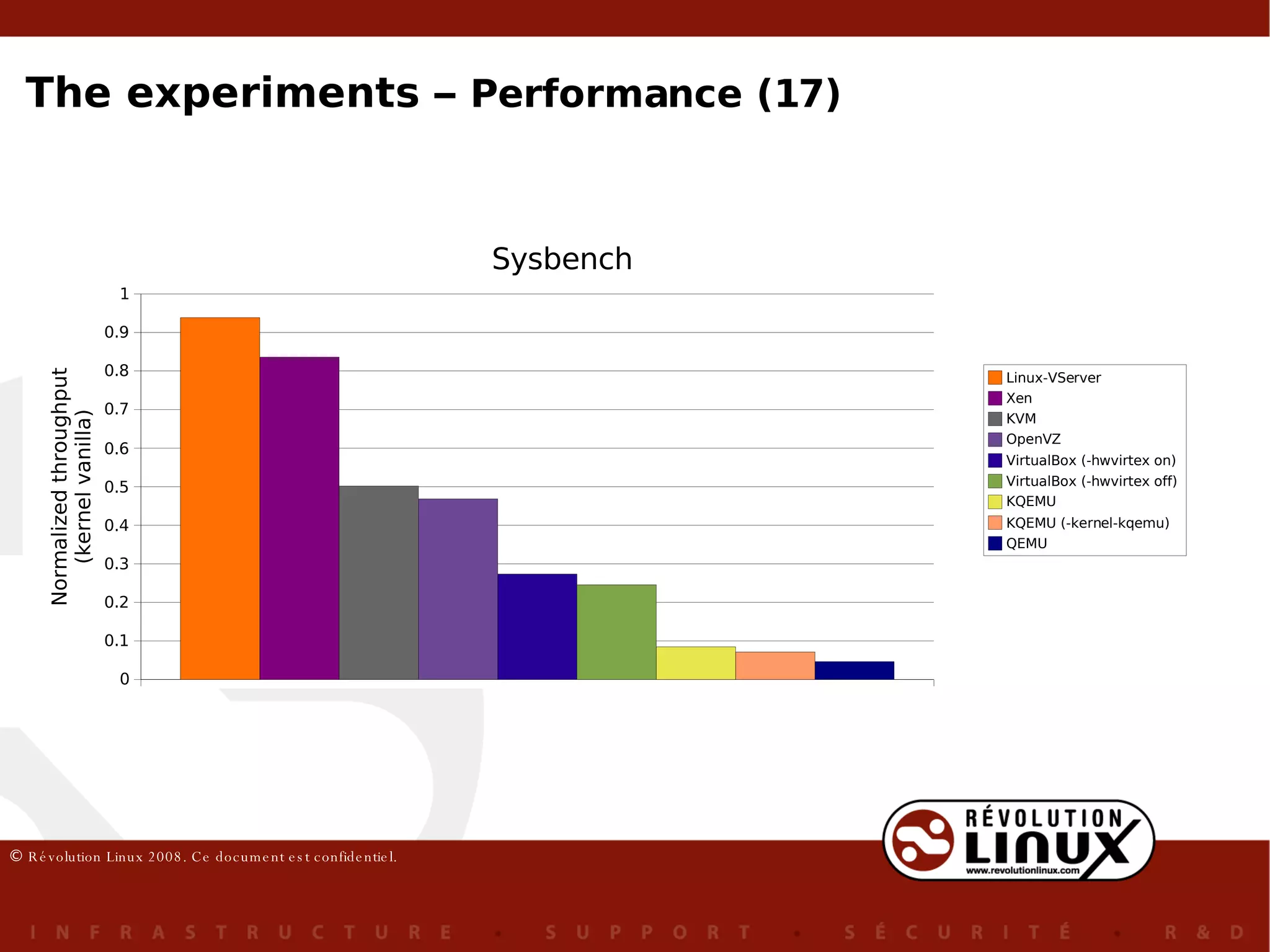

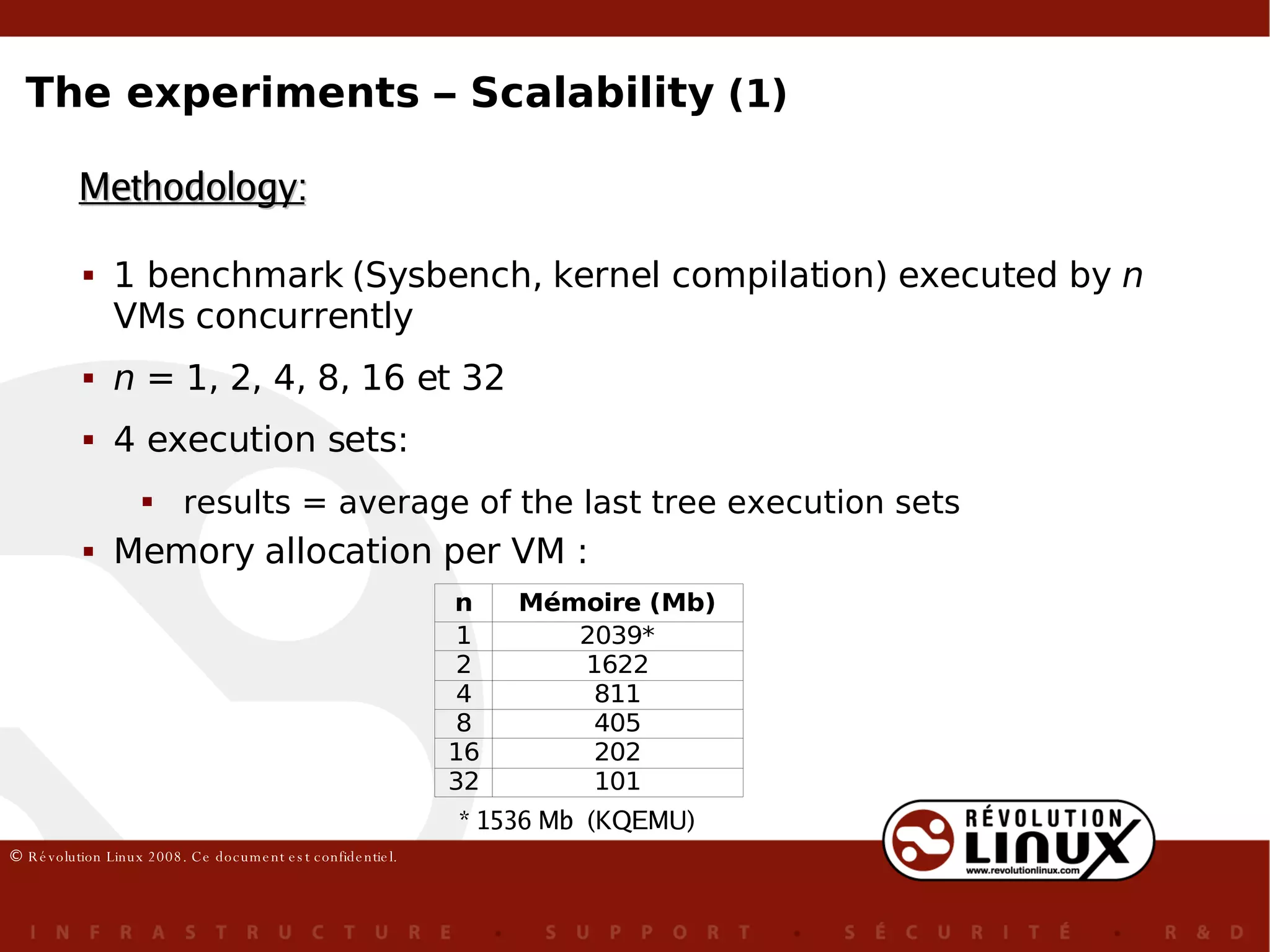

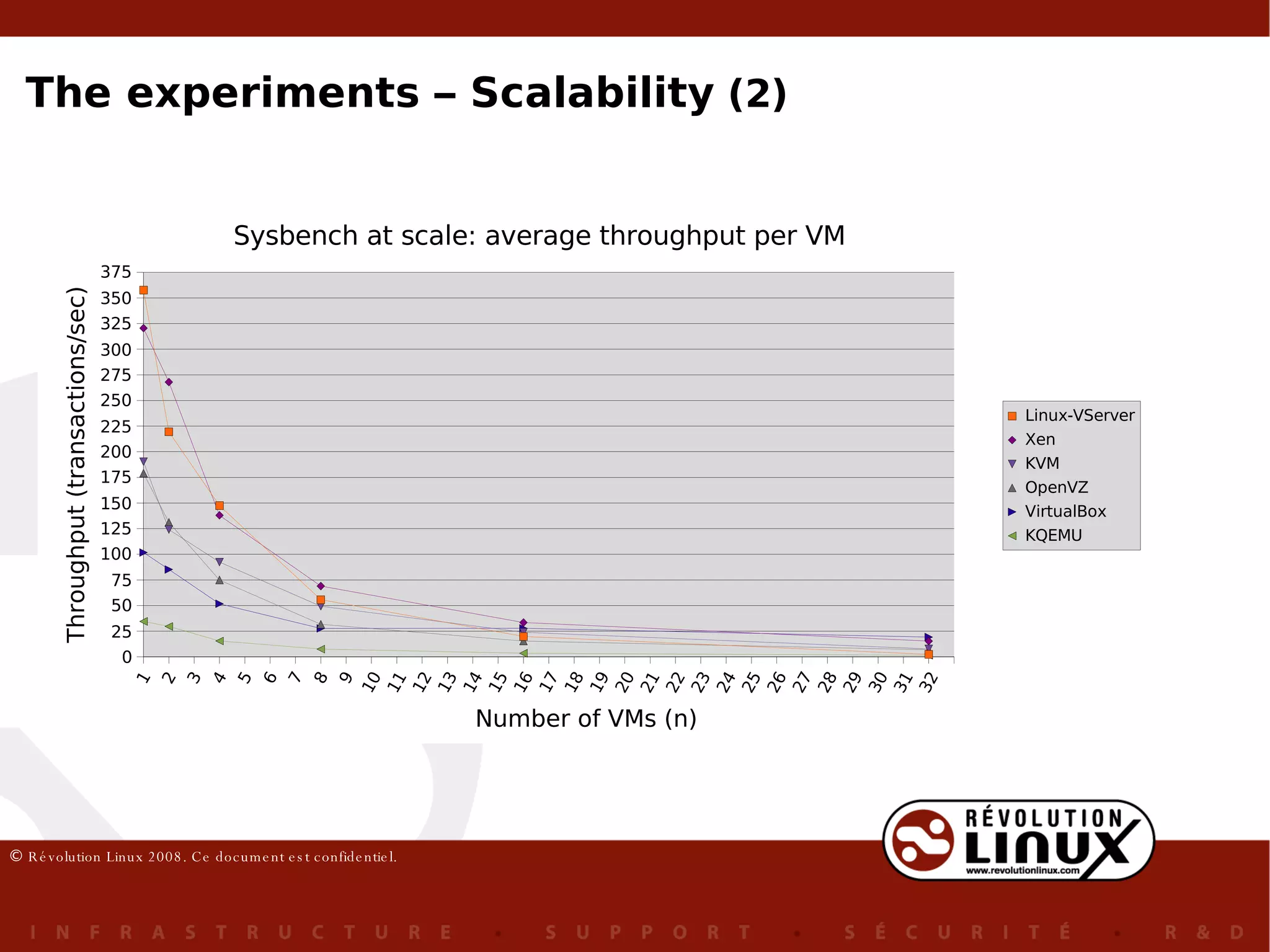

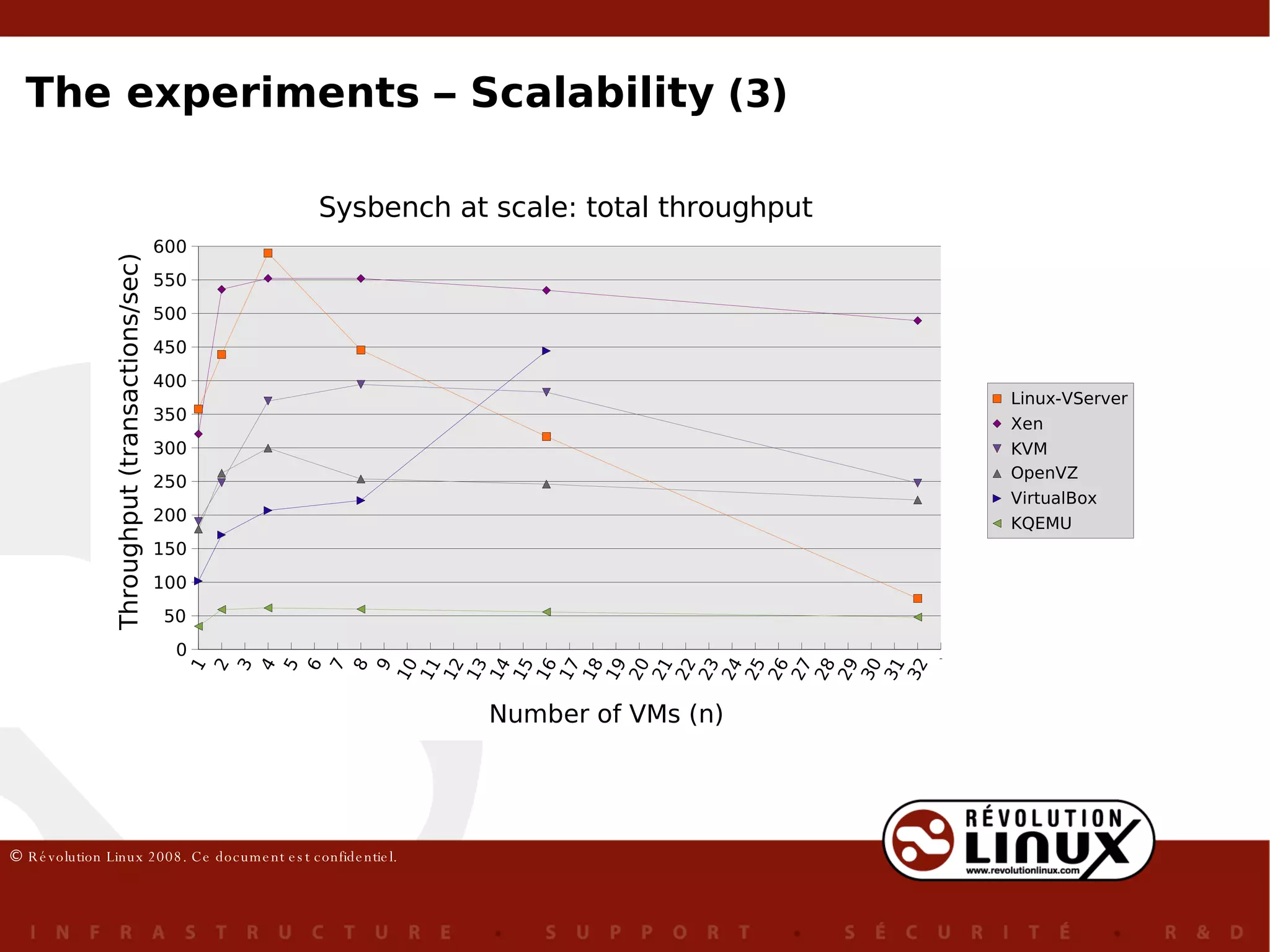

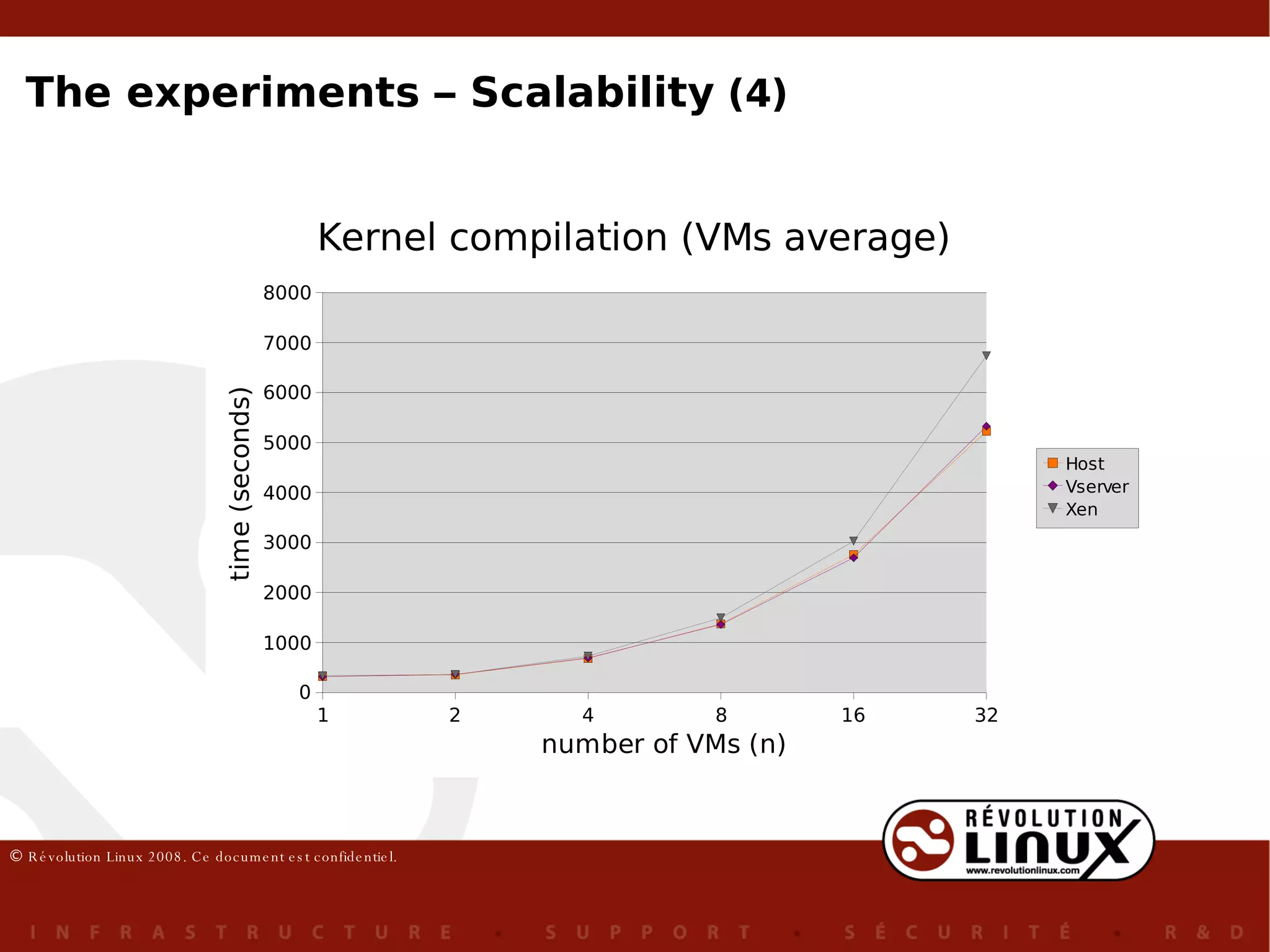

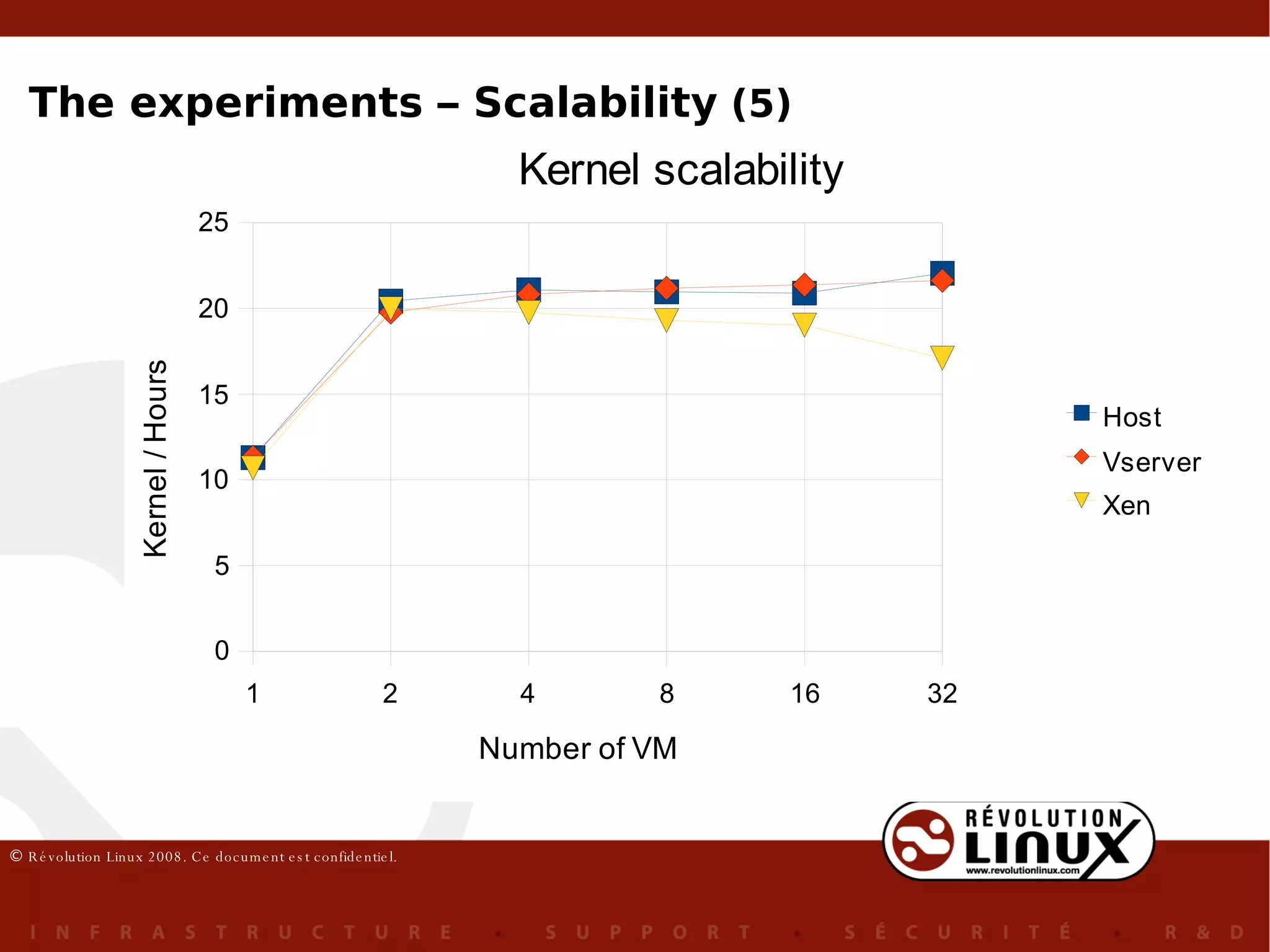

The document compares several open-source virtualization and container technologies. It describes experiments that measure the performance and scalability of KVM, Xen, Linux-VServer, OpenVZ, VirtualBox, and KQEMU. The experiments benchmark each technology under various workloads to assess overhead, throughput, and the ability to run multiple virtual machines concurrently. Linux-VServer showed the best overall performance, while Xen performed well except on I/O-bound workloads. The conclusions recommend OpenVZ for network applications, Linux-VServer for general use, and KVM or VirtualBox for development environments.

![Fernando Laudares Camargos Gabriel Girard Benoit des Ligneris, Ph. D. [email_address] Comparative study of Open Source virtualization & contextualization technologies](https://image.slidesharecdn.com/presentationvirtualizationen-1218110908456287-8/75/Comparison-of-Open-Source-Virtualization-Technology-1-2048.jpg)