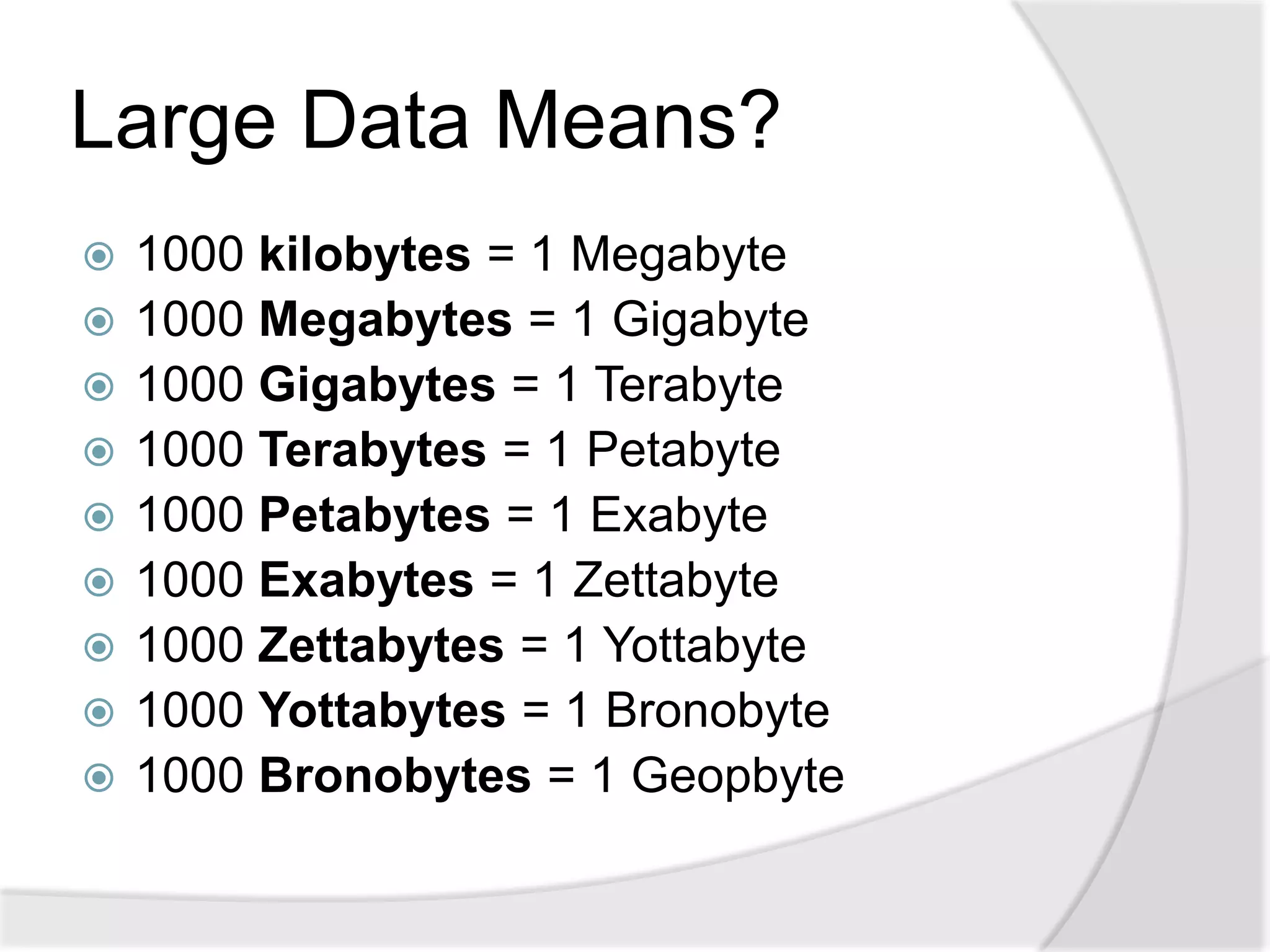

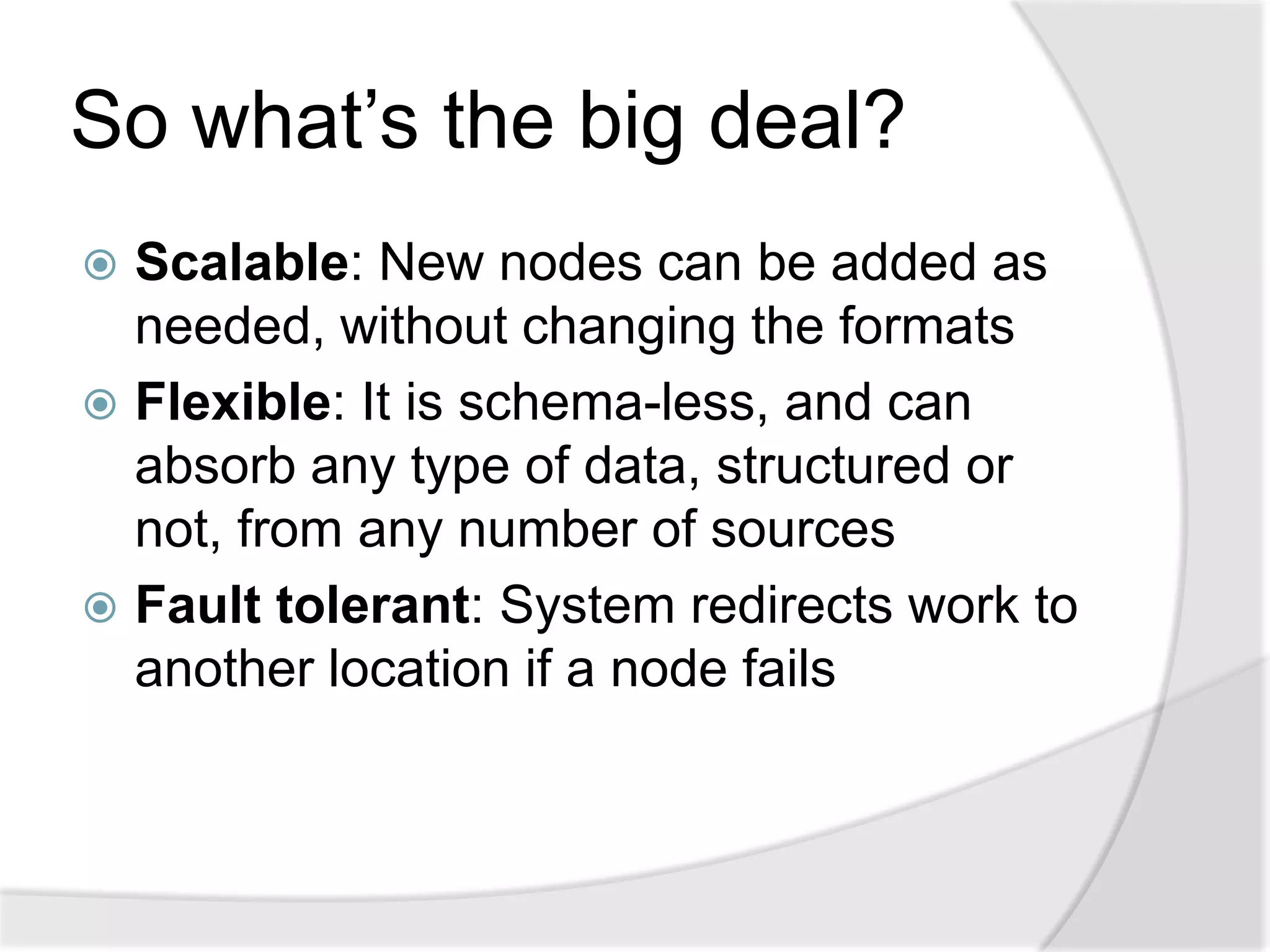

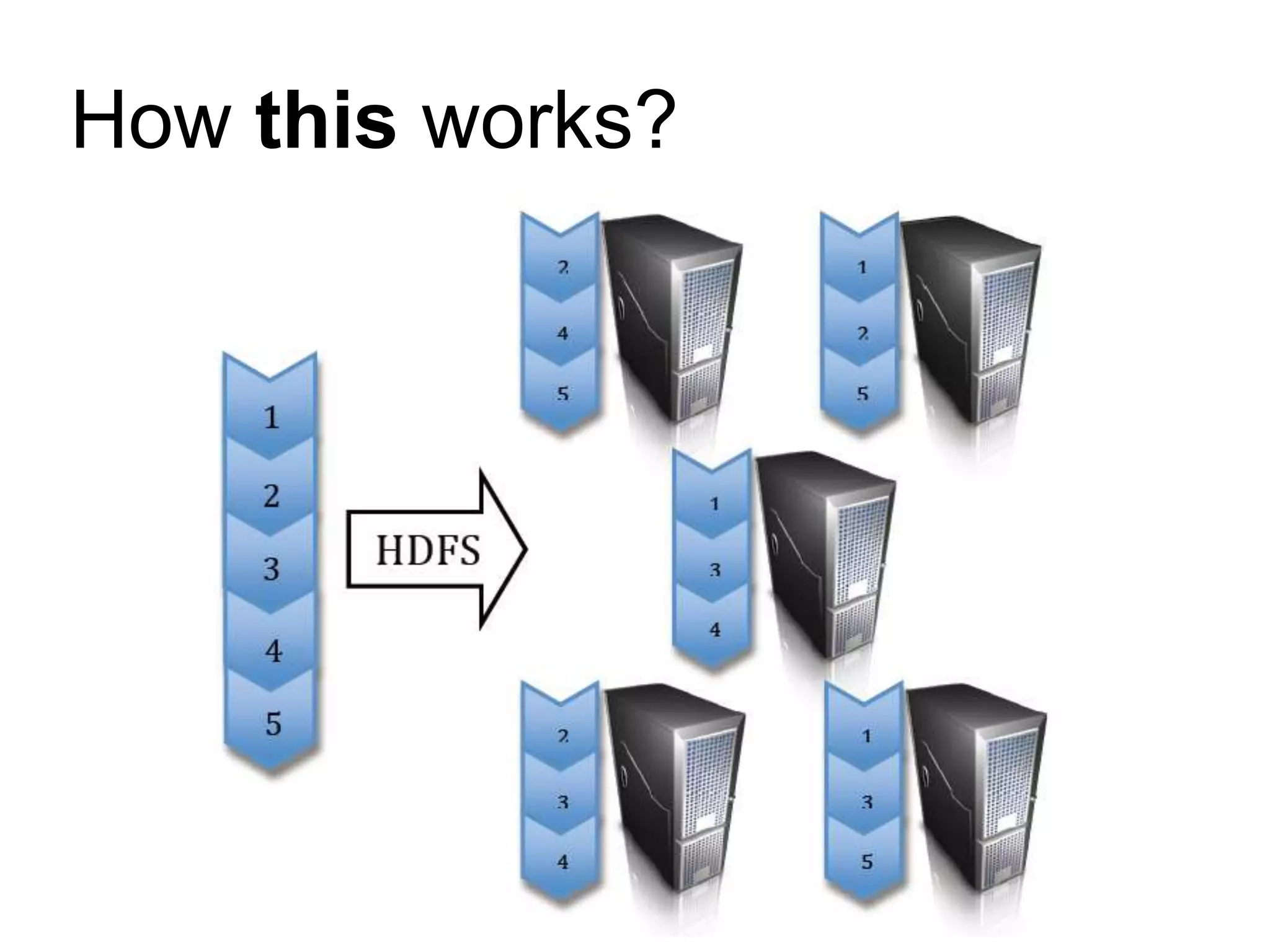

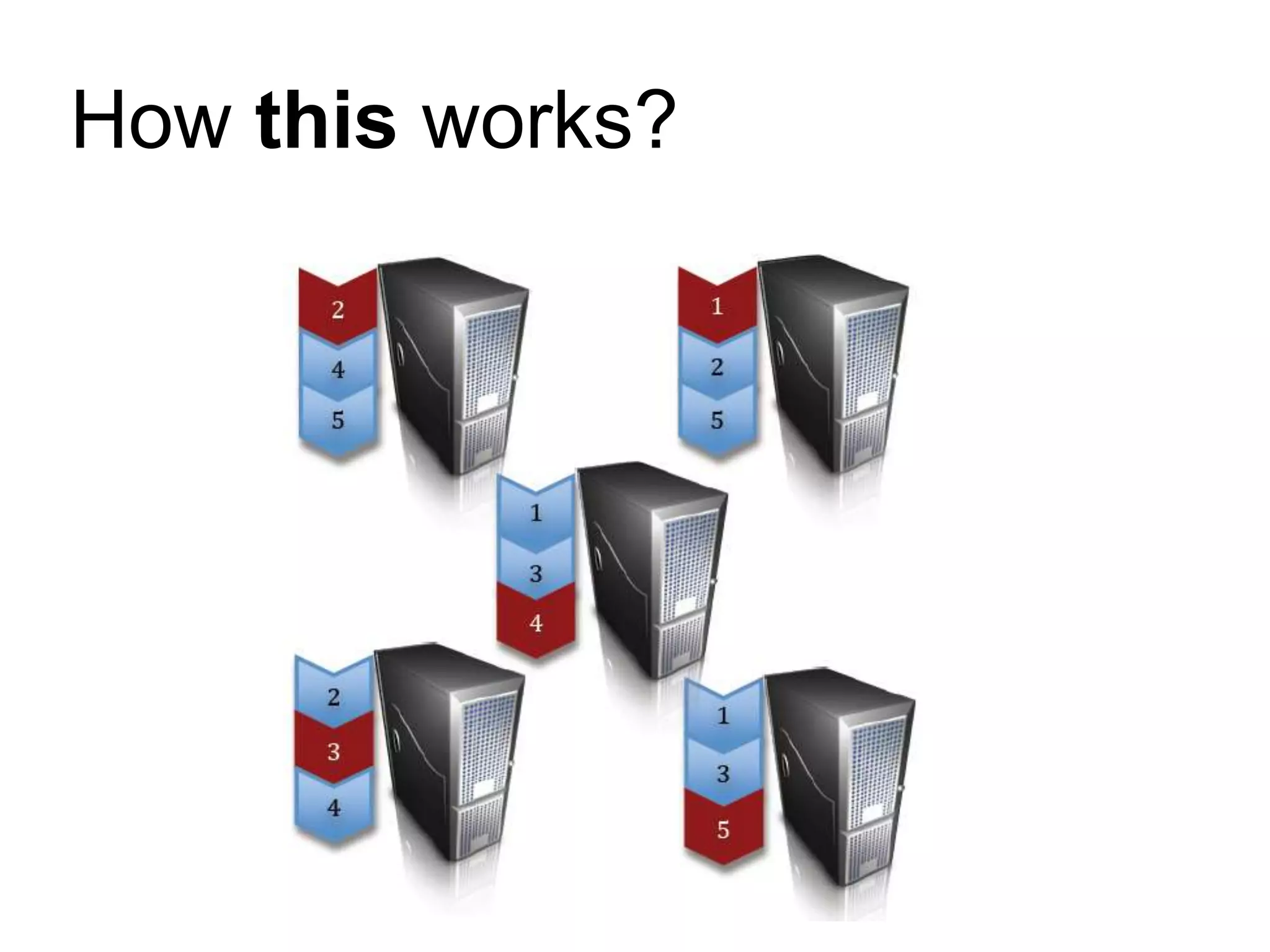

The document discusses Hadoop, an open-source project by the Apache Foundation designed for managing large-scale data processing, inspired by Google's MapReduce and Google File System. It emphasizes Hadoop's scalable, flexible, and fault-tolerant characteristics, which include storing data in a distributed manner through HDFS and processing it using the MapReduce algorithm. Notable companies utilizing Hadoop include LinkedIn, Walt Disney, Wal-Mart, General Electric, Nokia, Bank of America, and Foursquare.