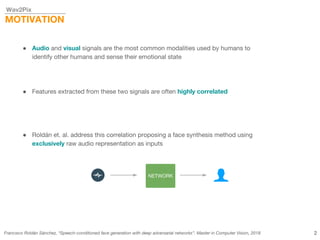

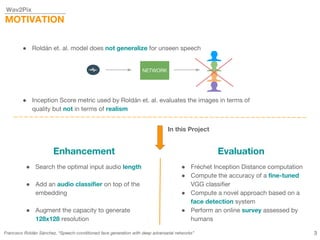

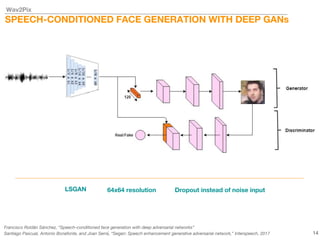

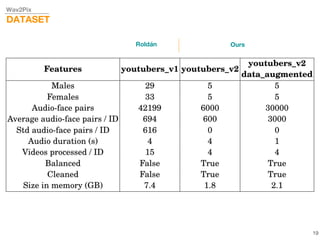

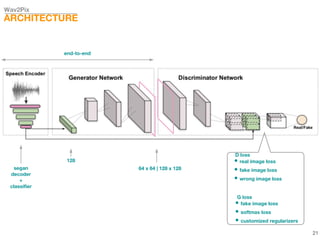

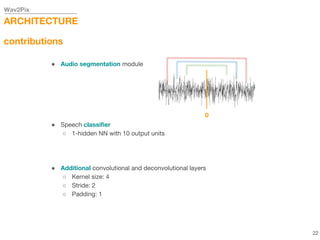

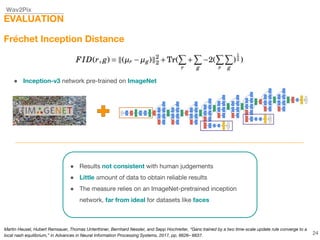

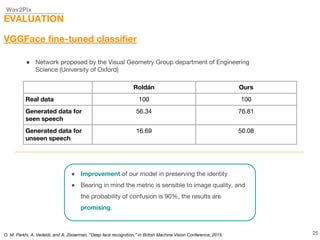

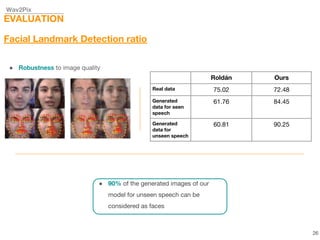

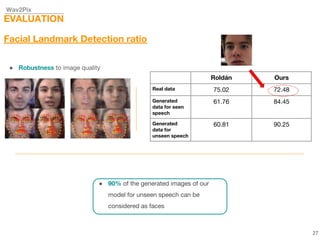

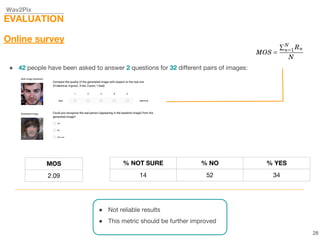

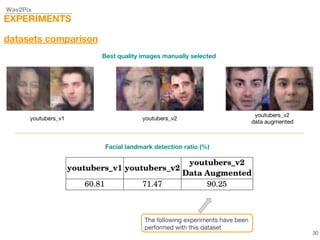

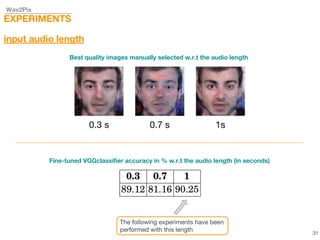

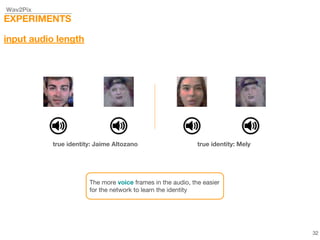

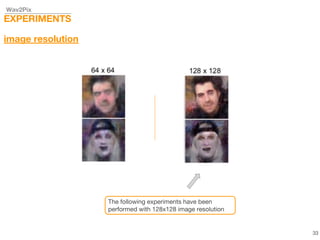

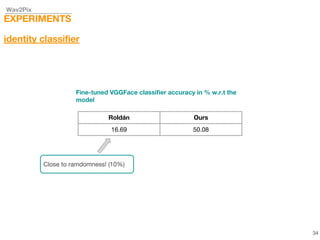

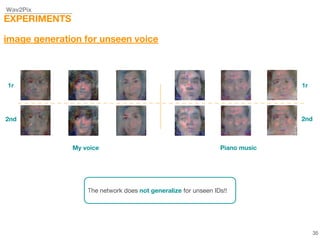

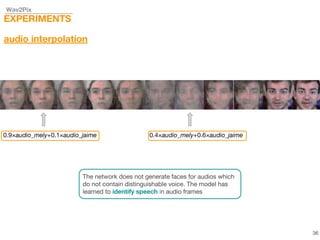

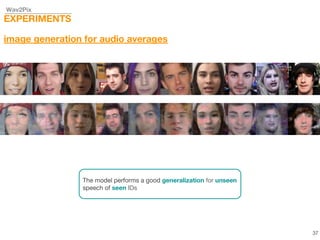

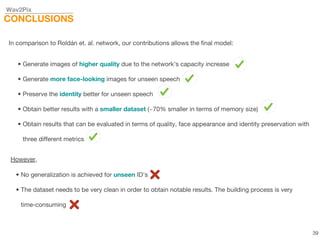

This document describes a study on speech-conditioned face generation using generative adversarial networks (GANs). The study aimed to enhance an existing model in three ways: adding an audio classifier, increasing the image resolution to 128×128, and using additional evaluation metrics. The results showed that, for unseen speech, the enhanced model generated higher-quality images that better preserved identity than the existing model. However, it did not generalize to generating faces for unseen identities.