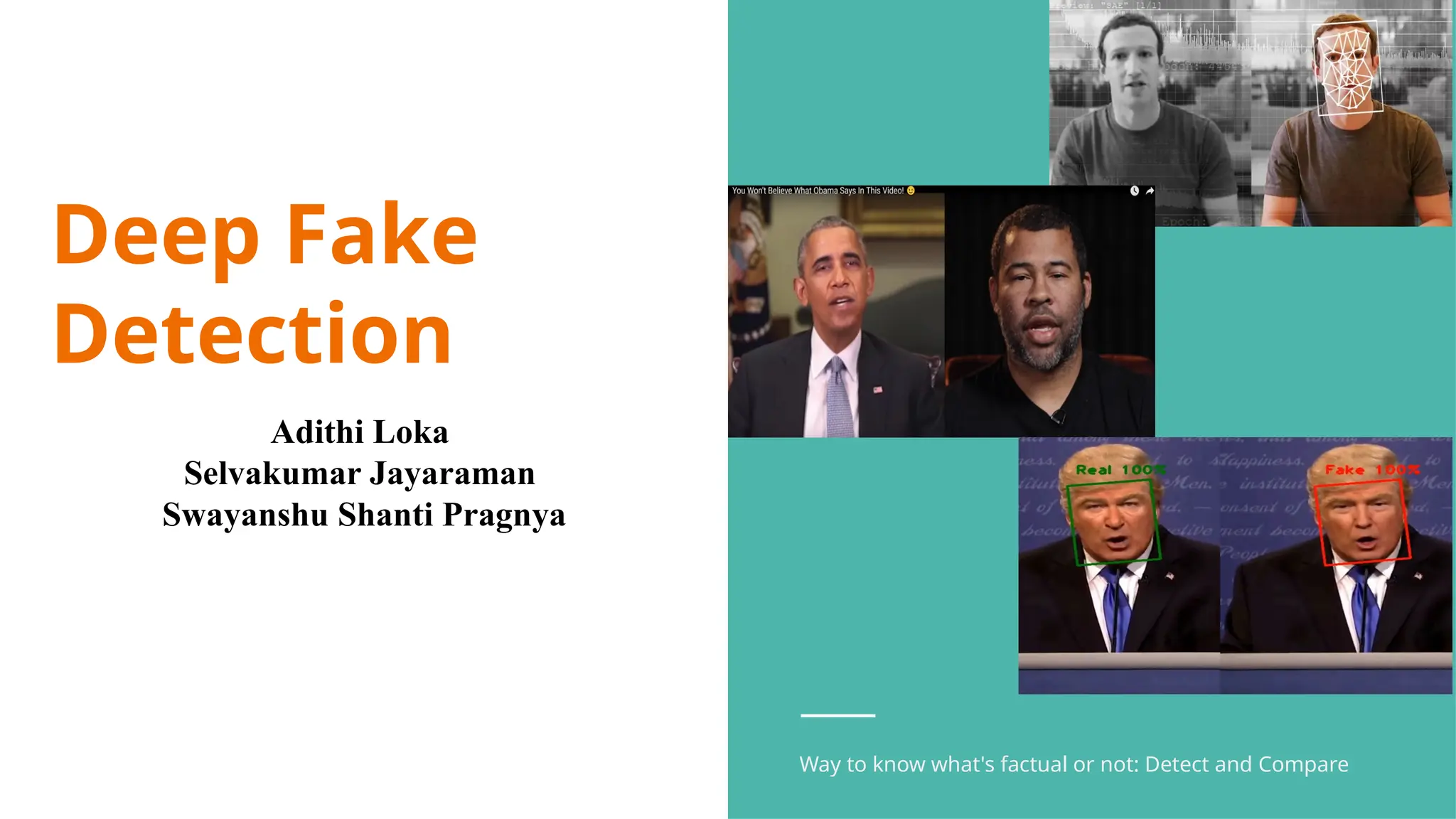

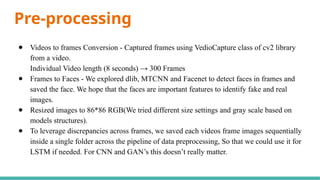

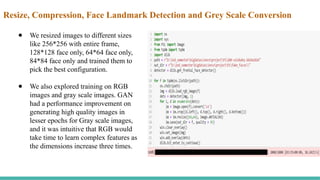

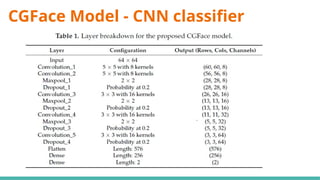

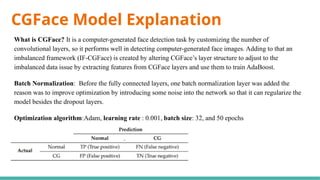

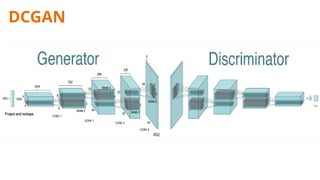

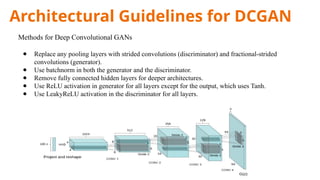

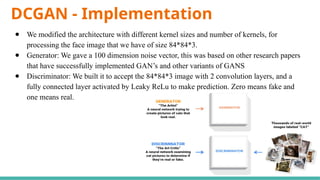

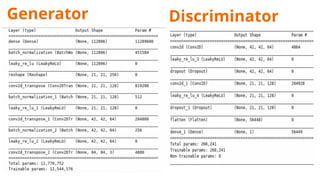

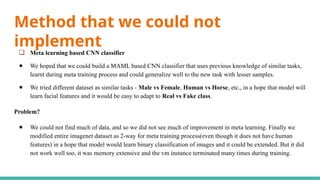

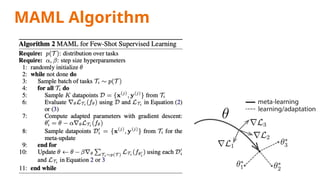

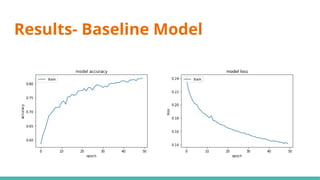

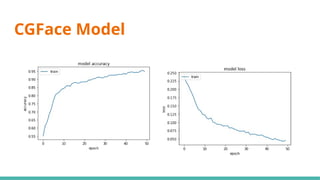

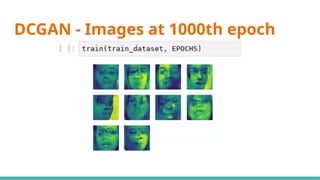

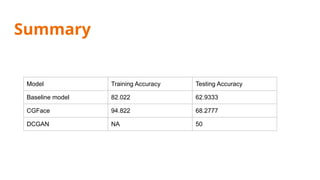

The document discusses deepfake detection and argues that more accurate methods for telling real videos from fake ones are needed, because deepfakes threaten privacy and democracy. It details the methodology used to develop a classification model, covering dataset preparation, feature extraction, and the application of deep learning techniques such as convolutional neural networks (CNNs) and generative adversarial networks (GANs). The findings highlight difficulties in training the models effectively, notably with meta-learning and with the computational resources needed to process video data.