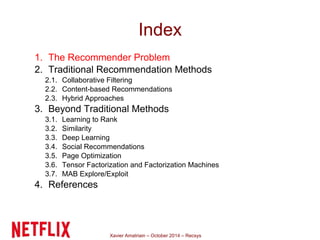

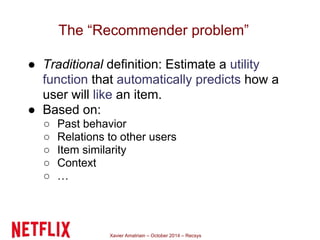

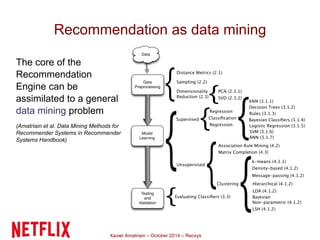

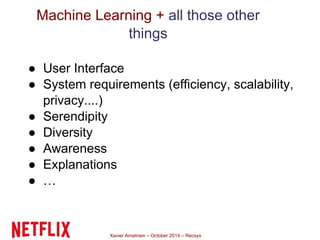

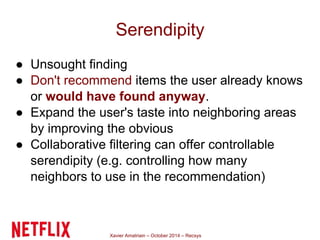

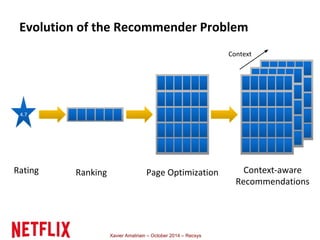

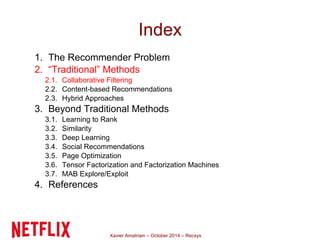

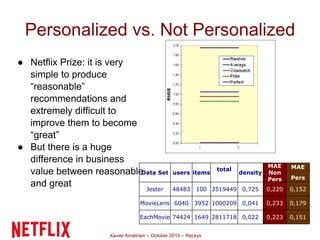

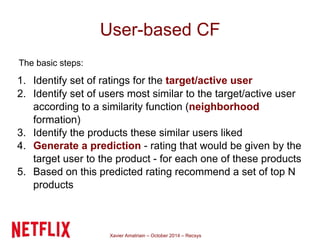

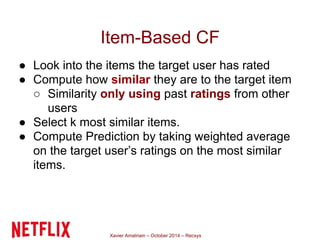

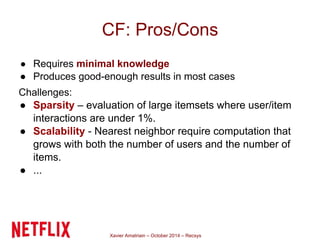

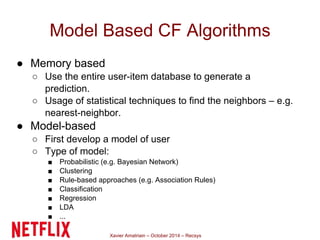

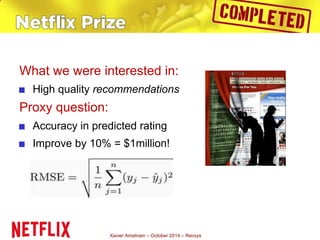

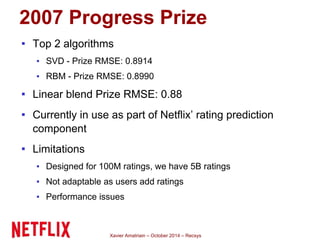

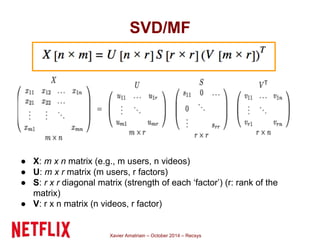

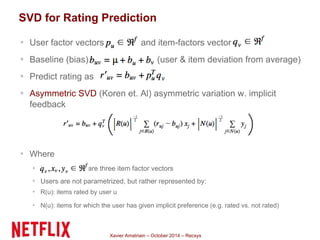

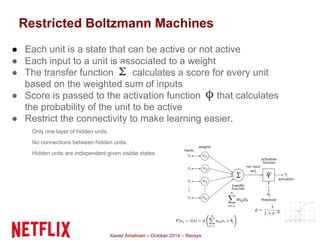

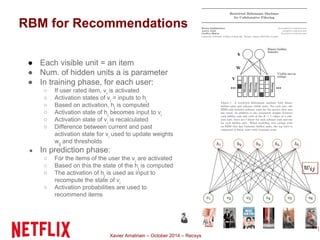

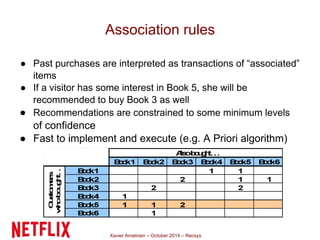

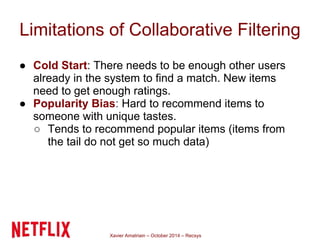

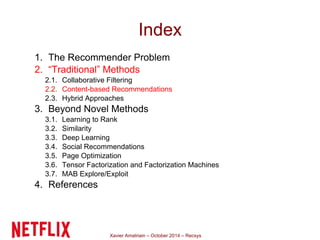

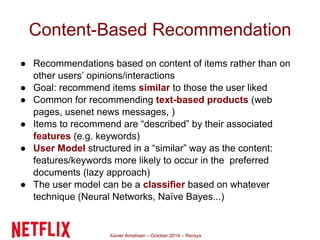

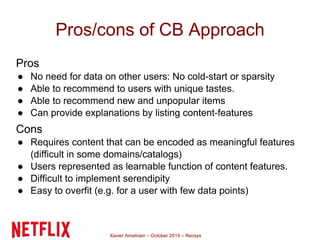

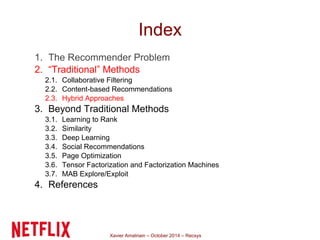

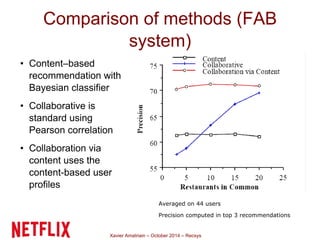

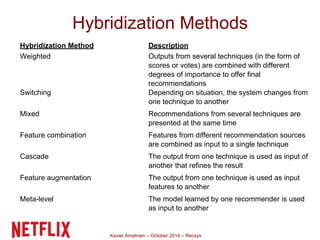

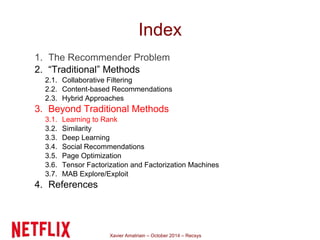

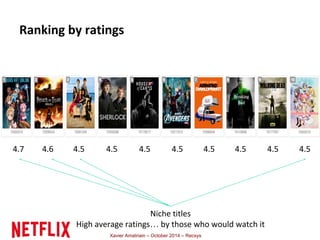

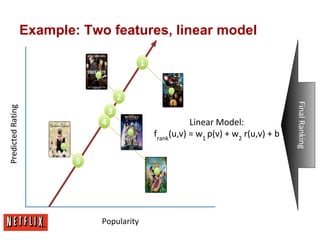

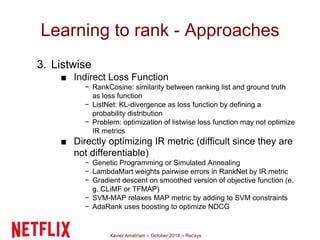

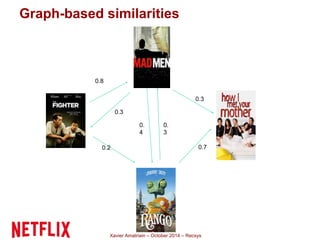

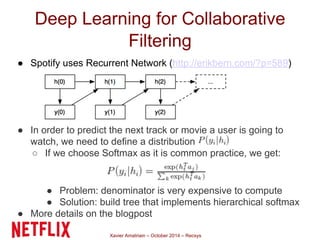

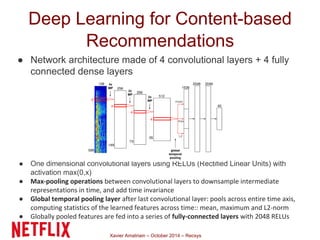

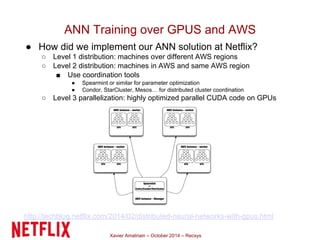

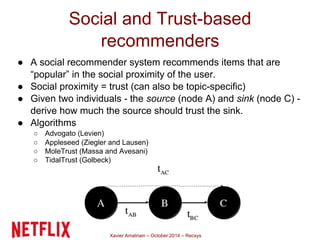

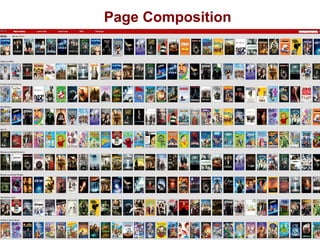

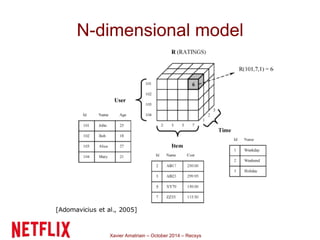

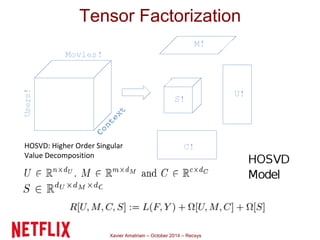

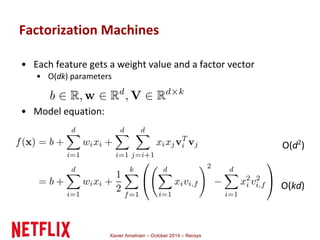

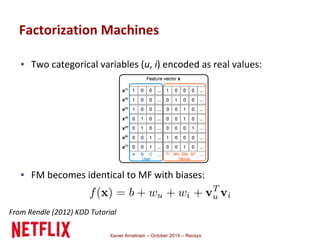

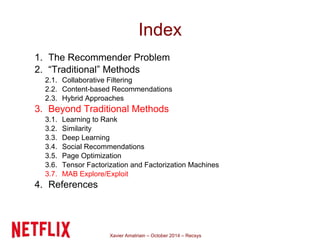

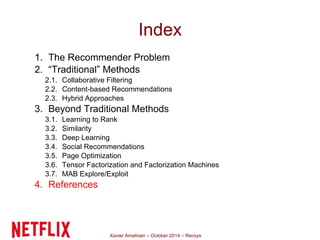

This document summarizes Xavier Amatriain's presentation on recommender systems. It covers the traditional recommendation methods: collaborative filtering, content-based recommendation, and hybrid approaches. It then turns to methods that go beyond these techniques, including learning to rank, deep learning, social recommendations, and context-aware recommendations. Throughout the presentation, Amatriain discusses challenges such as the cold-start problem and popularity bias, along with the limitations of each approach. He also shares lessons learned from the Netflix Prize competition, including how singular value decomposition (SVD) and restricted Boltzmann machine (RBM) models were used.
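
To make the collaborative filtering idea concrete, here is a minimal sketch of user-based collaborative filtering. It is an illustration only and is not taken from the presentation: the toy `RATINGS` data, the function names `cosine` and `predict`, and the choice of cosine similarity over co-rated items are all assumptions made for this example.

```python
from math import sqrt

# Hypothetical toy data: user -> {item: rating on a 1-5 scale}.
RATINGS = {
    "ann": {"A": 5, "B": 3, "C": 4},
    "bob": {"A": 4, "B": 2, "D": 5},
    "cat": {"B": 5, "C": 1, "D": 2},
}


def cosine(u, v):
    """Cosine similarity over the items both users rated; 0.0 if none."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[i] * v[i] for i in shared)
    norm_u = sqrt(sum(u[i] ** 2 for i in shared))
    norm_v = sqrt(sum(v[i] ** 2 for i in shared))
    return dot / (norm_u * norm_v)


def predict(ratings, user, item):
    """Similarity-weighted average of other users' ratings for `item`.

    Returns None when no other user has rated the item.
    """
    num = 0.0
    den = 0.0
    for other, their in ratings.items():
        if other == user or item not in their:
            continue
        sim = cosine(ratings[user], their)
        num += sim * their[item]
        den += abs(sim)
    return num / den if den else None


if __name__ == "__main__":
    print(predict(RATINGS, "ann", "D"))  # estimate from bob's and cat's ratings
    print(predict(RATINGS, "ann", "Z"))  # item with no ratings at all
```

In this sketch, an item that no one has rated yields `None` rather than a score, which is a small-scale picture of the cold-start problem mentioned in the summary: with no interaction data, a pure collaborative method has nothing to work from.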