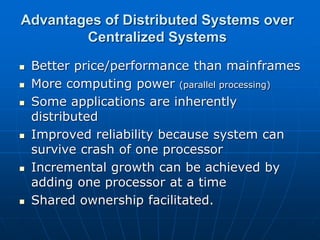

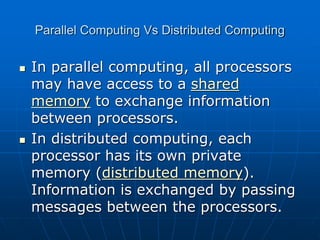

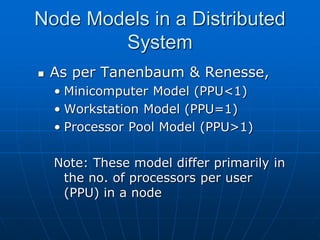

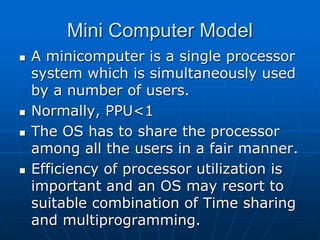

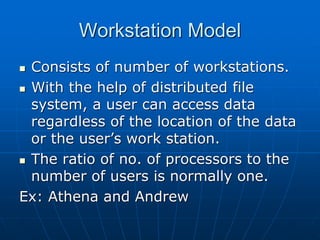

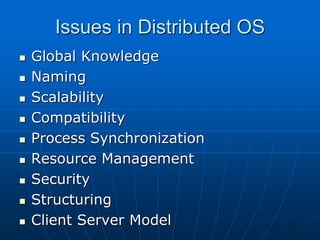

This document gives an overview of distributed systems. It contrasts tightly-coupled and loosely-coupled multiprocessor systems; the loosely-coupled kind, in which each processor has its own memory and operating system, is what is meant here by a distributed system. It outlines key properties of distributed systems: they consist of independent nodes that communicate by message passing, and accessing a remote resource costs more than accessing a local one. It also summarizes the main advantages and challenges of distributed systems.
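
As a rough illustration of the message-passing property, the sketch below models a node as a thread with private state and an inbox. It is a toy under stated assumptions, not anything taken from the slides: `Node`, `remote_call`, and `demo` are hypothetical names, and an in-process queue stands in for a real network link.

```python
import queue
import threading


class Node:
    """Hypothetical node: private state plus an inbox, no shared memory."""

    def __init__(self, name):
        self.name = name
        self.inbox = queue.Queue()
        self._store = {}  # private memory, reachable only through messages

    def serve(self):
        # Handle request messages until a "stop" message arrives.
        while True:
            op, key, value, reply_to = self.inbox.get()
            if op == "stop":
                break
            if op == "put":
                self._store[key] = value
                reply_to.put(("ok", None))
            elif op == "get":
                reply_to.put(("ok", self._store.get(key)))


def remote_call(node, op, key=None, value=None):
    """Send a request to `node` and block until its reply message arrives."""
    reply_to = queue.Queue()
    node.inbox.put((op, key, value, reply_to))
    return reply_to.get(timeout=5)


def demo():
    server = Node("B")
    worker = threading.Thread(target=server.serve)
    worker.start()
    remote_call(server, "put", "x", 42)
    status, value = remote_call(server, "get", "x")
    server.inbox.put(("stop", None, None, None))
    worker.join()
    return status, value
```

Calling `demo()` stores a value on the node and reads it back. Each remote access here is a request and a reply, whereas a local access would be a single dictionary lookup; over a real network that round trip is where the extra cost of remote resources comes from.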