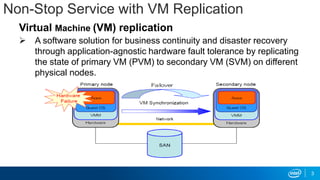

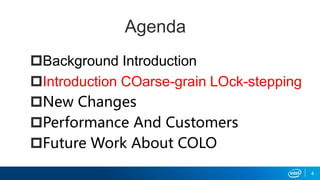

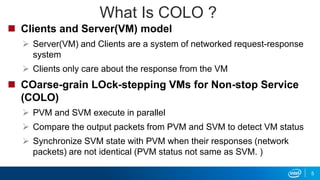

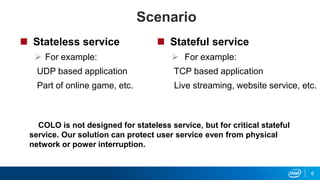

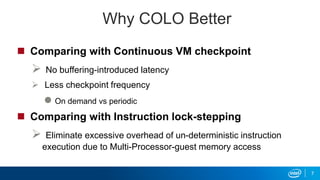

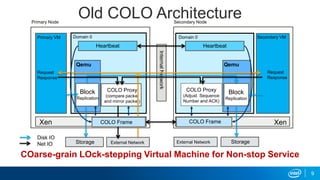

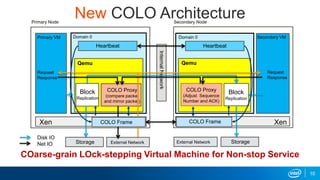

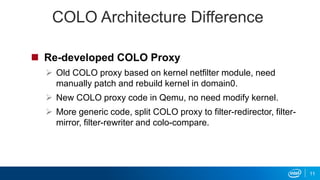

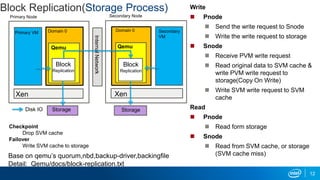

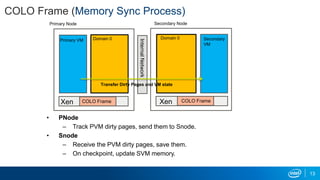

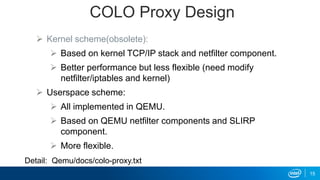

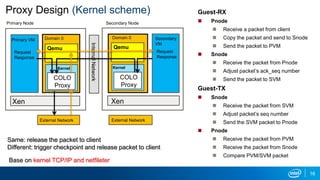

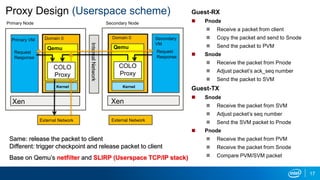

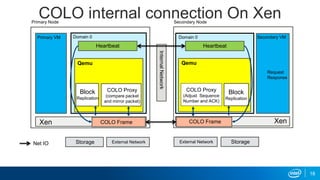

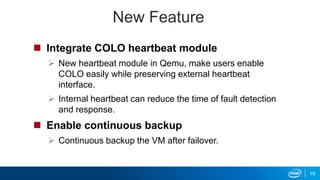

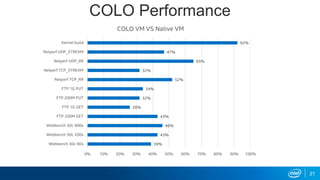

This document covers COLO (Coarse-Grain Lock-Stepping), a virtual machine replication approach for business continuity and disaster recovery. It discusses COLO's architecture, design improvements, performance metrics, and planned enhancements, with the goal of providing non-stop service, primarily for critical stateful applications. It also carries Intel's legal disclaimer, which covers intellectual property rights and states that Intel products are not intended for critical medical applications.