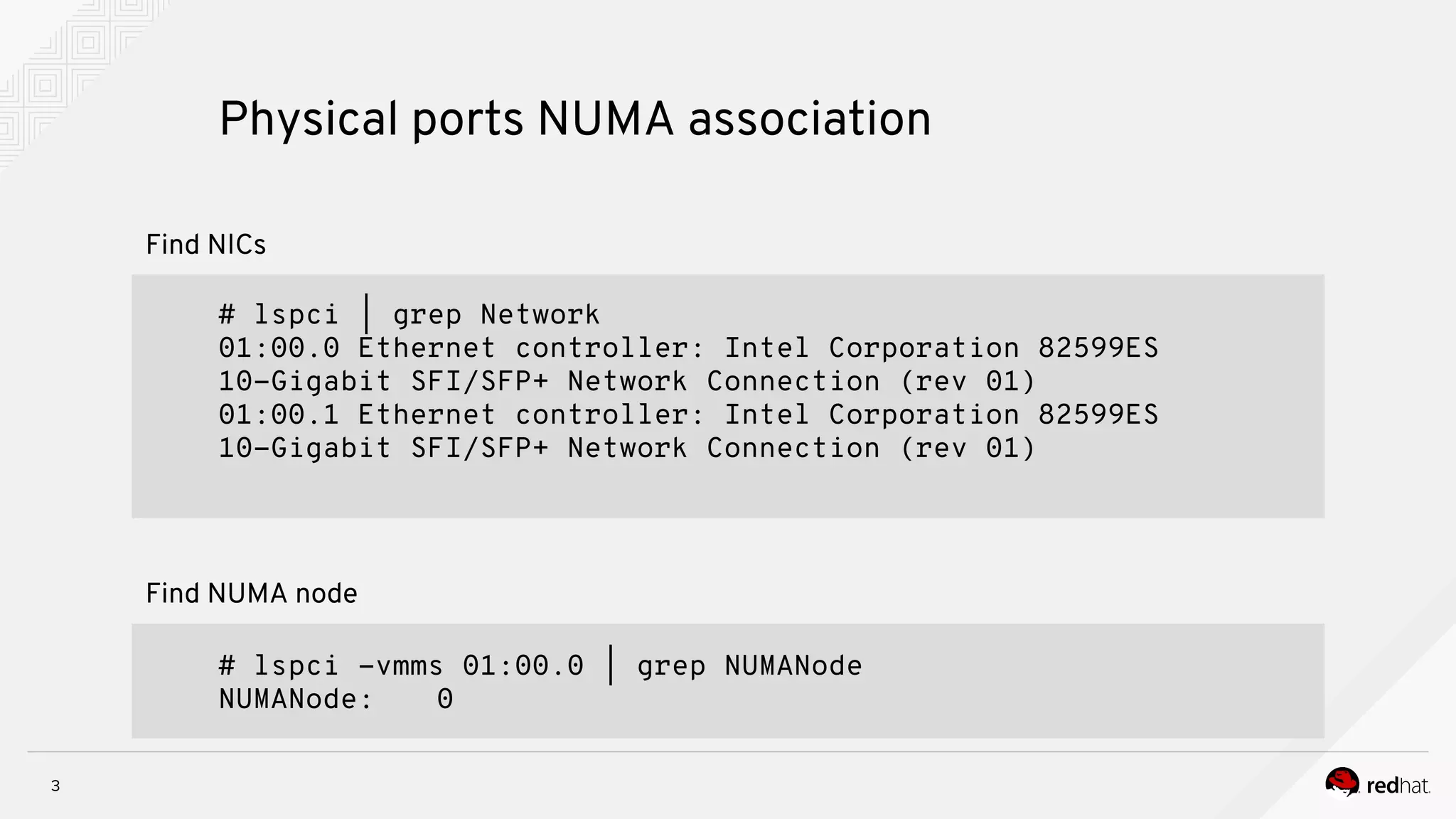

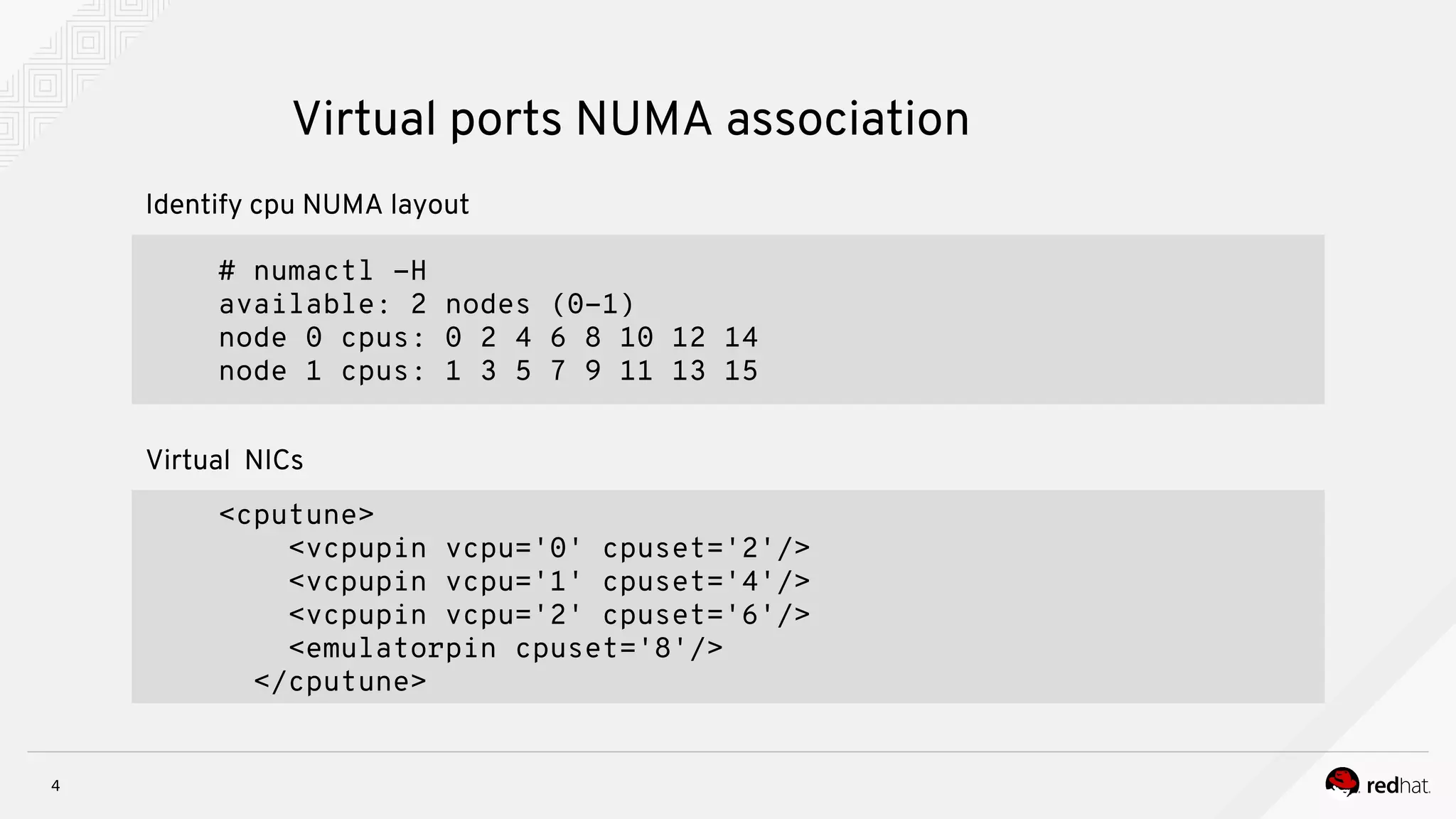

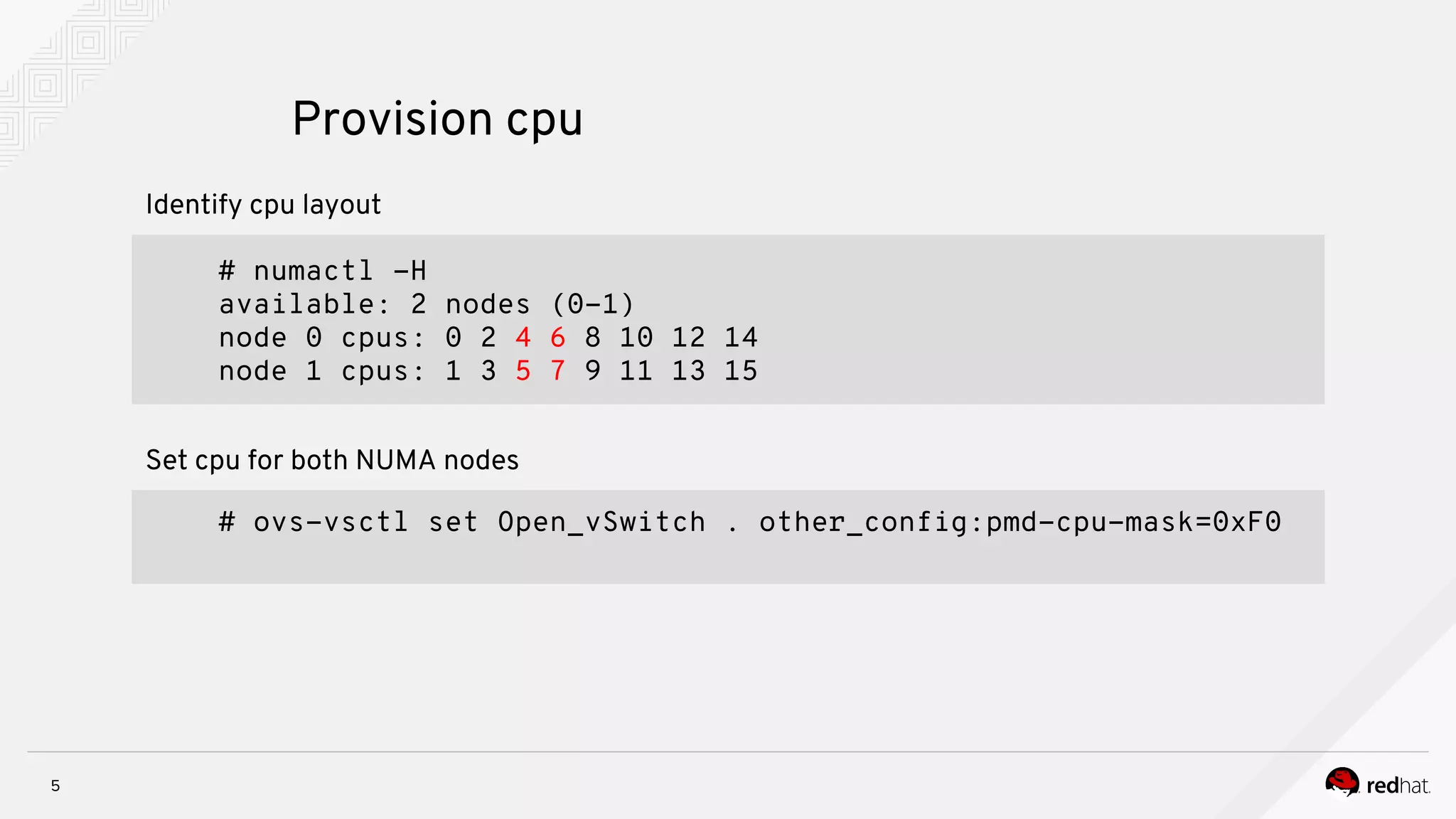

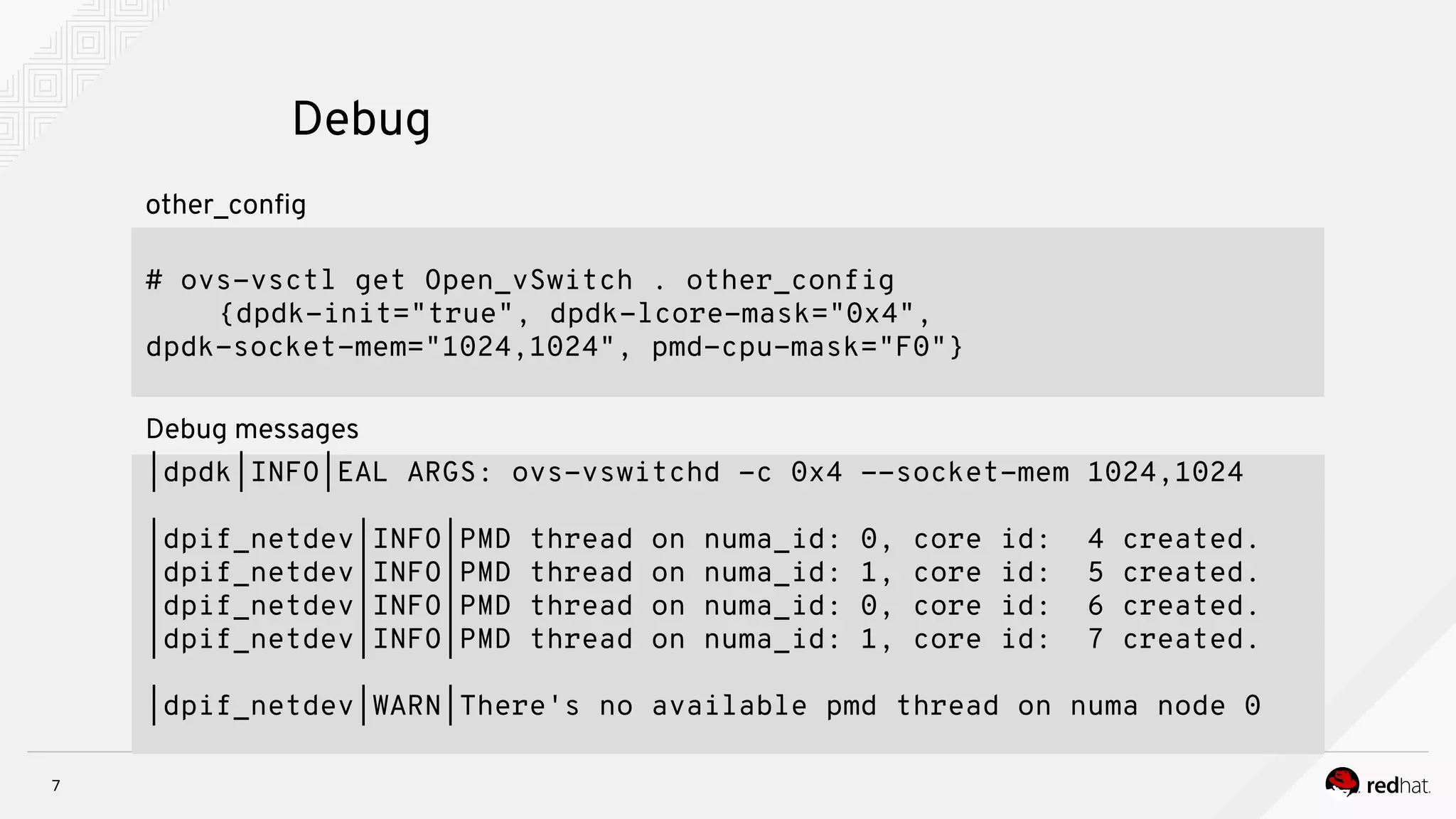

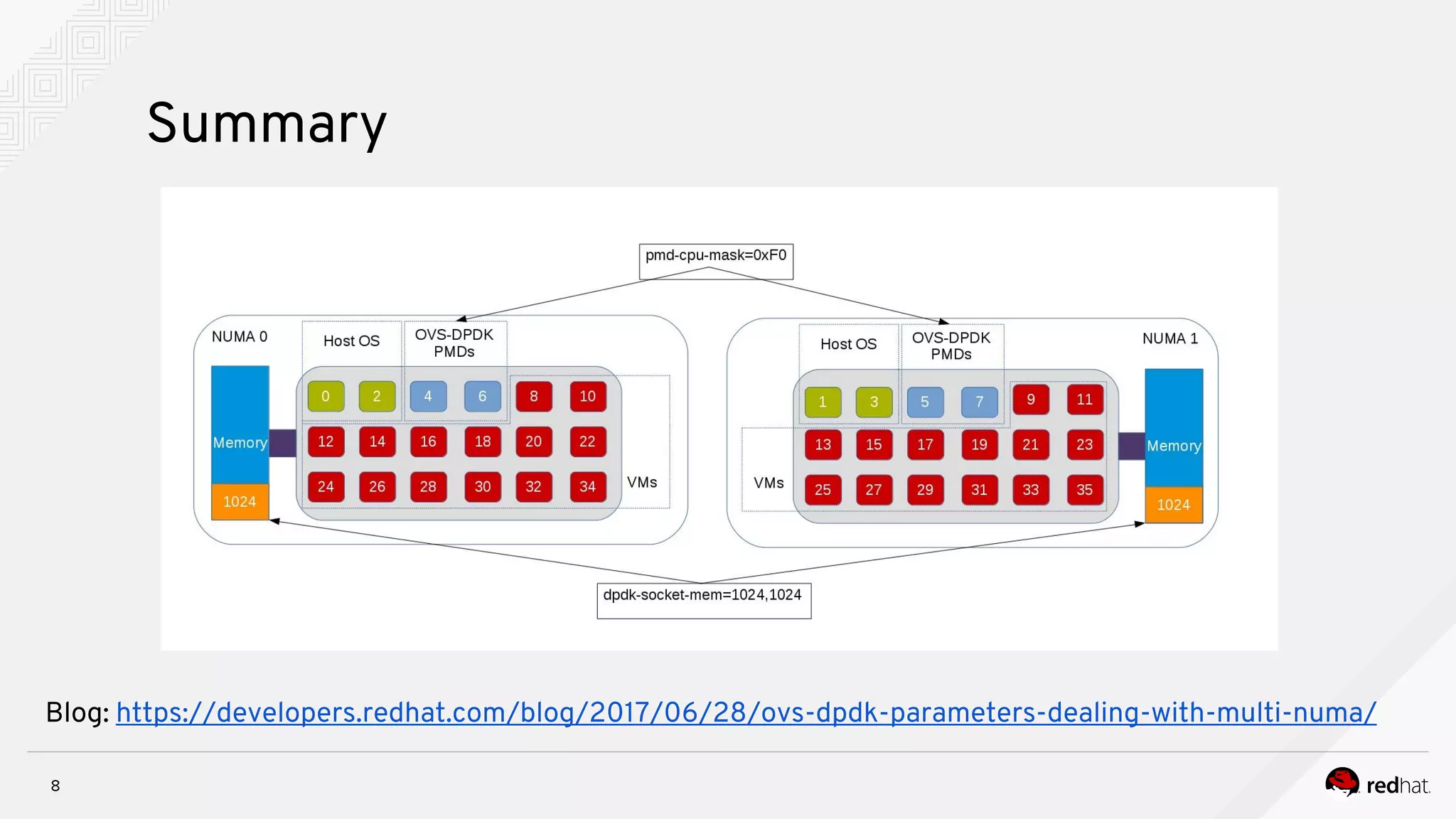

This document discusses configuring OVS-DPDK parameters for a multi-NUMA environment. It recommends associating physical NICs and virtual ports with their respective NUMA nodes, provisioning PMD cores on both nodes with `pmd-cpu-mask`, allocating hugepage memory on each node with `dpdk-socket-mem`, and debugging by checking PMD thread placement and the `other_config` settings. Configuring these parameters correctly is necessary for good performance when traffic spans multiple NUMA nodes.
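
As a rough illustration of the two main settings, the sketch below derives a `pmd-cpu-mask` value from a list of core IDs and a `dpdk-socket-mem` value from per-node memory sizes, then prints the corresponding `ovs-vsctl` commands. The core IDs (2, 3 on node 0 and 22, 23 on node 1) and the 1024 MB per node are assumptions chosen for the example, not values taken from the document; the helper names `cpu_mask` and `socket_mem` are hypothetical. Check the actual core-to-node layout on your host (for example with `lscpu`) before applying anything.

```python
def cpu_mask(core_ids):
    """Return a hex bitmask string with one bit set per CPU core ID."""
    mask = 0
    for core in core_ids:
        mask |= 1 << core
    return hex(mask)


def socket_mem(mb_per_node):
    """Return a comma-separated string of hugepage memory (MB), one entry per NUMA node."""
    return ",".join(str(mb) for mb in mb_per_node)


# Assumed layout (hypothetical): cores 2 and 3 sit on NUMA node 0,
# cores 22 and 23 sit on NUMA node 1.
pmd_cores = [2, 3, 22, 23]

# Assumed allocation (hypothetical): 1024 MB of hugepages on each node.
memory_mb = [1024, 1024]

mask = cpu_mask(pmd_cores)
mem = socket_mem(memory_mb)

# Print the commands rather than running them, so the values can be reviewed first.
commands = [
    f"ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask={mask}",
    f'ovs-vsctl set Open_vSwitch . other_config:dpdk-socket-mem="{mem}"',
]
for command in commands:
    print(command)
```

After applying such settings, `ovs-vsctl get Open_vSwitch . other_config` shows the stored values and `ovs-appctl dpif-netdev/pmd-rxq-show` lists each PMD thread with its NUMA node and core, which is one way to confirm that PMD threads landed on both nodes.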