Latent Dirichlet Allocation (LDA) and other text classification methods were evaluated on a Wikipedia document classification task. LDA could assign documents to topics, but mapping those topics to categories was challenging. Two approaches were tested: 1) mapping each topic to the category of the best-matching training document, and 2) mapping topics to categories manually. Approach 1 reached 97% accuracy, but its lenient criterion (a single shared tag counts as correct) makes a falsely high score likely. Approach 2 reached 88% accuracy but required manual work. Tf-idf and Doc2Vec feature vectors outperformed LDA and Word2Vec when classified with an SVM on this imbalanced multi-label dataset.

![Latent Dirichlet Allocation for document classification

During the testing phase

INPUT:

We provide the document to be classified, in bag-of-words form, to the learnt model.

OUTPUT:

Topic distribution for the text, e.g. “[(34, 0.023705742561150572), (60, 0.017830310671555303), (62, 0.023999239610385081), (83, 0.029439444128473557), (87, 0.028172479800878891), (90, 0.1207424163376625), (116, 0.022904510579689157)]”, which gives the probabilities that the document falls under topics 34, 60, 62, and so on.

Major challenge in classification:

Classifying a document into topics looks fairly simple, as the output of the testing phase shows. However, our aim is to classify the document under tags such as “politics” or “science”, and not under topic

numbers.](https://image.slidesharecdn.com/ireteam51-160415163026/75/Wikipedia-Document-Classification-7-2048.jpg)
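The output above is a list of (topic id, probability) pairs. As a minimal sketch, assuming that list format, the topics can be ranked by probability to find the dominant ones. The helper name `top_topics` and the cutoff `k` are hypothetical and do not come from the slides.

```python
# Topic distribution copied from the example output above:
# a list of (topic_id, probability) pairs.
topic_distribution = [
    (34, 0.023705742561150572),
    (60, 0.017830310671555303),
    (62, 0.023999239610385081),
    (83, 0.029439444128473557),
    (87, 0.028172479800878891),
    (90, 0.1207424163376625),
    (116, 0.022904510579689157),
]


def top_topics(distribution, k=3):
    """Return the k topic ids with the highest probability (hypothetical helper)."""
    ranked = sorted(distribution, key=lambda pair: pair[1], reverse=True)
    return [topic_id for topic_id, _ in ranked[:k]]


dominant = top_topics(topic_distribution)
print(dominant)
```

The result is still a list of topic numbers, which is why a separate topic-to-tag mapping is needed before a document can be labelled with tags.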

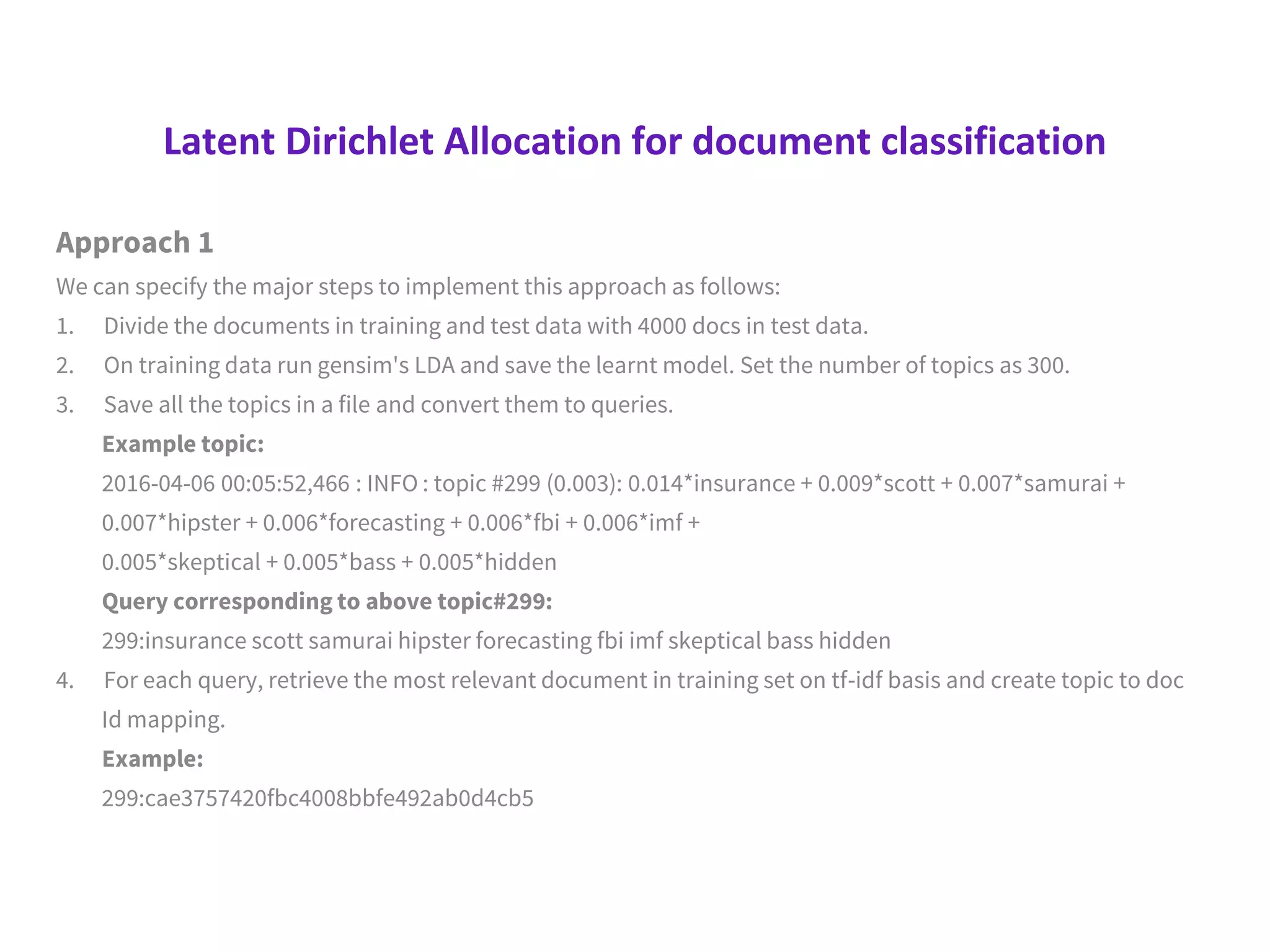

![Latent Dirichlet Allocation for document classification

5. Create a topic-to-tag mapping using the docId-to-tag mapping (already available in tagData.xml) and the docId-to-topic mapping created in the step above.

Example docId to tag from tagData.xml:

cae3757420fbc4008bbfe492ab0d4cb5 : ['wiki', 'en', 'wikipedia,', 'activism', '-', 'political', 'poetry', 'free', 'person', 'music', 'encyclopedia', 'the', 'biography', 'history']

Example topic to docId:

299:cae3757420fbc4008bbfe492ab0d4cb5

Example topic to tag:

299:['wiki', 'en', 'wikipedia,', 'activism', '-', 'political', 'poetry', 'free', 'person', 'music', 'encyclopedia', 'the', 'biography', 'history']

Now each topic is mapped to multiple tags.

6. For each test document (from the 4000 docs in the test data), find the relevant topics using the learnt LDA model. Combine the tags corresponding to those topics and match them against the target tags already available (from tagData.xml) for that particular document.

If even one tag is matched, we say that document is correctly classified.](https://image.slidesharecdn.com/ireteam51-160415163026/75/Wikipedia-Document-Classification-10-2048.jpg)
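Steps 5 and 6 can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' code: the dictionaries below are hypothetical stand-ins for the data read from tagData.xml and from the LDA output, the tag list is abbreviated, `doc-b` is an invented document id, and the function names are made up for this example.

```python
from collections import defaultdict

# Hypothetical stand-ins for the two mappings described in step 5.
# The first docId and some of its tags are taken from the example above;
# "doc-b" and its tags are invented for illustration.
doc_to_tags = {
    "cae3757420fbc4008bbfe492ab0d4cb5": ["activism", "political", "poetry", "music", "history"],
    "doc-b": ["science", "research"],
}
topic_to_docs = {
    299: ["cae3757420fbc4008bbfe492ab0d4cb5"],
    90: ["doc-b"],
}


def build_topic_to_tags(topic_to_docs, doc_to_tags):
    """Step 5: give each topic the tags of the documents mapped to it."""
    topic_to_tags = defaultdict(set)
    for topic_id, doc_ids in topic_to_docs.items():
        for doc_id in doc_ids:
            topic_to_tags[topic_id].update(doc_to_tags.get(doc_id, []))
    return dict(topic_to_tags)


def is_correct(doc_topics, topic_to_tags, target_tags):
    """Step 6: count as correct if any combined tag matches any target tag."""
    combined = set()
    for topic_id in doc_topics:
        combined |= topic_to_tags.get(topic_id, set())
    return bool(combined & set(target_tags))


topic_to_tags = build_topic_to_tags(topic_to_docs, doc_to_tags)
print(is_correct([299, 90], topic_to_tags, ["history", "reference"]))
```

Because the combined tag set grows with every topic returned for a document, a single overlap with the target tags becomes increasingly easy to achieve.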

![Latent Dirichlet Allocation for document classification

Example:

Topic distribution returned by LDA for a particular doc:

[(34, 0.023705742561150572), (60, 0.017830310671555303), (62, 0.023999239610385081), (83,

0.029439444128473557), (87, 0.028172479800878891), (90, 0.1207424163376625), (116,

0.022904510579689157), (149, 0.010136256627631658), (155, 0.045428499528247894), (162,

0.014294122339773195), (192, 0.01315170635603234), (193, 0.055764500858303222), (206,

0.015174121956574787), (240, 0.052498569359746373), (243, 0.016285345117555323), (247,

0.019478047862044864), (255, 0.018193391082926114), (263, 0.030209722561452931), (287,

0.042405659613804568), (289, 0.055528896333028231), (291, 0.030064093091433357)]

Tags combined for above topics (from topic to tag mapping created in above step):

['money', 'brain', 'web', 'thinking', 'interesting', 'environment', 'teaching', 'web2.0', 'bio',

'finance', 'government', 'food', 'howto', 'geek', 'cool', 'articles', 'school', 'cognitive', 'cognition',

'energy', 'computerscience', '2read', 'culture', 'computer', 'video', 'home', 'todo', 'investment',

'depression', 'psychology', 'wikipedia', 'research', 'health', 'internet', 'medicine', 'electronics',

'tech', 'math', 'business', 'marketing', 'free', 'standard', 'interface', 'article', 'definition',

'anarchism', 'of', 'study', 'economics', 'programming', 'american', 'games', 'advertising', 'social',

'software', 'apple', 'coding', 'maths', 'learning', 'management', 'system', 'quiz', 'pc', 'music',

'memory', 'war', 'nutrition', 'comparison', 'india', 'info', 'science', 'dev', '@wikipedia', 'future',

'behavior', 'design', 'history', '@read', 'mind', 'hardware', 'webdev', 'politics', 'technology']](https://image.slidesharecdn.com/ireteam51-160415163026/75/Wikipedia-Document-Classification-11-2048.jpg)

![Latent Dirichlet Allocation for document classification

Target tags for this particular doc from tagData.xml:

['reference', 'economics', 'wikipedia', 'politics', 'reading', 'resources']

Accuracy from this approach: 97%

Problem with this approach:

1. If there is any match between the tags we found and the true tags, we count the document as correctly classified. Such a match is very likely because there are multiple found tags and multiple true tags, so even if the method is doing something wrong, the chance of getting a high accuracy figure is very high.

2. Because the matching is tf-idf based, there is a high chance that the top-ranked document is not the best match for that particular topic. This can also happen because the query is framed from only the top 10 representative words of a topic rather than from all of them.](https://image.slidesharecdn.com/ireteam51-160415163026/75/Wikipedia-Document-Classification-12-2048.jpg)
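To see why the any-match criterion is lenient, the sketch below compares it with precision and recall on the example tags. The target tags are copied from the example, and the found tags are an abbreviated subset of the combined list shown earlier. Precision and recall are added here only for contrast; they are an assumption of this sketch and were not part of the reported experiment.

```python
# Target tags copied from the example above.
target_tags = {"reference", "economics", "wikipedia", "politics", "reading", "resources"}

# An abbreviated subset of the combined tags from the example
# (the full combined list is much longer).
found_tags = {"money", "brain", "web", "economics", "wikipedia", "politics", "music", "history"}


def any_match(found, target):
    """The criterion used in this approach: one shared tag is enough."""
    return len(found & target) > 0


def precision_recall(found, target):
    """Stricter measures, shown for contrast (not part of the original experiment)."""
    overlap = len(found & target)
    precision = overlap / len(found) if found else 0.0
    recall = overlap / len(target) if target else 0.0
    return precision, recall


print(any_match(found_tags, target_tags))
print(precision_recall(found_tags, target_tags))
```

The document counts as correctly classified under the any-match rule even though most of the found tags in this subset are not among the target tags.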

![Latent Dirichlet Allocation for document classification

Accuracy from this approach: 88%

Problem with this approach:

1. Mapping topics to tags manually is a problem. We cannot always find the best-suited tag just by looking at the topic words. Some tags carry no meaning, e.g. ‘wikipedia’, ‘wiki’ and ‘reference’, and these create problems.

Modification:

Performed the above experiment again with only meaningful tags, i.e. without tags such as ‘wikipedia’, ‘wiki’, ‘reference’, etc. After eliminating these, 17K documents were left. But the approach posed another issue:

1. Some similar tags can represent the same topic, e.g. [research, science], [web, internet], [programming, math], [literature, language]. If we keep all such similar tags, accuracy is 80%, but if we strictly keep just one tag, accuracy drops to 65%. The drop is possibly due to the manual work: we cannot say for certain which tag should be kept when both tags mean the same thing.

Conclusion: the second approach is better, as there is much less chance of a falsely high accuracy, and its accuracy is also reasonable considering that only ~19K documents were used for learning.](https://image.slidesharecdn.com/ireteam51-160415163026/75/Wikipedia-Document-Classification-14-2048.jpg)
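The modification described above can be sketched as follows. The slides do not say exactly how documents were eliminated or how similar tags were compared, so everything here is an assumption made for illustration: documents are dropped when no meaningful tag remains, each similar-tag pair is treated as one group, and the names `filter_documents` and `matches` are hypothetical.

```python
# Tags named in the text as carrying no topical meaning.
UNINFORMATIVE = {"wikipedia", "wiki", "reference"}

# Similar-tag pairs listed in the text; treating each pair as one group
# is an assumption of this sketch.
SIMILAR_GROUPS = [
    {"research", "science"},
    {"web", "internet"},
    {"programming", "math"},
    {"literature", "language"},
]


def filter_documents(doc_to_tags):
    """Drop uninformative tags, then drop documents left with no tags."""
    cleaned = {}
    for doc_id, tags in doc_to_tags.items():
        kept = [tag for tag in tags if tag not in UNINFORMATIVE]
        if kept:
            cleaned[doc_id] = kept
    return cleaned


def matches(predicted_tag, target_tags, lenient=True):
    """Lenient: a tag from the same similar-tag group counts. Strict: exact tag only."""
    if predicted_tag in target_tags:
        return True
    if not lenient:
        return False
    for group in SIMILAR_GROUPS:
        if predicted_tag in group and group & set(target_tags):
            return True
    return False


docs = {"d1": ["wiki", "science"], "d2": ["wikipedia", "reference"]}
print(filter_documents(docs))
print(matches("research", ["science"]), matches("research", ["science"], lenient=False))
```

The lenient and strict modes correspond to keeping all similar tags versus keeping just one, which is the distinction behind the 80% and 65% figures reported above.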