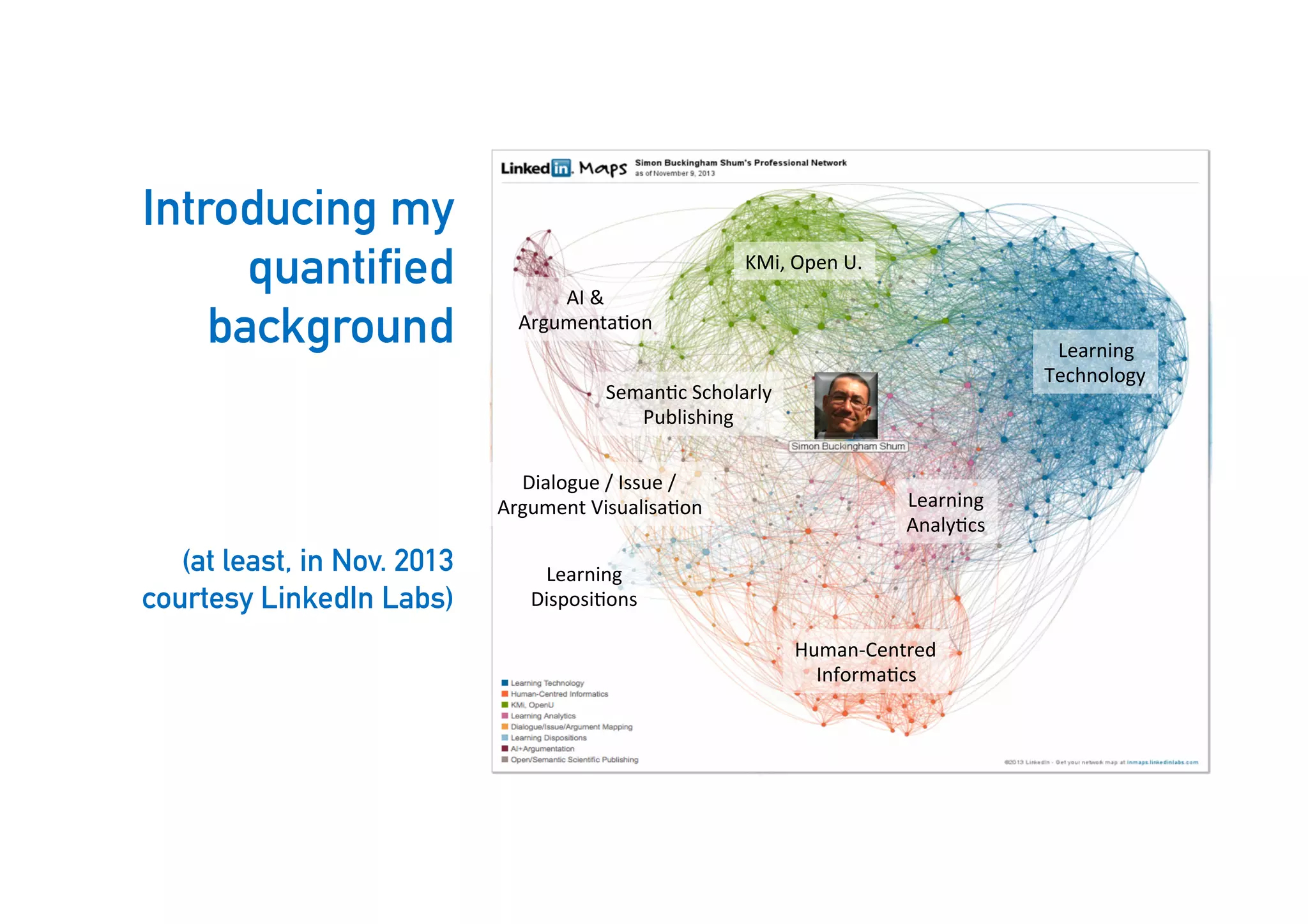

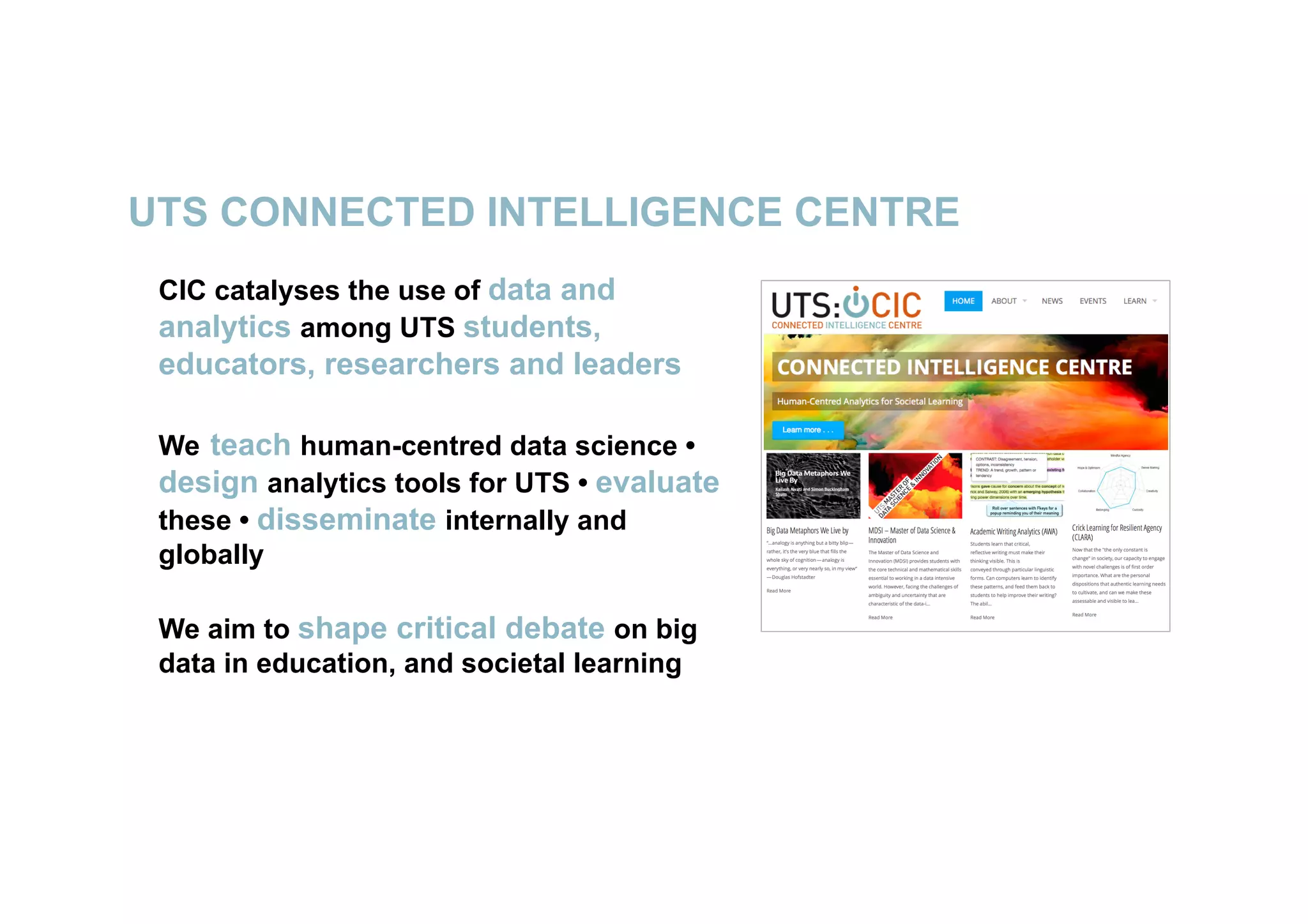

Simon Buckingham Shum is a professor of learning informatics and director of the Connected Intelligence Centre at the University of Technology Sydney. He discusses how large-scale data and analytics are changing society and education. His research focuses on developing human-centered approaches to data science, machine learning, and analytics to ensure they are used ethically and for the benefit of learning. He argues that analytics require a critical perspective to avoid potential harms, and that they should seek to understand issues beyond surface-level metrics.

![Intelligent tutoring for skills mastery (CMU)
Lovett M, Meyer O and Thille C. (2008) The Open Learning Initiative: Measuring the effectiveness of the OLI statistics course in accelerating student learning. Journal of Interactive Media in Education 14. http://jime.open.ac.uk/article/2008-14/352
“In this study, results showed that OLI-Statistics students [blended learning] learned a full semester’s worth of material in half as much time and performed as well or better than students learning from traditional instruction over a full semester.”](https://image.slidesharecdn.com/sbskeynotelibrarydatacarpentry2016-160712005315/75/Who-are-you-and-makes-you-special-26-2048.jpg)

![Framing future knowledge infrastructures
http://knowledgeinfrastructures.org
This too, however, is not a neutral feature. As knowledge infrastructures shape, generate and distribute knowledge, they do so differentially, often in ways that encode and reinforce existing interests and relations of power. […] At scale, the effect of these choices may be an aggregate imbalance in the structure and distribution of our knowledge.](https://image.slidesharecdn.com/sbskeynotelibrarydatacarpentry2016-160712005315/75/Who-are-you-and-makes-you-special-43-2048.jpg)

![Bowker, G. C. and Star, L. S. (1999). Sorting Things Out: Classification and Its Consequences. MIT Press, Cambridge, MA, pp. 277, 278, 281
“Classification systems provide both a warrant and a tool for forgetting [...] what to forget and how to forget it [...] The argument comes down to asking not only what gets coded in but what gets coded out of a given scheme.”](https://image.slidesharecdn.com/sbskeynotelibrarydatacarpentry2016-160712005315/75/Who-are-you-and-makes-you-special-46-2048.jpg)

![Selwyn, N. (2014). Data entry: towards the critical study of digital data and education. Learning, Media and Technology. http://dx.doi.org/10.1080/17439884.2014.921628
“observing, measuring, describing, categorising, classifying, sorting, ordering and ranking). […] these processes of meaning-making are never wholly neutral, objective and ‘automated’ but are fraught with problems and compromises, biases and omissions.”](https://image.slidesharecdn.com/sbskeynotelibrarydatacarpentry2016-160712005315/75/Who-are-you-and-makes-you-special-47-2048.jpg)

![http://governingalgorithms.org
In an increasingly algorithmic world […] What, then, do we talk about when we talk about “governing algorithms”?](https://image.slidesharecdn.com/sbskeynotelibrarydatacarpentry2016-160712005315/75/Who-are-you-and-makes-you-special-49-2048.jpg)