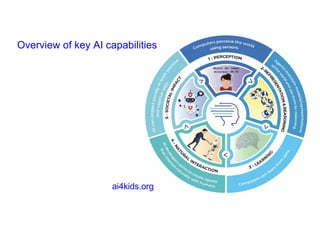

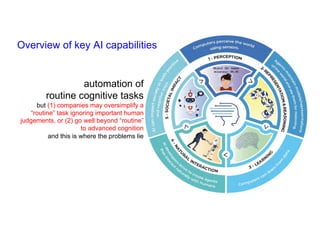

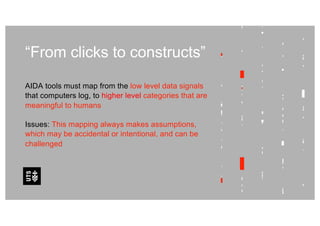

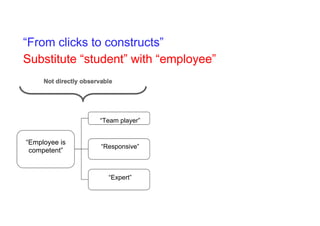

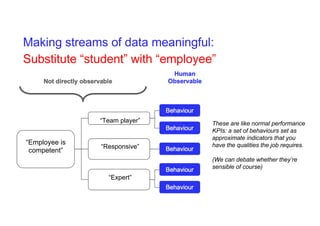

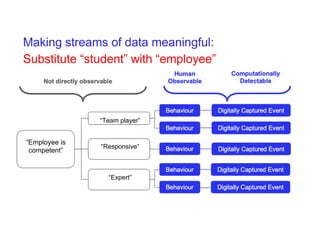

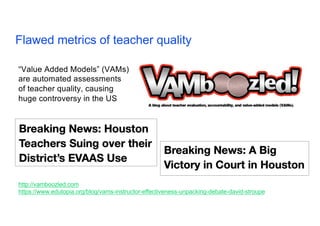

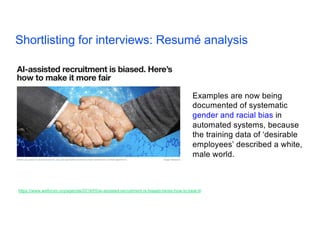

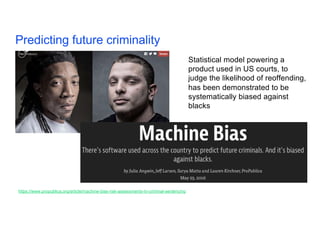

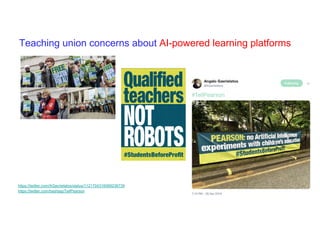

The document discusses key concepts and capabilities of AI and data analytics, highlighting issues such as the potential for bias in automated systems and the oversimplification of human tasks. It emphasizes the importance of meaningful data mapping and the risks associated with predictive modeling and profiling. Additionally, the document touches on the role of trade unions in addressing AI challenges and the need for effective reskilling and upskilling of employees.

![https://www.weforum.org/reports/the-future-of-jobs-report-2018 “Insufficient reskilling and upskilling: Employers indicate that they are set to prioritize and focus their re- and upskilling efforts on employees currently performing high-value roles as a way of strengthening their enterprise’s strategic capacity [...] In other words, those most in need of reskilling and upskilling are least likely to receive such training.” (p.ix)](https://image.slidesharecdn.com/aidaethics-acossintro-sept2019-191213060117/85/AI-Data-Analytics-AIDA-Key-concepts-examples-risks-30-320.jpg)