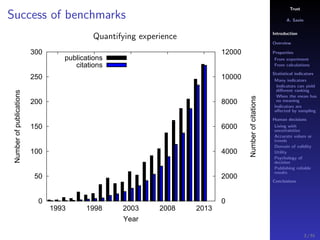

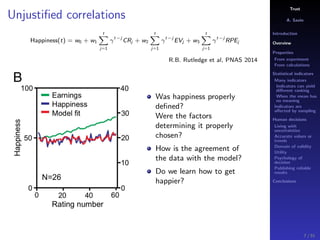

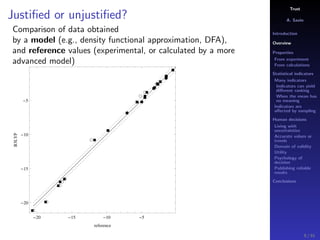

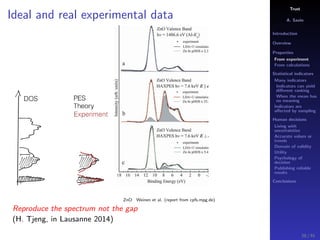

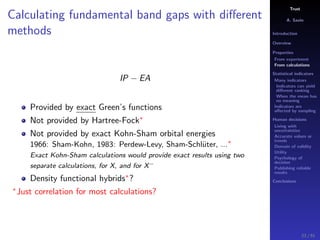

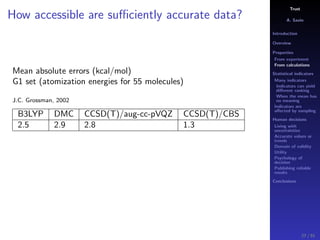

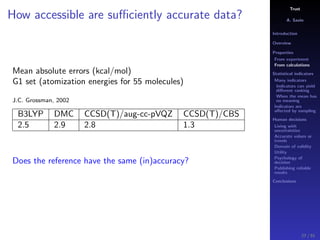

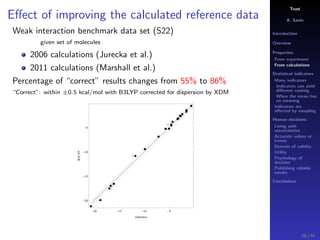

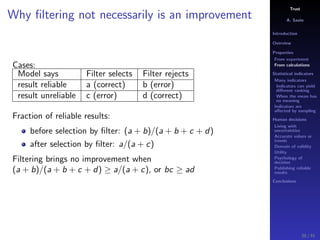

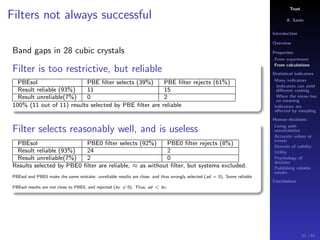

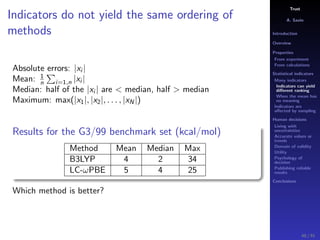

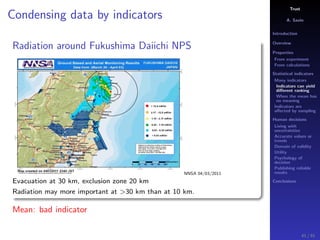

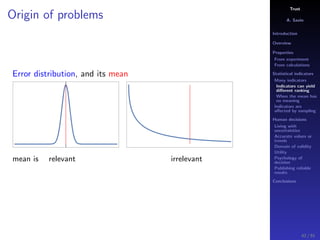

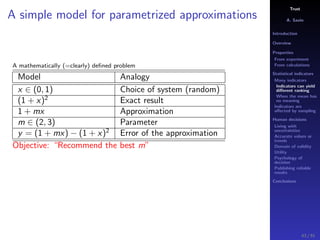

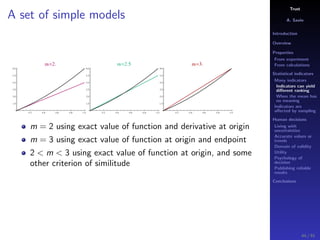

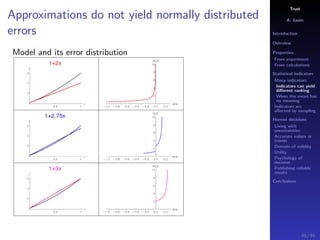

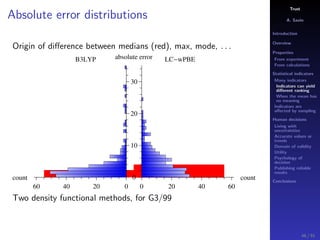

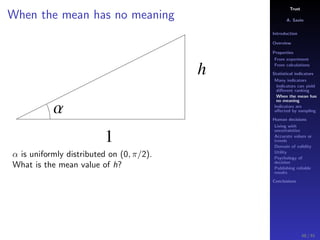

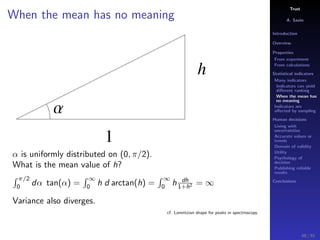

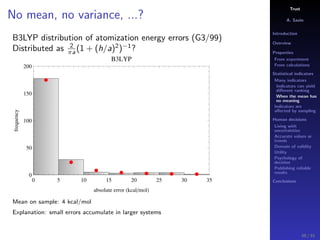

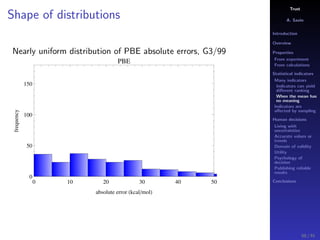

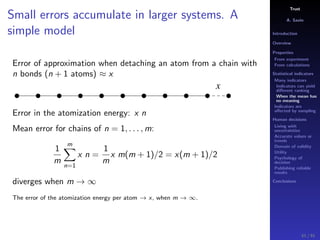

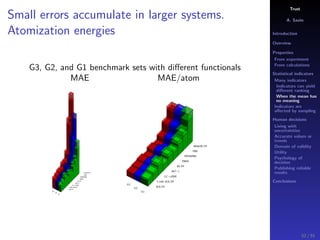

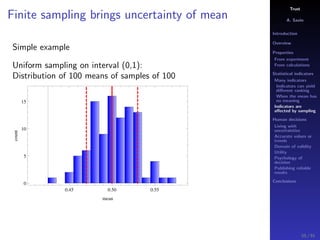

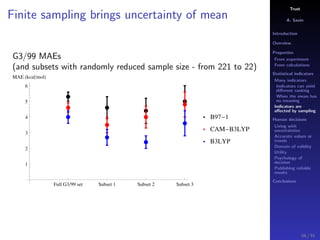

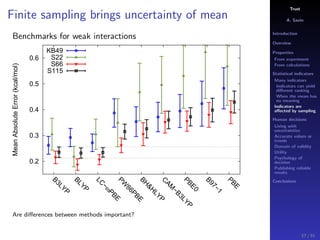

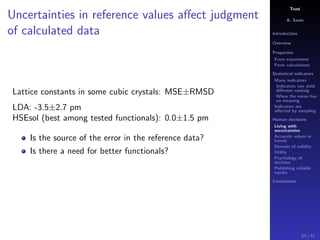

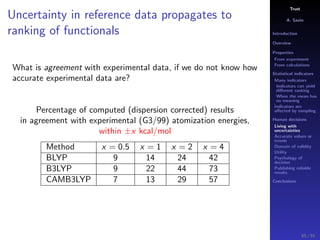

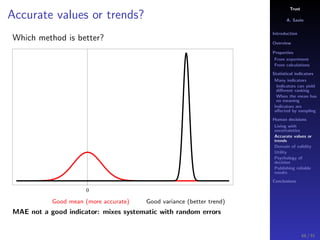

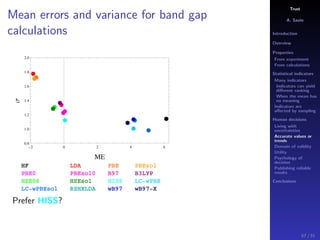

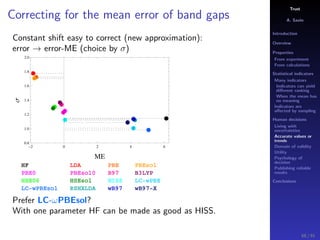

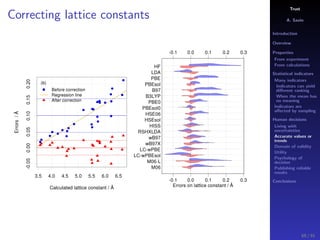

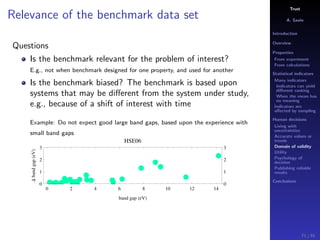

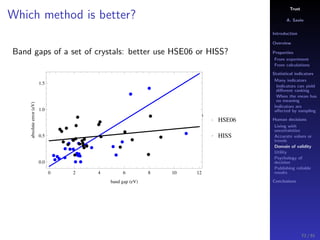

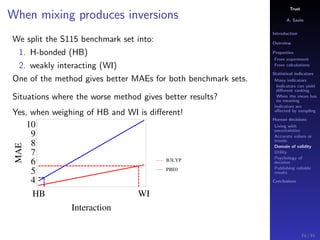

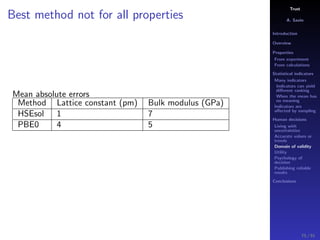

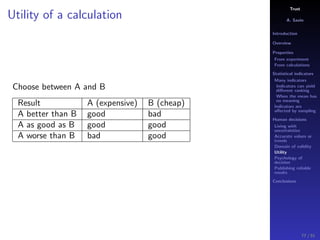

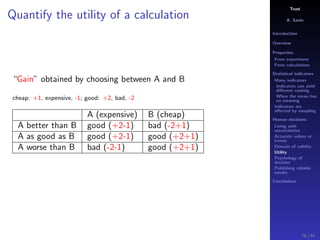

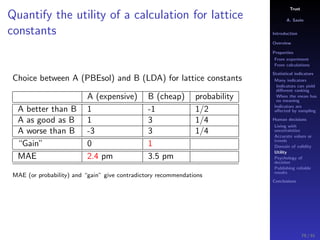

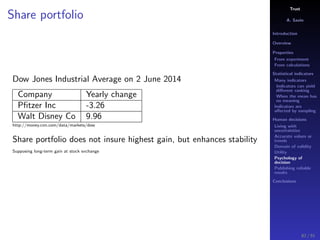

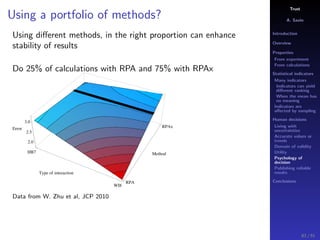

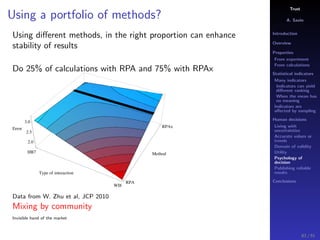

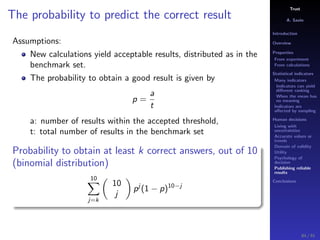

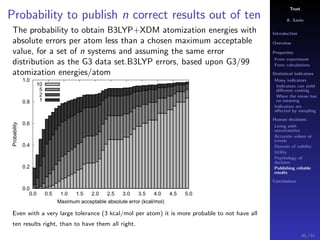

The document discusses the difficulty of interpreting statistical indicators and experimental data in the context of decision-making and reliability. It emphasizes the influence of sampling and human judgment, and the inherent uncertainty in deriving accurate values or trends from varied sources. It concludes that the methodologies behind statistical predictions need careful consideration and that results should be validated against benchmark data.