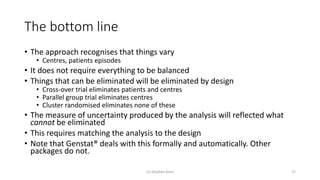

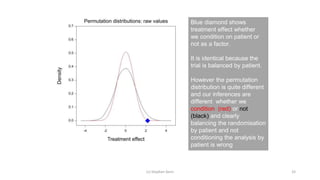

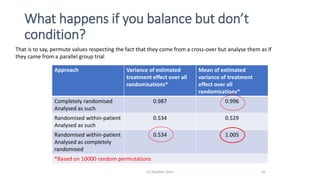

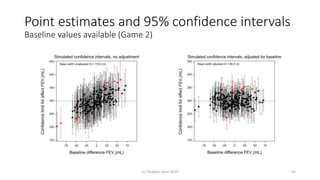

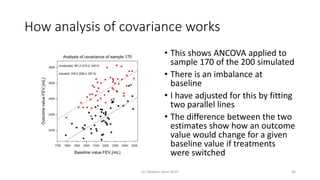

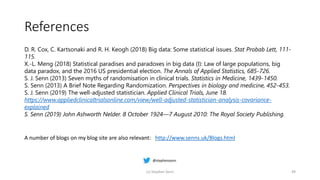

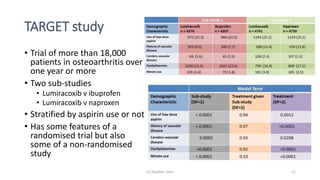

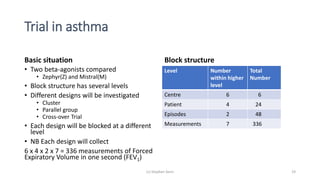

The document discusses the relationship between experiment design and data analysis, emphasizing that poorly designed studies can lead to misleading conclusions, regardless of the amount of data. It highlights the importance of careful experimental design while examining various statistical issues and challenges in clinical trials and observational studies. The author warns against over-reliance on big data claims, advocating for rigorous methodologies to ensure valid inferences.

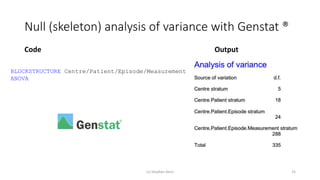

![Slide 26: Full (skeleton) analysis of variance with Genstat®. Additional code: TREATMENTSTRUCTURE Design[]; ANOVA. (Here Design[] is a pointer with values corresponding to each of the three designs.) (c) Stephen Senn](https://image.slidesharecdn.com/toinfinityandbeyondv2-201118220033/85/To-infinity-and-beyond-v2-26-320.jpg)