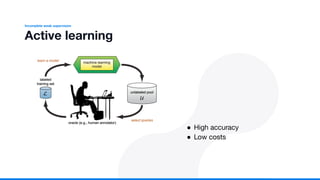

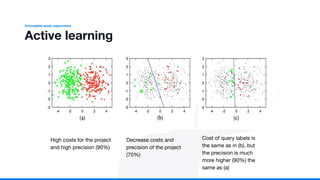

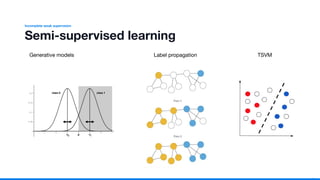

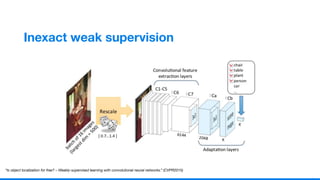

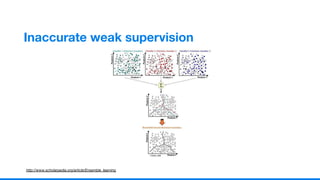

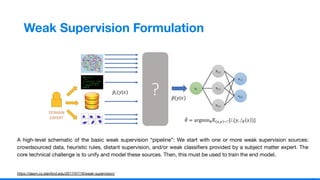

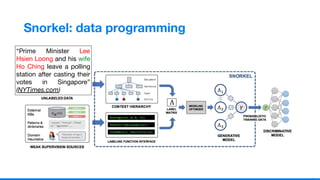

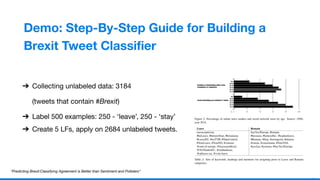

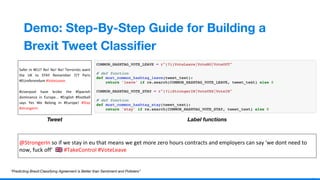

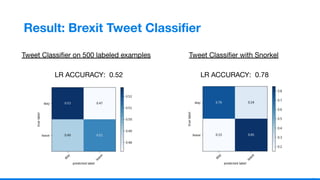

The document introduces weakly supervised learning and its three types (incomplete, inexact, and inaccurate supervision) and discusses best practices. It highlights the Snorkel system for managing training data and gives a step-by-step guide to building a Brexit tweet classifier. It also covers applications of weak supervision in several domains, including social media text mining and medical image classification.
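
To make the idea of programmatic labeling concrete, here is a minimal sketch of weak supervision applied to Brexit tweets. It does not use Snorkel's API and is not the classifier from the slides: the label names, the keyword heuristics, and the helper `weak_label` are all hypothetical, chosen only for illustration. Each labeling function votes for a class or abstains, and a simple majority vote combines the votes. Snorkel itself fits a label model over the labeling-function outputs rather than taking a plain majority vote.

```python
from collections import Counter

# Hypothetical label scheme for illustration; not taken from the slides.
ABSTAIN, LEAVE, REMAIN = -1, 0, 1


def lf_leave_hashtag(text):
    """Vote LEAVE if the tweet carries a (hypothetical) pro-Leave hashtag."""
    t = text.lower()
    return LEAVE if "#voteleave" in t or "#leaveeu" in t else ABSTAIN


def lf_remain_hashtag(text):
    """Vote REMAIN if the tweet carries a (hypothetical) pro-Remain hashtag."""
    t = text.lower()
    return REMAIN if "#remain" in t or "#strongerin" in t else ABSTAIN


def lf_take_back_control(text):
    """Vote LEAVE on a slogan commonly associated with the Leave campaign."""
    return LEAVE if "take back control" in text.lower() else ABSTAIN


LABELING_FUNCTIONS = [lf_leave_hashtag, lf_remain_hashtag, lf_take_back_control]


def weak_label(text):
    """Combine noisy votes by majority; abstain on no votes or a tie."""
    votes = [lf(text) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN  # conflicting heuristics cancel out
    return ranked[0][0]


if __name__ == "__main__":
    for tweet in [
        "Time to take back control #VoteLeave",
        "We are #StrongerIn Europe",
        "Lovely weather in London today",
    ]:
        print(weak_label(tweet), tweet)
```

The weak labels produced this way are noisy (inaccurate supervision) and leave some tweets unlabeled (incomplete supervision); they would typically be used to train a downstream classifier rather than as final predictions.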