1. The presentation describes the development and trial of the Accessibility Evaluation Assistant (AEA), a tool designed to help novice evaluators learn how to assess websites for accessibility manually.

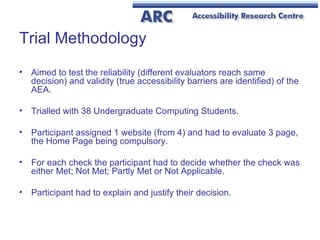

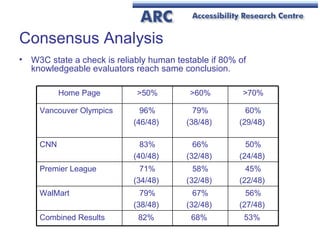

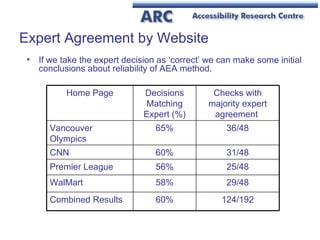

2. A trial of the AEA tool involved 38 undergraduate students evaluating the accessibility of pages on 4 different websites.

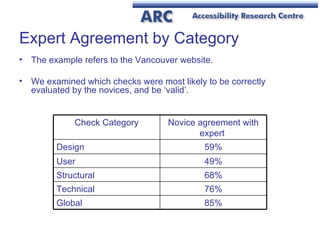

3. The results showed that, when guided by the AEA, novice evaluators could reliably identify accessibility barriers. However, some check categories, such as subjective user checks, produced lower agreement with expert evaluators. The AEA shows promise as an educational tool for developing evaluation skills.

![Development and Trial of an Educational Tool to Support the Accessibility Evaluation Process. Christopher Bailey, Dr. Elaine Pearson, Teesside University, [email_address]](https://image.slidesharecdn.com/w4abaileydevelopmenttrialeducationalevaluation-110329163128-phpapp02/75/W4A2011-C-Bailey-1-2048.jpg)

![Accessibility Evaluation Assistant, http://arc.tees.ac.uk/aea. Christopher Bailey, Dr. Elaine Pearson, Teesside University, [email_address]. Questions?](https://image.slidesharecdn.com/w4abaileydevelopmenttrialeducationalevaluation-110329163128-phpapp02/85/W4A2011-C-Bailey-13-320.jpg)