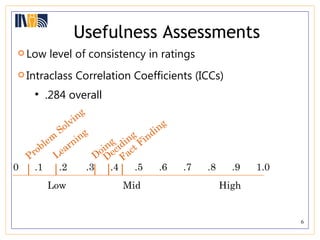

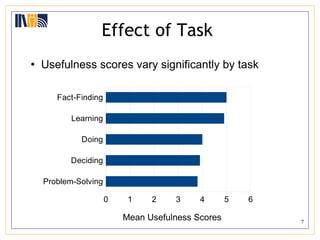

This document reports on a user study that examined how task and document genre influence perceptions of document usefulness. Twenty-five participants assessed the usefulness of 160 documents from the Canadian government website across 5 different tasks. Usefulness scores varied significantly by task and genre: news articles, for example, were seen as less useful than guides or homepages. Genre influenced perceptions for tasks such as deciding or learning, but not for simpler or more complex tasks. The study also found that participants had difficulty identifying genres.

![Genre Identification. Challenging task: “[I] didn’t really know how to describe them other than information. They were reports of web site pages with info to me.” Participants chose the same label as the expert for 52% of documents, but only 25% of all labels matched the expert assessment due to heavy use of multiple labels. For most genres, the label most commonly applied by assessors as a group matched the expert assessment.](https://image.slidesharecdn.com/presentation-111206163408-phpapp01/85/Presentation-10-320.jpg)
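
The gap between the two agreement figures on this slide (52% of documents versus 25% of labels) follows from participants applying several labels per document. The sketch below illustrates the arithmetic on a toy example. The data, the genre names, and the function `agreement_rates` are hypothetical, and the study's exact computation is not stated in the source, so treat this as one plausible reading rather than the authors' method.

```python
def agreement_rates(expert, assessed):
    """Return (document-level, label-level) agreement with the expert.

    expert:   dict mapping document id -> the single expert genre label
    assessed: dict mapping document id -> set of labels one participant chose
    Both structures are assumptions made for illustration.
    """
    # A document counts as a match if the expert label is among the chosen labels.
    doc_hits = sum(1 for doc, labels in assessed.items() if expert[doc] in labels)
    # Every chosen label counts toward the label-level denominator.
    total_labels = sum(len(labels) for labels in assessed.values())
    # Each matched document contributes exactly one matching label.
    label_hits = doc_hits
    return doc_hits / len(assessed), label_hits / total_labels


# Hypothetical toy data: four documents, eight labels applied in total.
expert = {"d1": "guide", "d2": "news", "d3": "homepage", "d4": "report"}
assessed = {
    "d1": {"guide", "report", "faq"},
    "d2": {"news", "report"},
    "d3": {"guide"},
    "d4": {"news", "faq"},
}

doc_rate, label_rate = agreement_rates(expert, assessed)
print(f"documents matched: {doc_rate:.0%}, labels matched: {label_rate:.0%}")
```

In this toy case two of four documents include the expert label, but only two of the eight applied labels match, so the label-level rate is half the document-level rate. Extra labels leave the document-level rate unchanged while enlarging the label-level denominator.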