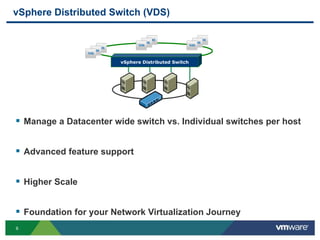

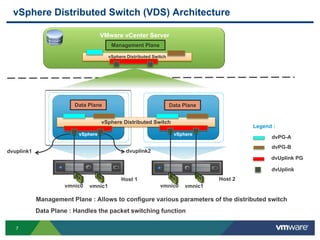

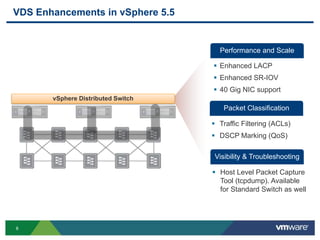

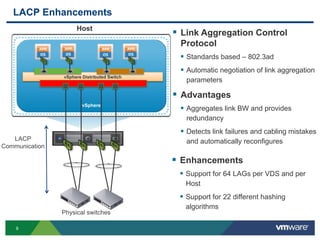

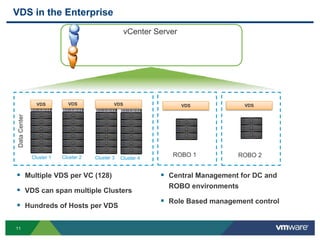

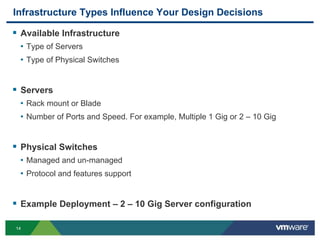

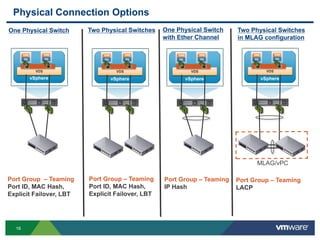

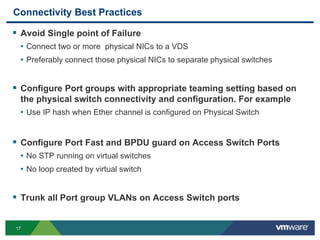

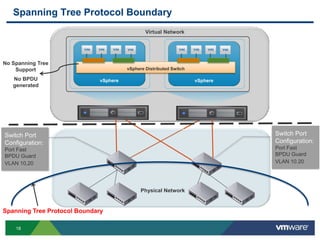

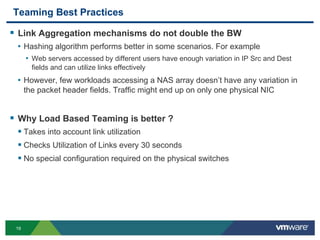

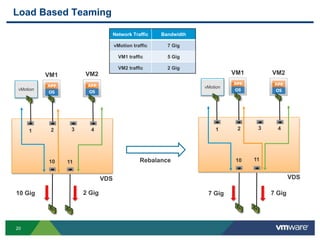

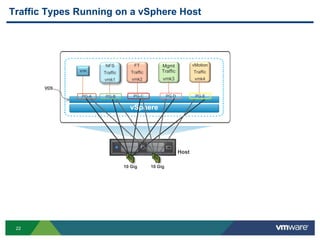

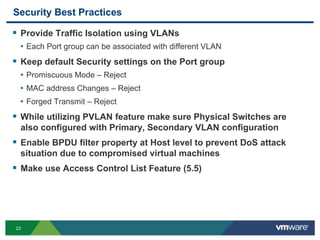

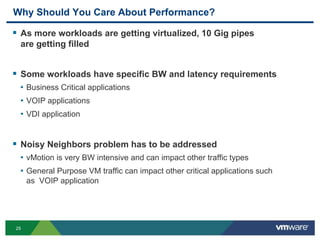

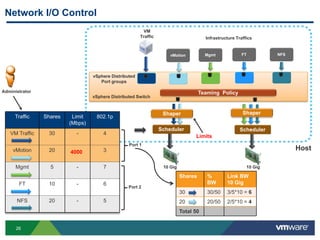

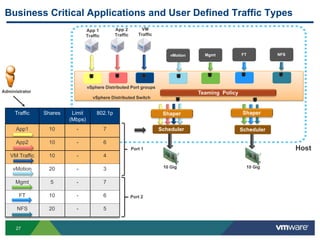

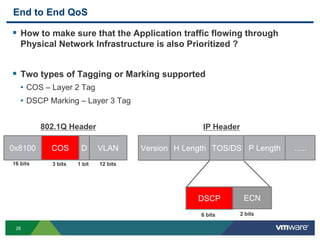

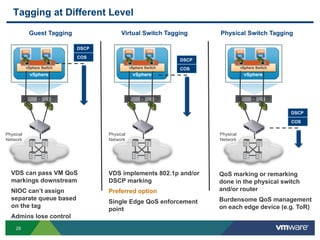

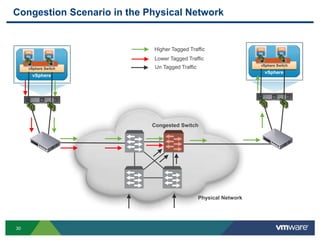

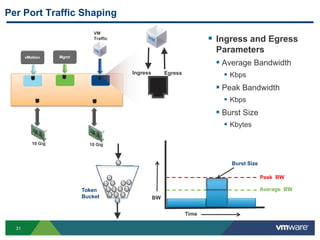

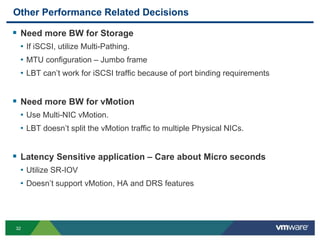

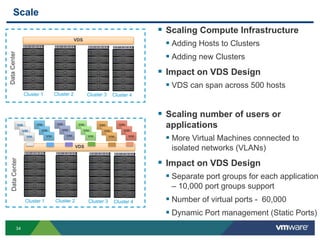

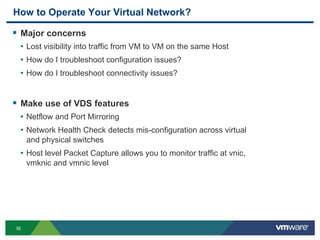

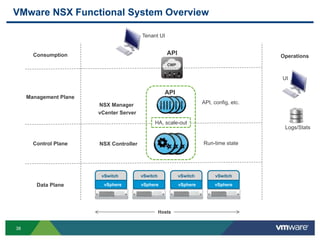

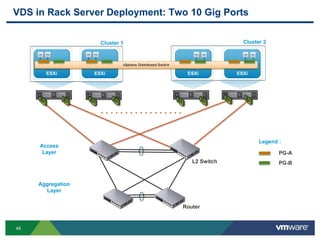

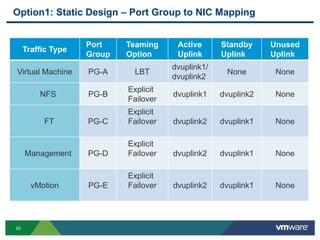

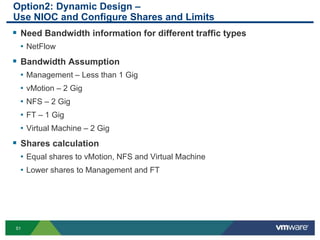

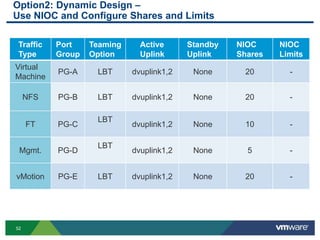

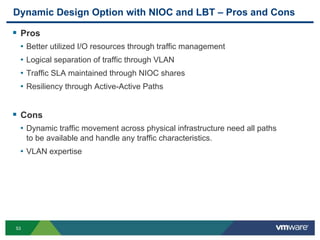

The document outlines design guidance and best practices for VMware's vSphere Distributed Switch (VDS), highlighting the new capabilities and enhancements in version 5.5. It covers deployment strategies, infrastructure design goals, security measures, and performance issues, and it stresses the importance of features such as Network I/O Control (NIOC) and Load Based Teaming (LBT). It also provides recommendations for building a reliable, secure, and scalable virtual network architecture.