This deck, "Transfusion / π0 / π0.5", introduces robot foundation models that integrate vision, language, and action.

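The models build on a Transformer that combines diffusion with autoregression, and π0.5 additionally supports reasoning and planning in open-world settings.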

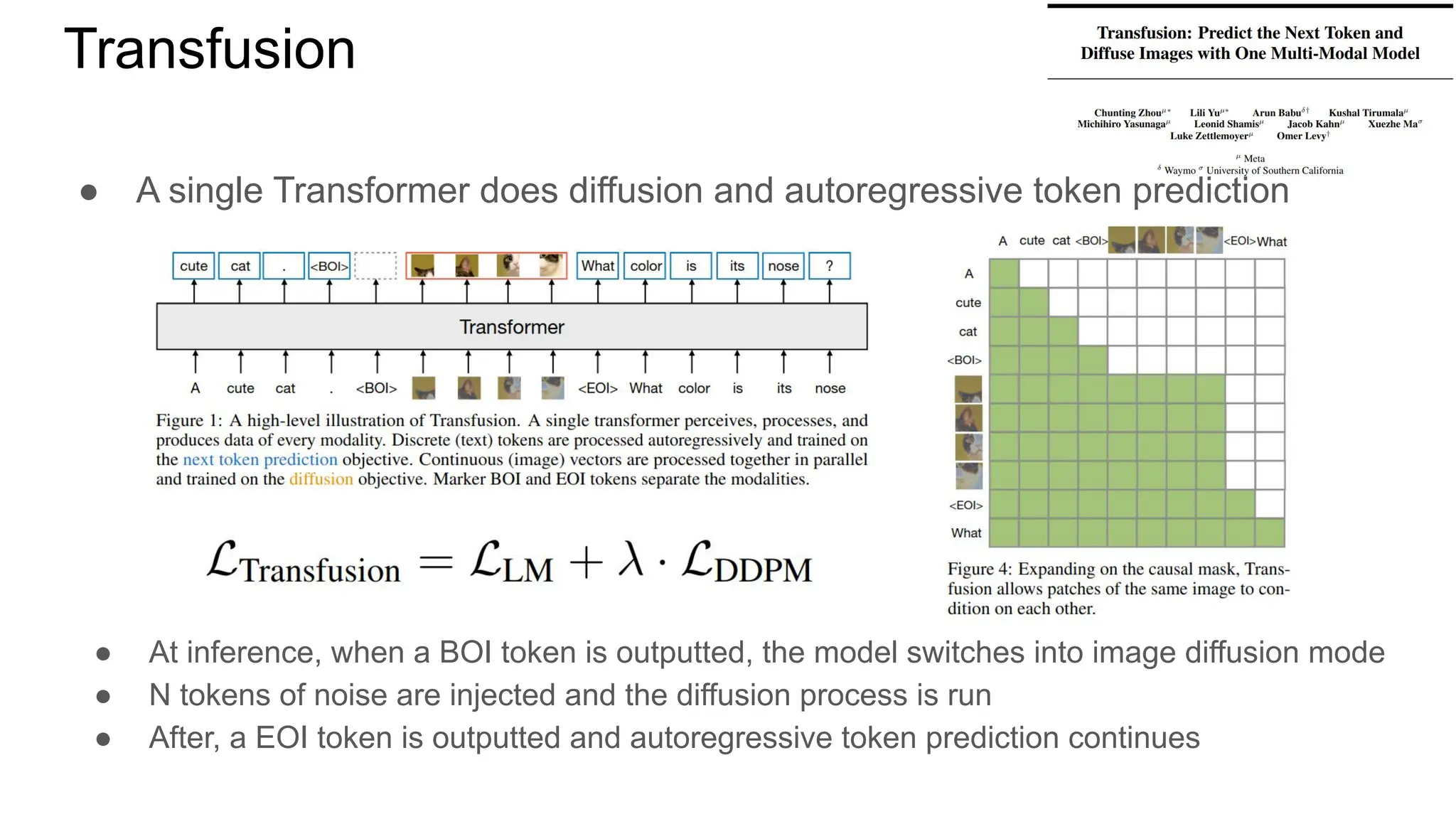

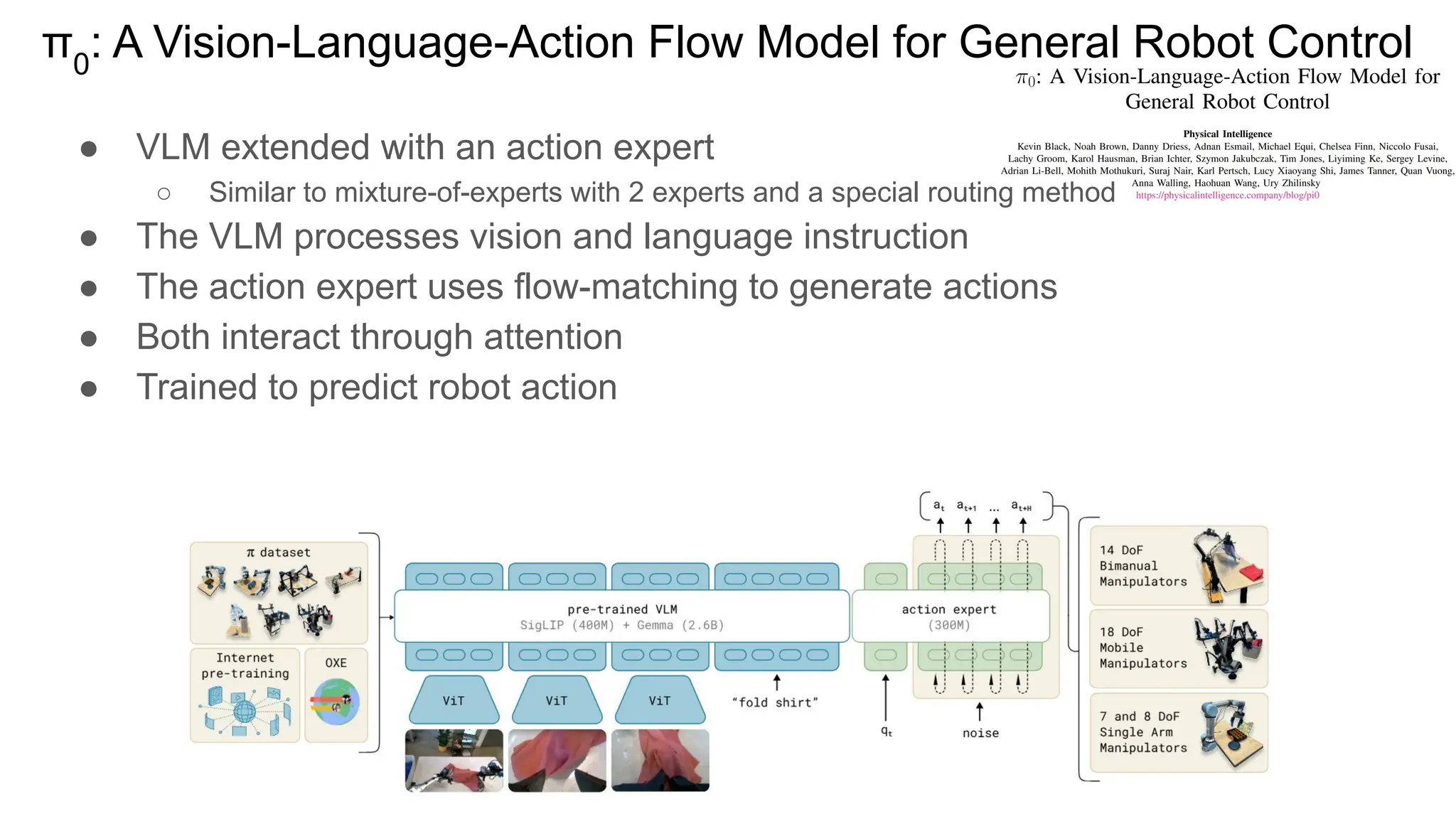

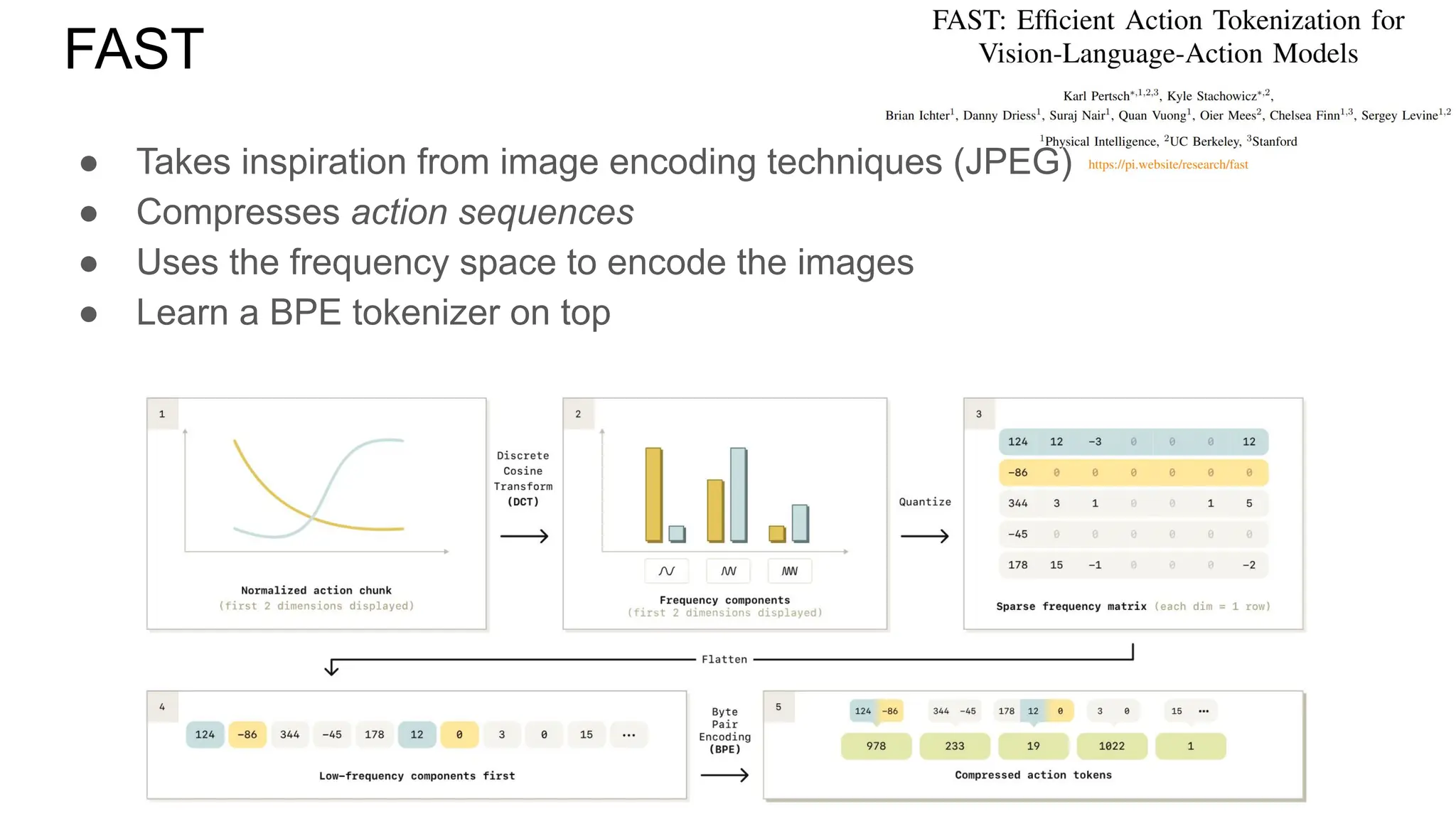

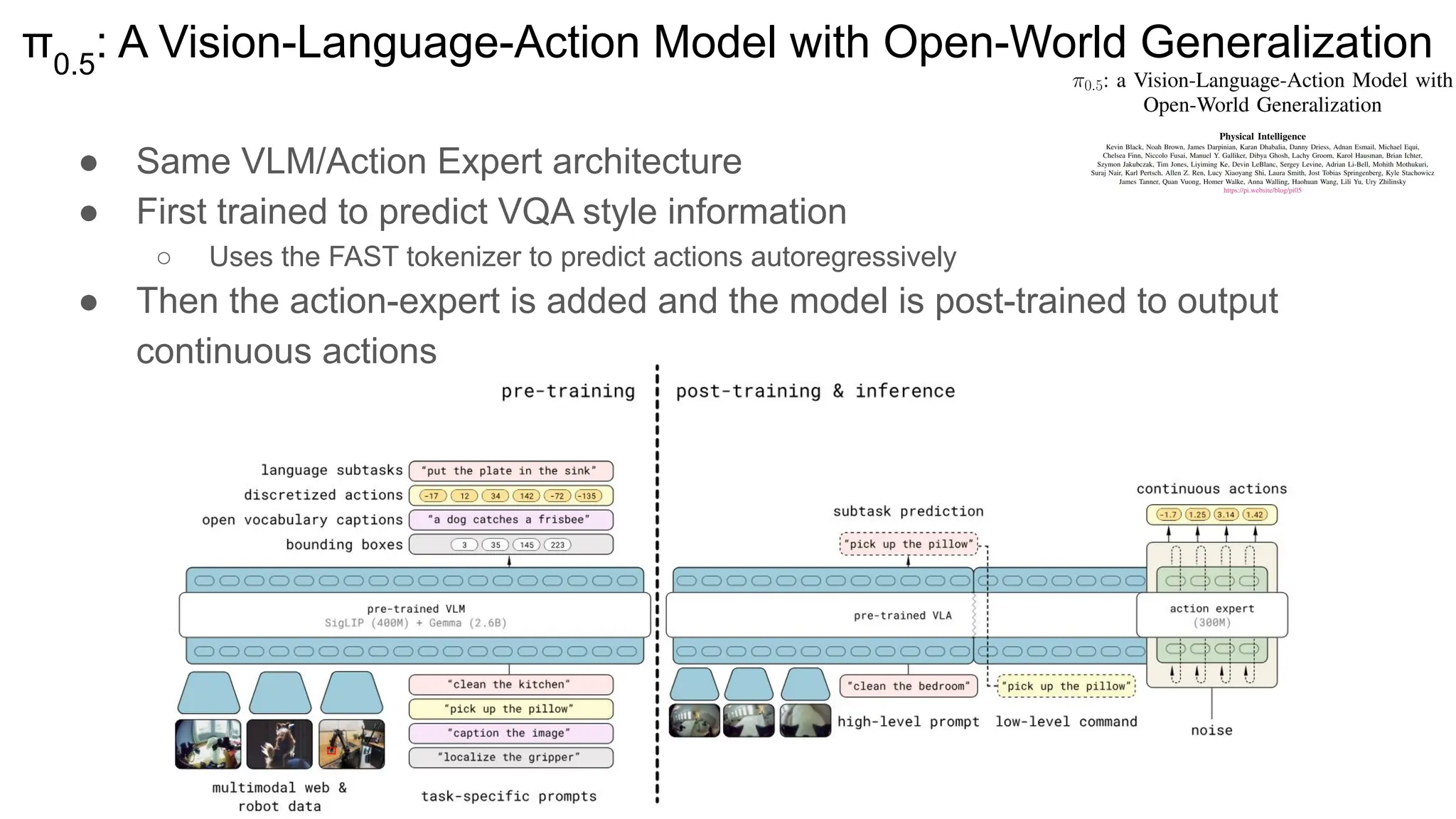

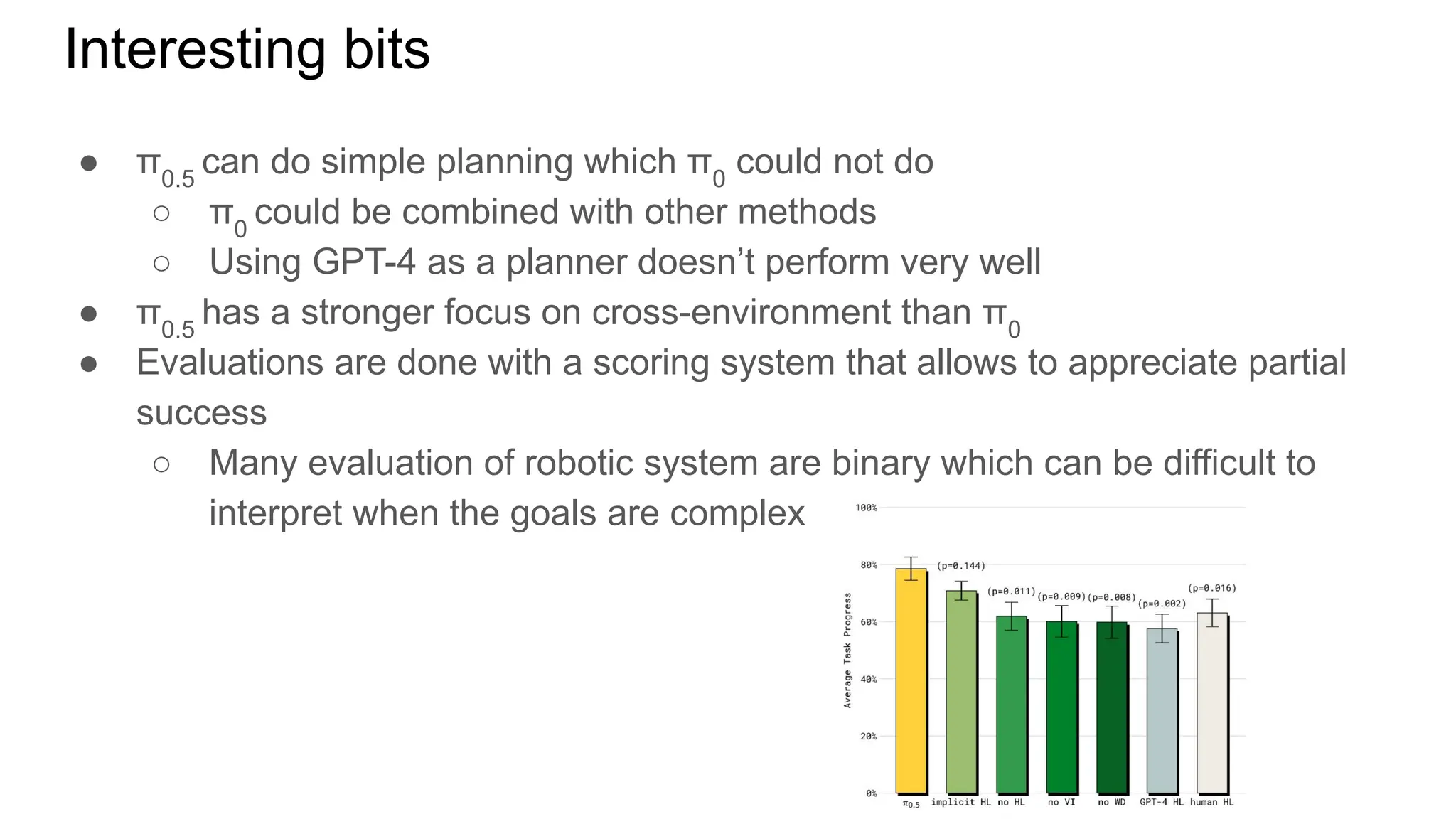
