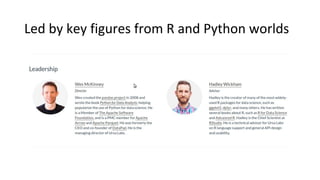

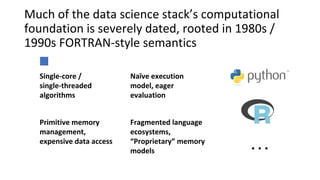

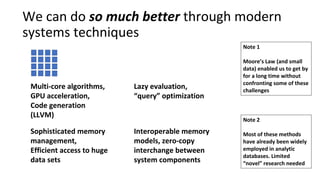

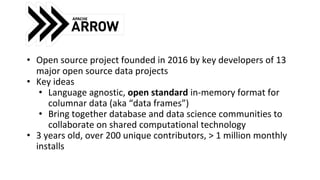

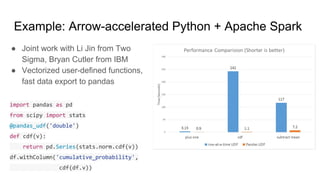

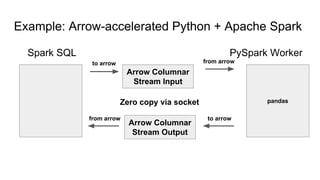

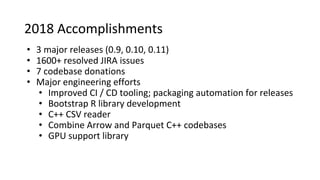

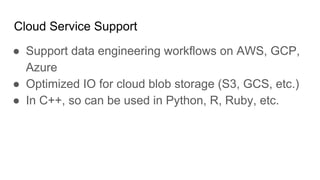

Ursa Labs, founded by core developers of major open-source data projects, aims to advance the Apache Arrow ecosystem by applying modern computing techniques. Its work centers on Arrow's language-agnostic, columnar in-memory format. The format enables efficient data interchange and computation across languages and platforms, addressing limitations of traditional data science stacks. In 2018, the project made significant progress on file format support and on new frameworks. These included Arrow Flight, a framework for high-performance data transport, and Gandiva, an LLVM-based expression compiler. This work set the stage for accelerated growth in 2019.