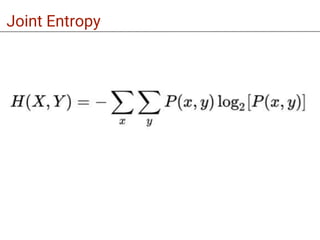

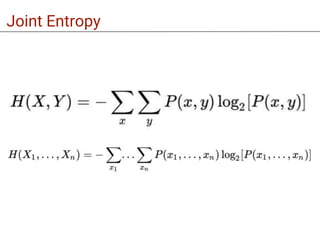

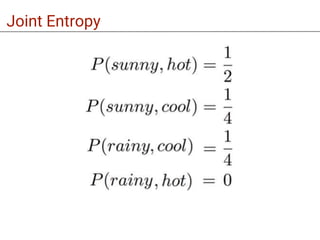

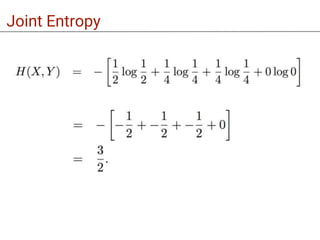

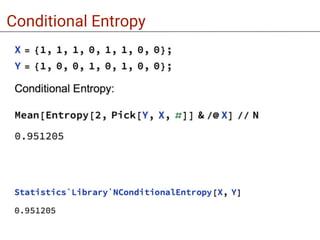

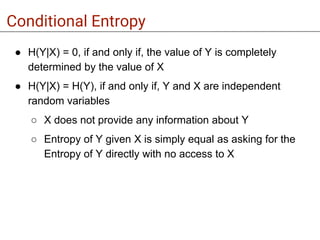

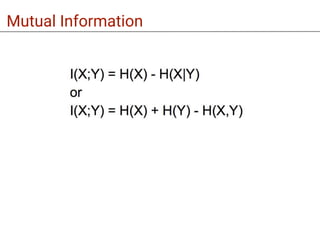

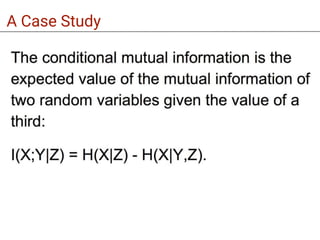

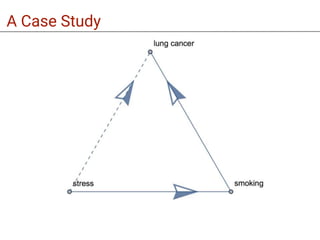

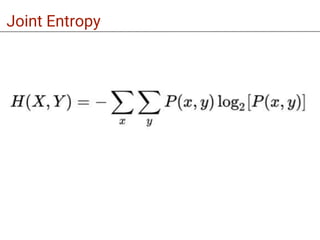

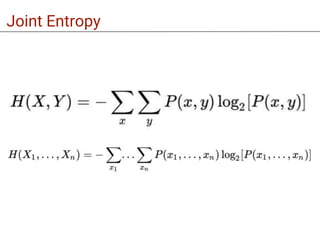

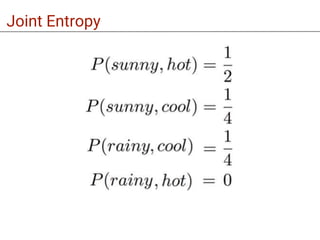

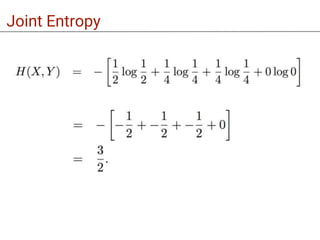

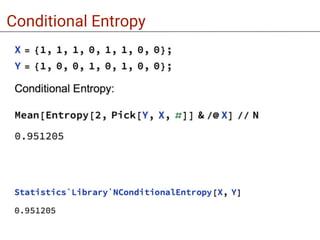

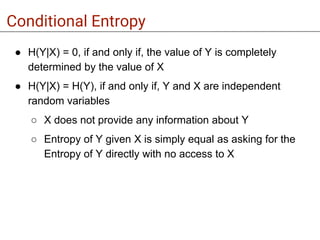

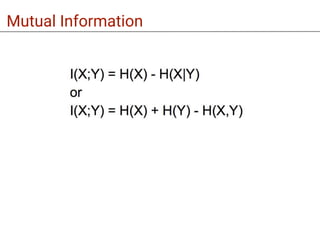

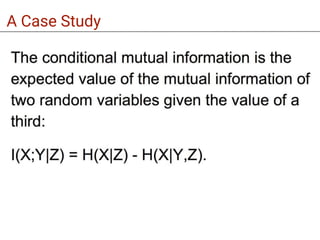

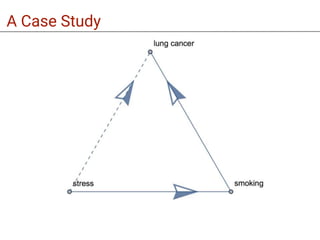

This presentation covers joint entropy, conditional entropy, and mutual information. Joint entropy, H(X, Y), is the entropy of two random variables considered together. Conditional entropy, H(Y | X), is the uncertainty that remains in one random variable once the other is known. The slides give examples of the two extreme cases: conditional entropy equal to 0 (one variable is fully determined by the other) and conditional entropy equal to the unconditioned entropy (the variables are independent). The presentation also mentions a case study that demonstrates these information-theoretic concepts.
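The quantities named in the summary can be illustrated with a small sketch. It is not taken from the slides: the function names and the two toy joint distributions are hypothetical and chosen only to show the two extreme cases of conditional entropy. It assumes a joint distribution given as a table `joint[x][y]` of probabilities, measures entropy in bits, and uses the standard identities H(Y | X) = H(X, Y) - H(X) and I(X; Y) = H(X) + H(Y) - H(X, Y).

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def joint_entropy(joint):
    """H(X, Y): entropy over every cell of the joint table joint[x][y]."""
    return entropy(p for row in joint for p in row)


def marginal_x(joint):
    """P(X = x), obtained by summing each row."""
    return [sum(row) for row in joint]


def marginal_y(joint):
    """P(Y = y), obtained by summing each column."""
    return [sum(col) for col in zip(*joint)]


def conditional_entropy_y_given_x(joint):
    """H(Y | X) via the chain rule H(Y | X) = H(X, Y) - H(X)."""
    return joint_entropy(joint) - entropy(marginal_x(joint))


def mutual_information(joint):
    """I(X; Y) = H(X) + H(Y) - H(X, Y)."""
    return (entropy(marginal_x(joint))
            + entropy(marginal_y(joint))
            - joint_entropy(joint))


# Hypothetical example 1: Y is an exact copy of a fair bit X.
deterministic = [[0.5, 0.0],
                 [0.0, 0.5]]

# Hypothetical example 2: X and Y are independent fair bits.
independent = [[0.25, 0.25],
               [0.25, 0.25]]

for name, table in [("deterministic", deterministic), ("independent", independent)]:
    print(name,
          "H(X,Y) =", joint_entropy(table),
          "H(Y|X) =", conditional_entropy_y_given_x(table),
          "I(X;Y) =", mutual_information(table))
```

In the first table, knowing X removes all uncertainty about Y, so H(Y | X) is 0 and the mutual information equals H(Y). In the second, knowing X says nothing about Y, so H(Y | X) equals H(Y) and the mutual information is 0.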