Fine-tuning Pre-Trained Models for Generative AI Applications

- 1. FINETUNING PRETRAINED MODELS FOR GENERATIVE AI APPLICATIONS Talk to our Consultant Listen to the article Generative AI has been gaining huge traction recently thanks to its ability to autonomously generate high-quality text, images, audio and other forms of content. It has various applications in di몭erent domains, from content creation and marketing to healthcare, software development and 몭nance.

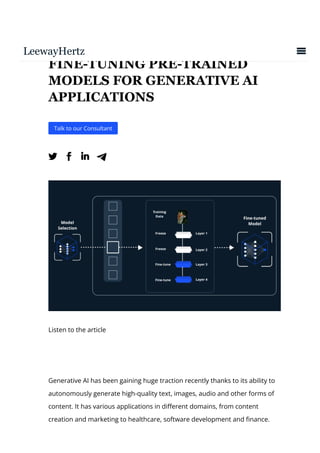

- 2. Applications powered by generative AI models can automate tedious and repetitive tasks in a business environment, showcasing intelligent decision- making skills. Whether chatbots, virtual assistants, or predictive analytics apps, generative AI revolutionizes businesses’ operations. It is, however, challenging to create models that can produce output that is both coherent and contextually relevant in generative AI applications. Pre- trained models emerge as a powerful solution to this issue. Because they are trained on massive amounts of data, pre-trained language models can generate text similar to human language. But there may be situations where pre-trained models do not perform optimally for a particular application or domain. A pre-trained model needs to be 몭ne-tuned in this situation. The 몭ne-tuning process involves updating pre-trained models with new information or data to help them adapt to speci몭c tasks or domains. During the process of 몭ne-tuning, the model is trained on a speci몭c set of data to customize it to a particular use case. As generative AI applications have grown in popularity, 몭ne-tuning has become an increasingly popular technique to enhance pre-trained models’ performance. What are pre-trained models? Popular pre-trained models for generative AI applications What does 몭ne-tuning a pre-trained model mean? How does 몭ne-tuning pre-trained models work? Understanding 몭ne-tuning with an example How to 몭ne-tune a pre-trained model? Best practices to follow when 몭ne-tuning a pre-trained model Bene몭ts of 몭ne-tuning pre-trained models for generative AI applications What generative AI development services does LeewayHertz o몭er? What are pretrained models? The term “pre-trained models” refers to models that are trained on large amounts of data to perform a speci몭c task, such as natural language

- 3. processing, image recognition, or speech recognition. Developers and researchers can use these models without having to train their own models from scratch since the models have already learned features and patterns from the data. In order to achieve high accuracy, pre-trained models are typically trained on large, high-quality datasets using state-of-the-art techniques. When compared to training a model from scratch, these pre-trained models can save developers and researchers time and money. It enables smaller organizations or individuals with limited resources to achieve impressive performance levels without requiring much data. Popular pretrained models for generative AI applications Some of the popular pre-trained models include: GPT-3 – Generative Pre-trained Transformer 3 is a cutting-edge model developed by OpenAI. It has been pre-trained on a large amount of text dataset to comprehend prompts entered in human language and generate human-like text. They can be e몭ciently 몭ne-tuned for language-related tasks like translation, question-answering and summarization. DALL-E – DALL-E is a language model developed by OpenAI for generating images from textual descriptions. Having been trained on a large dataset of images and descriptions, it can generate images that match the input descriptions. BERT – Bidirectional Encoder Representations from Transformers or BERT is a language model developed by Google and can be used for various tasks, including question answering, sentiment analysis, and language translation. It has been trained on a large amount of text data and can be 몭ne-tuned to handle speci몭c language tasks. StyleGAN – Style Generative Adversarial Network is another generative model developed by NVIDIA that generates high-quality images of animals, faces and other objects.

- 4. VQGAN + CLIP – This generative model, developed by EleutherAI, combines a generative model (VQGAN) and a language model (CLIP) to generate images based on textual prompts. With the help of a large dataset of images and textual descriptions, it can produce high-quality images matching input prompts. Whisper – Developed by OpenAI, Whisper is a versatile speech recognition model trained on a diverse range of audio data. It is a multi-task model capable of performing tasks such as multilingual speech recognition, speech translation, and language identi몭cation. What does finetuning a pretrained model mean? The 몭ne-tuning technique is used to optimize a model’s performance on a new or di몭erent task. It is used to tailor a model to meet a speci몭c need or domain, say cancer detection, in the 몭eld of healthcare. Pre-trained models are 몭ne-tuned by training them on large amounts of labeled data for a certain task, such as Natural Language Processing (NLP) or image classi몭cation. Once trained, the model can be applied to similar new tasks or datasets with limited labeled data by 몭ne-tuning the pre-trained model. The 몭ne-tuning process is commonly used in transfer learning, where a pre- trained model is used as a starting point to train a new model for a contrasting but related task. A pre-trained model can signi몭cantly diminish the labeled data required to train a new model, making it an e몭ective tool for tasks where labeled data is scarce or expensive. How does finetuning pretrained models work? Fine-tuning a pre-trained model works by updating the parameters utilizing the available labeled data instead of starting the training process from the ground up. The following are the generic steps involved in 몭ne-tuning: 1. Loading the pre-trained model: The initial phase in the process is to select

- 5. and load the right model, which has already been trained on a large amount of data, for a related task. 2. Modifying the model for the new task: Once a pre-trained model is loaded, its top layers must be replaced or retrained to customize it for the new task. Adapting the pre-trained model to new data is necessary because the top layers are often task speci몭c. 3. Freezing particular layers: The earlier layers facilitating low-level feature extraction are usually frozen in a pre-trained model. Since these layers have already learned general features that are useful for various tasks, freezing them may allow the model to preserve these features, avoiding over몭tting the limited labeled data available in the new task. 4. Training the new layers: With the labeled data available for the new task, the newly created layers are then trained, all the while keeping the weights of the earlier layers constant. As a result, the model’s parameters can be adapted to the new task, and its feature representations can be re몭ned. 5. Fine-tuning the model: Once the new layers are trained, you can 몭ne-tune the entire model on the new task using the available limited data.

- 6. Understanding finetuning with an example Suppose you have a pre-trained model trained on a wide range of medical data or images that can detect abnormalities like tumors and want to adapt the model for a speci몭c use case, say identifying a rare type of cancer, but you have a limited set of labeled data available. In such a case, you must 몭ne- tune the model by adding new layers on top of the pre-trained model and training the newly added layers with the available data. Typically, the earlier layers of a pre-trained model, which extract low-level features, are frozen to prevent over몭tting. How to finetune a pretrained model? Fine-tuning a pre-trained model involves the following steps: Choosing a pre-trained model The 몭rst step in 몭ne-tuning a pre-trained model involves selecting the right model. While choosing the model, ensure the pre-trained model you opt for suits the generative AI task you intend to perform. Here, we would be moving forward with OpenAI base models (Ada, Babbage, Curie and Davinci) to 몭ne- tune and incorporate them into our application. If you are confused about which OpenAI model to select for your right use case, you can refer to the comparison table below: Contact LeewayHertz’s AI experts for your project Fine-tune pre-trained generative AI models to build highly performant solutions Learn More

- 7. Ada Babbage Curie Pre-trained dataset Internet text Internet text Internet text Parameters 1.2 billion 6 billion 13 billion Released date 2020 2020 2021 Cost Least costly Lower cost than Curie Lower cost than Davinci Capability Can perform well if given more context More capable than Ada, but less e몭cient Can execute tasks that Ada or Babbage can do Tasks it can perform Apt for less nuanced tasks, like reformatting and parsing text or simple classi몭cation tasks Most suited for semantic search tasks. It can also do moderate classi몭cation tasks Handle complex classi몭cation tasks, sentiment analysis, summarization, chatbot applications and Q&A Unique features Fastest model Perform straightforward tasks Balances speed and power

- 8. Once you 몭gure out the right model for your speci몭c use case, start installing the dependencies and preparing the data. Installation It is suggested to use OpenAI’s Command-line Interface (CLI). Run the following command to install it: pip install upgrade openai To set your OPENAI_API_KEY environment variable, you can add the following line to your shell initialization script (such as .bashrc, zshrc, etc.) or run it in the command line before executing the 몭ne-tuning command. export OPENAI_API_KEY="<OPENAI_API_KEY>" Data preparation Before 몭ne-tuning the model, preparing the data corresponding to your particular use case is crucial. The raw data cannot be directly fed into the model as it requires 몭ltering, formatting and pre-processing into a speci몭c format. The data needs to be organized and arranged systemically so the model can interpret and analyze the data easily. For our application, the data must be transformed into JSONL format, where each line represents a training example of a prompt-completion pair. You can utilize OpenAI’s CLI data preparation tool to convert your data into this 몭le format e몭ciently. {"prompt": "<prompt text>", "completion": "<ideal generated text>"} {"prompt": "<prompt text>", "completion": "<ideal generated text>"} {"prompt": "<prompt text>", "completion": "<ideal generated text>"} ... CLI data preparation tool

- 9. OpenAI’s CLI data preparation tool validates, provides suggestions and reformats your data. Run the following command: openai tools fine_tunes.prepare_data f <LOCAL_FILE> A JSONL 몭le can be generated from any type of 몭le, whether a CSV, JSON, XLSX, TSV or JSONL 몭le. Ensure that a prompt and completion key/column is included. Develop a 몭ne-tuned model Once you have prepared and pre-processed the training data and converted it into JSONL 몭le, you can begin the 몭ne-tuning process utilizing the OpenAI CLI: openai api fine_tunes.create t <TRAIN_FILE_ID_OR_PATH> m <BASE_MODEL In the above command, the ‘BASE_MODEL’ should be the name of the base model you chose, be it Babbage, Curie, Ada or Davinci. The above commands result in the following things: Develops a 몭ne-tuned job. Uploads the 몭le utilizing the 몭les API or avails an already-uploaded 몭le. Events are streamed until the task is complete. Once you begin the 몭ne-tuning job, it takes some time to complete. Depending on the size of your dataset and model, your job may be queued behind other jobs in our system. If the streaming of the event is interrupted due to any reason, you can run the following command to resume it: openai api fine_tunes.follow i <YOUR_FINE_TUNE_JOB_ID> After the job is completed, it will display the name of the 몭ne-tuned model. Use a 몭ne-tuned model When you successfully develop a 몭ne-tuned model, the 몭eld,

- 10. When you successfully develop a 몭ne-tuned model, the 몭eld, ‘FINE_TUNED_MODEL’, will print the name of your customized model, for example, “curie:ft-personal-2023-03-01-11-00-50.” You can specify this model as a parameter for OpenAI’s Completions API and utilize Playground to submit requests. The model may not be ready to handle requests immediately after your job completes. It is likely that your model is still being loaded if completion requests time out. In this case, try again later. To begin making requests, include the model’s name as the ‘model’ parameter in a completion request. Here’s an example using OpenAI CLI: openai api completions.create m <FINE_TUNED_MODEL> p <YOUR_PROMPT> The code snippet using Python may look like this: import openai openai.Completion.create( model=FINE_TUNED_MODEL, prompt=YOUR_PROMPT) In the above code, other than the ‘model’ and ‘prompt,’ you can also use other completion parameters like ‘frequency_penalty,’ ‘max_tokens,’ ‘temperature,’ ‘presence_penalty,’ and so on. Validation Once the model is 몭ne-tuned, run the 몭ne-tuned model on a separate validation dataset to assess its performance. To perform validation, you must reserve some data before 몭ne-tuning the model. The reserved data should have the same format as the training data and be mutually exclusive. Including a validation 몭le in the 몭ne-tuning process allows for periodic evaluations of the model’s performance against the validation data.

- 11. openai api fine_tunes.create t <TRAIN_FILE_ID_OR_PATH> v <VALIDATION_FILE_ID_OR_PATH> m <MODEL> By performing this step, you can identify any potential issues and 몭ne-tune the model further to make it more accurate. Best practices to follow when finetuning a pretrained model While 몭ne-tuning a pre-trained model, several best practices can help ensure successful outcomes. Here are some key practices to follow: 1. Understand the pre-trained model: Gain a comprehensive understanding of the pre-trained model architecture, its strengths, limitations, and the task it was initially trained on. This knowledge can enhance the 몭ne-tuning process and help make appropriate modi몭cations. 2. Select a relevant pre-trained model: Choose a pre-trained model that aligns closely with the target task or domain. A model trained on similar data or a related task will provide a better starting point for 몭ne-tuning. 3. Freeze early layers: Typically, the lower layers of a pre-trained model capture generic features and patterns. Freeze these early layers during 몭ne- tuning to preserve the learned representations. This practice helps prevent catastrophic forgetting and lets the model focus on task-speci몭c 몭ne-tuning. 4. Adjust learning rate: Experiment with di몭erent learning rates during 몭ne- tuning. It is typical to use a smaller learning rate compared to the initial pre- training phase. A lower learning rate allows the model to adapt more gradually and prevent drastic changes that could lead to over몭tting. 5. Utilize transfer learning techniques: Transfer learning methods can enhance 몭ne-tuning performance. Techniques like feature extraction, where pre-trained layers are used as 몭xed feature extractors, or gradual unfreezing, where layers are unfrozen gradually during training, can help preserve and transfer valuable knowledge.

- 12. 6. Regularize the model: Apply regularization techniques, such as dropout or weight decay, during 몭ne-tuning to prevent over몭tting. Regularization helps the model generalize better and reduces the risk of memorizing speci몭c training examples. 7. Monitor and evaluate performance: Continuously monitor and evaluate the performance of the 몭ne-tuned model on validation or holdout datasets. Use appropriate evaluation metrics to assess the model’s progress and make informed decisions on further 몭ne-tuning adjustments. 8. Data augmentation: Augment the training data by applying transformations, perturbations, or adding noise. Data augmentation can increase the diversity and generalizability of the training data, leading to better 몭ne-tuning results. 9. Consider domain adaptation: If the target task or domain signi몭cantly di몭ers from the pre-training data, consider domain adaptation techniques. These methods aim to bridge the gap between the pre-training data and the target data, improving the model’s performance on the speci몭c task. 10. Regularly backup and save checkpoints: Save model checkpoints at regular intervals during 몭ne-tuning to ensure progress is saved and prevent data loss. This practice allows for easy recovery and enables the exploration of di몭erent 몭ne-tuning strategies. Benefits of finetuning pretrained models for generative AI applications Fine-tuning a pre-trained model for generative AI applications promises the following bene몭ts: As pre-trained models are already trained on a large amount of data, it eliminates the need to train a model from scratch, saving time and resources. Fine-tuning facilitates customization of the pre-trained model to industry- speci몭c use cases, which improves performance and accuracy. It is especially useful for niche applications that require domain-speci몭c data or

- 13. specialized knowledge. As pre-trained models have already learned the underlying patterns in the data, 몭ne-tuning them can make them easier to identify and interpret the output. What generative AI development services does LeewayHertz offer? LeewayHertz is an expert generative AI development company with over 15 years of experience and a team of 250+ full-stack developers. With expertise in multiple AI models, including GPT-3, Midjourney, DALL-E, and Stable Di몭usion, our AI experts specialize in developing and deploying generative model-based applications. We have profound knowledge of AI technologies such as Machine Learning, Deep Learning, Computer Vision, Natural Language Processing (NLP), Transfer Learning, and other ML subsets. We o몭er the following generative AI development services: Consulting and strategy building Our AI developers assess your business goals, objectives, needs and other aspects to identify issues or shortcomings that can be resolved by integrating generative AI models. We also design a meticulous blueprint of how generative AI can be implemented in your business and o몭er ongoing improvement suggestions once the solution is deployed. Fine-tuning pre-trained models Our developers are experts in 몭ne-tuning models to adapt them for your business-speci몭c use case. We ful몭ll all the necessary steps required to 몭ne- tune a pre-trained model, be it GPT-3, DALL.E, Codex, Stable Di몭usion or Midjourney. Custom generative AI model-powered solution development From 몭nding the right AI model for your business and training the model to evaluating the performance and integrating it into your custom generative AI model-powered solution for your system, our developers undertake all the steps involved in building a business-speci몭c solution.

- 14. steps involved in building a business-speci몭c solution. Model integration and deployment At LeewayHertz, we prioritize evaluating and understanding our clients’ requirements to e몭ciently integrate generative AI model-powered solutions and applications into their business environment. Prompt engineering services Our team of prompt engineers is skilled in understanding the capabilities and limitations of a wide range of generative models, identifying the type and format of the prompt apt for the model and customizing the prompt to suit the project’s requirement using advanced NLP and NLG techniques. Endnote Fine-tuning pre-trained models is a reliable technique for creating high- performing generative AI applications. It enables developers to create custom models for business-speci몭c use cases based on the knowledge encoded in pre-existing models. Using this approach saves time and resources and ensures that the models 몭ne-tuned are accurate and robust. However, it is imperative to remember that 몭ne-tuning is not a one-size-몭ts- all solution and must be approached with care and consideration. But the right approach to 몭ne-tuning pre-trained models can unlock generative AI’s full potential for your business. Looking for generative AI developers Look no further than LeewayHertz. Our team of experienced developers and AI experts can help you 몭ne-tune pre-trained models to meet your speci몭c needs and create innovative applications. Author’s Bio

- 15. Akash Takyar CEO LeewayHertz Akash Takyar is the founder and CEO at LeewayHertz. The experience of building over 100+ platforms for startups and enterprises allows Akash to rapidly architect and design solutions that are scalable and beautiful. Akash's ability to build enterprise-grade technology solutions has attracted over 30 Fortune 500 companies, including Siemens, 3M, P&G and Hershey’s. Akash is an early adopter of new technology, a passionate technology enthusiast, and an investor in AI and IoT startups. Write to Akash Start a conversation by filling the form Once you let us know your requirement, our technical expert will schedule a call and discuss your idea in detail post sign of an NDA. All information will be kept con몭dential. Name Phone Company Email

- 16. Tell us about your project Send me the signed Non-Disclosure Agreement (NDA ) Start a conversation Insights Redefining logistics: The impact of generative AI in supply chains Incorporating generative AI promises to be a game-changer for supply chain

- 17. management, propelling it into an era of unprecedented innovation. From diagnosis to treatment: Exploring the applications of generative AI in healthcare Generative AI in healthcare refers to the application of generative AI techniques and models in various aspects of the healthcare industry. Read More Medical Imaging Personalised Medicine Population Health Management Drug Discovery Generative AI in Healthcare Read More

- 18. LEEWAYHERTZPORTFOLIO SERVICES GENERATIVE AI About Us Global AI Club Careers Case Studies Work Community TraceRx ESPN Filecoin Lottery of People World Poker Tour Chrysallis.AI Generative AI Arti몭cial Intelligence & ML Web3 Blockchain Software Development Hire Developers Generative AI Development Generative AI Consulting Generative AI Integration LLM Development Prompt Engineering ChatGPT Developers Generative AI in finance and banking: The current state and future implications The 몭nance industry has embraced generative AI and is extensively harnessing its power as an invaluable tool for its operations. Read More Show all Insights

- 19. Privacy & Cookies Policy INDUSTRIES PRODUCTS CONTACT US Get In Touch 415-301-2880 info@leewayhertz.com jobs@leewayhertz.com 388 Market Street Suite 1300 San Francisco, California 94111 Sitemap Consumer Electronics Financial Markets Healthcare Logistics Manufacturing Startup Whitelabel Crypto Wallet Whitelabel Blockchain Explorer Whitelabel Crypto Exchange Whitelabel Enterprise Crypto Wallet Whitelabel DAO ©2023 LeewayHertz. All Rights Reserved.