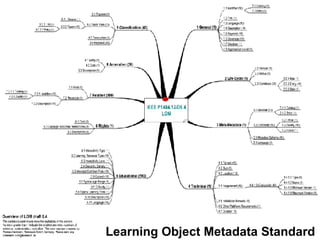

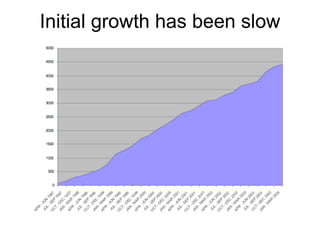

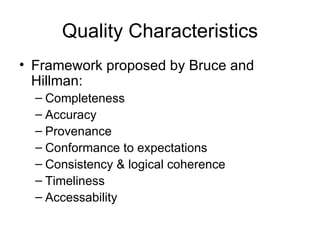

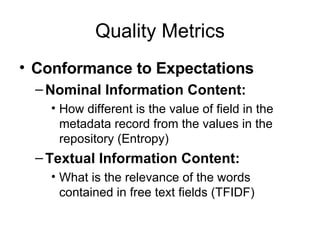

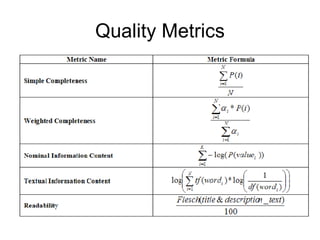

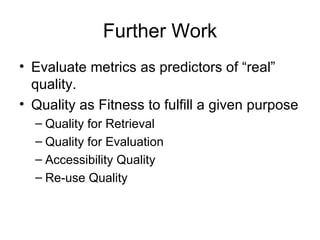

The document argues that the quality of learning object metadata needs to be evaluated automatically, because metadata is now produced and managed at a volume that exceeds the capacity of human reviewers. It proposes metrics for assessing quality characteristics such as completeness, accuracy, and accessibility, and highlights that good metadata quality improves the findability and usability of learning objects. The research also reports a correlation between certain metrics and human-assigned quality scores, and suggests that further evaluation is needed to establish how well these metrics predict actual quality.
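
To make the idea of a quality metric concrete, here is a minimal sketch of a completeness metric together with a helper for comparing metric values against human-assigned scores. It rests on assumptions not stated in the source: completeness is taken to be the fraction of expected fields that are non-empty, the field list `FIELDS` is hypothetical, and Pearson correlation is used as one possible way to measure agreement with human ratings. The document's own metric definitions may differ.

```python
from math import sqrt

# Hypothetical field list; the source does not name a metadata schema.
FIELDS = ["title", "description", "keywords", "language", "author"]


def completeness(record, fields=FIELDS):
    """Return the fraction of expected fields holding a non-empty value.

    Assumption: a field counts as filled when its value, converted to
    text and stripped of whitespace, is not empty.
    """
    filled = sum(1 for f in fields if str(record.get(f) or "").strip())
    return filled / len(fields)


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (spread_x * spread_y)


# Toy record for illustration only: two of the five fields are filled.
record = {"title": "Intro to Algebra", "description": "  ", "keywords": "math"}
print(completeness(record))
```

In use, `completeness` would be computed for every record in a repository, and `pearson` would then compare those values with the scores reviewers gave to the same records. A high correlation on a sample would support using the metric as a proxy for human judgment, though it would not by itself show that the metric predicts actual quality.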