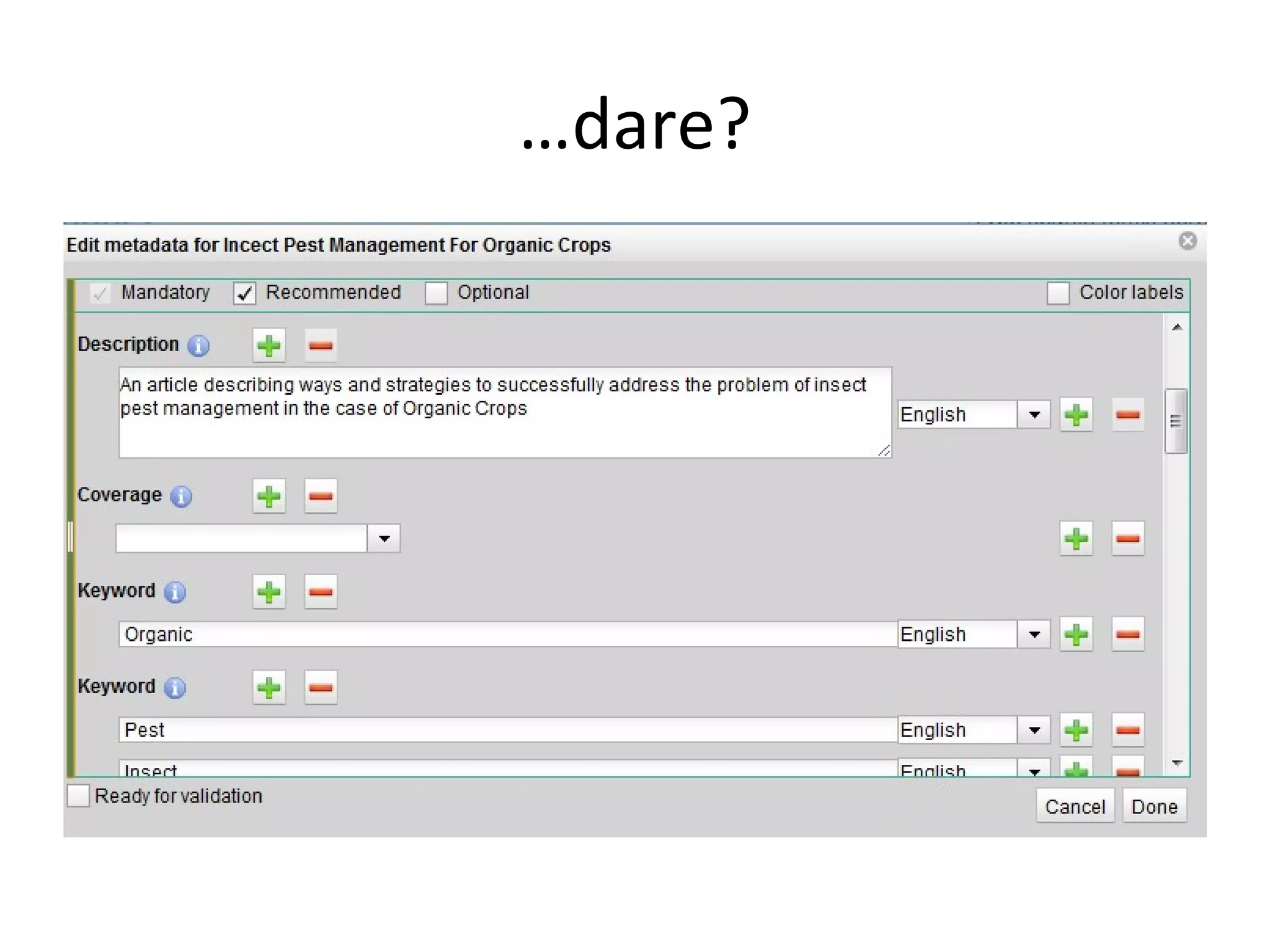

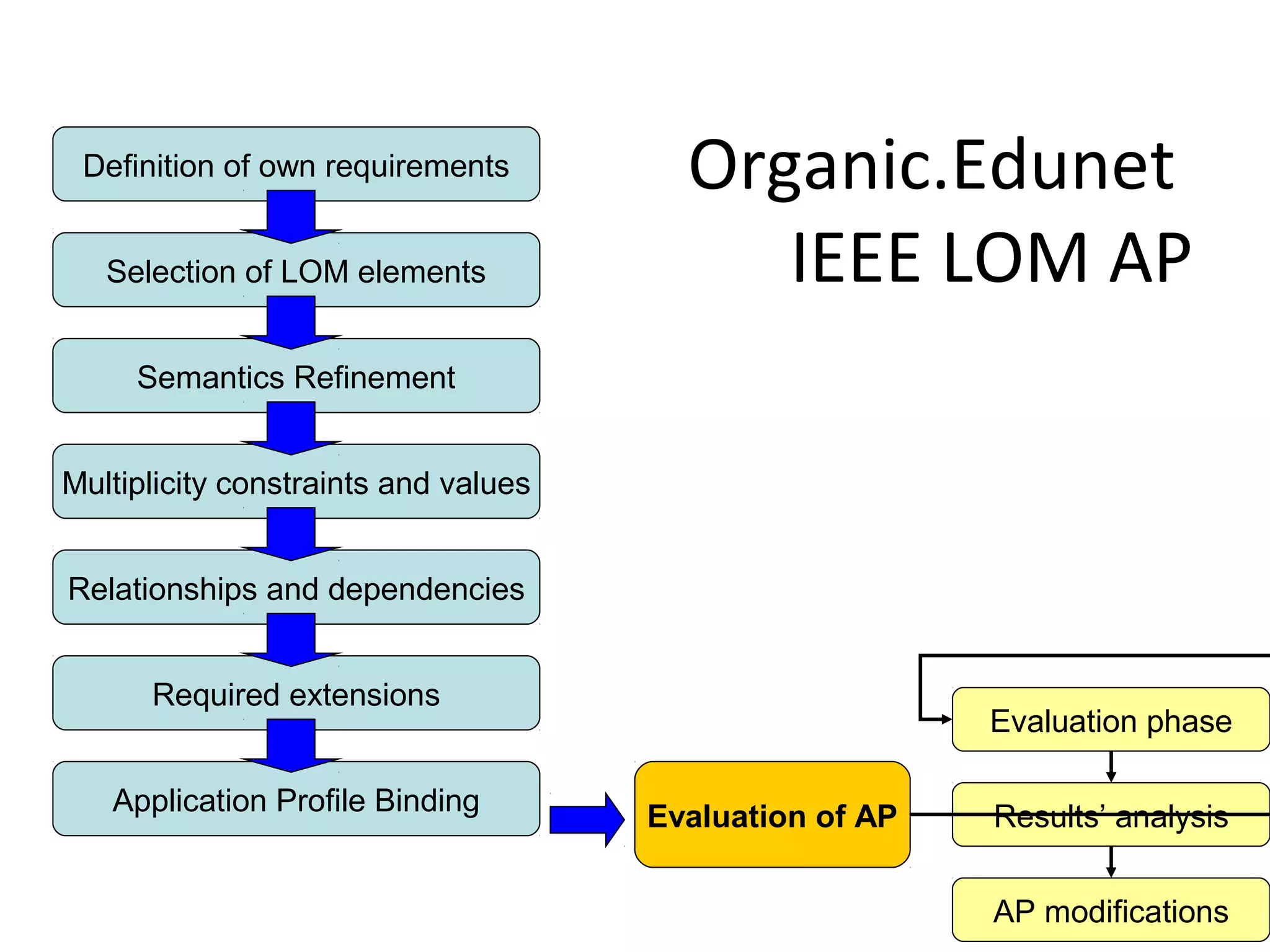

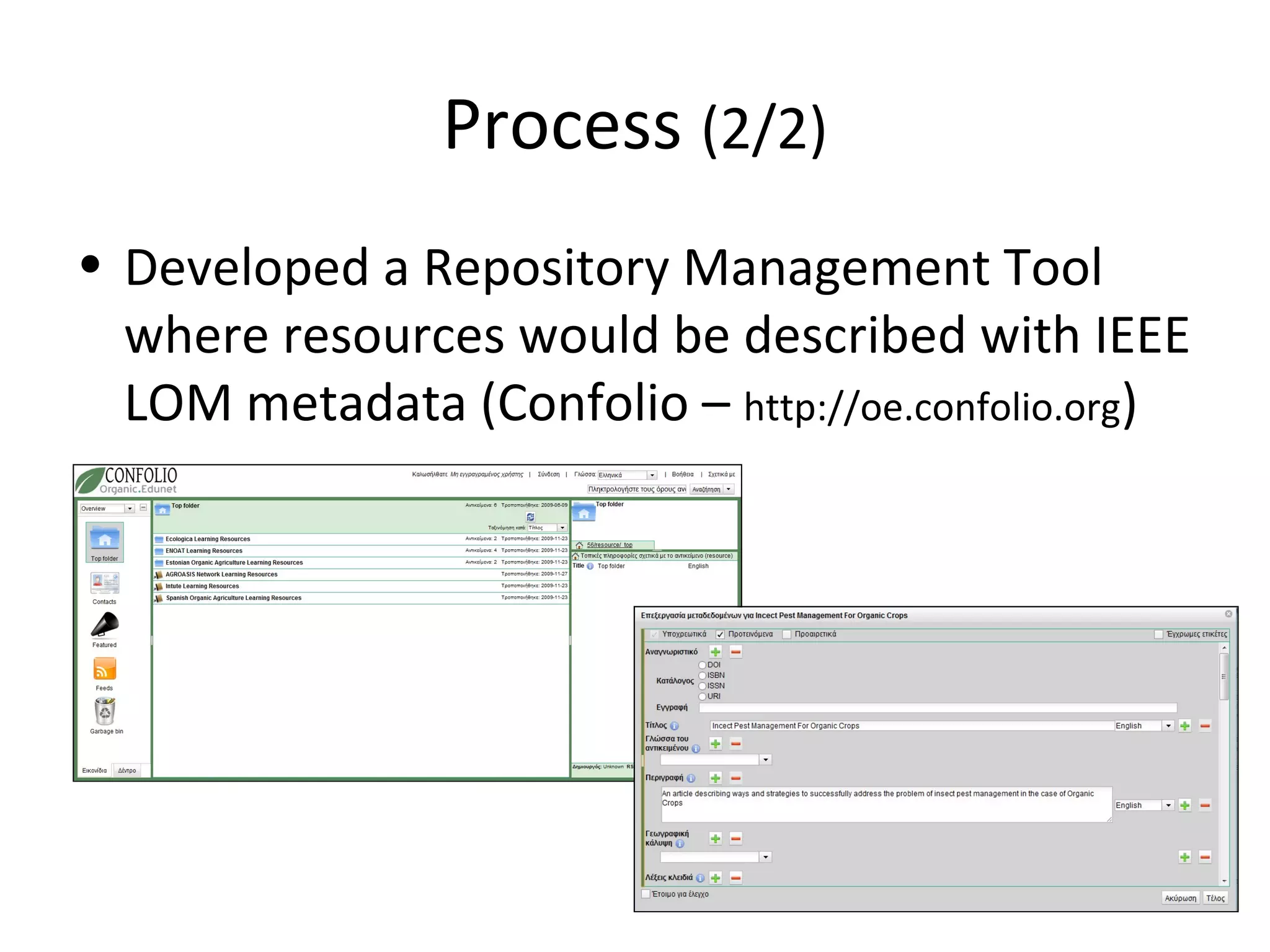

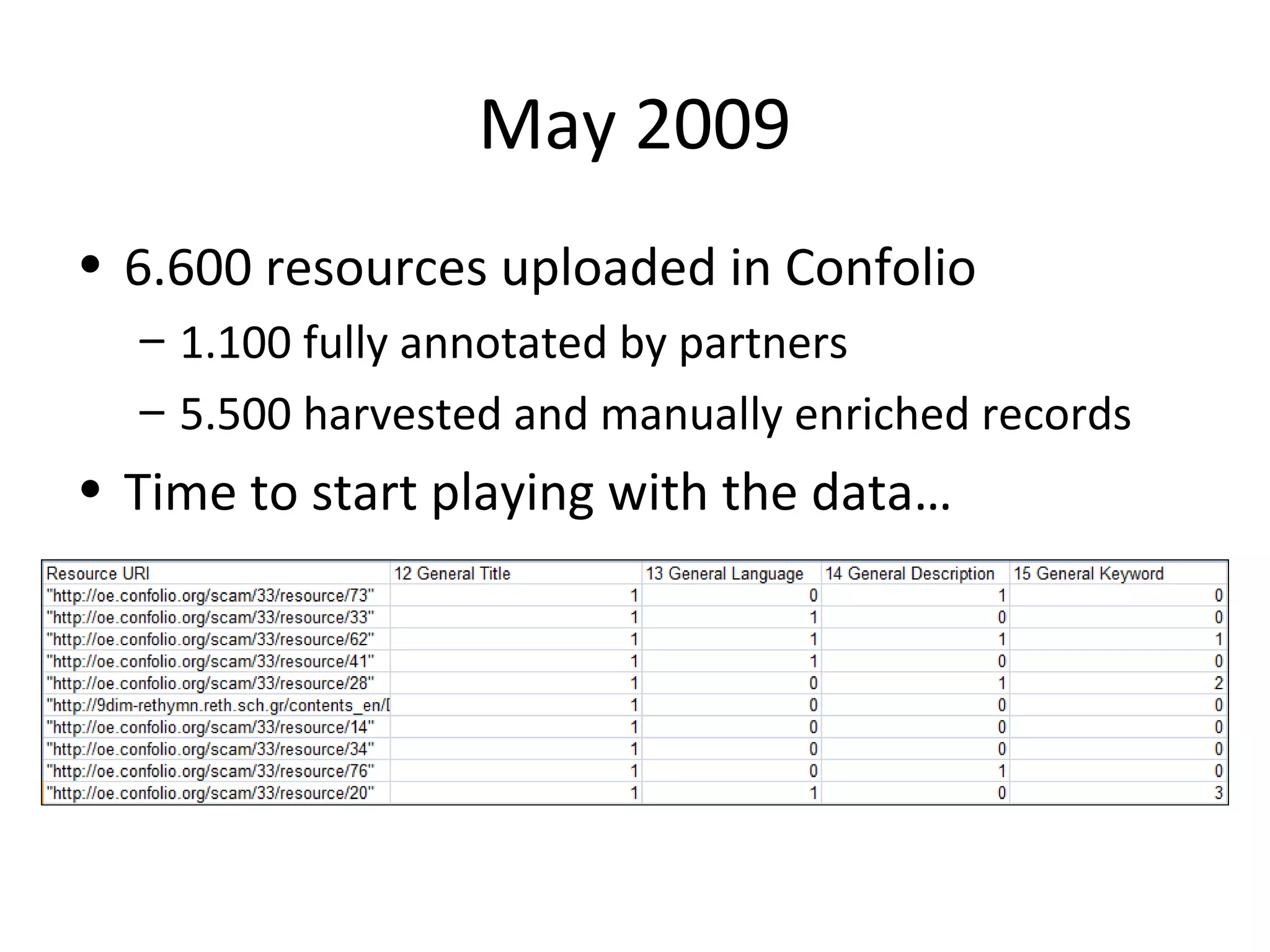

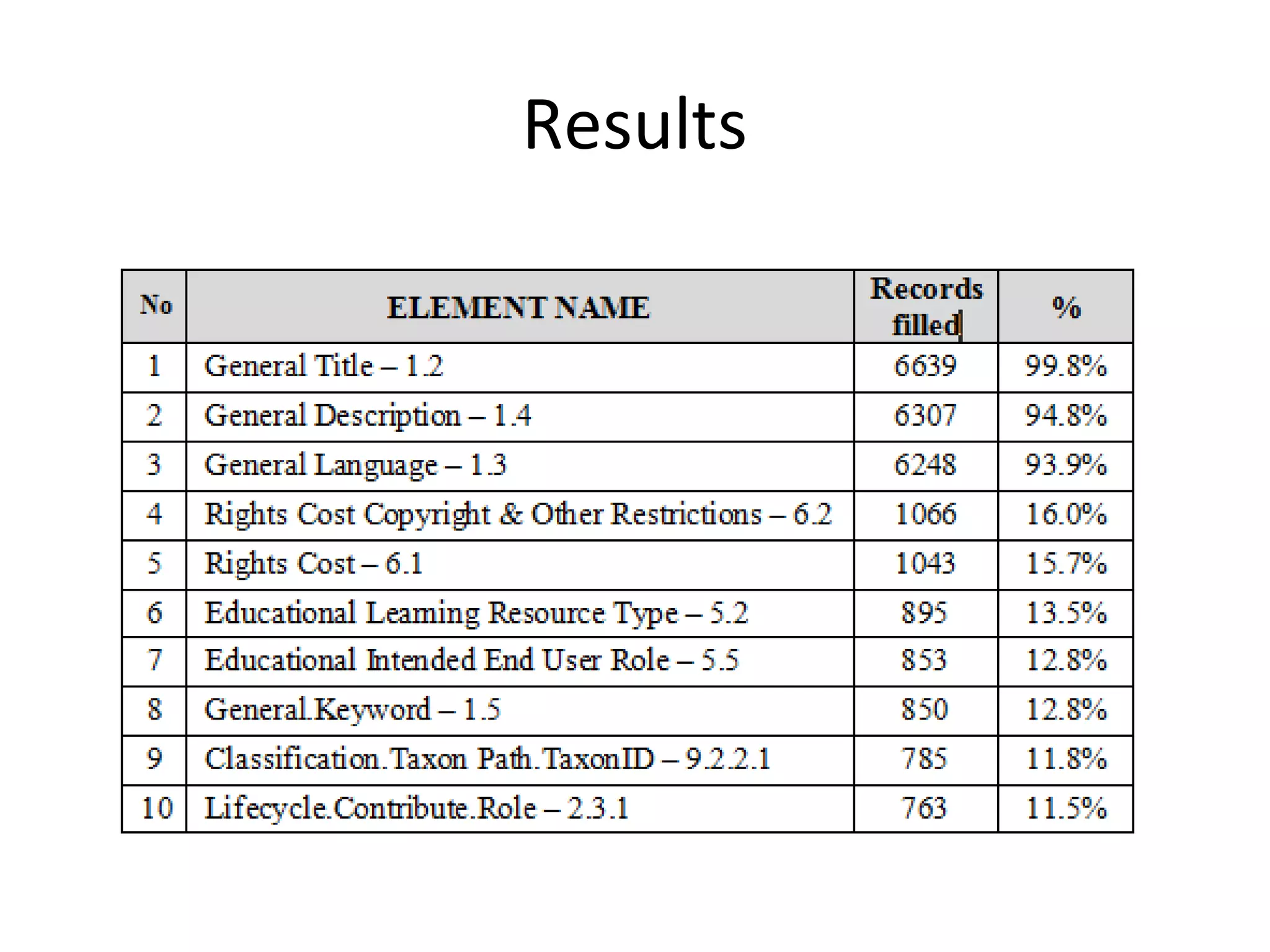

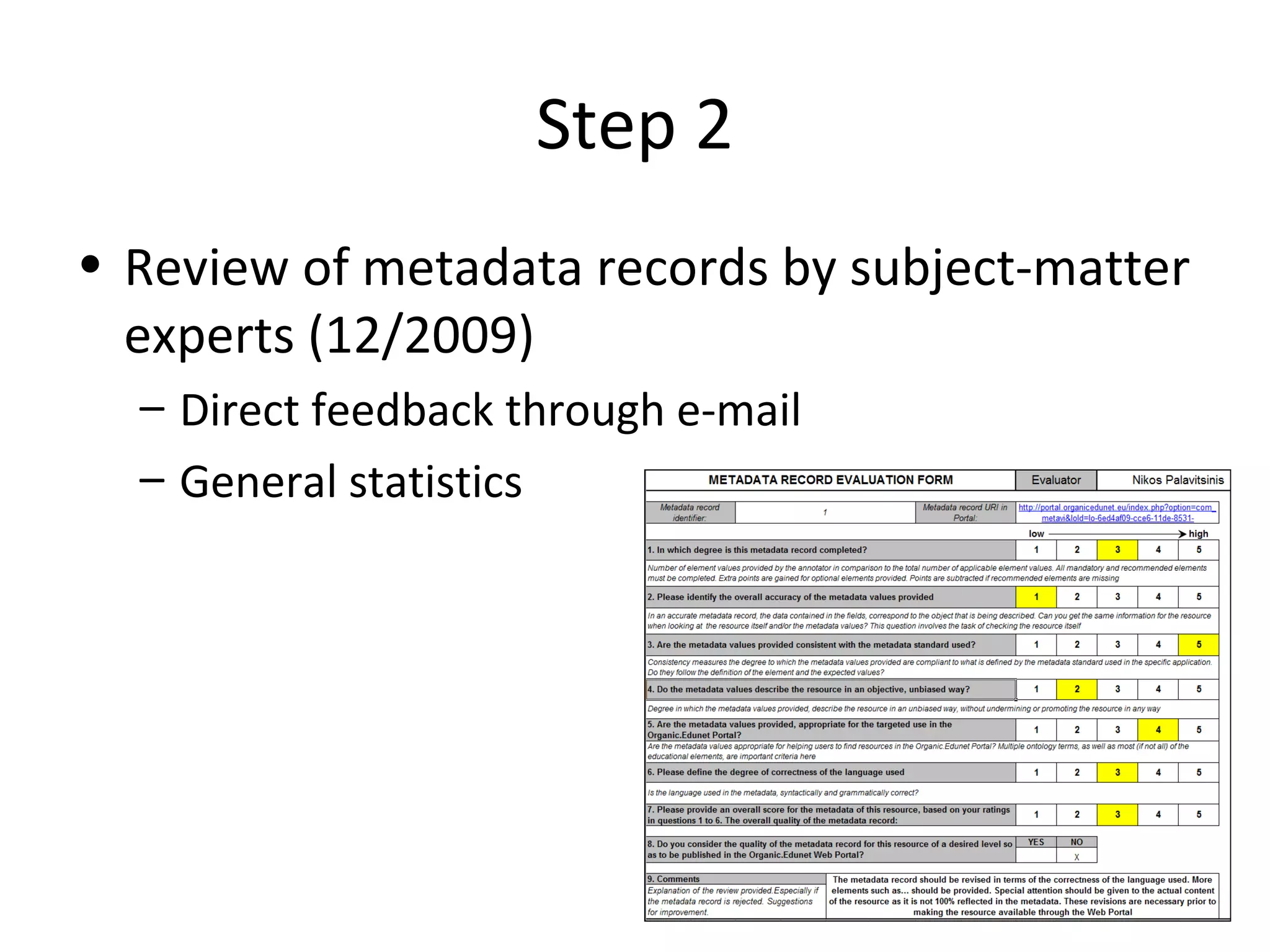

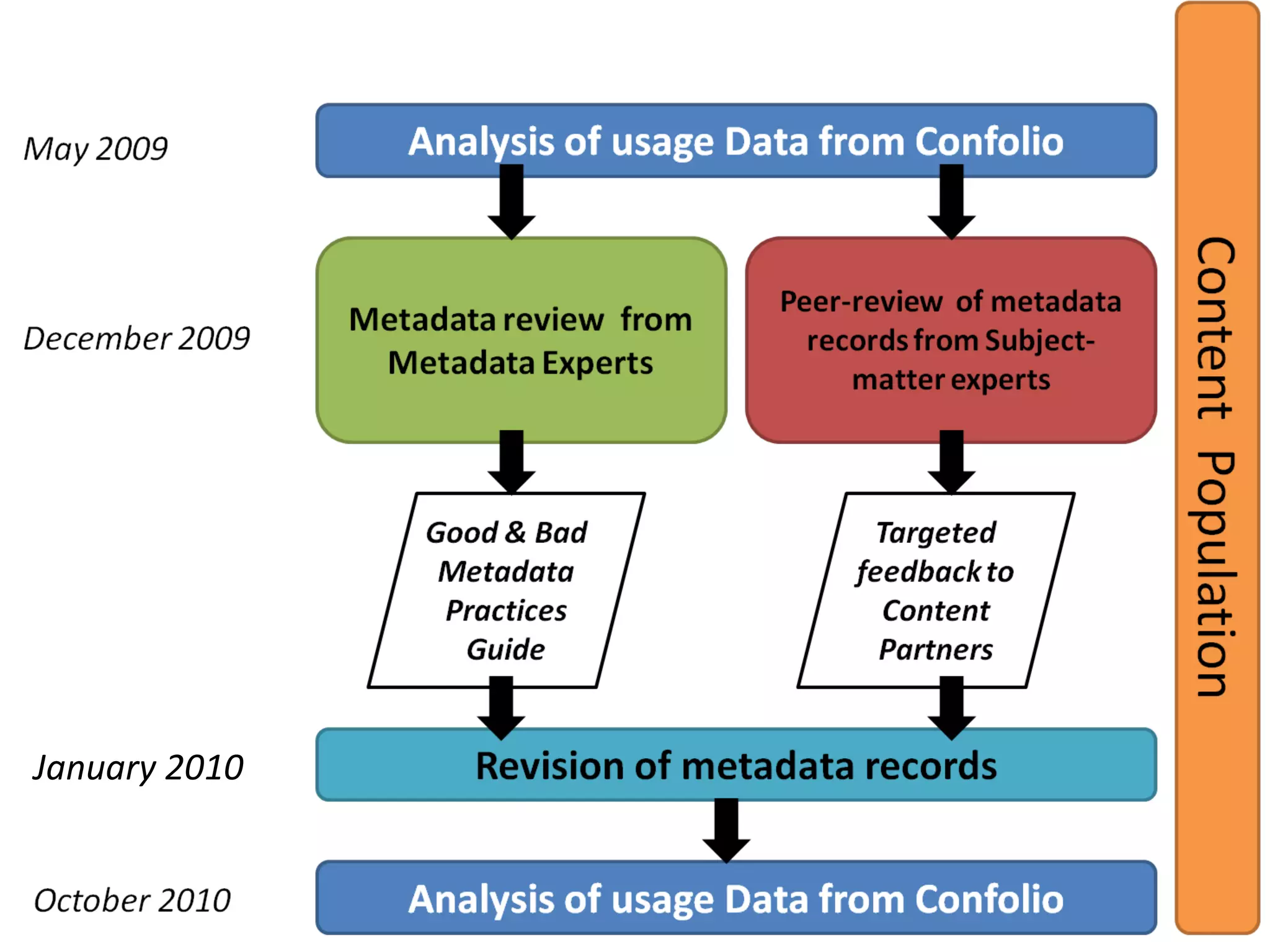

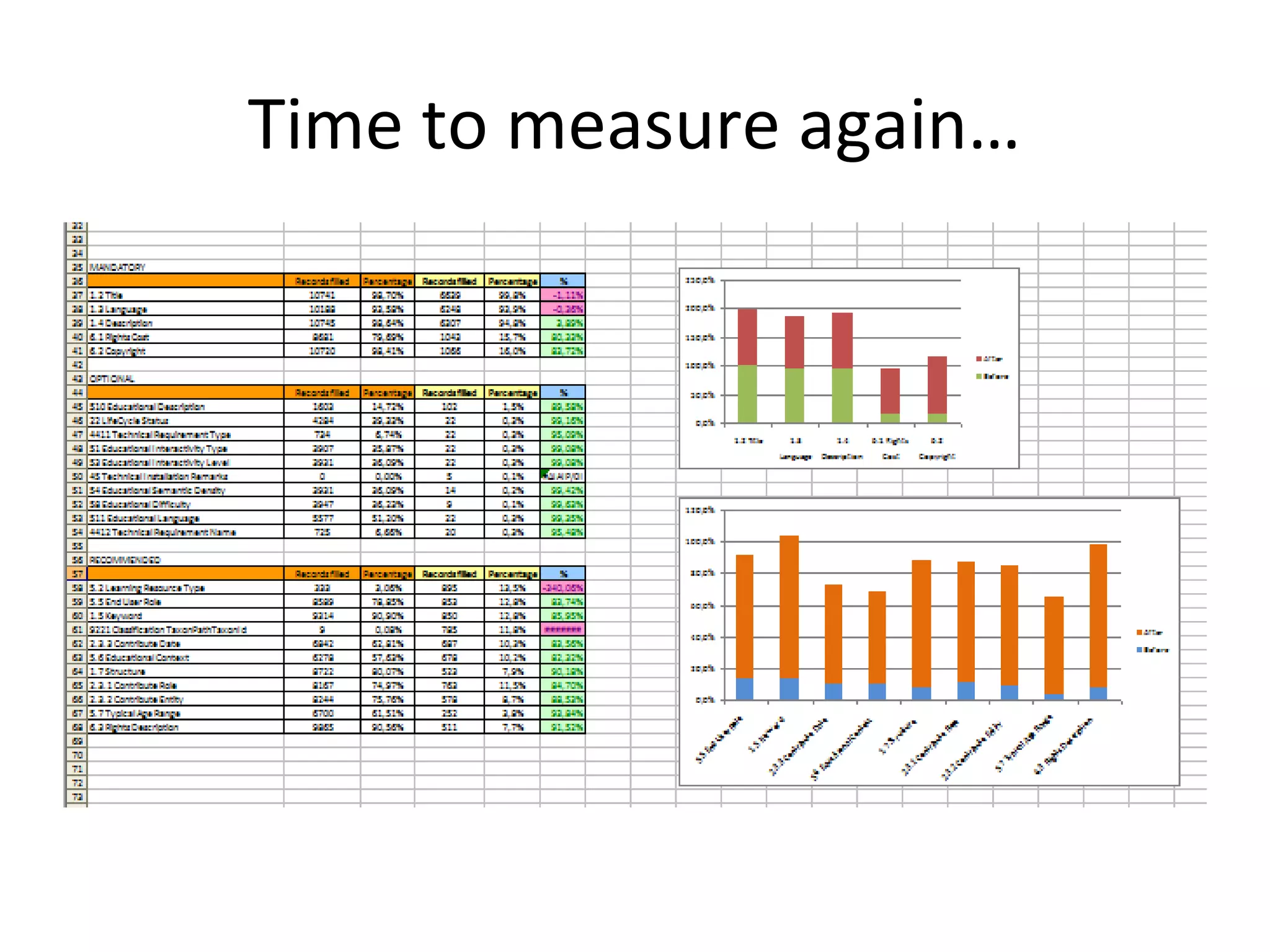

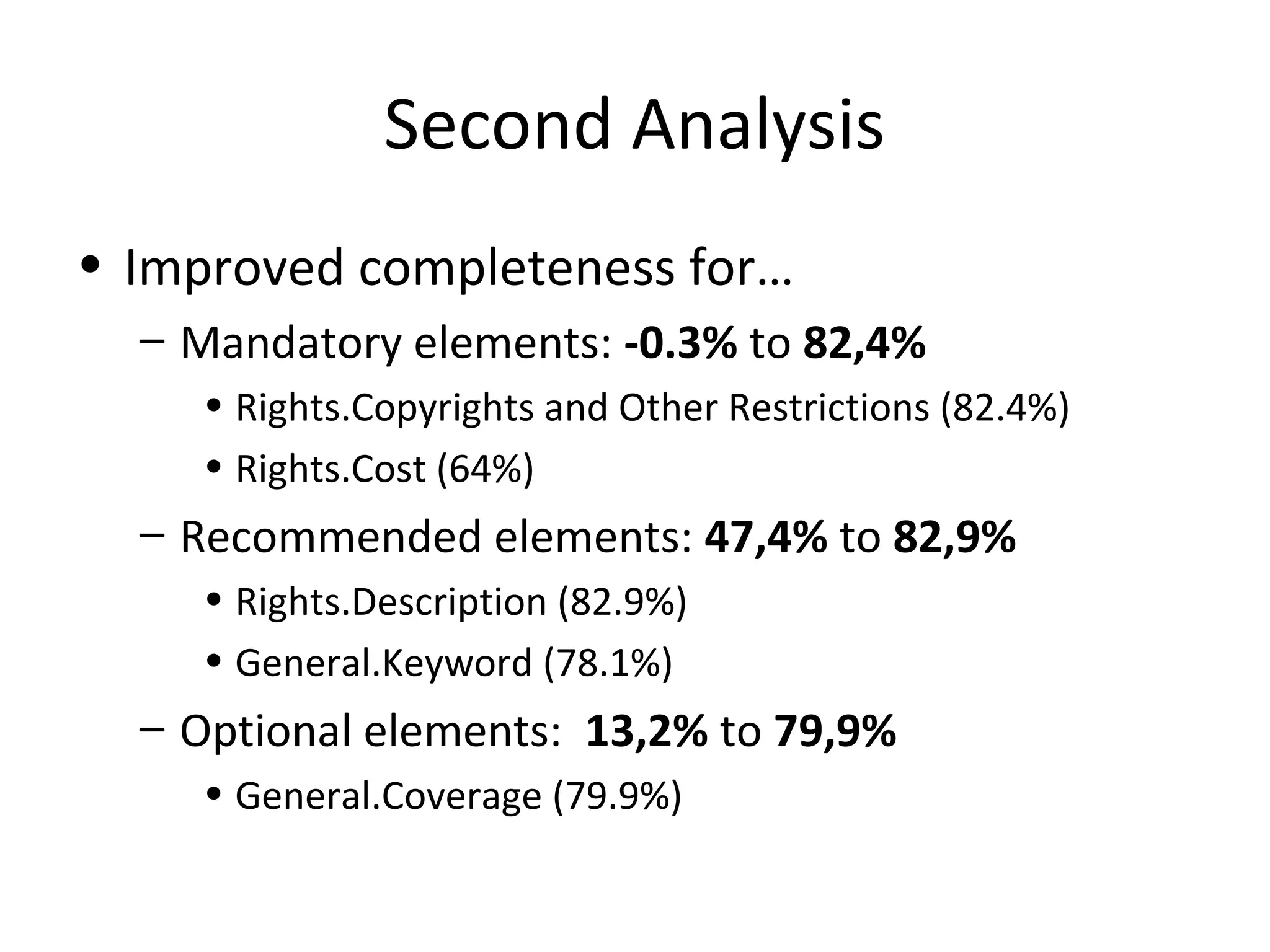

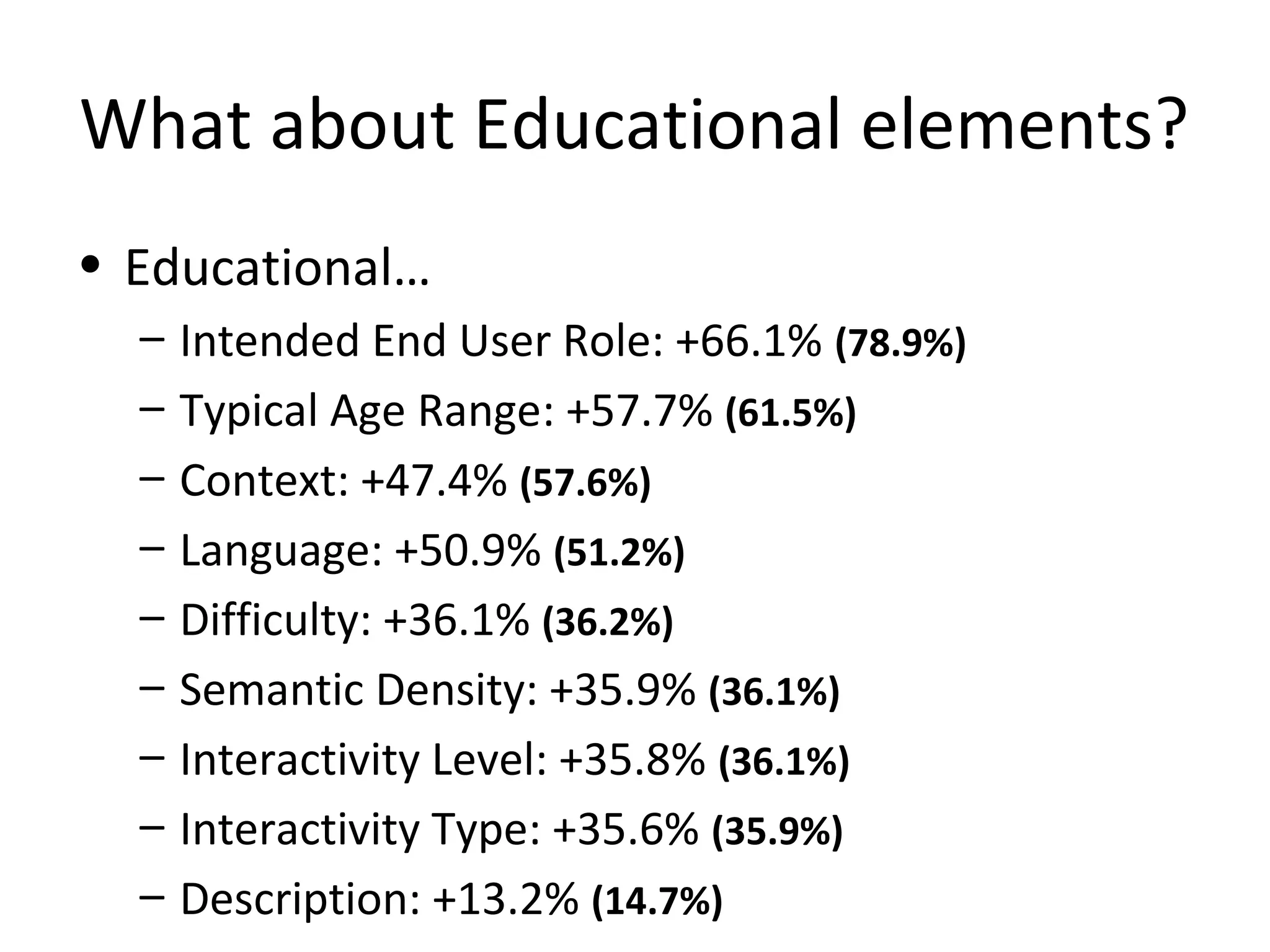

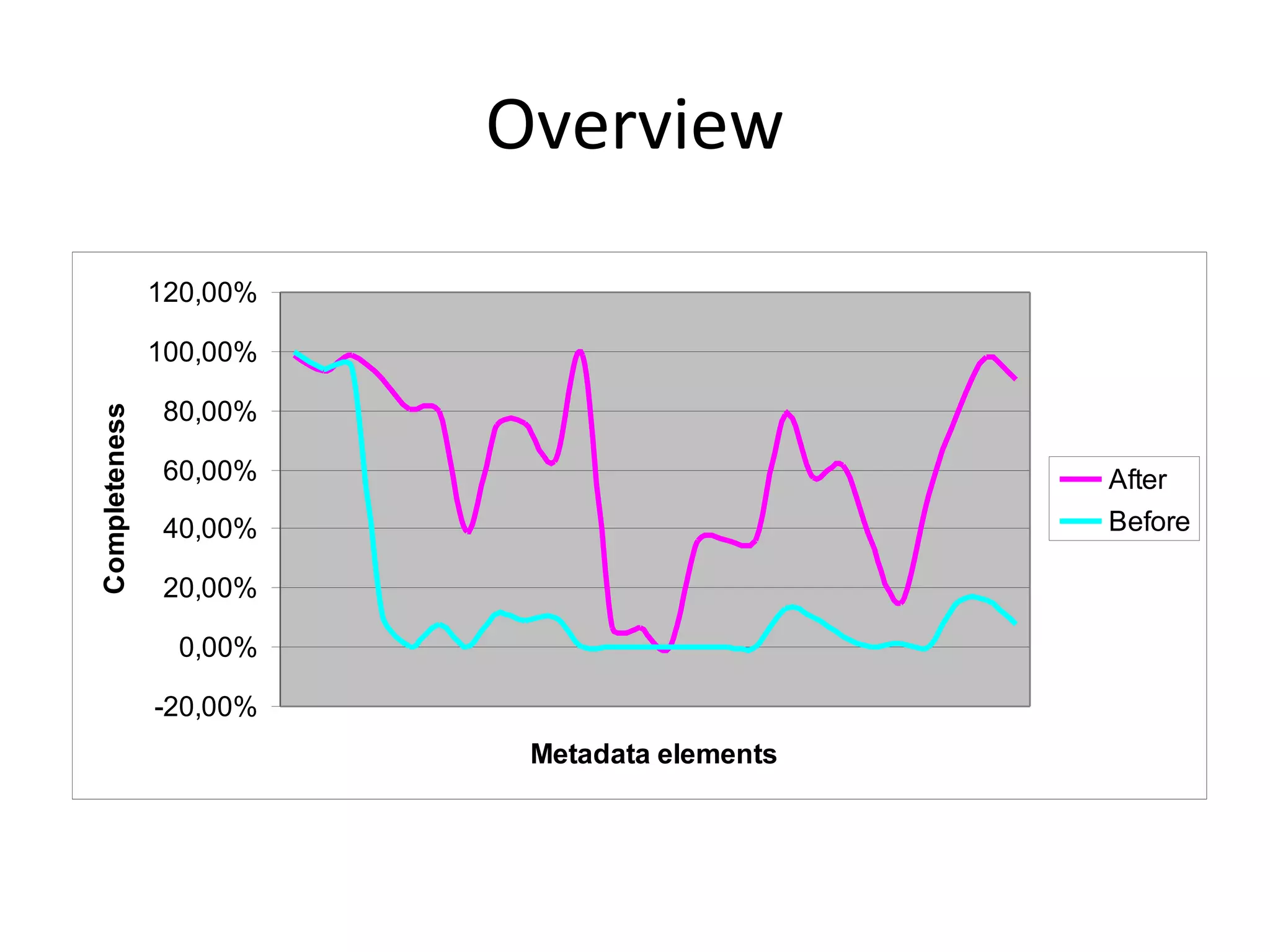

This document discusses metadata quality in learning repositories. It summarizes a study of metadata completeness and quality in the Organic.Edunet learning repository before and after a metadata quality assurance mechanism was put in place. Under this mechanism, experts reviewed metadata records and provided feedback on them. The analysis found that, after implementation, completeness improved for many mandatory, recommended, and optional metadata elements, most notably for the educational elements. Further work is still needed, however, to raise the completeness of optional elements and to assess quality with additional metrics.
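A before/after completeness comparison of this kind can be sketched as follows. This is a minimal illustration, assuming that the completeness of an element means the share of records in which that element is non-empty. The study's exact definition may differ. The element names (`title`, `description`, `learningResourceType`) and the two toy record sets are hypothetical, loosely styled after IEEE LOM element names, and are not data from Organic.Edunet.

```python
# Minimal sketch: per-element metadata completeness before and after a review step.
# ASSUMPTION: completeness = fraction of records in which an element is non-empty.
# Element names and records below are hypothetical illustrations, not study data.


def is_filled(value):
    """Treat None, blank strings, and empty collections as missing values."""
    if value is None:
        return False
    if isinstance(value, str):
        return value.strip() != ""
    if isinstance(value, (list, tuple, set, dict)):
        return len(value) > 0
    return True


def element_completeness(records, elements):
    """Return, for each element, the fraction of records where it is filled (0.0 to 1.0)."""
    if not records:
        return {e: 0.0 for e in elements}
    return {
        e: sum(is_filled(r.get(e)) for r in records) / len(records)
        for e in elements
    }


def completeness_change(before, after, elements):
    """Difference in completeness (after minus before) for each element."""
    b = element_completeness(before, elements)
    a = element_completeness(after, elements)
    return {e: a[e] - b[e] for e in elements}


# Hypothetical toy records: the same two resources before and after expert review.
elements = ["title", "description", "learningResourceType"]
before = [
    {"title": "Composting basics", "description": "", "learningResourceType": None},
    {"title": "Soil health", "description": "Intro", "learningResourceType": None},
]
after = [
    {"title": "Composting basics", "description": "How to compost",
     "learningResourceType": "lecture"},
    {"title": "Soil health", "description": "Intro", "learningResourceType": None},
]

if __name__ == "__main__":
    print(element_completeness(before, elements))
    print(element_completeness(after, elements))
    print(completeness_change(before, after, elements))
```

With these toy records, `description` goes from 0.5 to 1.0 and `learningResourceType` from 0.0 to 0.5, while `title` stays at 1.0. Grouping elements into mandatory, recommended, and optional sets and averaging within each group would give the kind of category-level comparison the summary describes.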