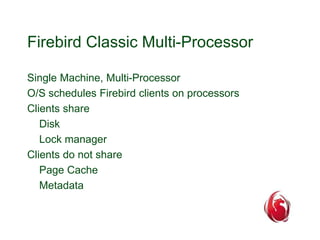

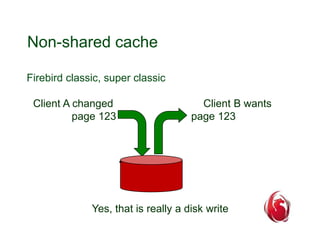

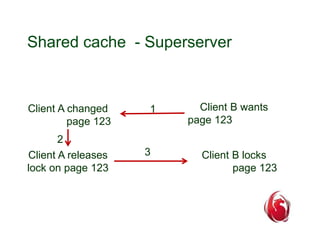

This document discusses the history and future of threading in the Firebird database engine. It describes how early versions of Firebird were not multi-threaded, whereas modern versions use threads to improve performance on multiprocessor systems. Two aspects of Firebird threading are central: a limited number of worker threads, which spreads load across CPU cores without excessive contention, and fine-grained locking of individual data structures, which maximizes parallelism.
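
The two ideas named above, a bounded set of worker threads and fine-grained locking, can be illustrated with a minimal C++ sketch. It is not taken from the Firebird source: `StripedCounters`, `worker_count`, and `run_workload` are hypothetical names, and the counter table merely stands in for any shared structure whose parts can be locked independently.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical shared structure (not a Firebird class). Each bucket carries
// its own mutex, so threads that touch different buckets never block each other.
class StripedCounters {
public:
    static constexpr std::size_t kBuckets = 16;

    void increment(std::size_t key) {
        Bucket& b = buckets_[key % kBuckets];
        std::lock_guard<std::mutex> guard(b.lock);  // locks one bucket only
        ++b.value;
    }

    long total() {
        long sum = 0;
        for (Bucket& b : buckets_) {
            std::lock_guard<std::mutex> guard(b.lock);
            sum += b.value;
        }
        return sum;
    }

private:
    struct Bucket {
        std::mutex lock;
        long value = 0;
    };
    std::array<Bucket, kBuckets> buckets_;
};

// Assumed policy: never run more workers than there are cores, and never
// more than the caller's cap. Always returns at least 1.
std::size_t worker_count(std::size_t max_workers) {
    std::size_t cores = std::thread::hardware_concurrency();  // may report 0
    if (cores == 0) cores = 1;
    return std::max<std::size_t>(1, std::min(cores, max_workers));
}

// Splits `tasks` increments across a bounded set of worker threads and
// returns the combined count once every worker has finished.
long run_workload(std::size_t tasks, std::size_t max_workers) {
    StripedCounters counters;
    const std::size_t workers = worker_count(max_workers);

    std::vector<std::thread> pool;
    pool.reserve(workers);
    for (std::size_t w = 0; w < workers; ++w) {
        pool.emplace_back([&counters, w, workers, tasks] {
            // Worker w handles tasks w, w + workers, w + 2 * workers, ...
            for (std::size_t t = w; t < tasks; t += workers) {
                counters.increment(t);
            }
        });
    }
    for (std::thread& th : pool) th.join();
    return counters.total();
}
```

Calling `run_workload(1000, 4)` performs 1000 increments on at most four threads and returns the combined total. A single mutex around the whole table would give the same result but would serialize every update, which is the contention that per-structure locking is meant to avoid.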