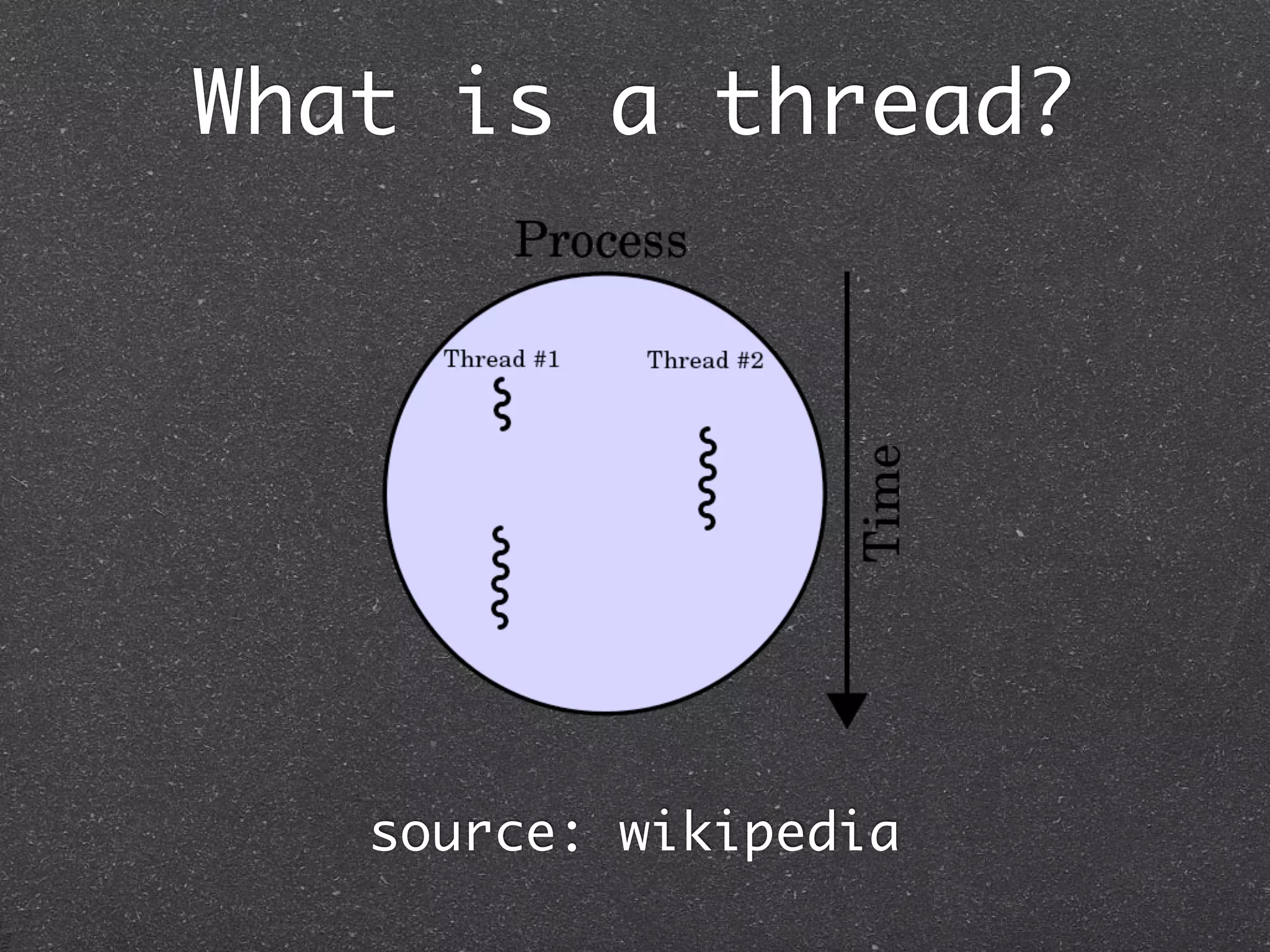

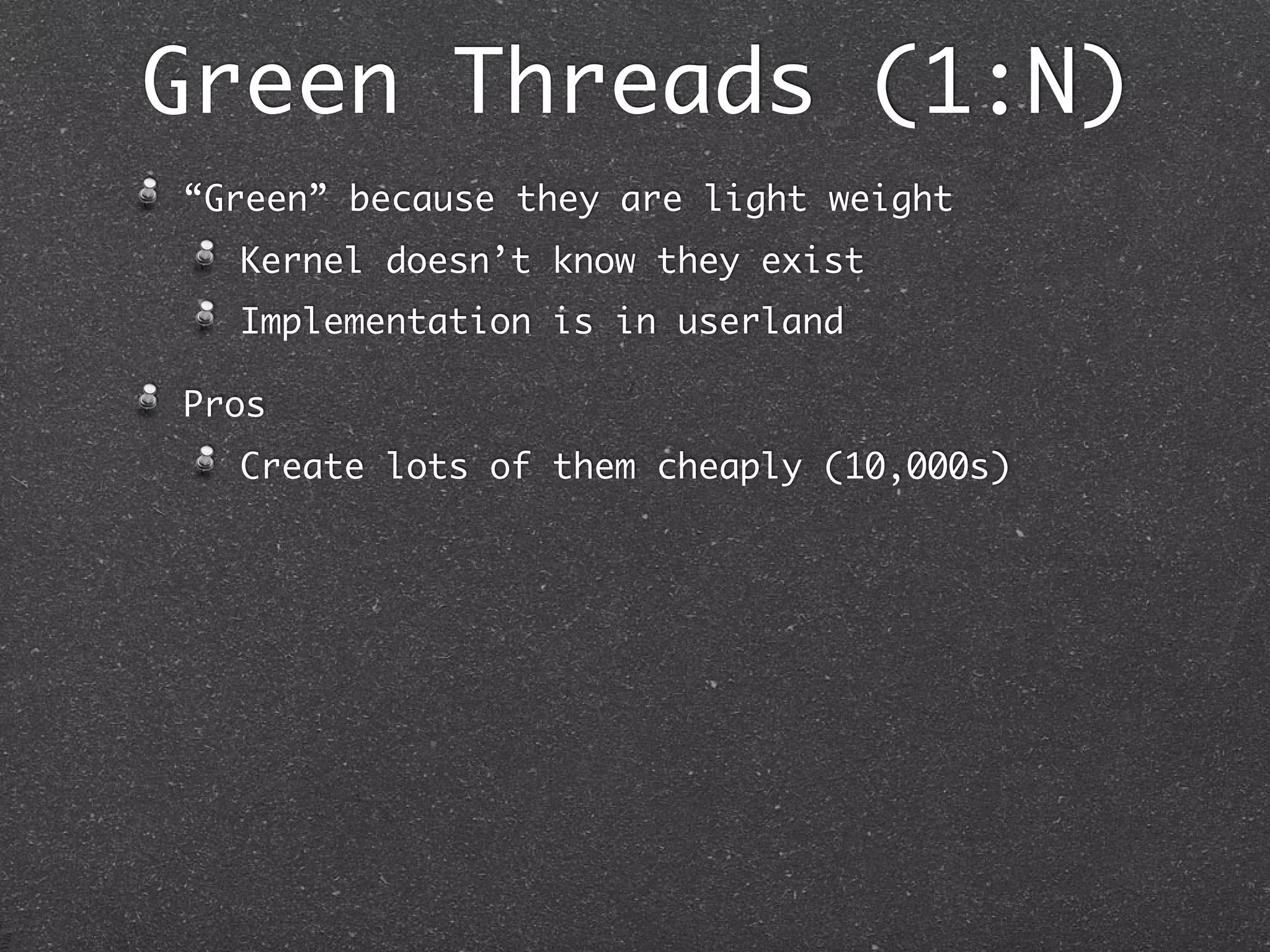

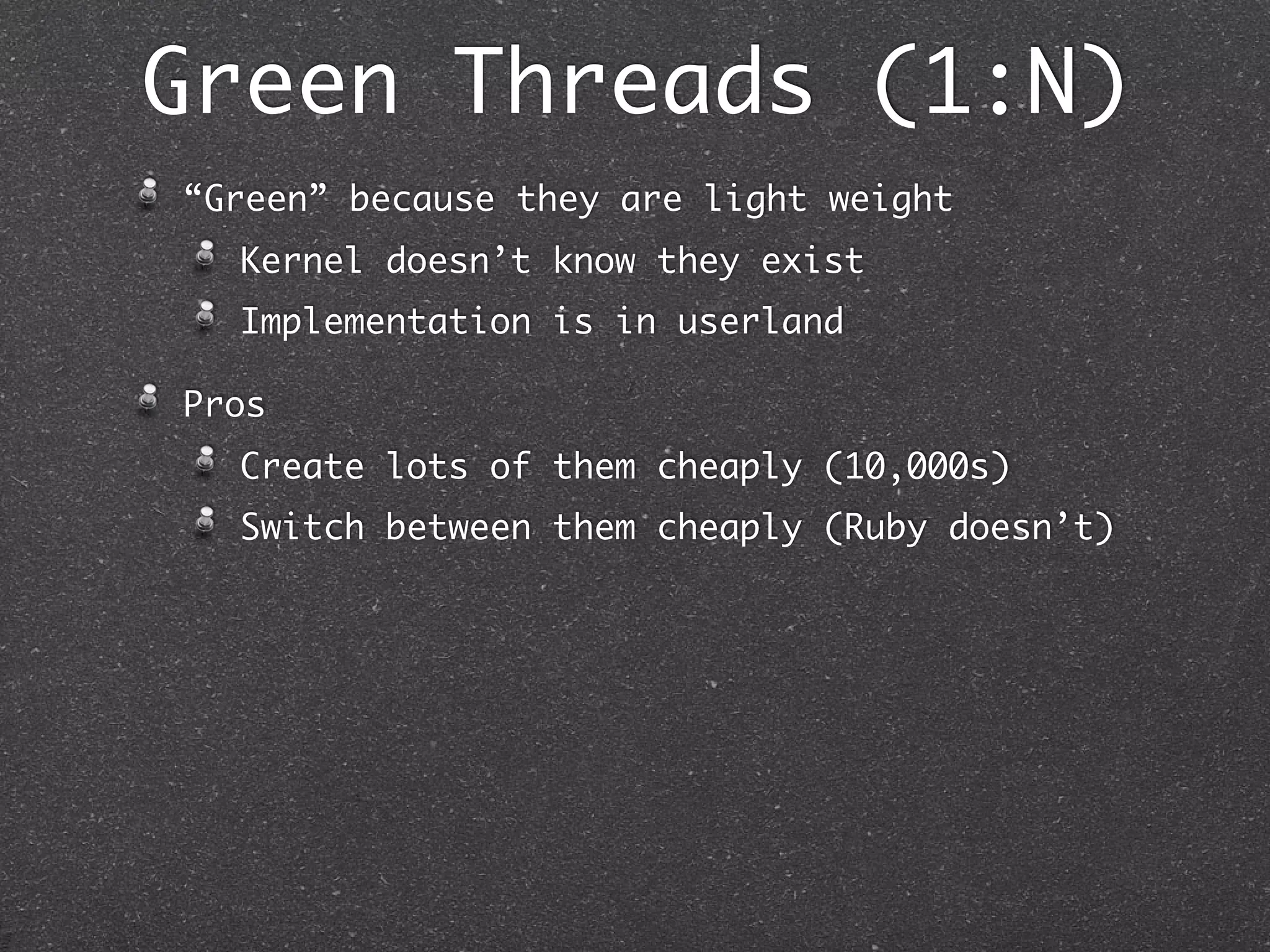

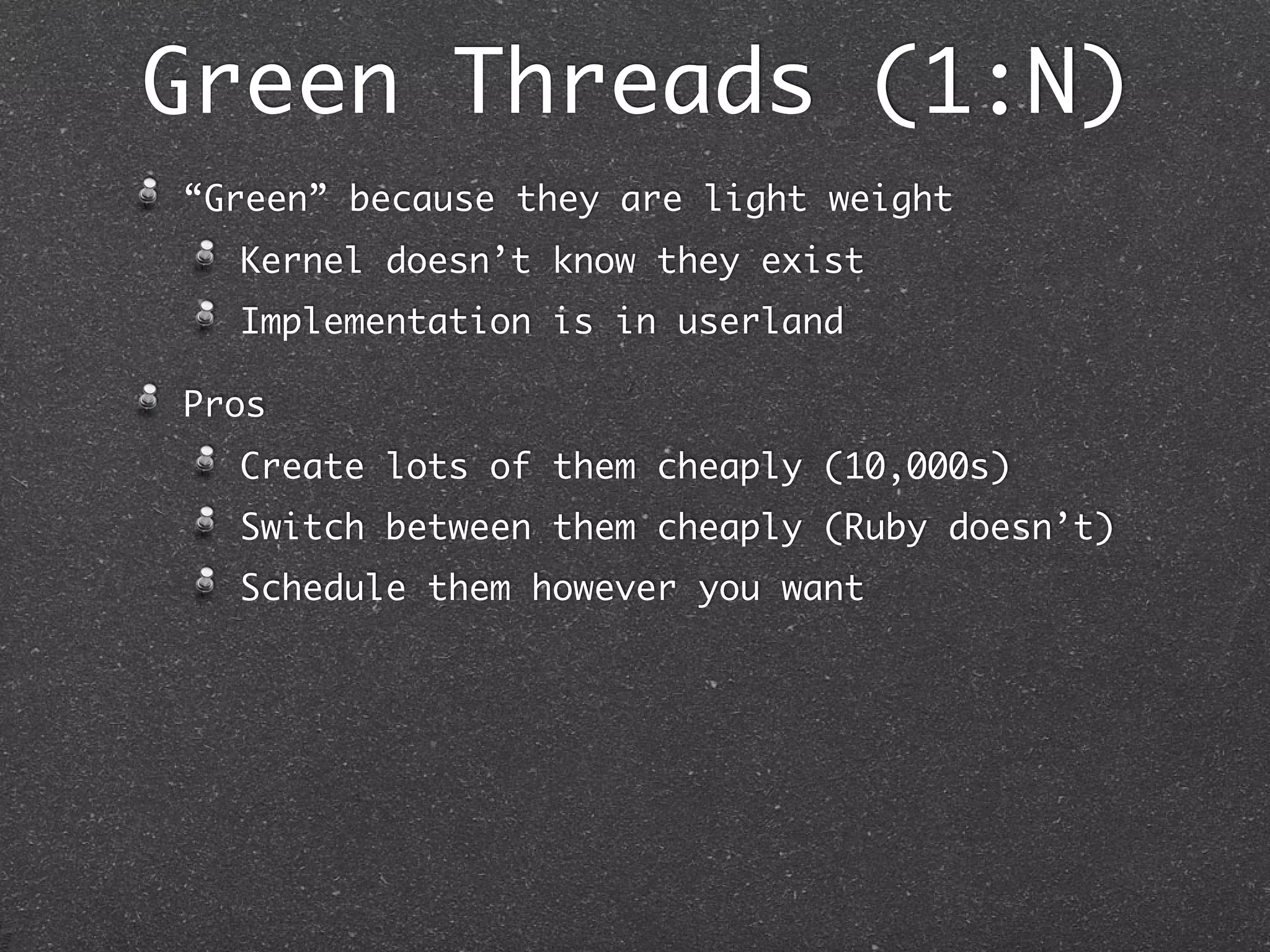

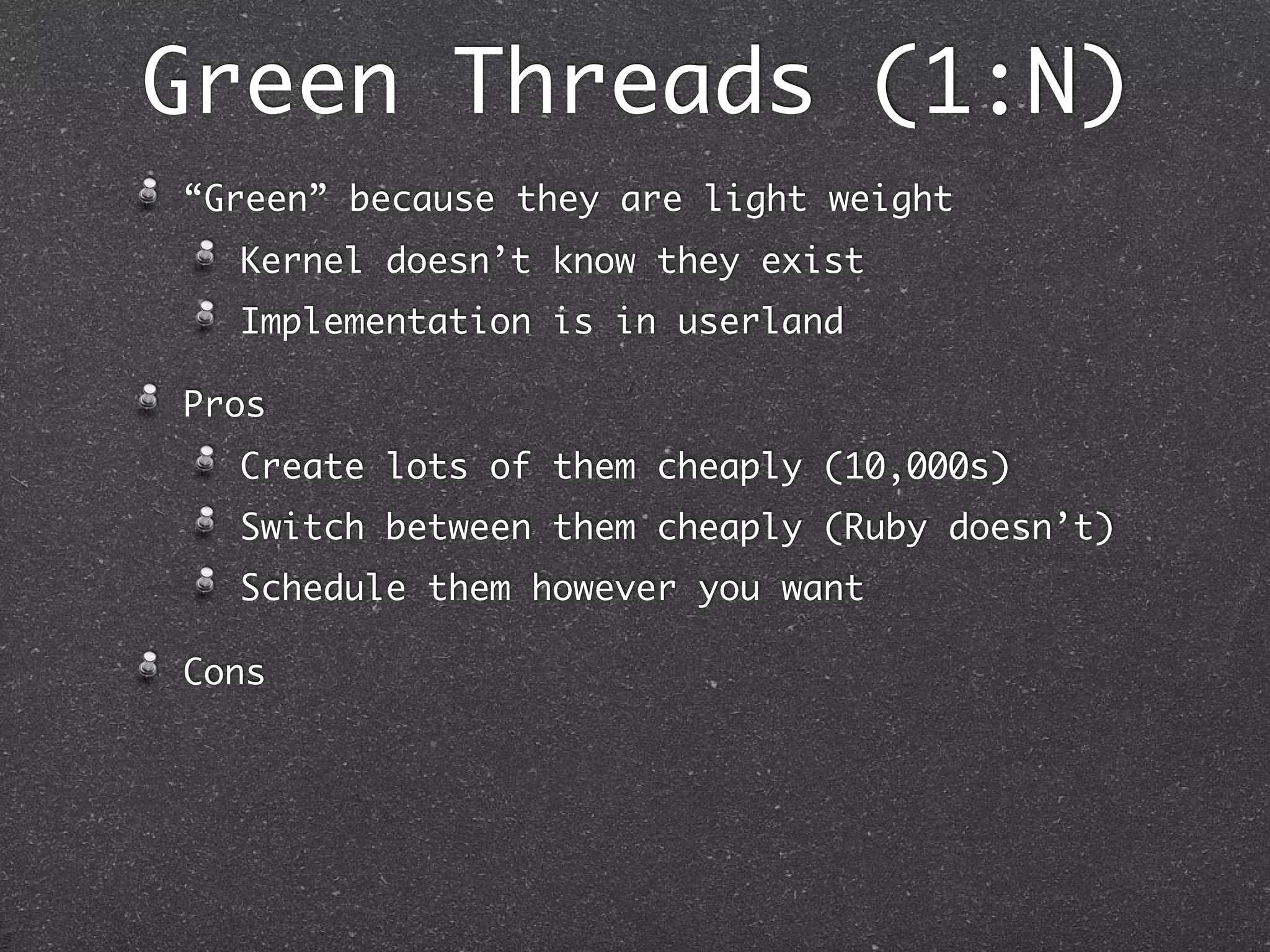

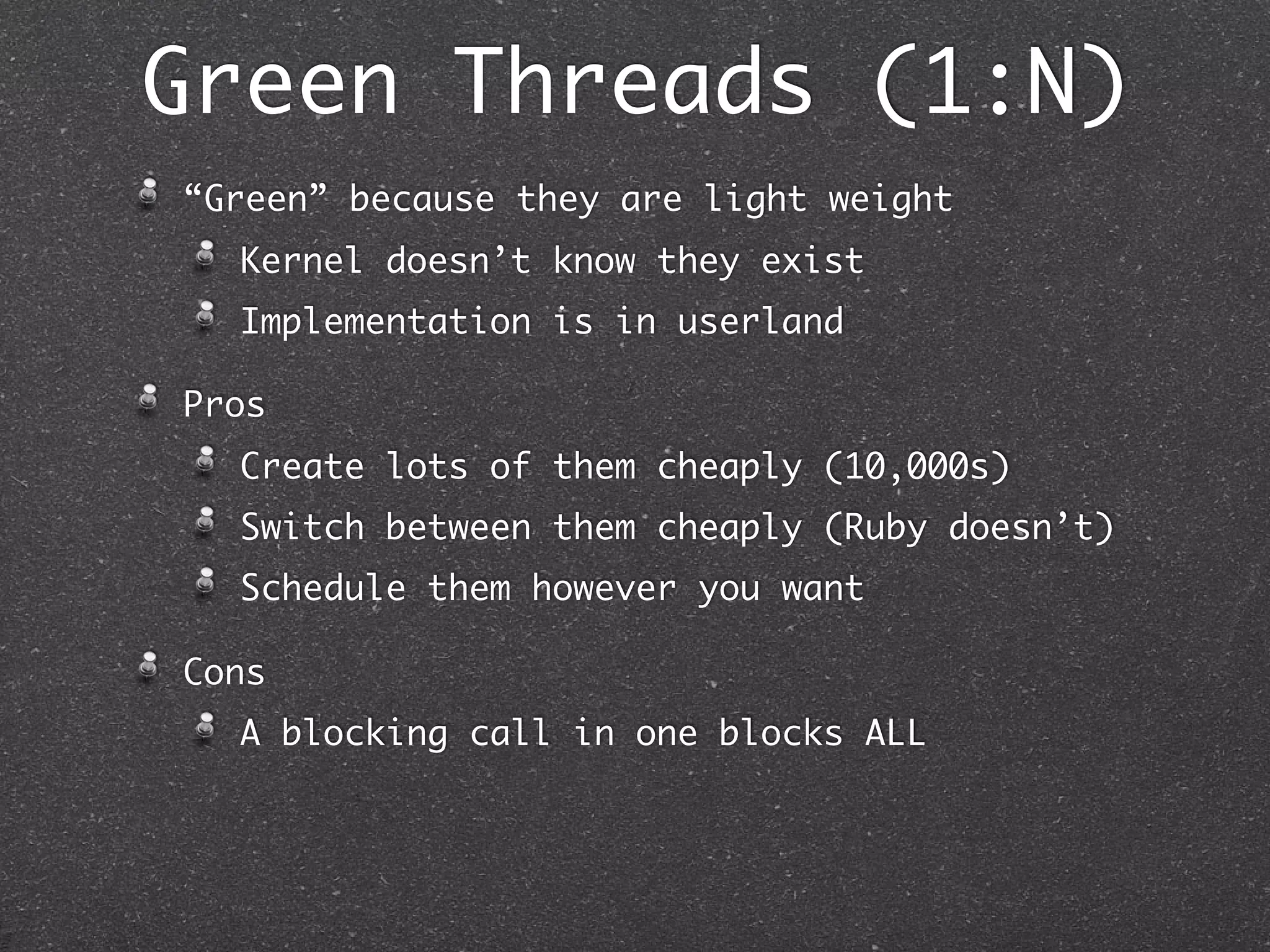

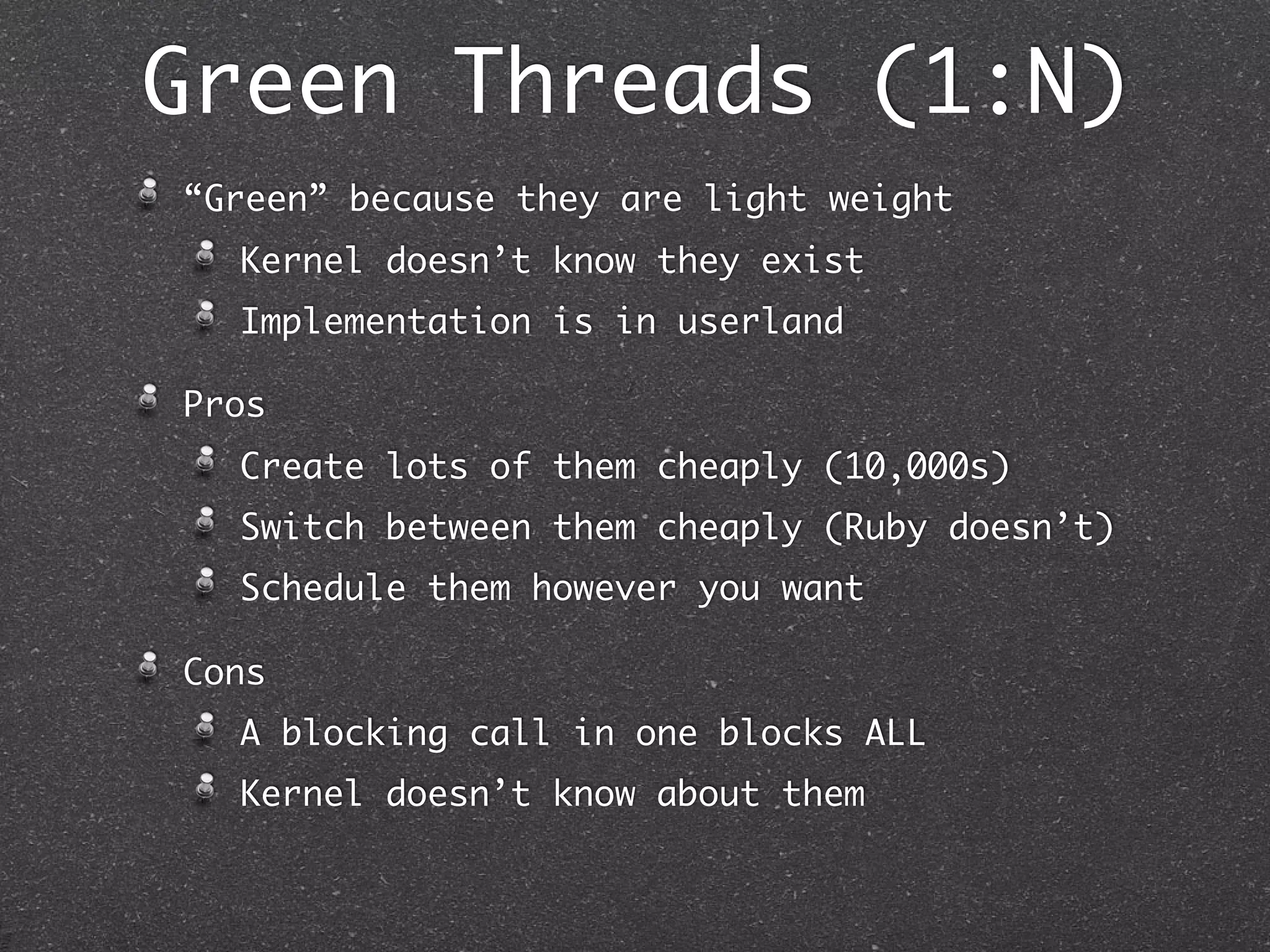

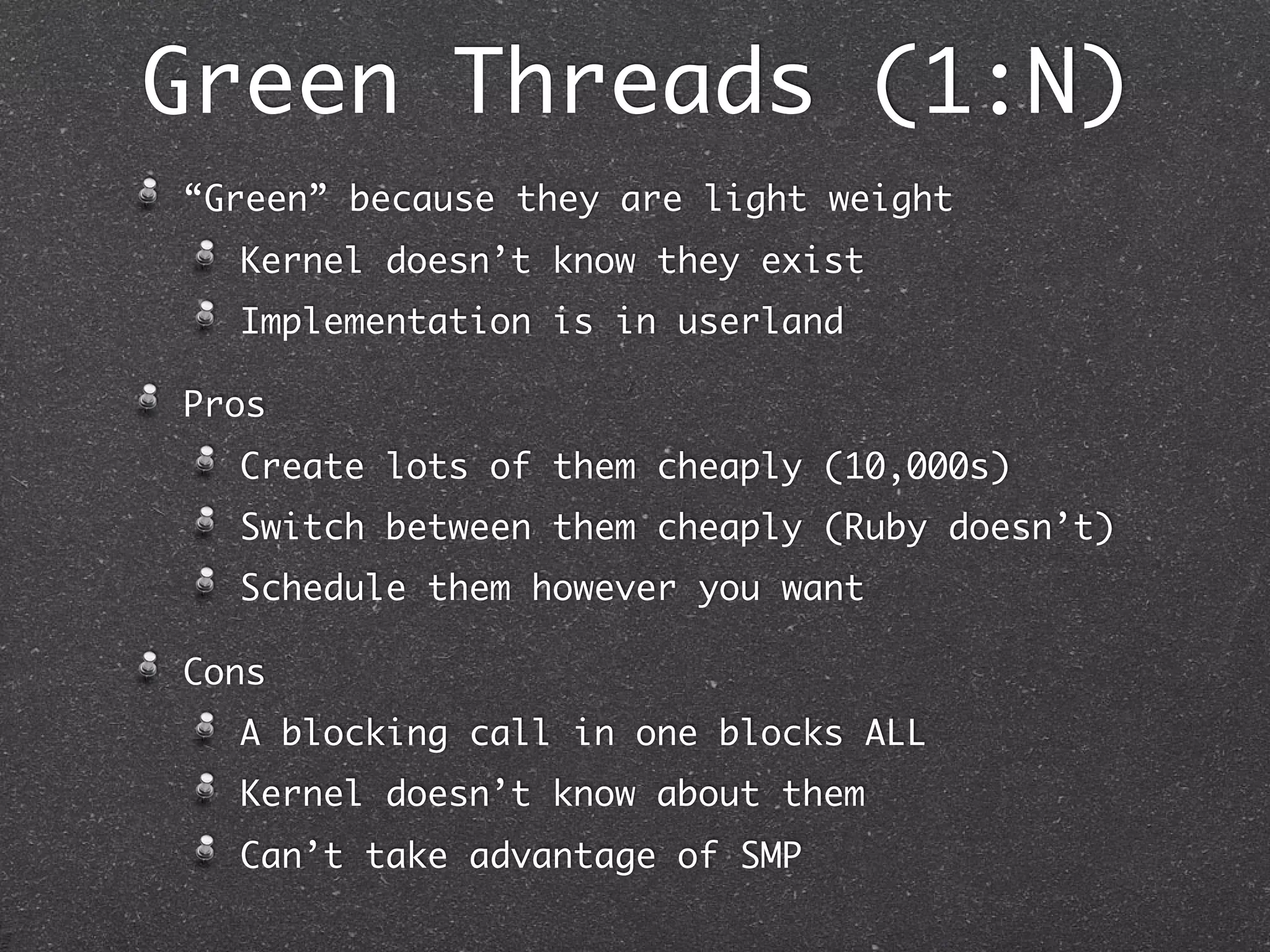

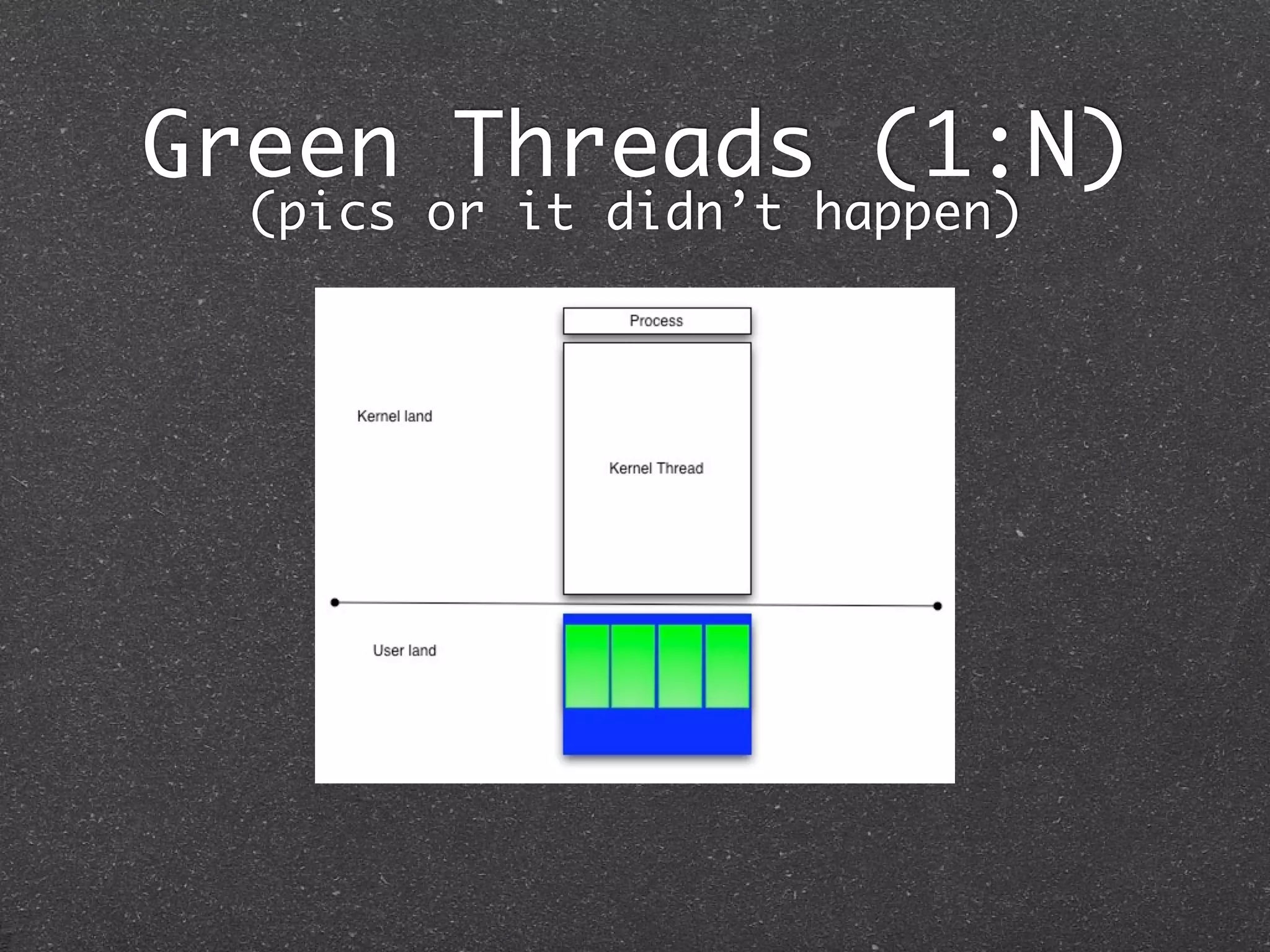

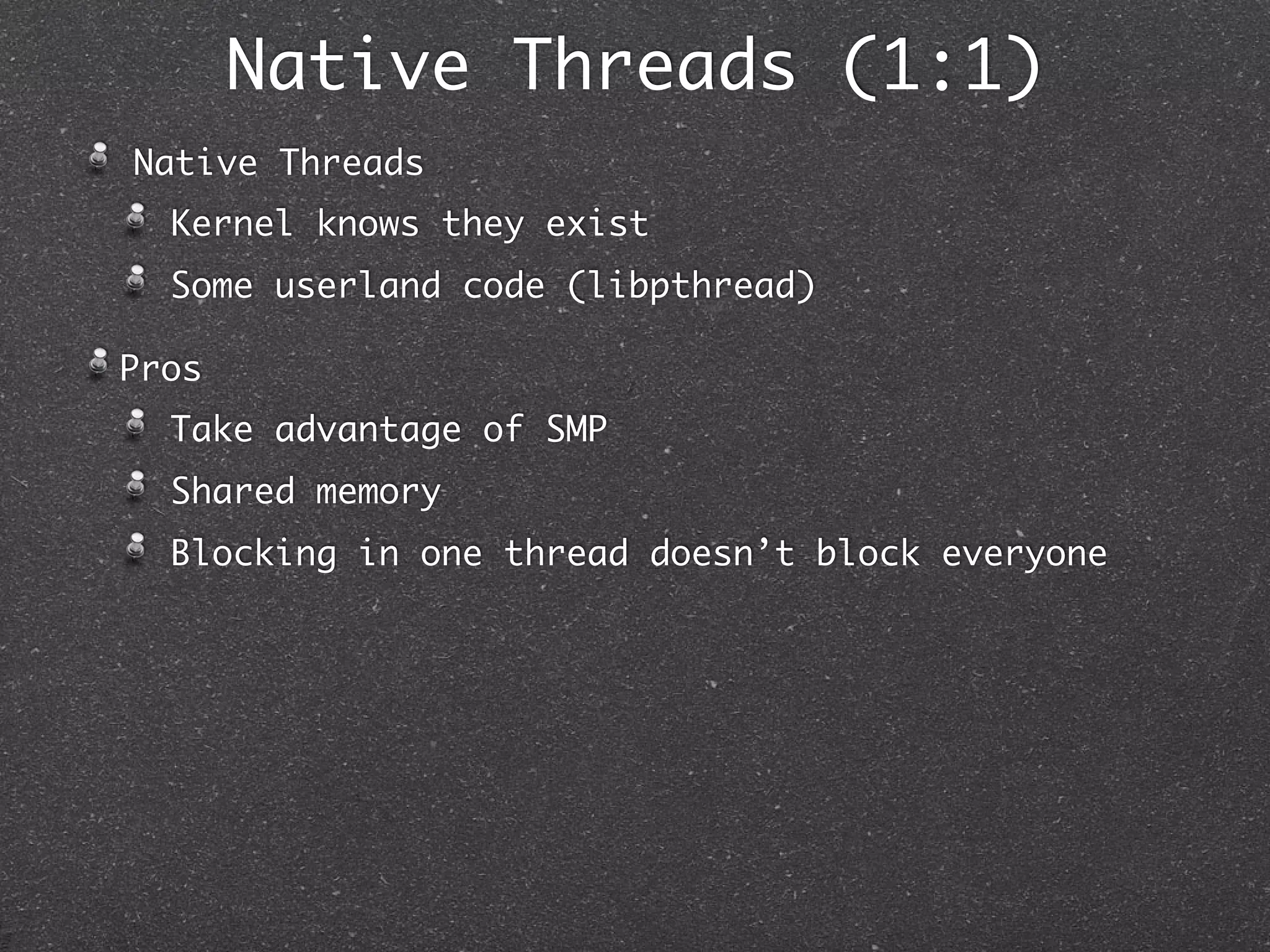

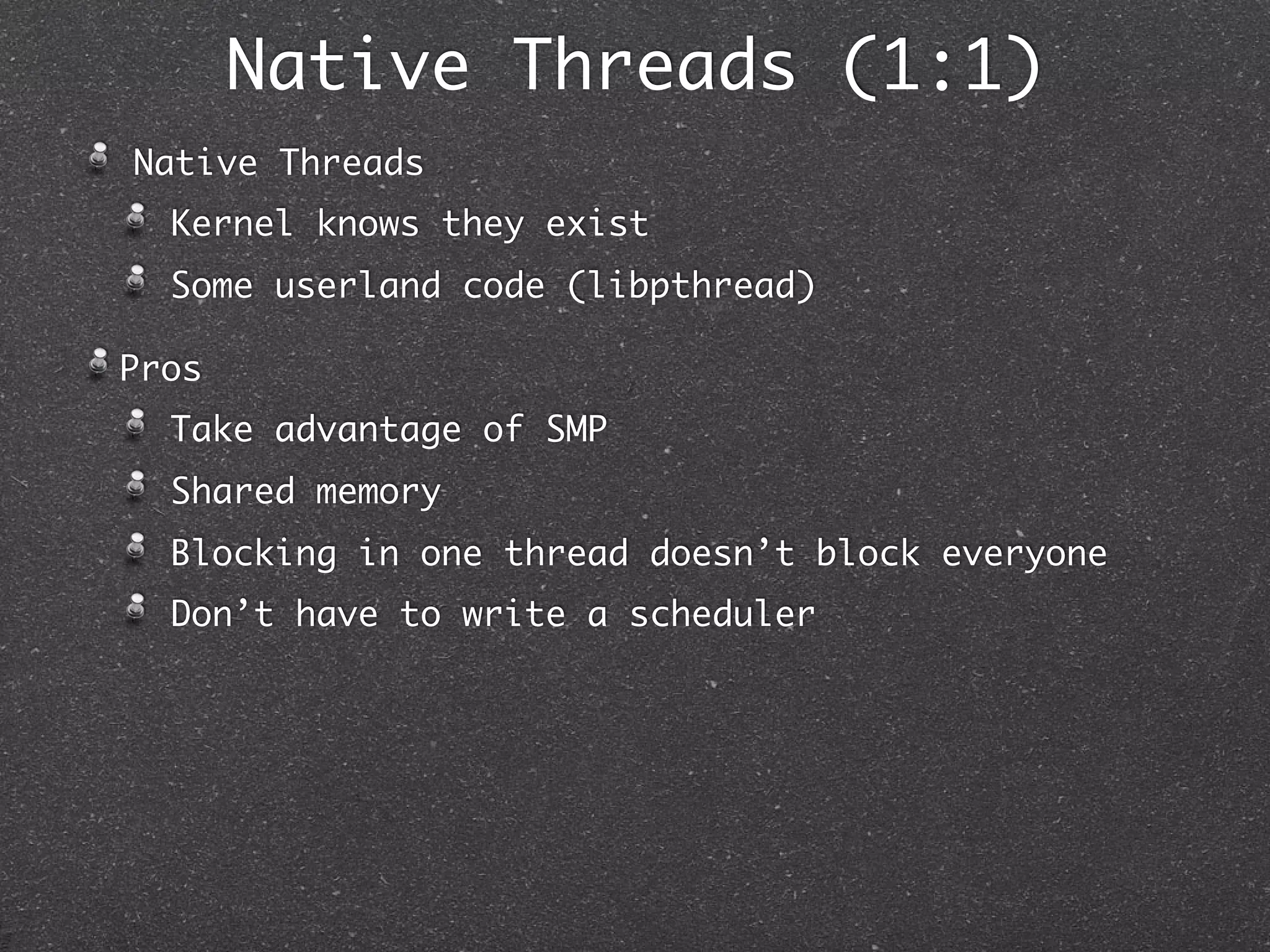

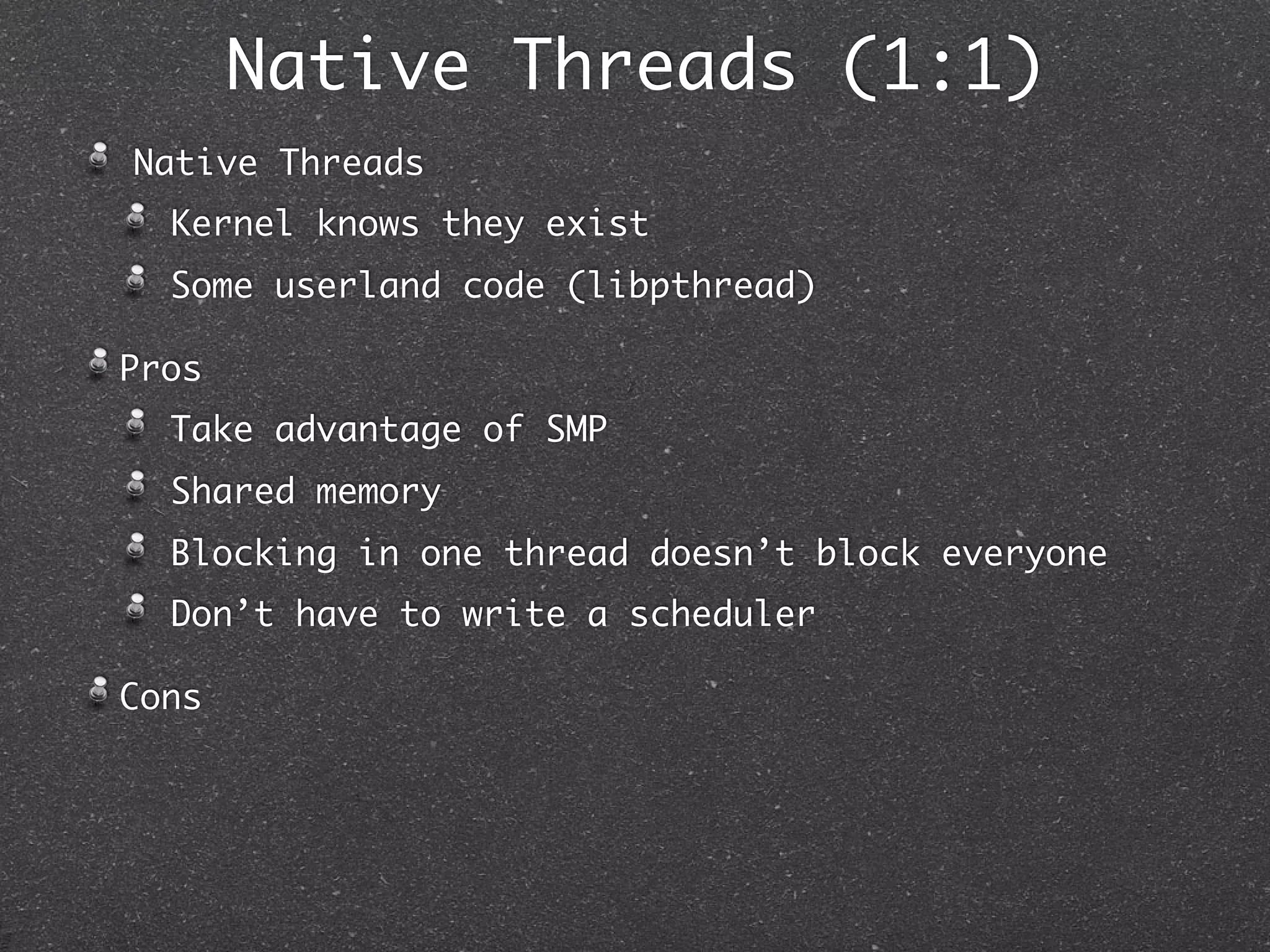

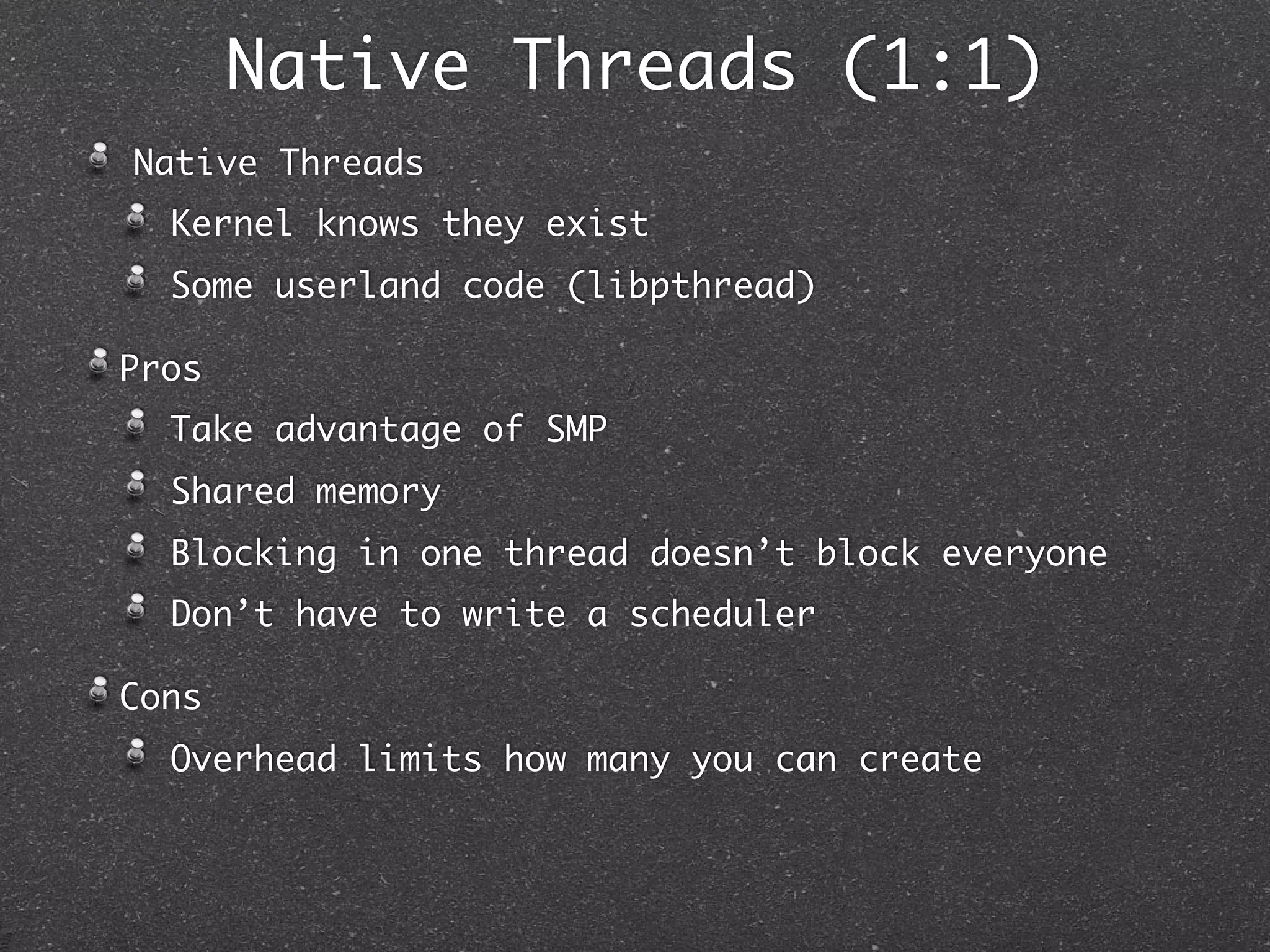

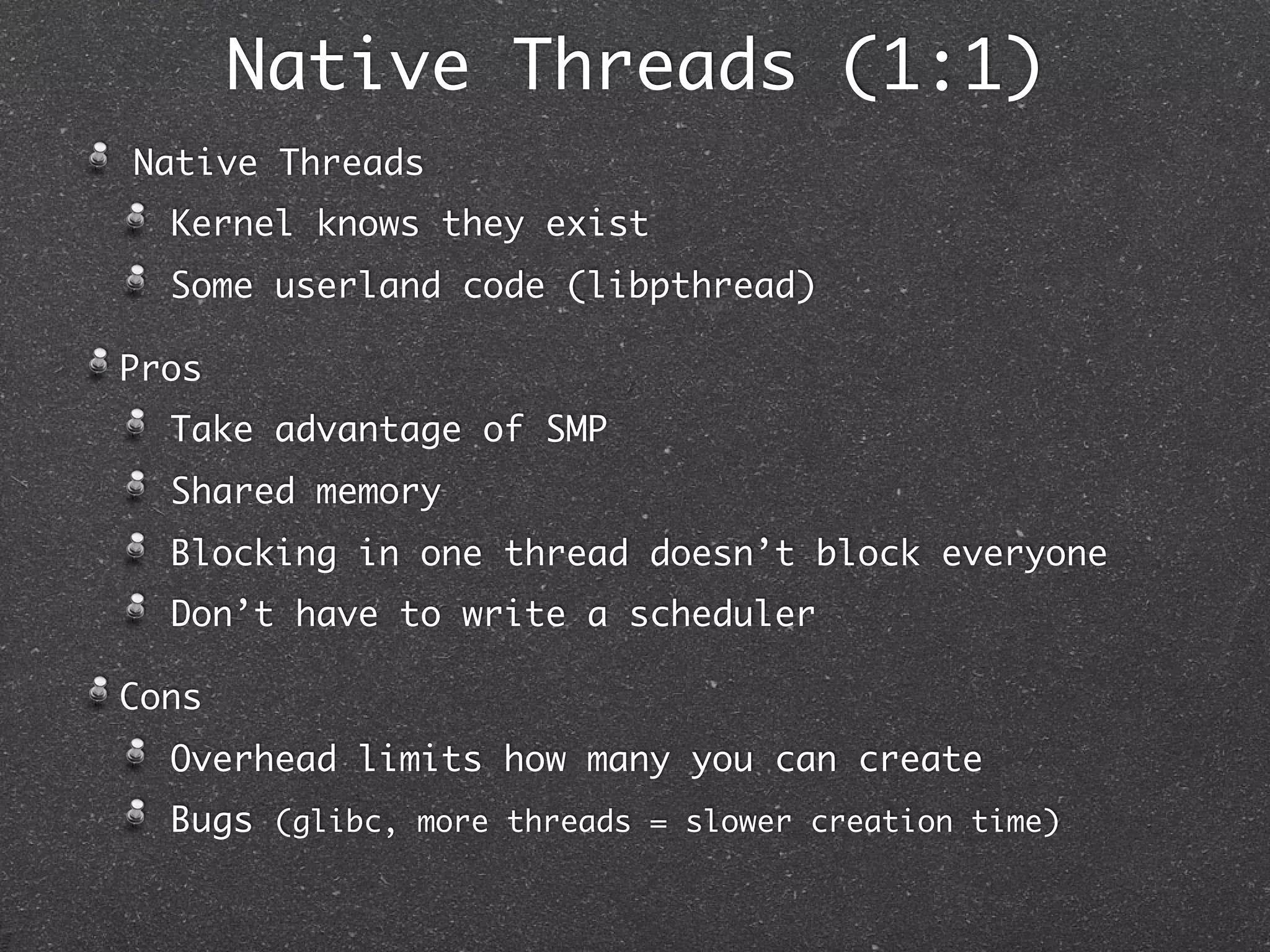

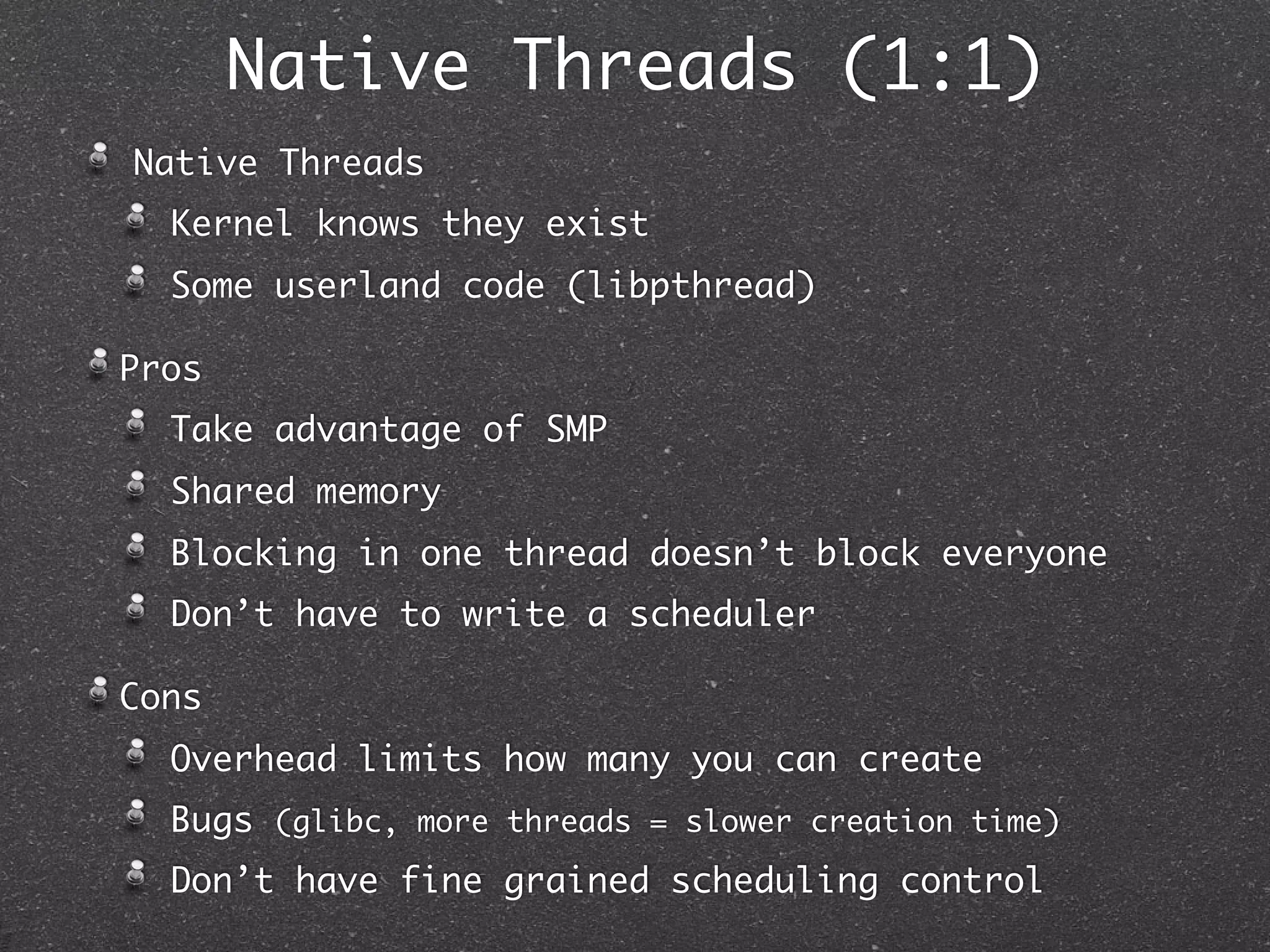

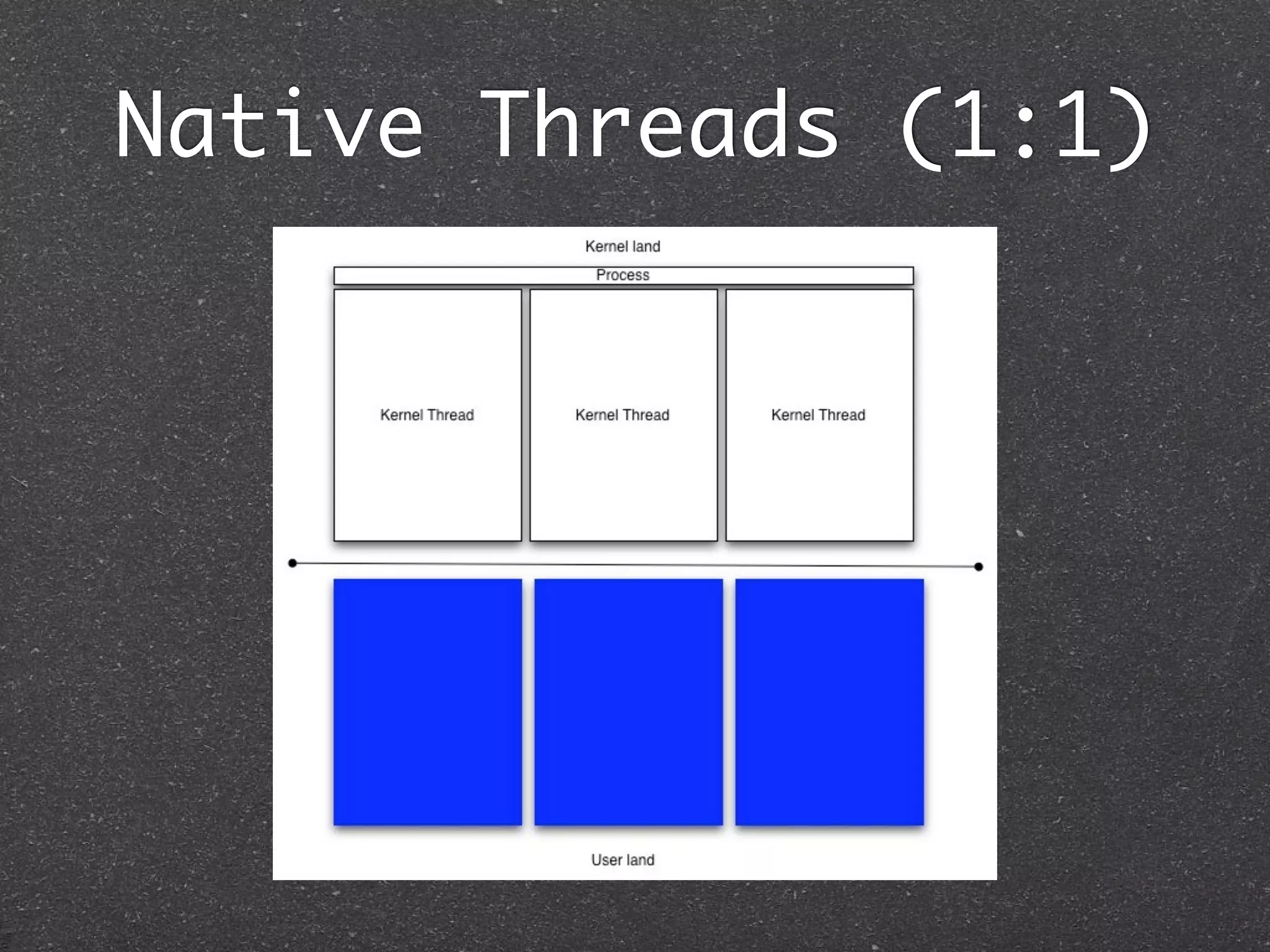

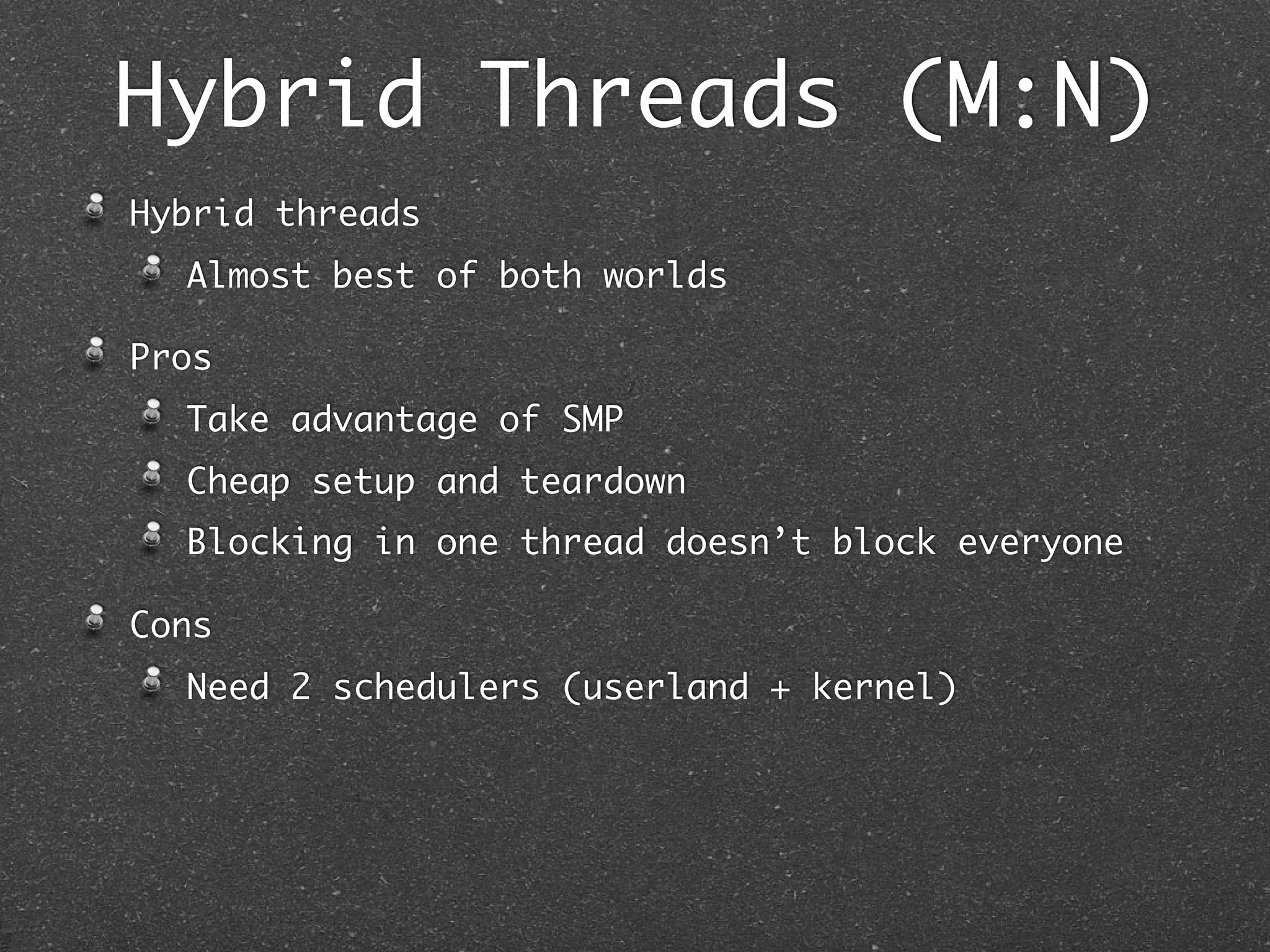

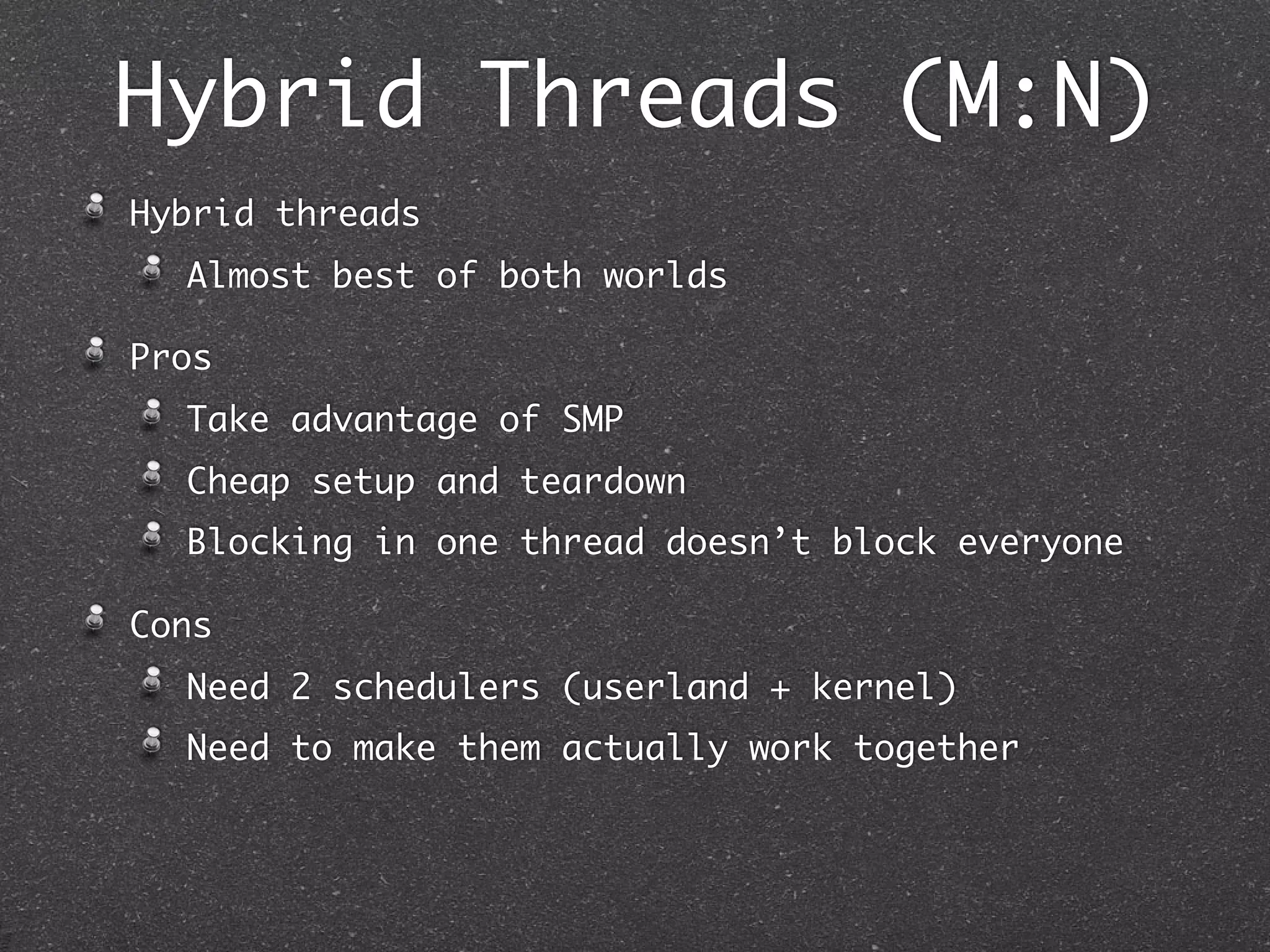

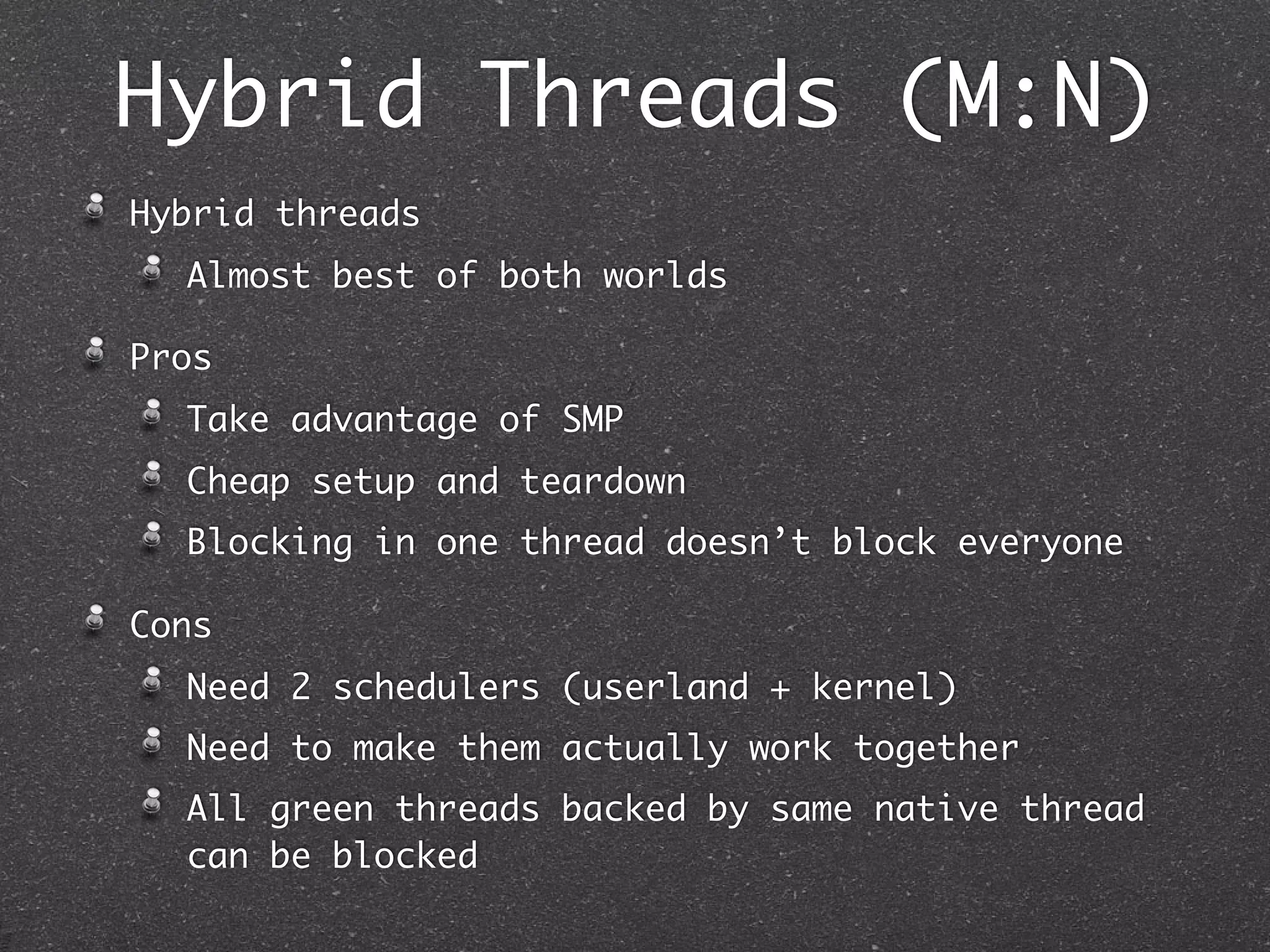

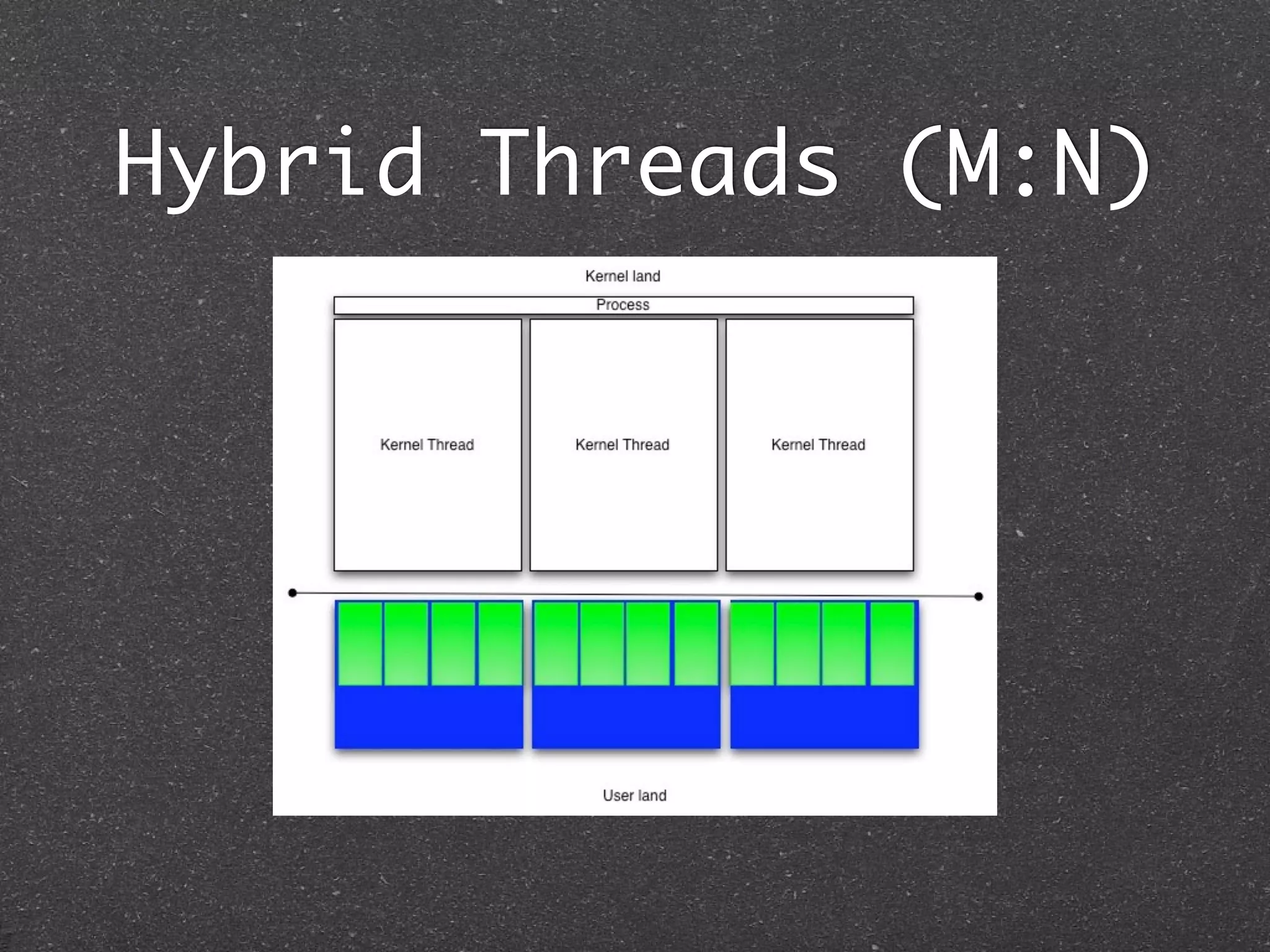

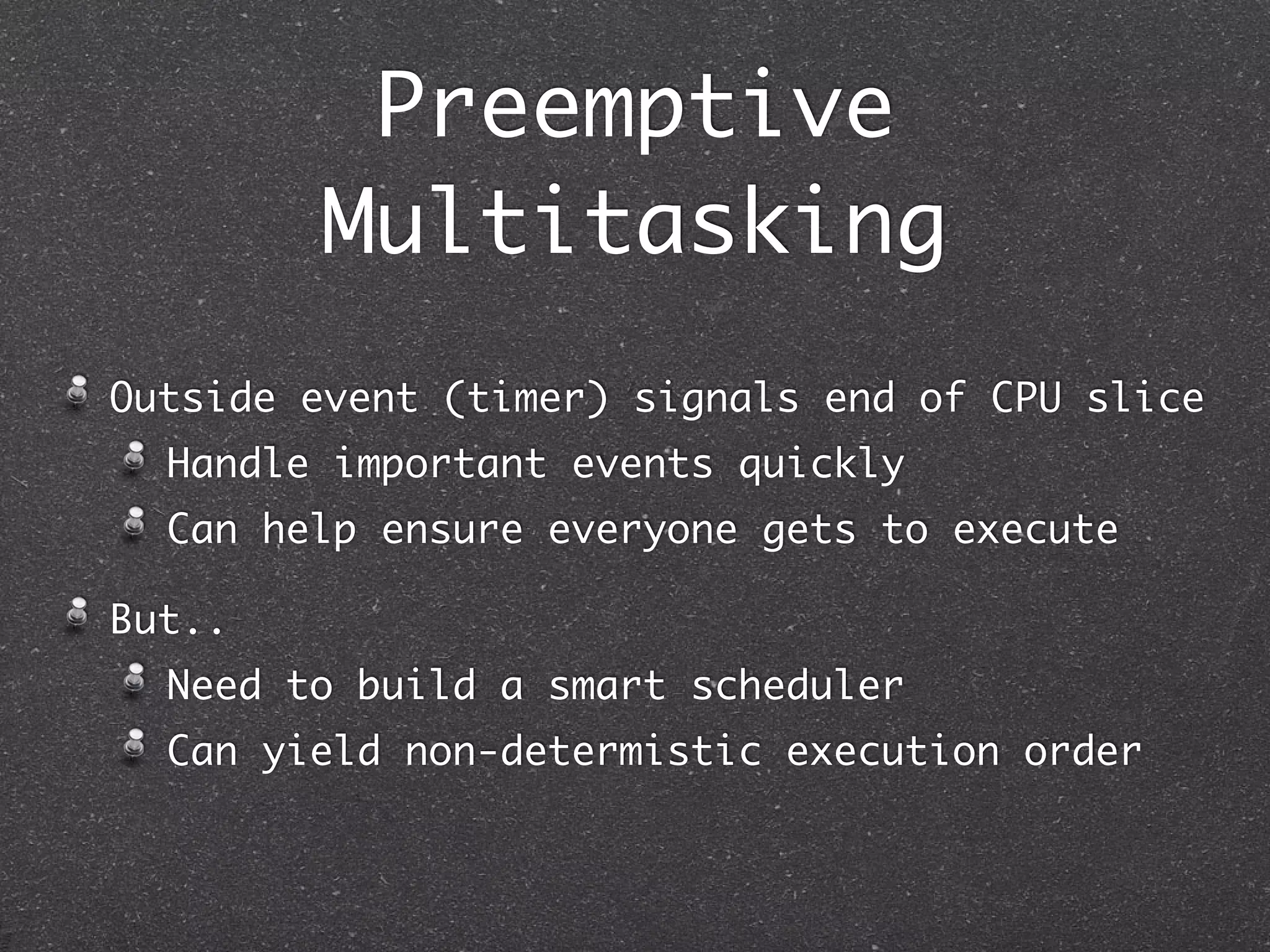

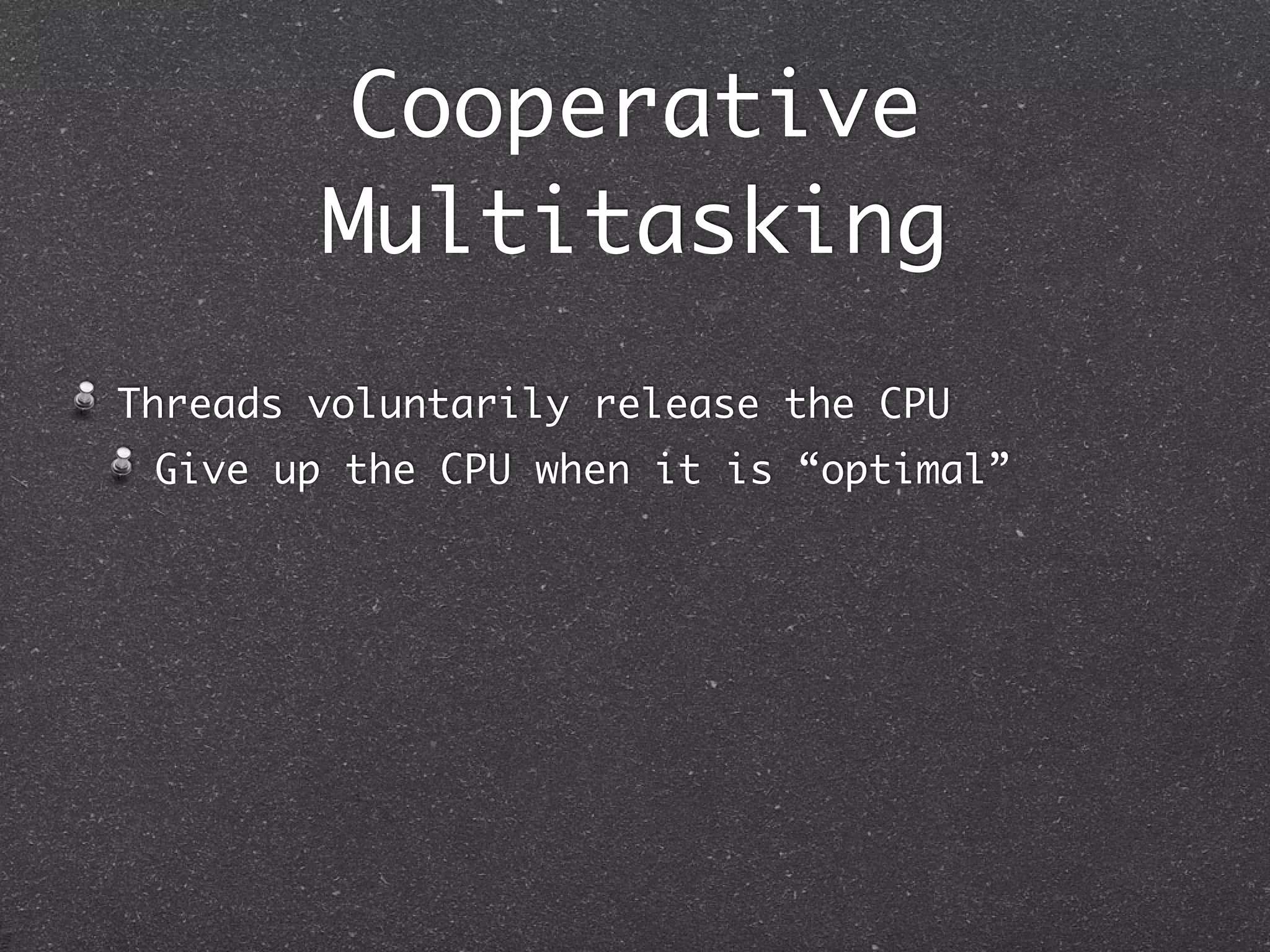

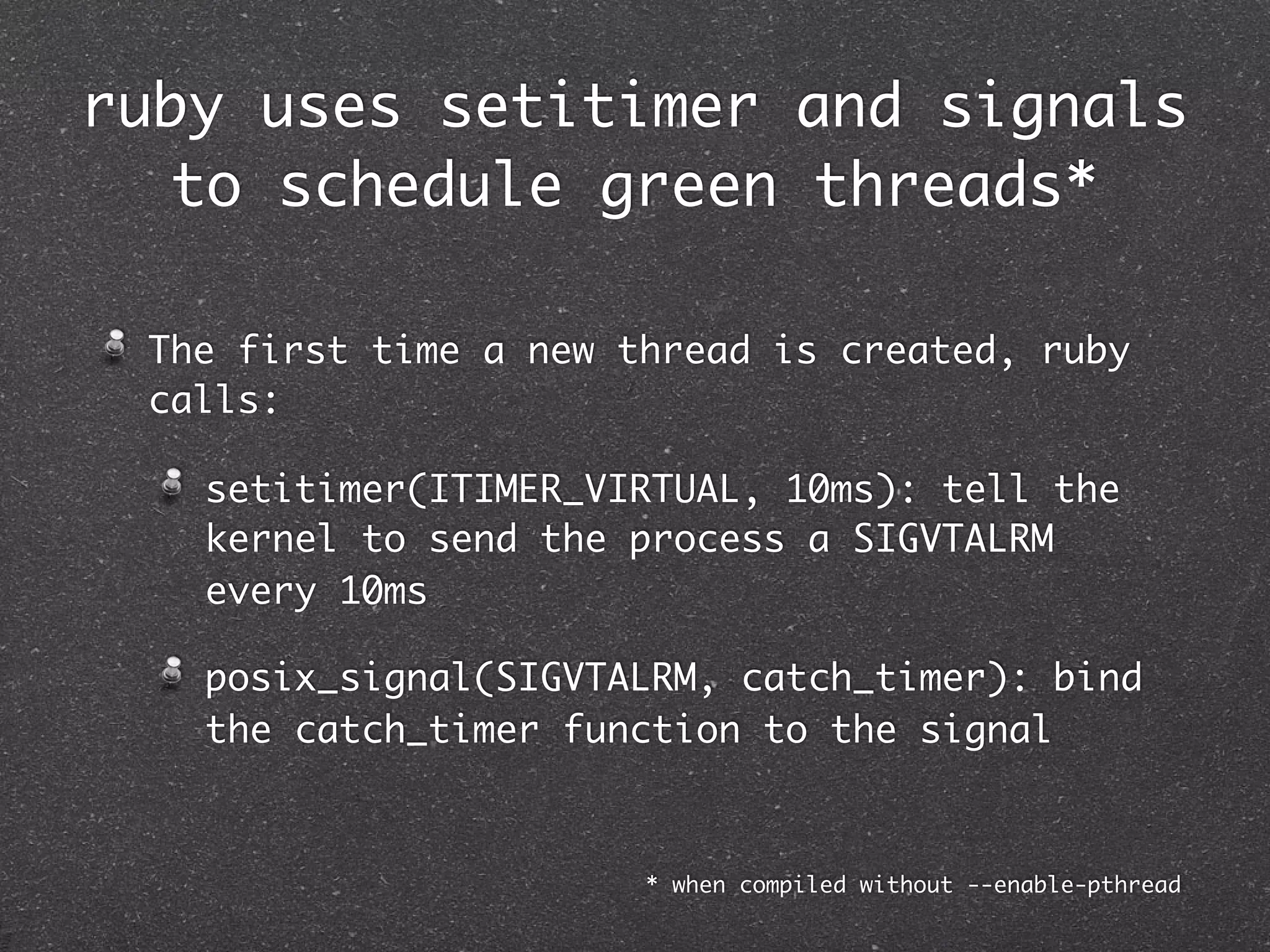

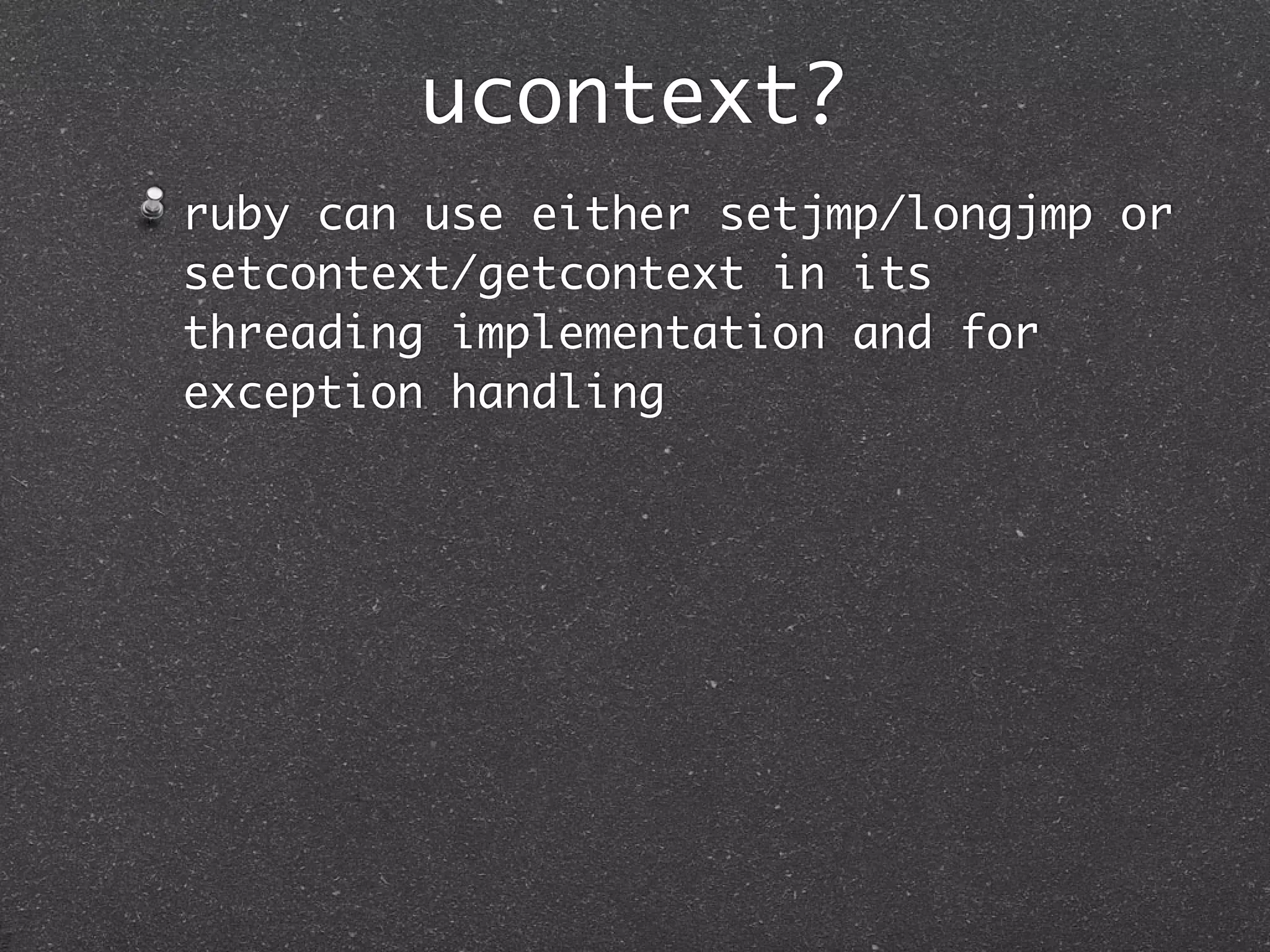

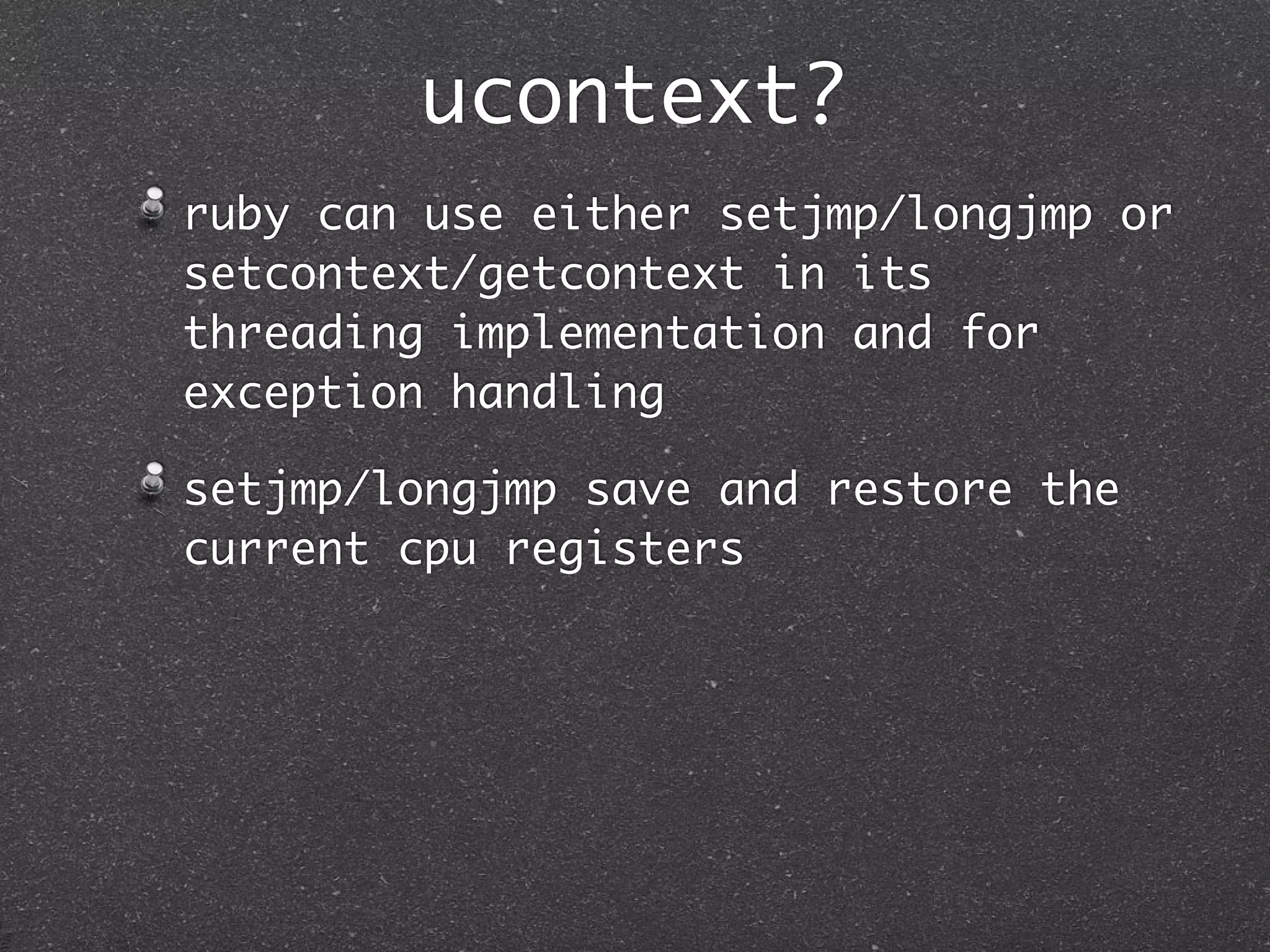

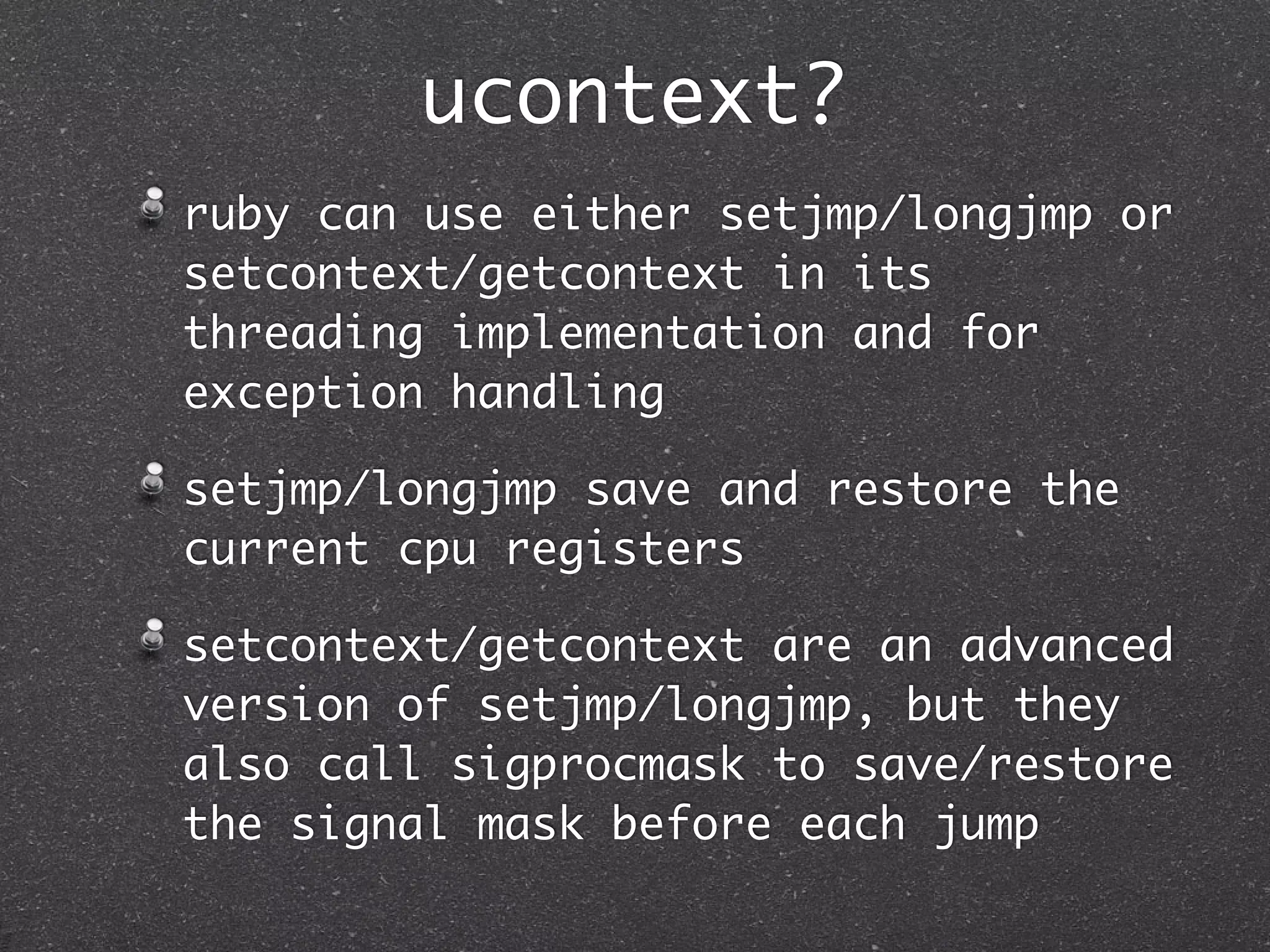

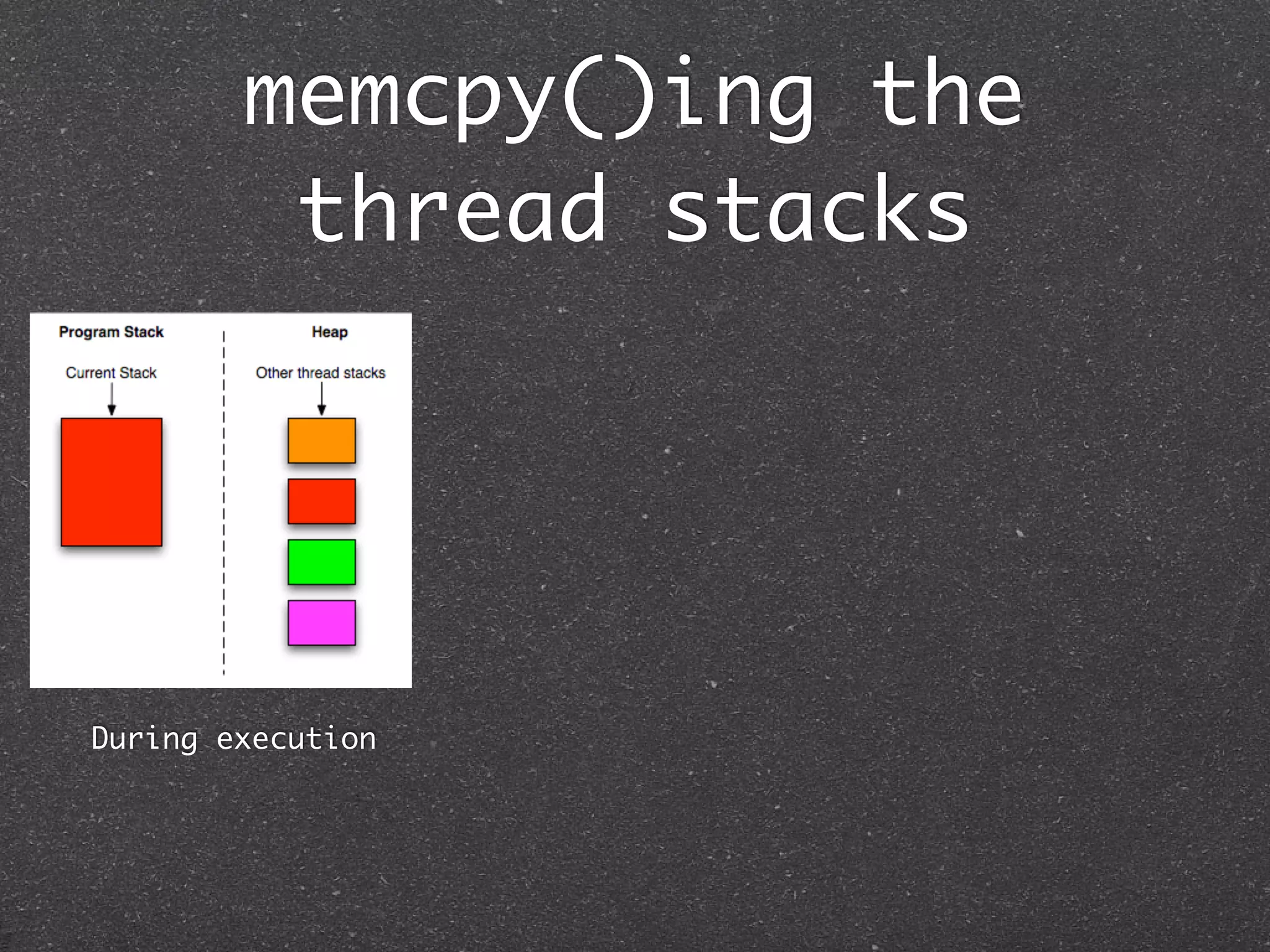

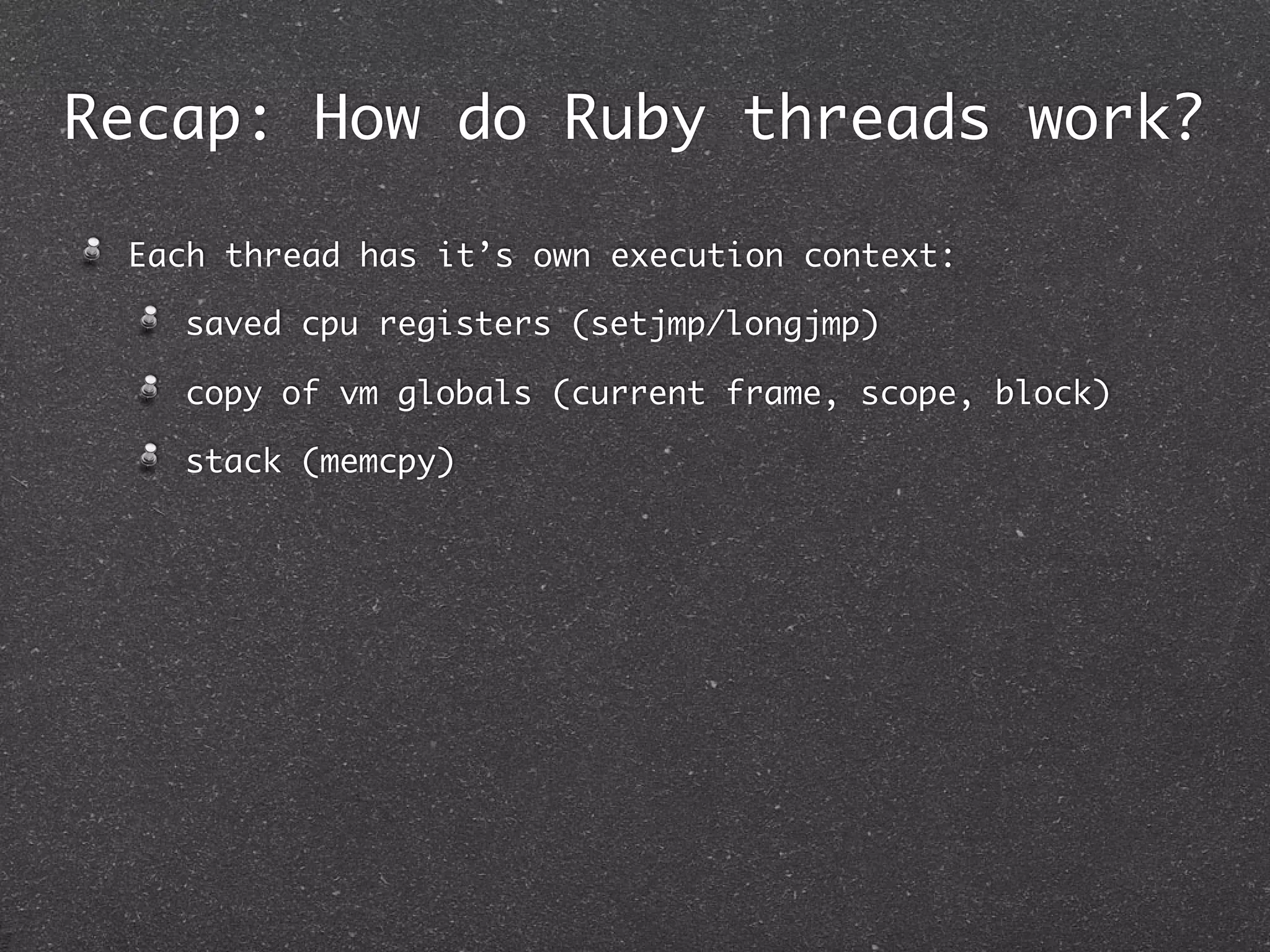

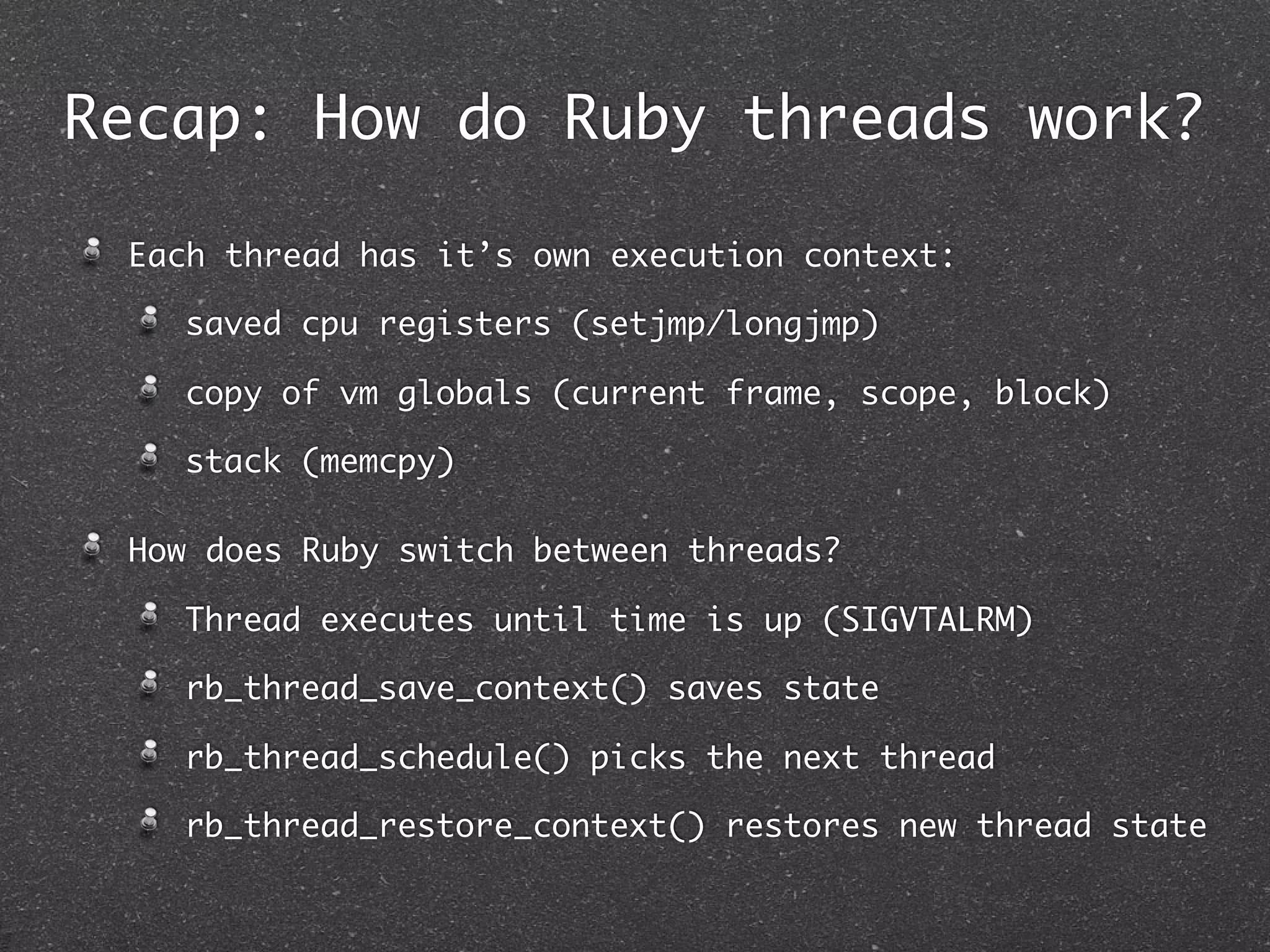

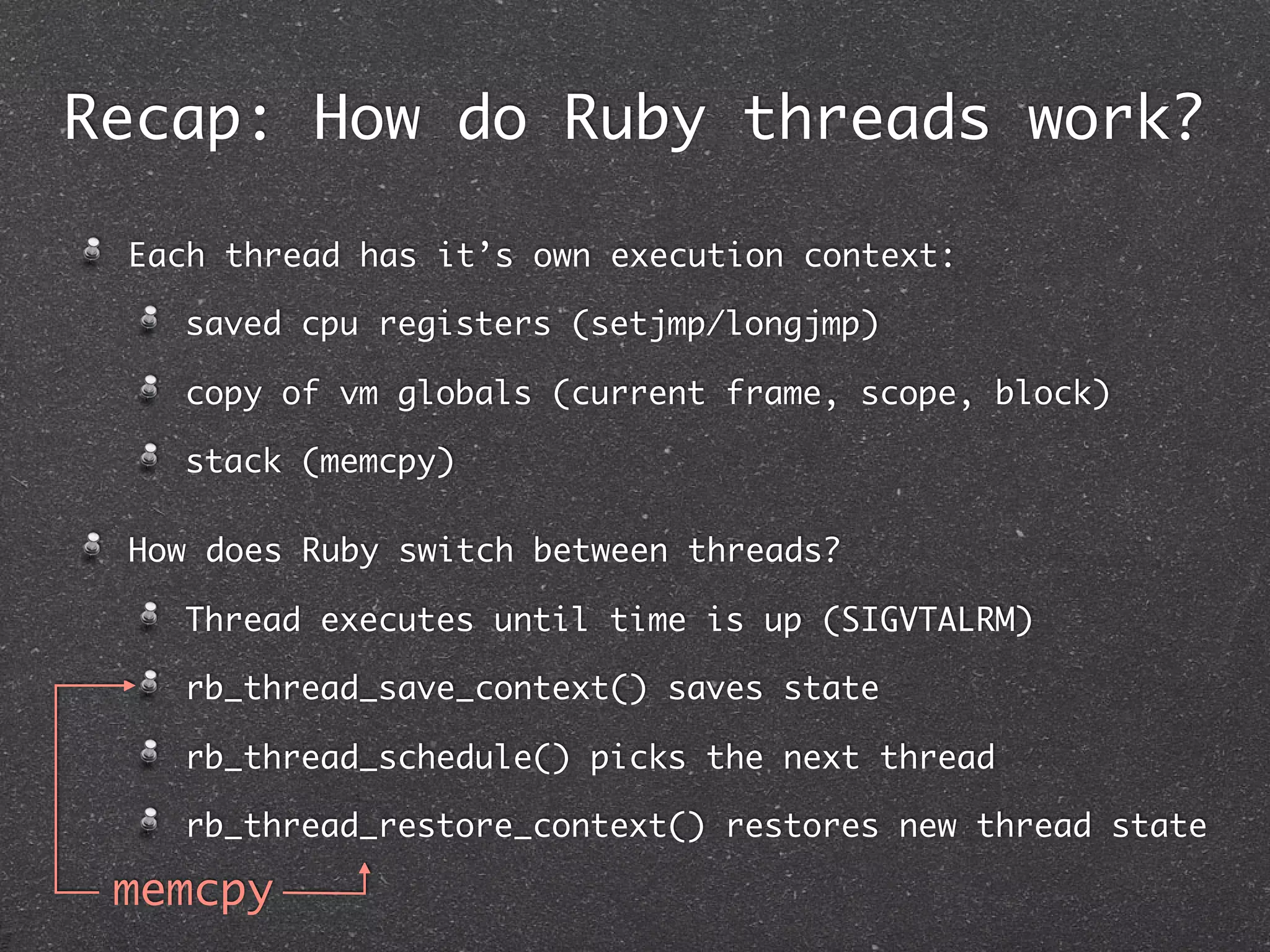

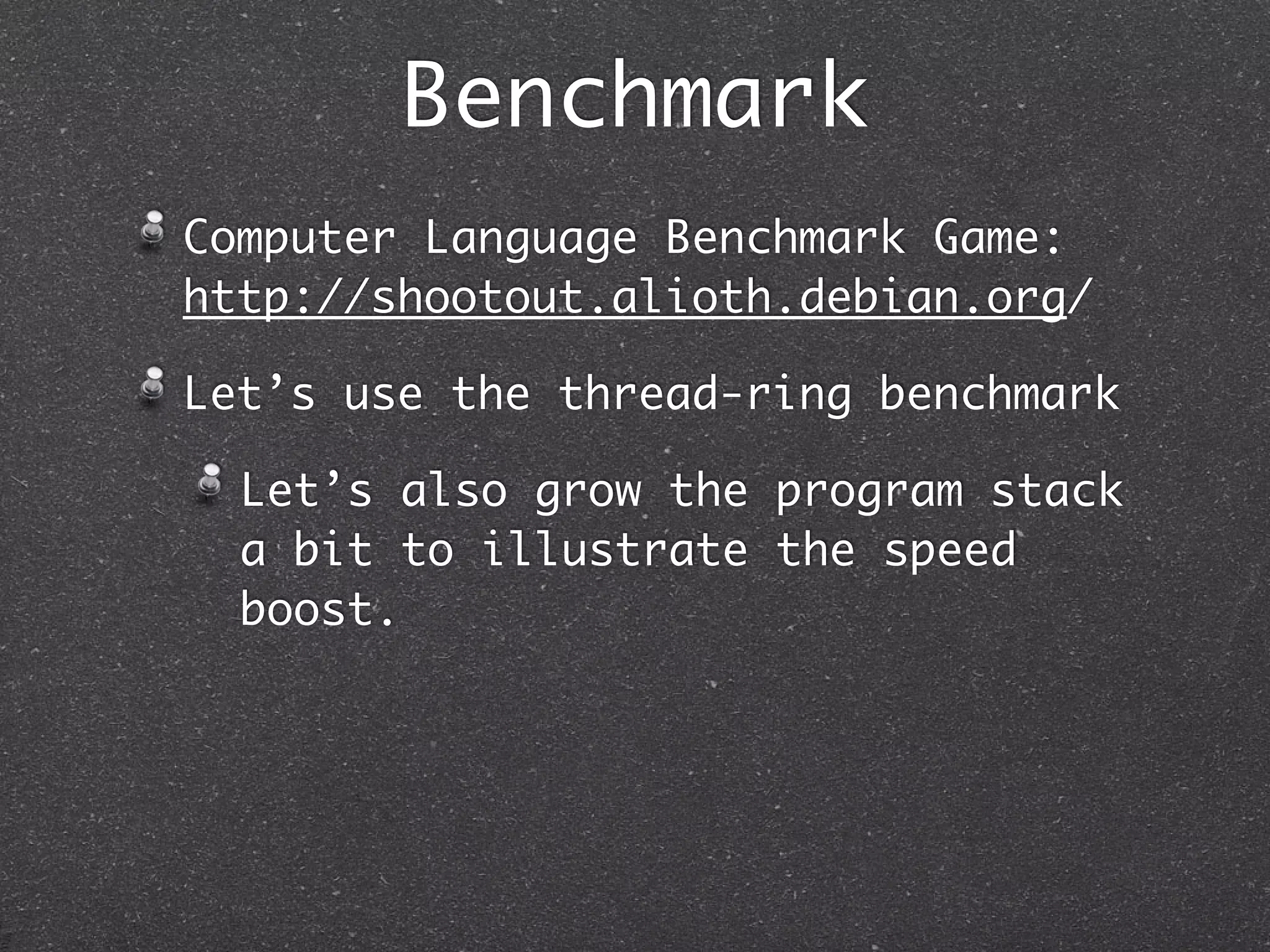

The document discusses three threading models: green threads, native threads, and hybrid threads. Green threads are lightweight threads implemented entirely in userspace. Switching between them is cheap, but when one green thread makes a blocking system call, every thread in the process blocks. Native threads are real OS-level threads: they cost more to create and switch, but one thread can block without stalling the others. Hybrid threads combine the two by multiplexing many green threads onto a smaller pool of native threads. The document also covers preemptive and cooperative multitasking, and notes that Ruby's fibers are green threads that use cooperative multitasking: a fiber runs until it explicitly gives up control.
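A minimal sketch of cooperative multitasking with Ruby fibers, assuming Ruby 1.9 or later (where `Fiber` is built in). Each fiber runs until it calls `Fiber.yield`, and nothing switches between them except the explicit `resume` calls, so the interleaving is fully deterministic. The `ping`/`pong` fibers and the `log` array are illustrative names only.

```ruby
# Two fibers interleaved by hand: each runs until it calls Fiber.yield,
# which hands control back to whoever called #resume.
log = []

ping = Fiber.new do
  3.times { |i| log << "ping #{i}"; Fiber.yield }
end

pong = Fiber.new do
  3.times { |i| log << "pong #{i}"; Fiber.yield }
end

# The "scheduler" is just this loop: nothing preempts a running fiber.
3.times do
  ping.resume
  pong.resume
end

p log # => ["ping 0", "pong 0", "ping 1", "pong 1", "ping 2", "pong 2"]
```

Because a fiber is never preempted, a fiber that loops forever without yielding would starve the other one; that is the trade-off cooperative scheduling makes against preemptive threads.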

![strace -ttTp <pid> -o <file>

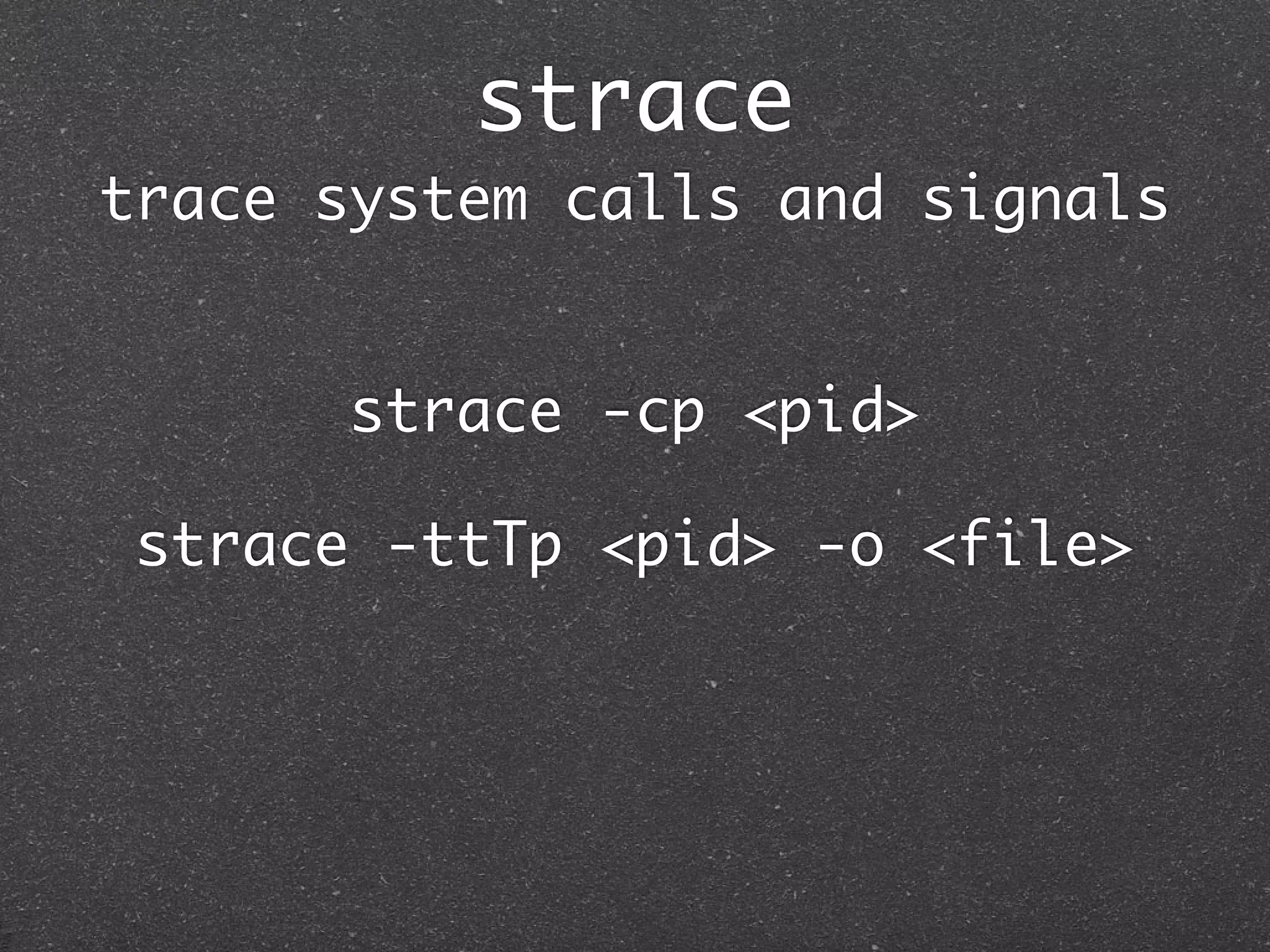

-t

Prefix each line of the trace with the time of day.

-tt

If given twice, the time printed will include the microseconds.

-T

Show the time spent in system calls. This records the time difference between the beginning and the end of each system call.

-o filename

Write the trace output to the file filename rather than to stderr.

01:09:11.266949 epoll_wait(9, {{EPOLLIN, {u32=68841296, u64=68841296}}}, 4096, 50) = 1 <0.033109>

01:09:11.300102 accept(10, {sa_family=AF_INET, sin_port=38313, sin_addr="127.0.0.1"}, [1226]) = 22 <0.000014>

01:09:11.300190 fcntl(22, F_GETFL) = 0x2 (flags O_RDWR) <0.000007>

01:09:11.300237 fcntl(22, F_SETFL, O_RDWR|O_NONBLOCK) = 0 <0.000008>

01:09:11.300277 setsockopt(22, SOL_TCP, TCP_NODELAY, [1], 4) = 0 <0.000008>

01:09:11.300489 accept(10, 0x7fff5d9c07d0, [1226]) = -1 EAGAIN <0.000014>

01:09:11.300547 epoll_ctl(9, EPOLL_CTL_ADD, 22, {EPOLLIN, {u32=108750368, u64=108750368}}) = 0 <0.000009>

01:09:11.300593 epoll_wait(9, {{EPOLLIN, {u32=108750368, u64=108750368}}}, 4096, 50) = 1 <0.000007>

01:09:11.300633 read(22, "GET / HTTP/1.1\r"..., 16384) = 772 <0.000012>

01:09:11.301727 rt_sigprocmask(SIG_SETMASK, [], NULL, 8) = 0 <0.000007>

01:09:11.302095 poll([{fd=5, events=POLLIN|POLLPRI}], 1, 0) = 0 (Timeout) <0.000008>

01:09:11.302144 write(5, "1000000-0003SELECT * FROM `table`"..., 56) = 56 <0.000023>

01:09:11.302221 read(5, "25101,20x234m"..., 16384) = 284 <1.300897>](https://image.slidesharecdn.com/threadedawesome-090829020831-phpapp02/75/Threaded-Awesome-73-2048.jpg)
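The `-T` column is what makes this trace useful: almost every call finishes in microseconds, while the final `read(5, ...)` on the descriptor that just received the `SELECT` took about 1.3 seconds. A small Ruby sketch for ranking calls by that trailing `<seconds>` value is below. `slowest_syscalls` and `Syscall` are hypothetical names, and the regular expression only assumes the `-ttT` line shape shown on the slide; any line that does not match it is skipped.

```ruby
# Hypothetical helper: rank system calls from `strace -ttT` output by the
# time spent in each call (the trailing <seconds> field added by -T).
Syscall = Struct.new(:timestamp, :name, :seconds)

def slowest_syscalls(trace, limit = 3)
  calls = trace.each_line.filter_map do |line|
    # HH:MM:SS.micros name(args...) = result <seconds>
    m = line.match(/\A(\d\d:\d\d:\d\d\.\d+) (\w+)\(.*<(\d+\.\d+)>\s*\z/)
    Syscall.new(m[1], m[2], m[3].to_f) if m
  end
  calls.max_by(limit, &:seconds) # largest first
end

# A few lines copied from the trace above.
trace = <<~'TRACE'
  01:09:11.266949 epoll_wait(9, {{EPOLLIN, {u32=68841296, u64=68841296}}}, 4096, 50) = 1 <0.033109>
  01:09:11.300633 read(22, "GET / HTTP/1.1\r"..., 16384) = 772 <0.000012>
  01:09:11.302144 write(5, "1000000-0003SELECT * FROM `table`"..., 56) = 56 <0.000023>
  01:09:11.302221 read(5, "25101,20x234m"..., 16384) = 284 <1.300897>
TRACE

slowest_syscalls(trace, 2).each do |call|
  puts "#{call.timestamp} #{call.name} took #{call.seconds}s"
end
```

Requires Ruby 2.7 or later for `filter_map`. Against a real `strace -o <file>` log, the same helper can be fed `File.read(path)`.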

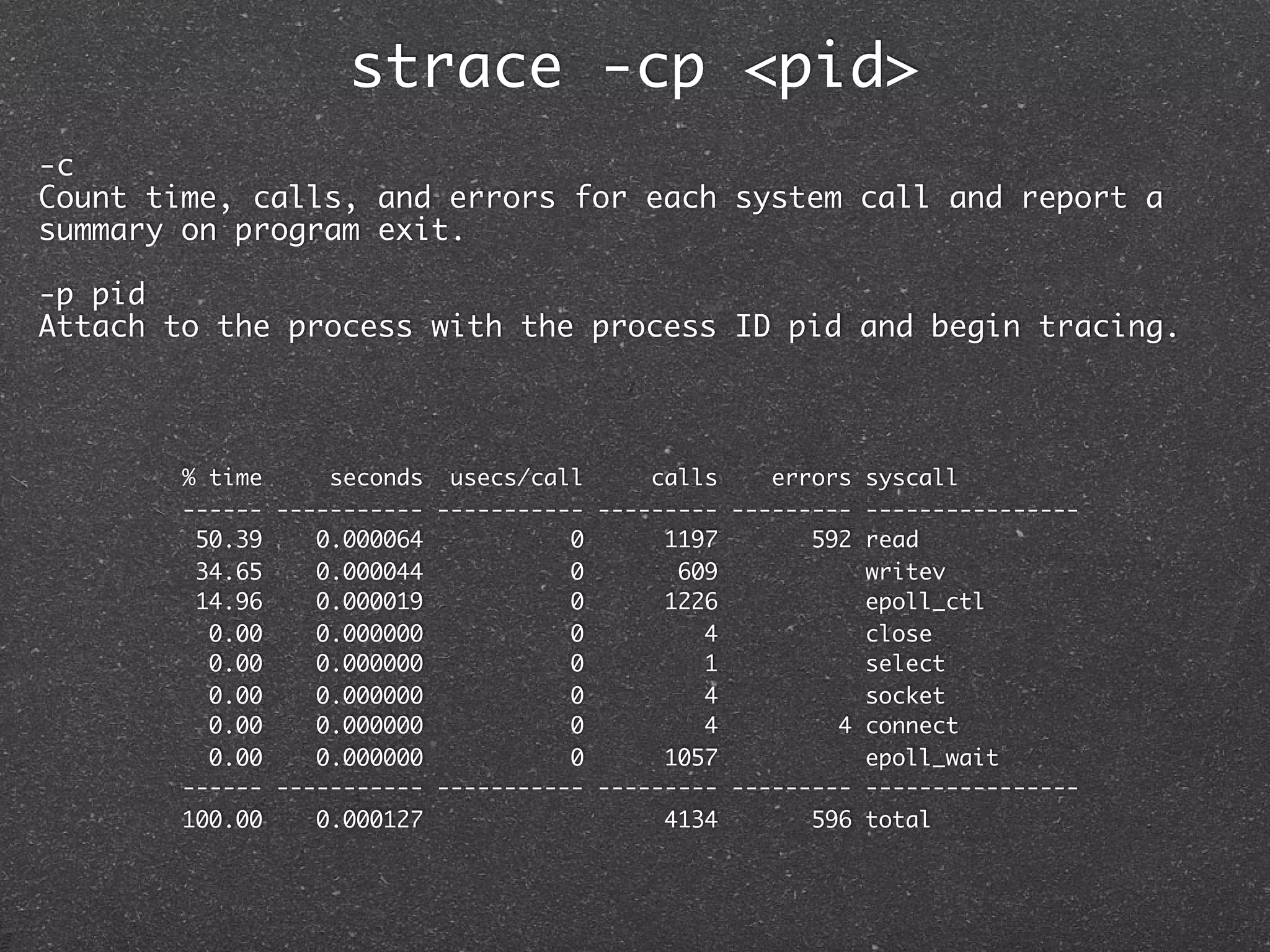

![Why are our debian servers so slow?

strace -c ruby threaded.rb

% time seconds usecs/call calls errors syscall

------ ----------- ----------- --------- --------- ----------------

100.00 0.326334 0 3568567 rt_sigprocmask

0.00 0.000000 0 9 read

0.00 0.000000 0 10 open

0.00 0.000000 0 10 close

0.00 0.000000 0 9 fstat

0.00 0.000000 0 25 mmap

------ ----------- ----------- --------- --------- ----------------

100.00 0.326334 3568685 0 total

strace -ttT ruby threaded.rb

18:42:39.566788 rt_sigprocmask(SIG_BLOCK, NULL, [], 8) = 0 <0.000006>

18:42:39.566836 rt_sigprocmask(SIG_BLOCK, NULL, [], 8) = 0 <0.000006>

18:42:39.567083 rt_sigprocmask(SIG_SETMASK, [], NULL, 8) = 0 <0.000006>

18:42:39.567131 rt_sigprocmask(SIG_BLOCK, NULL, [], 8) = 0 <0.000006>

18:42:39.567415 rt_sigprocmask(SIG_BLOCK, NULL, [], 8) = 0 <0.000006>

3.5 million sigprocmasks.. wtf?](https://image.slidesharecdn.com/threadedawesome-090829020831-phpapp02/75/Threaded-Awesome-95-2048.jpg)
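`strace -c` replaces the per-call log with a per-syscall summary, which is how the 3.5 million `rt_sigprocmask` calls stand out. As a sketch, the table can be reduced to call counts in Ruby. `syscall_counts` is a hypothetical helper, and the column order (`% time`, `seconds`, `usecs/call`, `calls`, optional `errors`, `syscall`) is taken from the slides rather than from any guaranteed strace output format.

```ruby
# Hypothetical helper: turn a `strace -c` summary into { "syscall" => calls }.
def syscall_counts(summary)
  summary.each_line.with_object({}) do |line, counts|
    fields = line.split
    # Data rows start with a numeric "% time" ("nan" when nothing was timed);
    # this skips the header and the dashed separator lines.
    next unless fields.size >= 5 && fields[0].match?(/\A(?:\d+\.\d+|nan)\z/)
    name = fields.last
    next if name == "total"
    counts[name] = Integer(fields[3]) # 4th column is the call count
  end
end

# The summary from the slide above.
summary = <<~'OUT'
  % time     seconds  usecs/call     calls    errors syscall
  ------ ----------- ----------- --------- --------- ----------------
  100.00    0.326334           0   3568567           rt_sigprocmask
    0.00    0.000000           0         9           read
    0.00    0.000000           0        10           open
    0.00    0.000000           0        10           close
    0.00    0.000000           0         9           fstat
    0.00    0.000000           0        25           mmap
  ------ ----------- ----------- --------- --------- ----------------
  100.00    0.326334               3568685         0 total
OUT

counts = syscall_counts(summary)
name, calls = counts.max_by { |_, n| n }
puts "#{name}: #{calls} calls"
```

Rows that carry an `errors` value (such as `open` in the patched run) still parse, because the call count is always the fourth field.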

![PATCH: --disable-ucontext

--- a/configure.in

+++ b/configure.in

@@ -368,6 +368,10 @@

+AC_ARG_ENABLE(ucontext,

+ [ --disable-ucontext do not use getcontext()/setcontext().],

+ [disable_ucontext=yes], [disable_ucontext=no])

+

AC_ARG_ENABLE(pthread,

[ --enable-pthread use pthread library.],

[enable_pthread=$enableval], [enable_pthread=no])

@@ -1038,7 +1042,8 @@

-if test x"$ac_cv_header_ucontext_h" = xyes; then

+if test x"$ac_cv_header_ucontext_h" = xyes && test x"$disable_ucontext" = xno; then

if test x"$rb_with_pthread" = xyes; then

AC_CHECK_FUNCS(getcontext setcontext)

fi

./configure --enable-pthread --disable-ucontext

% time seconds usecs/call calls errors syscall

------ ----------- ----------- --------- --------- ----------------

nan 0.000000 0 13 read

nan 0.000000 0 21 10 open

nan 0.000000 0 11 close

------ ----------- ----------- --------- --------- ----------------

100.00 0.000000 45 10 total](https://image.slidesharecdn.com/threadedawesome-090829020831-phpapp02/75/Threaded-Awesome-104-2048.jpg)
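Whether an installed Ruby was built with these flags can be checked from `RbConfig::CONFIG["configure_args"]`, which records the arguments passed to `./configure`. A minimal sketch, assuming the arguments are whitespace-separated and possibly quoted; `configured_with?` is a hypothetical helper. On a stock Ruby, `--disable-ucontext` will not appear, since that option only exists once the patch above is applied.

```ruby
require "rbconfig"

# Hypothetical helper: true if `flag` was passed to ./configure when this Ruby
# was built. Arguments may be quoted, so quote characters are stripped first.
def configured_with?(flag, configure_args = RbConfig::CONFIG["configure_args"].to_s)
  configure_args.split.map { |arg| arg.delete(%q('")) }.include?(flag)
end

puts "built with --enable-pthread:   #{configured_with?('--enable-pthread')}"
puts "built with --disable-ucontext: #{configured_with?('--disable-ucontext')}"
```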

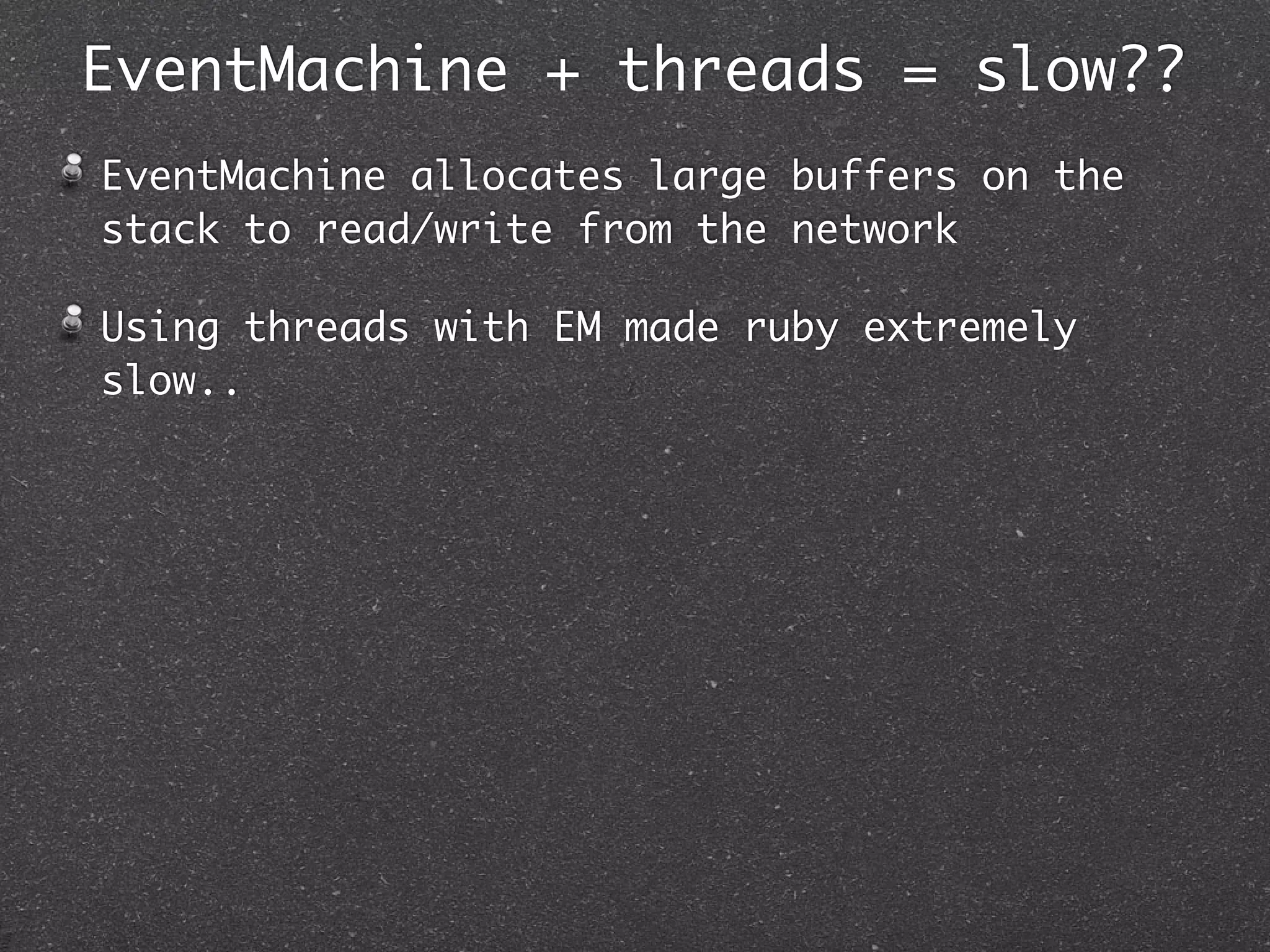

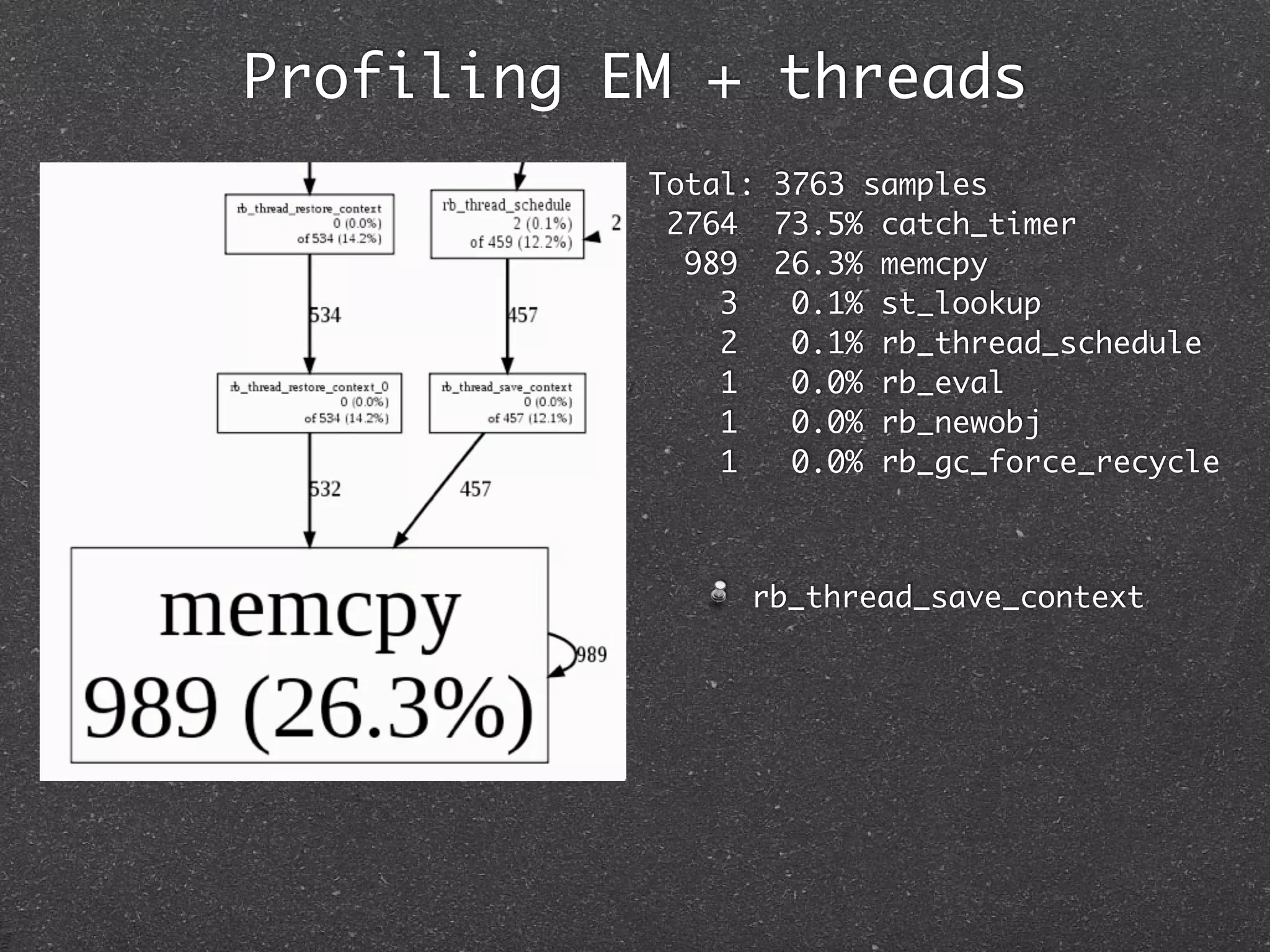

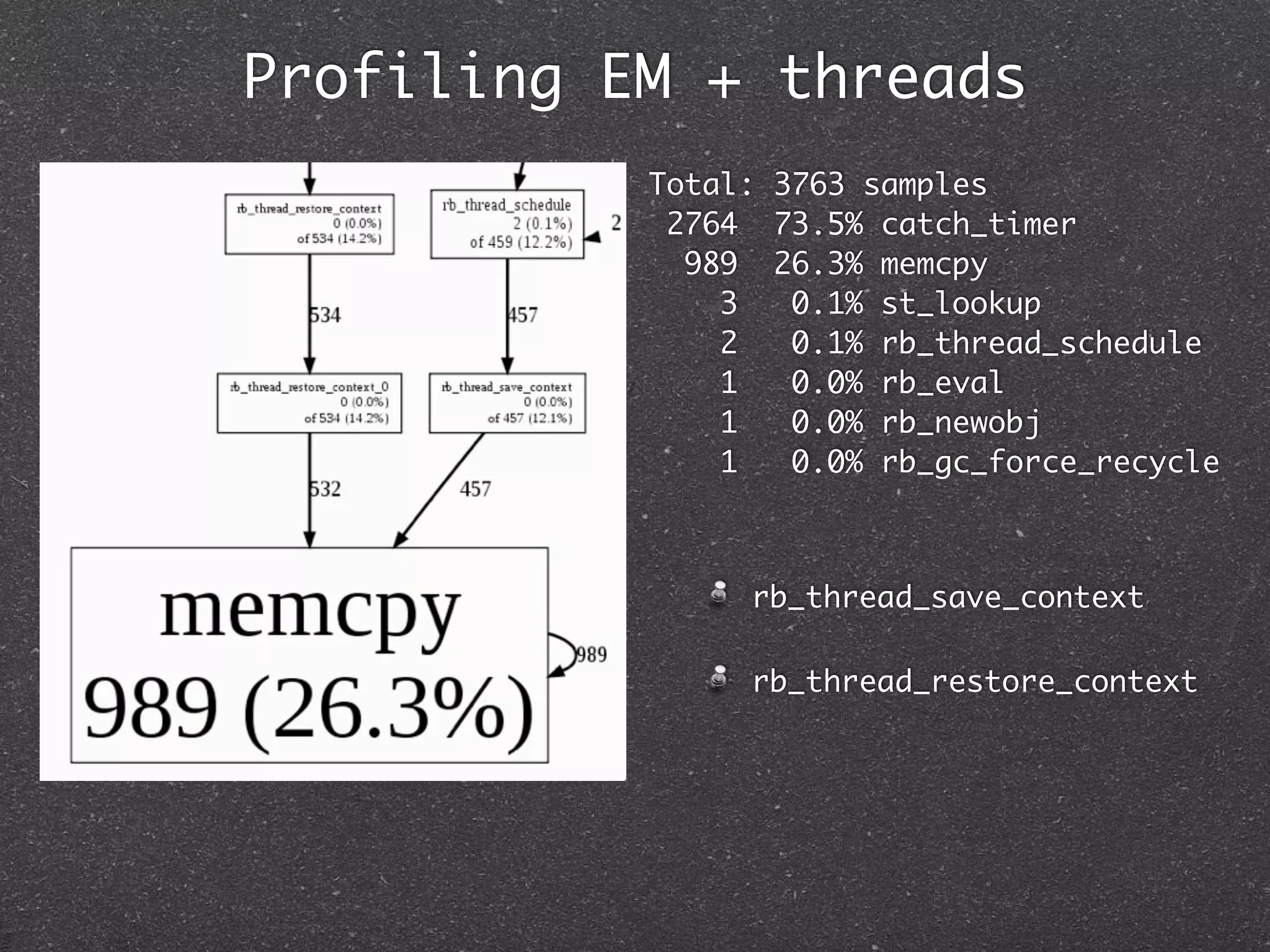

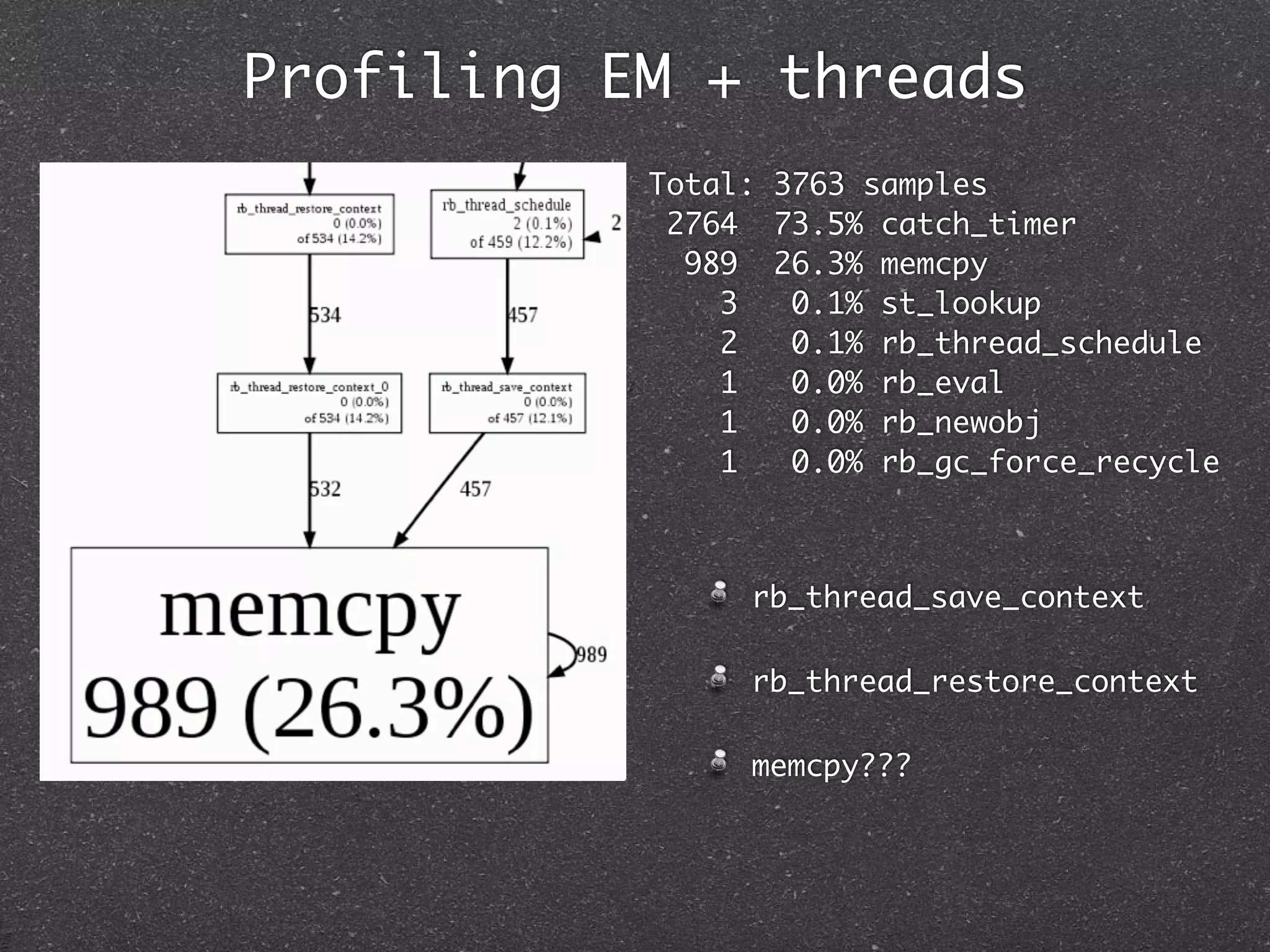

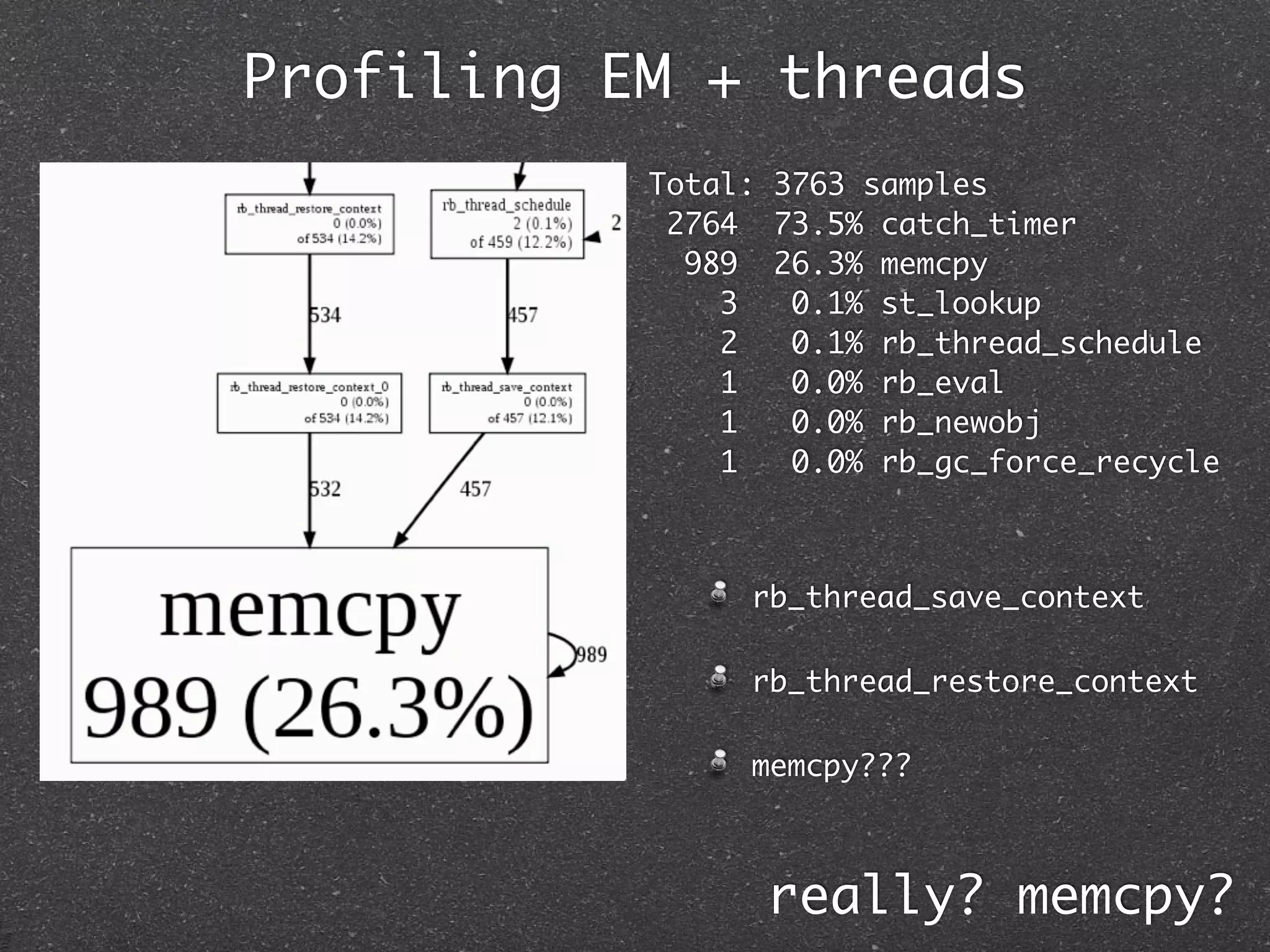

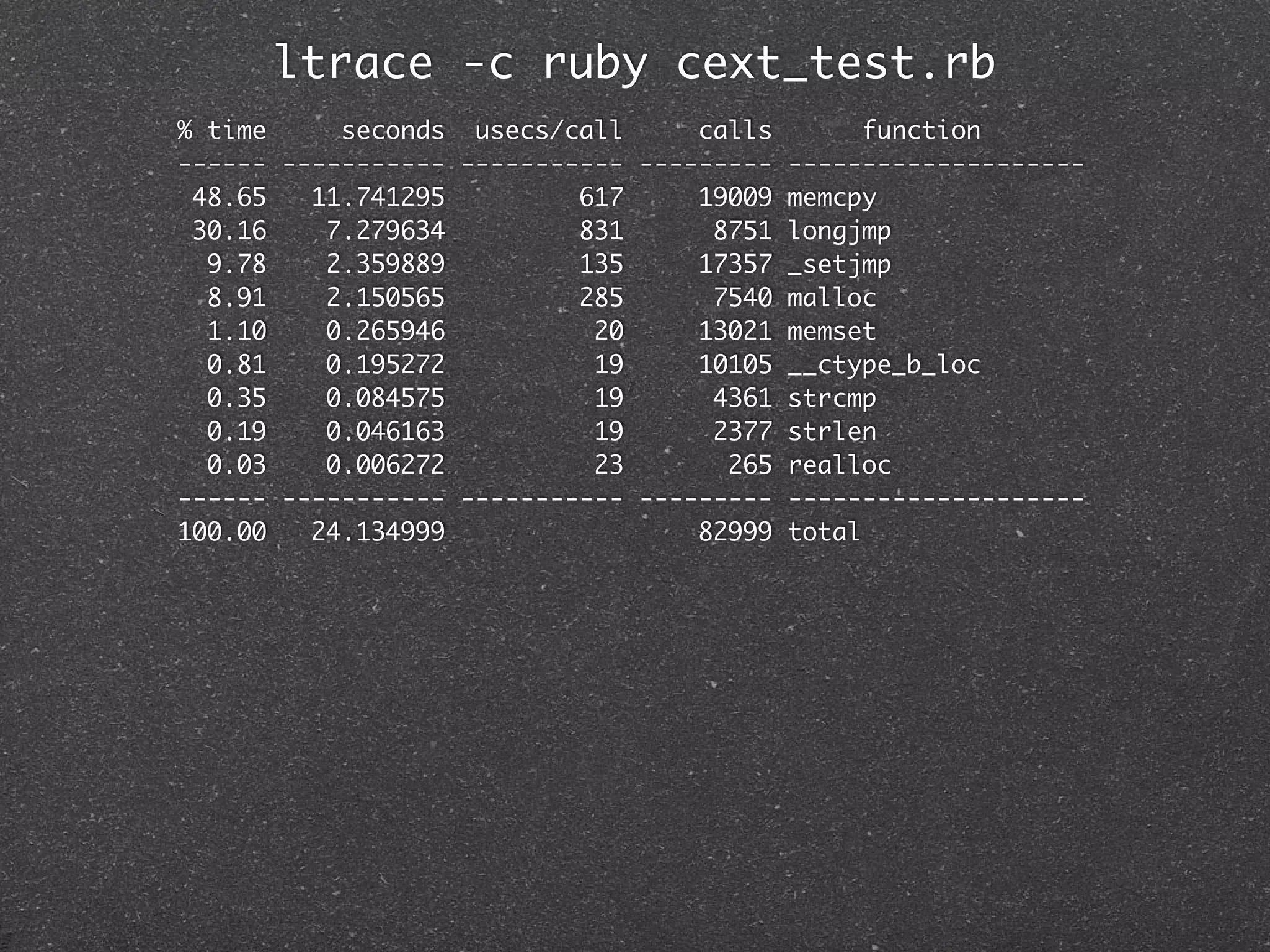

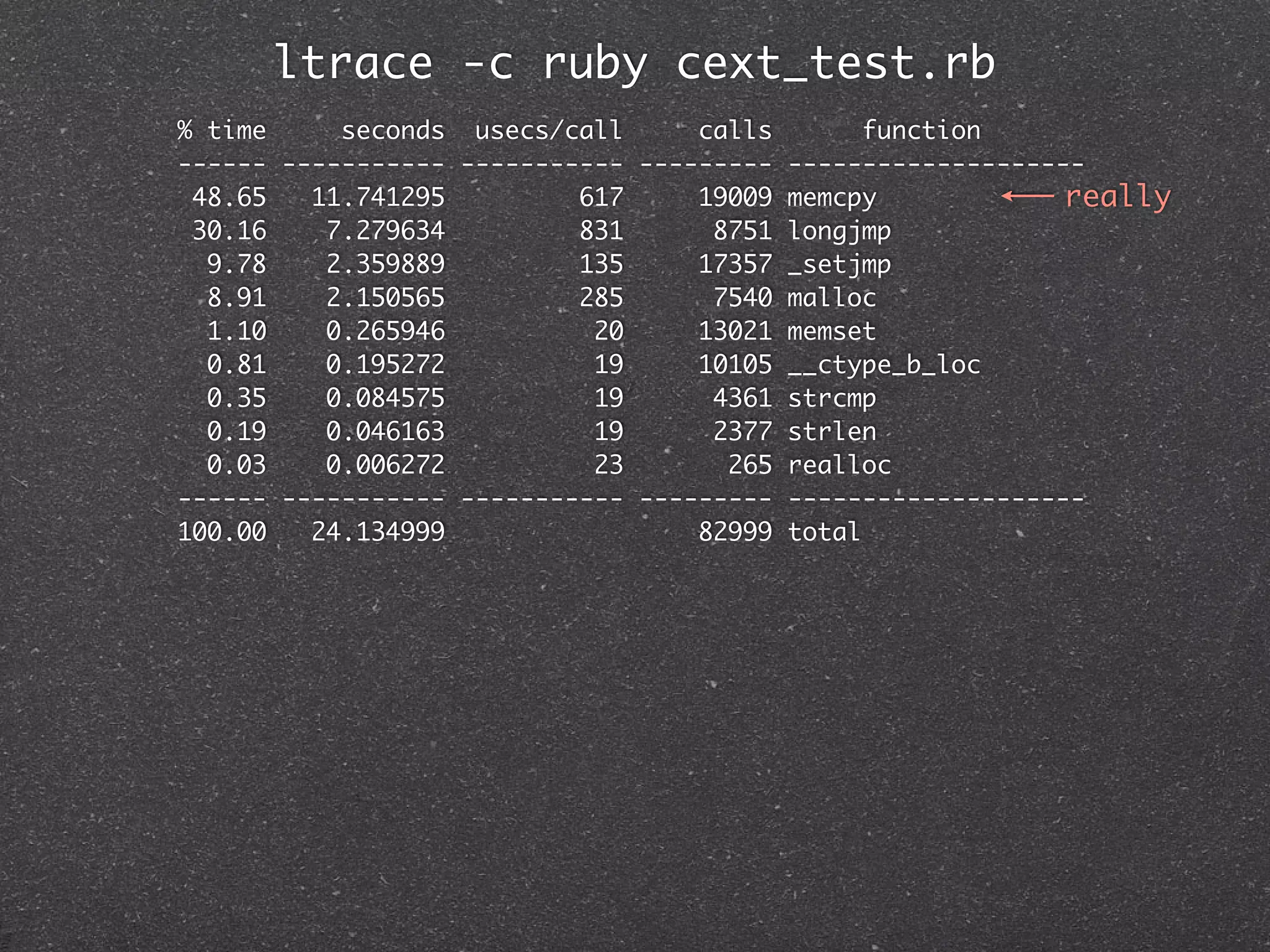

![EventMachine + threads = slow??

EventMachine allocates large buffers on the stack to read/write from the network

Using threads with EM made ruby extremely slow..

require 'cext'
(1..2).map{
  Thread.new{
    CExt.bigstack{
      100_000.times{
        1*2+3/4
        Thread.pass
      }
    }
  }
}.map{ |t| t.join }

#include "ruby.h"
VALUE bigstack(VALUE self)
{
  char buffer[ 50 * 1024 ]; /* large stack frame */
  if (rb_block_given_p()) rb_yield(Qnil);
  return Qnil;
}
void Init_cext()
{
  VALUE CExt = rb_define_module("CExt");
  rb_define_singleton_method(CExt, "bigstack", bigstack, 0);
}

...profile?](https://image.slidesharecdn.com/threadedawesome-090829020831-phpapp02/75/Threaded-Awesome-108-2048.jpg)
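A back-of-the-envelope estimate shows why the large stack frame hurts. The sketch below assumes the stack-copying scheme used by Ruby 1.8's green threads, where every thread switch copies the outgoing thread's C stack out to the heap and copies the incoming thread's stack back in. It counts only the 50 KB `buffer` and ignores everything else on each stack, and it assumes each `Thread.pass` causes exactly one switch. The constant names are illustrative.

```ruby
# Rough estimate of stack bytes copied by the benchmark above, ASSUMING a
# green-thread switch saves the outgoing C stack and restores the incoming one.
BUFFER_BYTES = 50 * 1024   # char buffer[ 50 * 1024 ] in bigstack()
THREADS      = 2           # (1..2).map { Thread.new { ... } }
ITERATIONS   = 100_000     # 100_000.times { ...; Thread.pass }

switches     = THREADS * ITERATIONS         # assume one switch per Thread.pass
bytes_copied = switches * 2 * BUFFER_BYTES  # save + restore per switch
puts "#{switches} switches, about #{bytes_copied / 1024**3} GiB of stack copied"
```

Under these assumptions the loop moves roughly 19 GiB through `memcpy`, even though the Ruby code itself does almost nothing.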

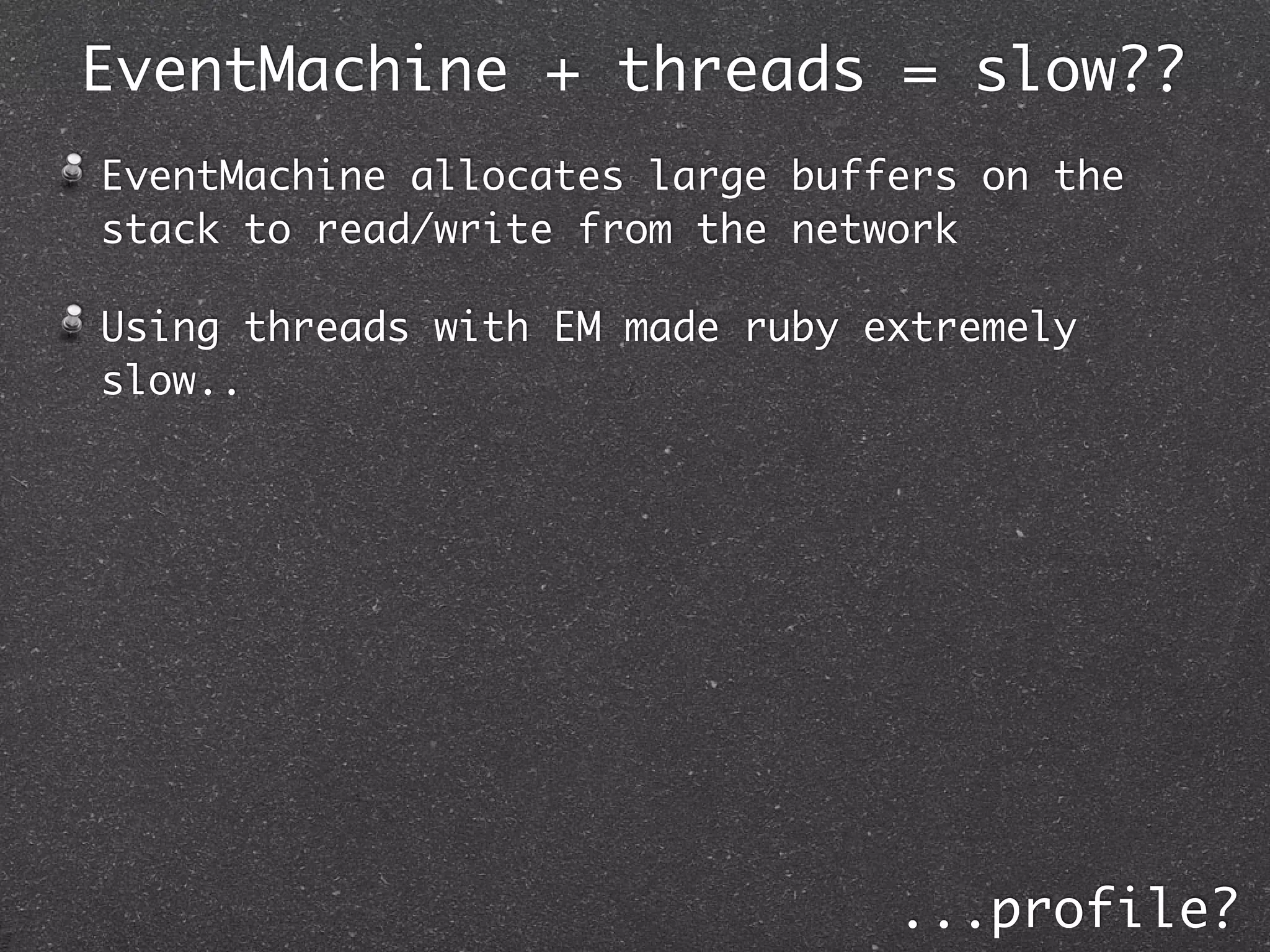

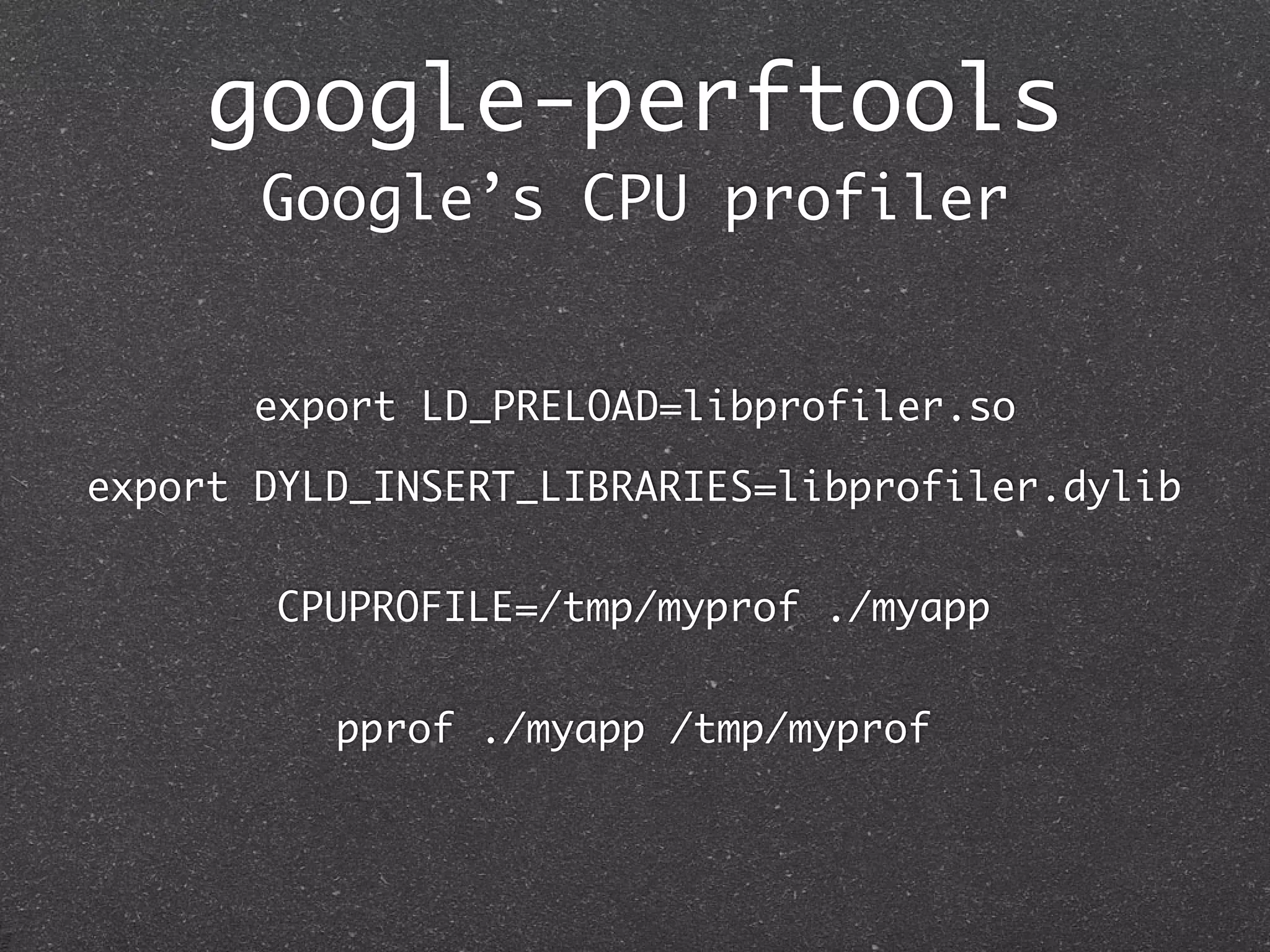

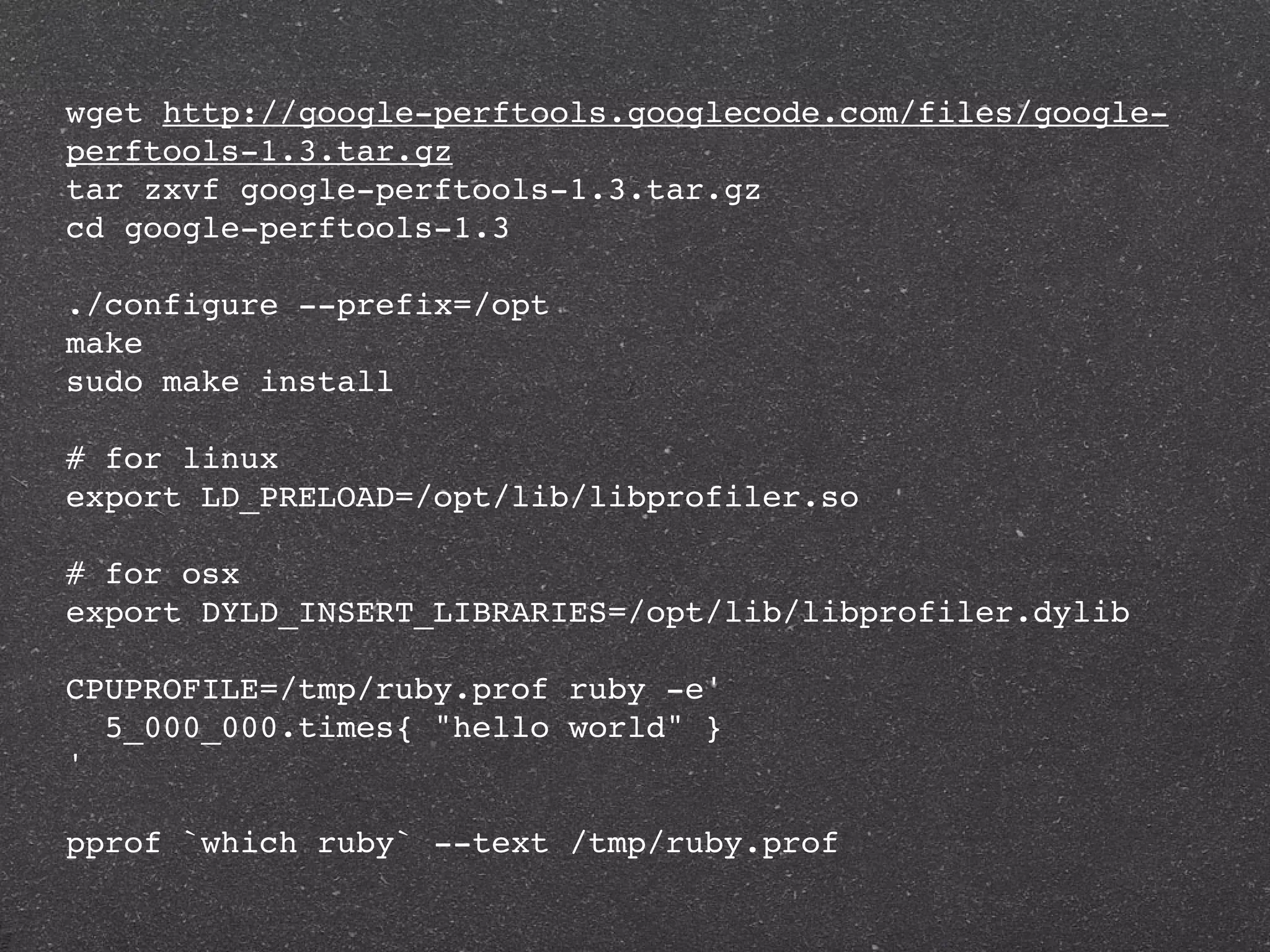

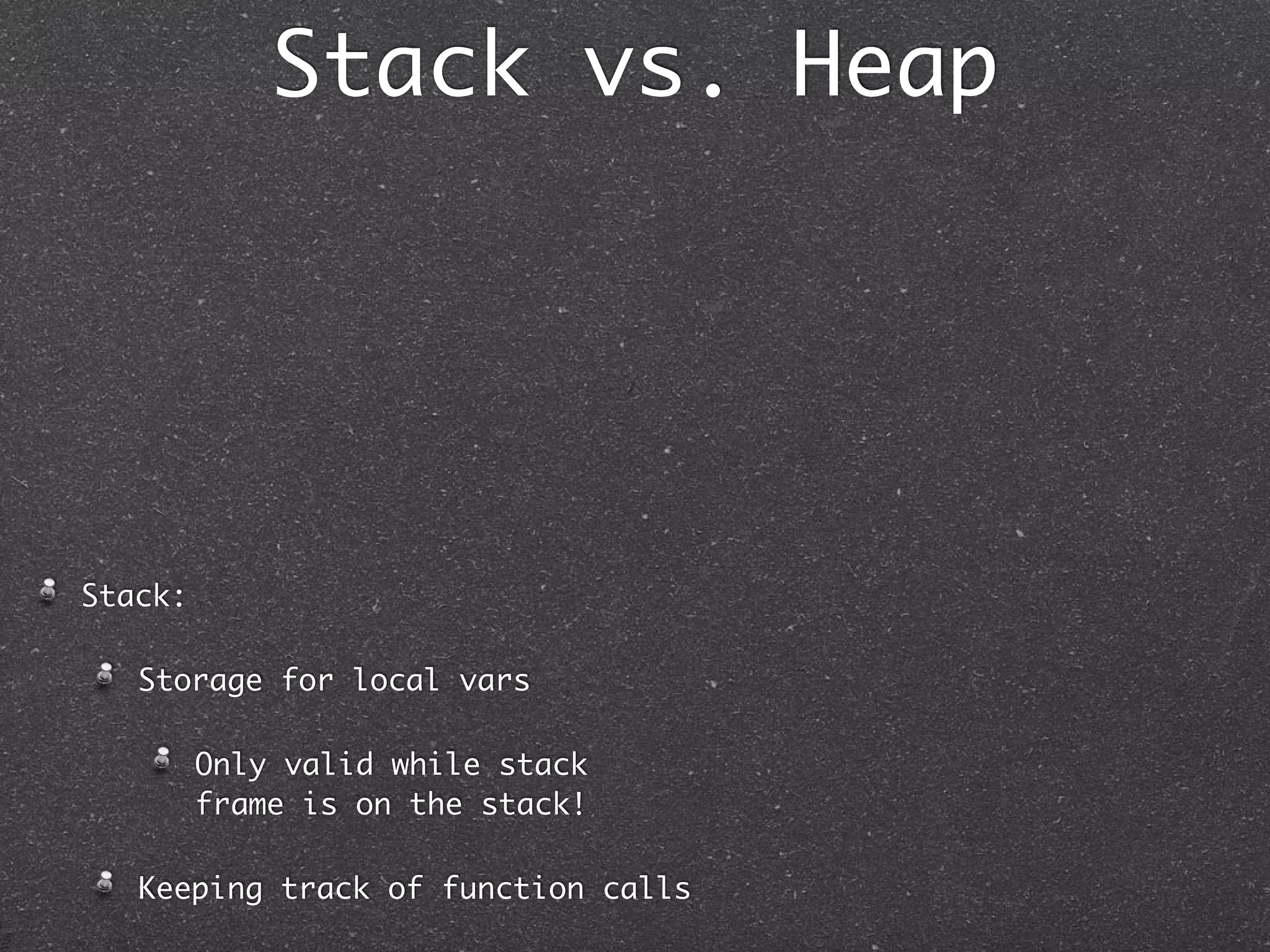

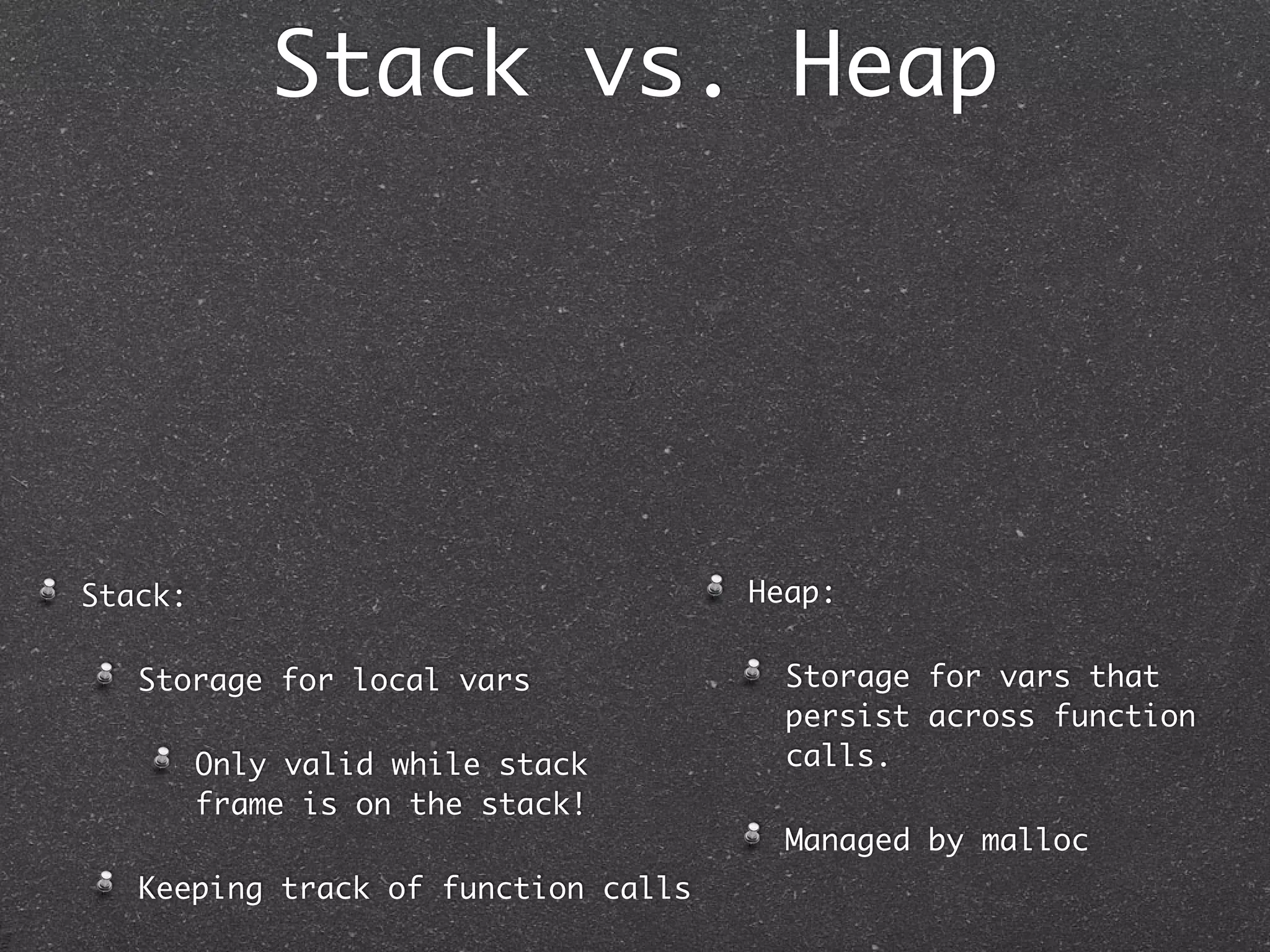

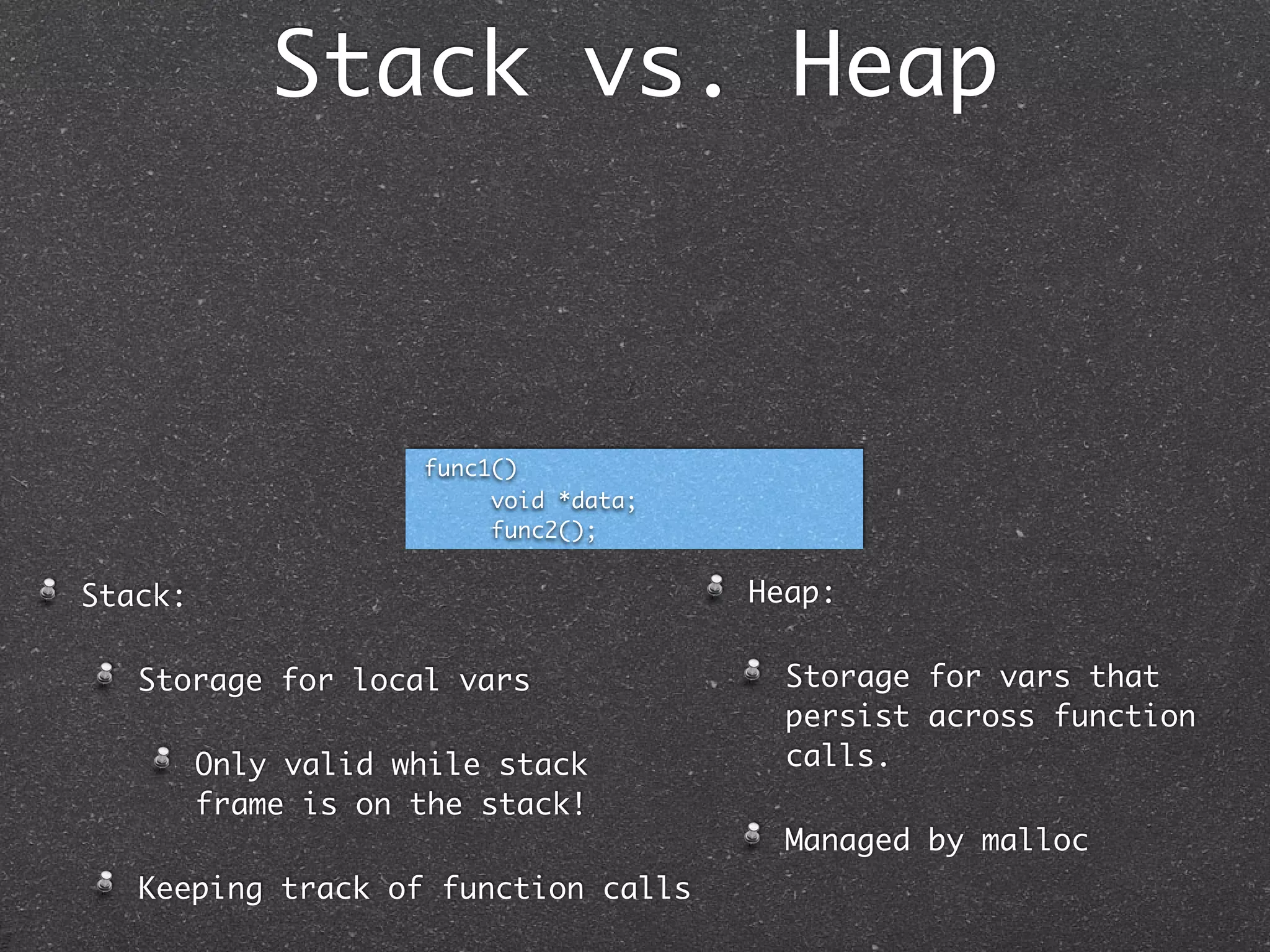

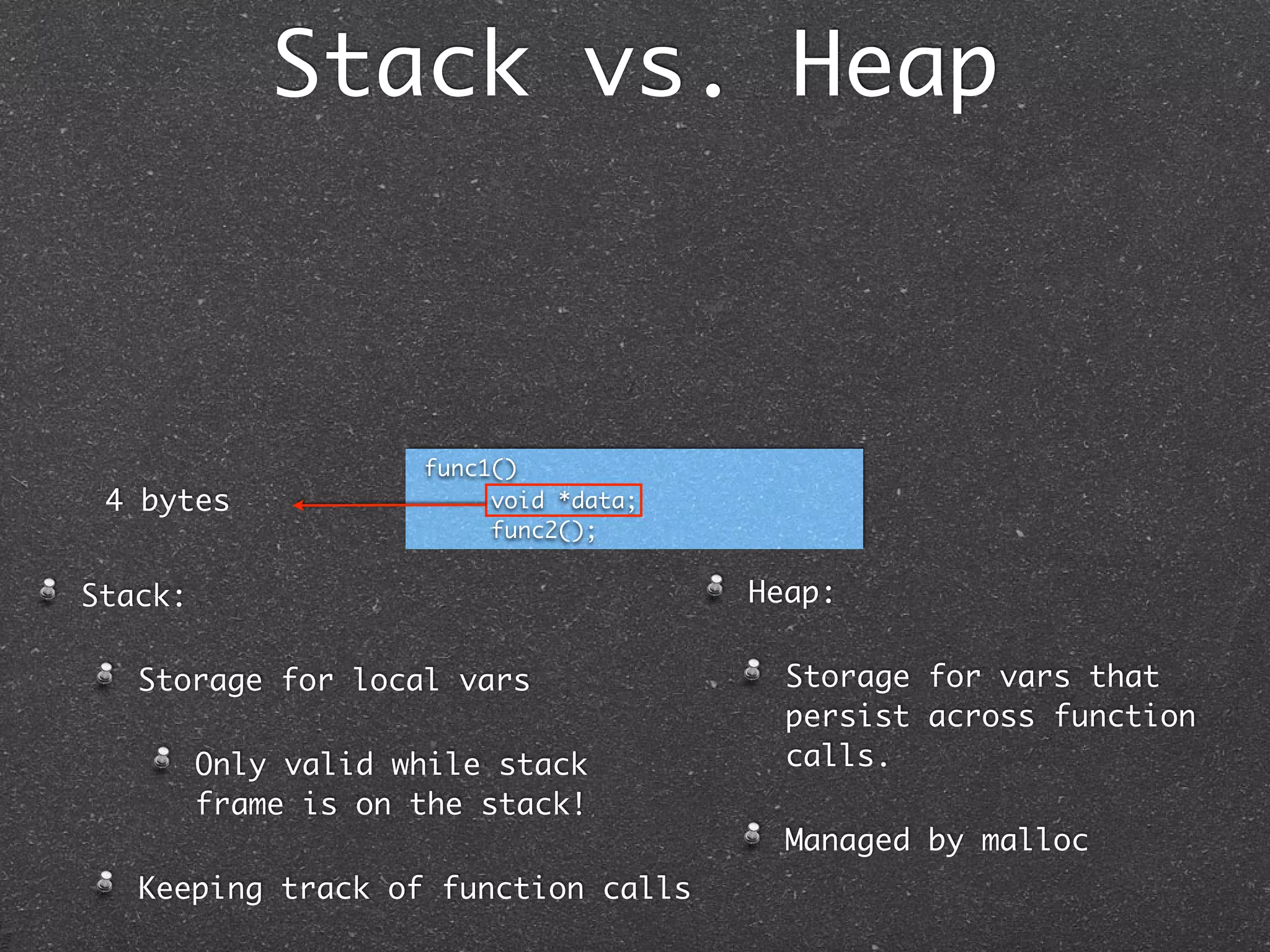

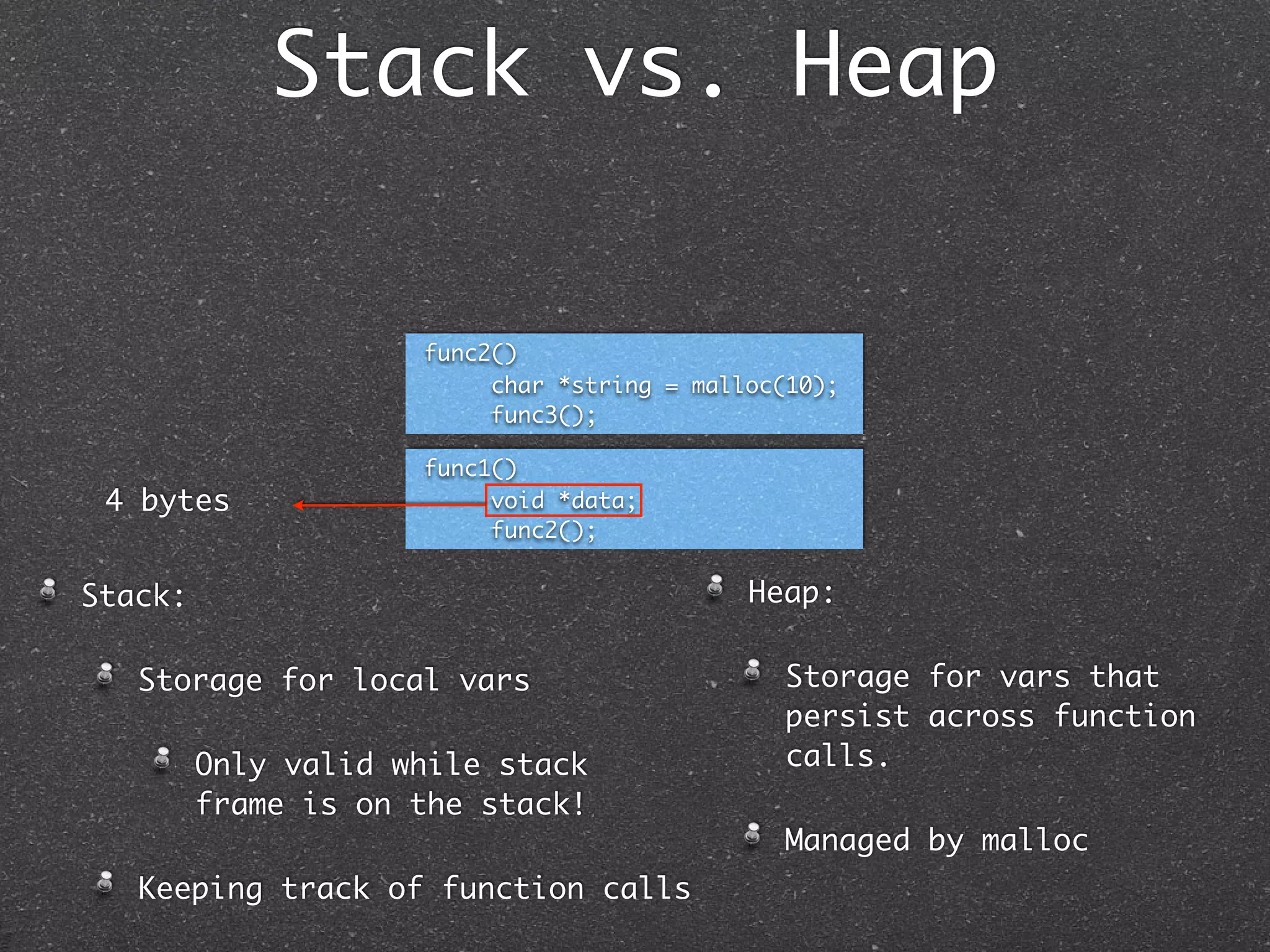

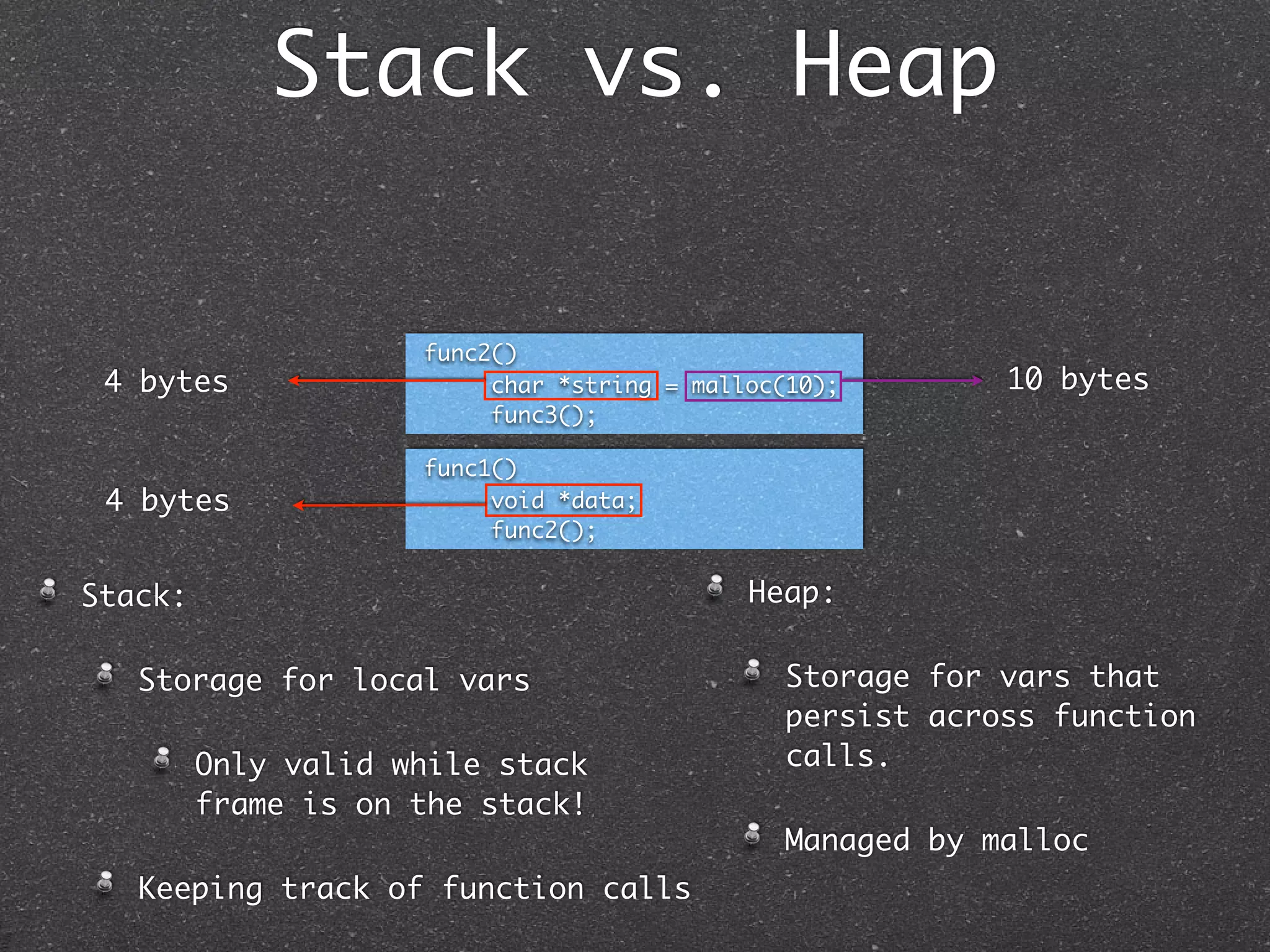

![Stack vs. Heap

func3(): char buffer[8]; (8 bytes on the stack)

func2(): char *string = malloc(10); (4 bytes on the stack, 10 bytes on the heap), then func3();

func1(): void *data; (4 bytes on the stack), then func2();

Stack: Storage for local vars. Only valid while stack frame is on the stack! Keeping track of function calls.

Heap: Storage for vars that persist across function calls. Managed by malloc.](https://image.slidesharecdn.com/threadedawesome-090829020831-phpapp02/75/Threaded-Awesome-160-2048.jpg)

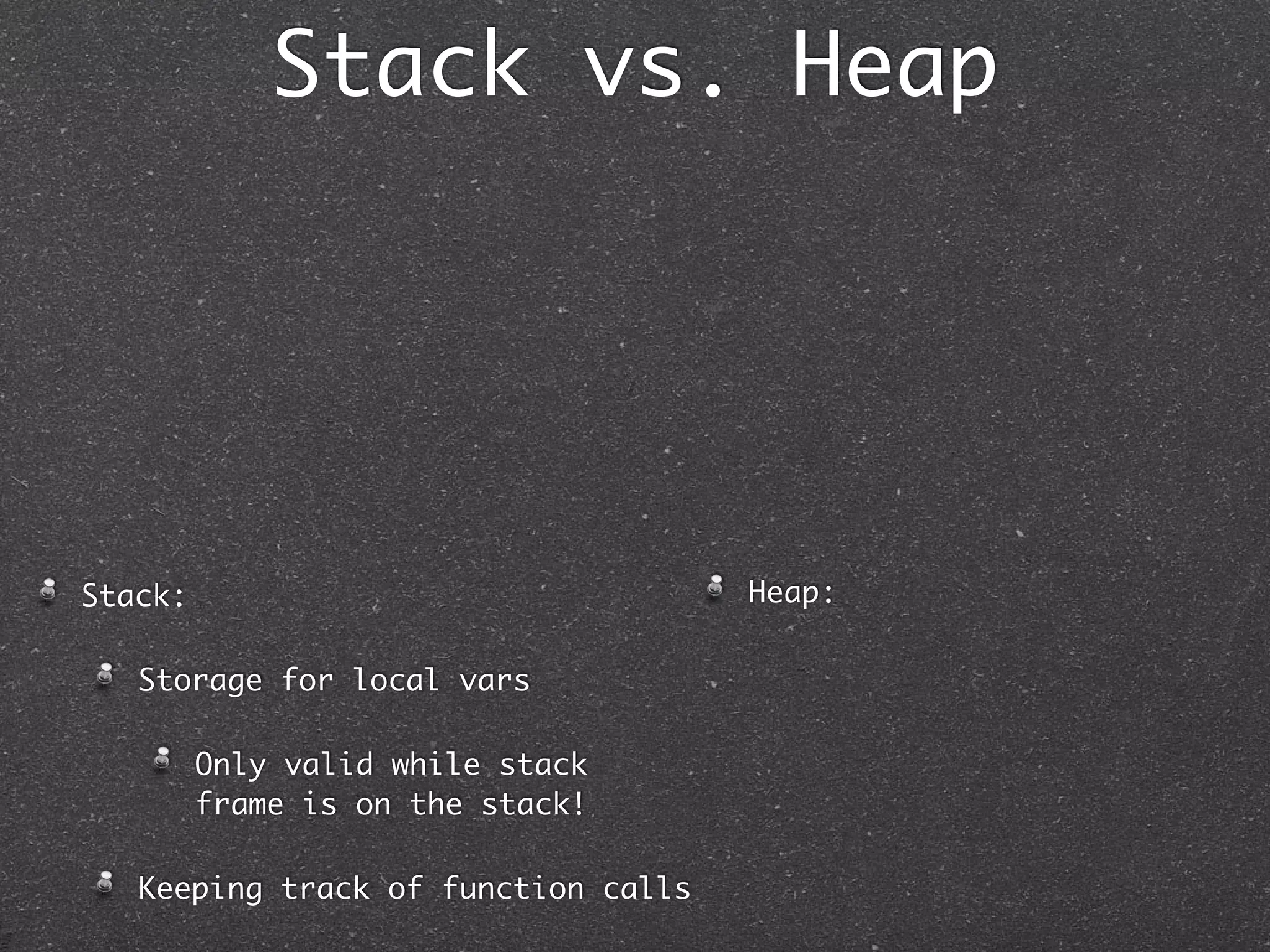

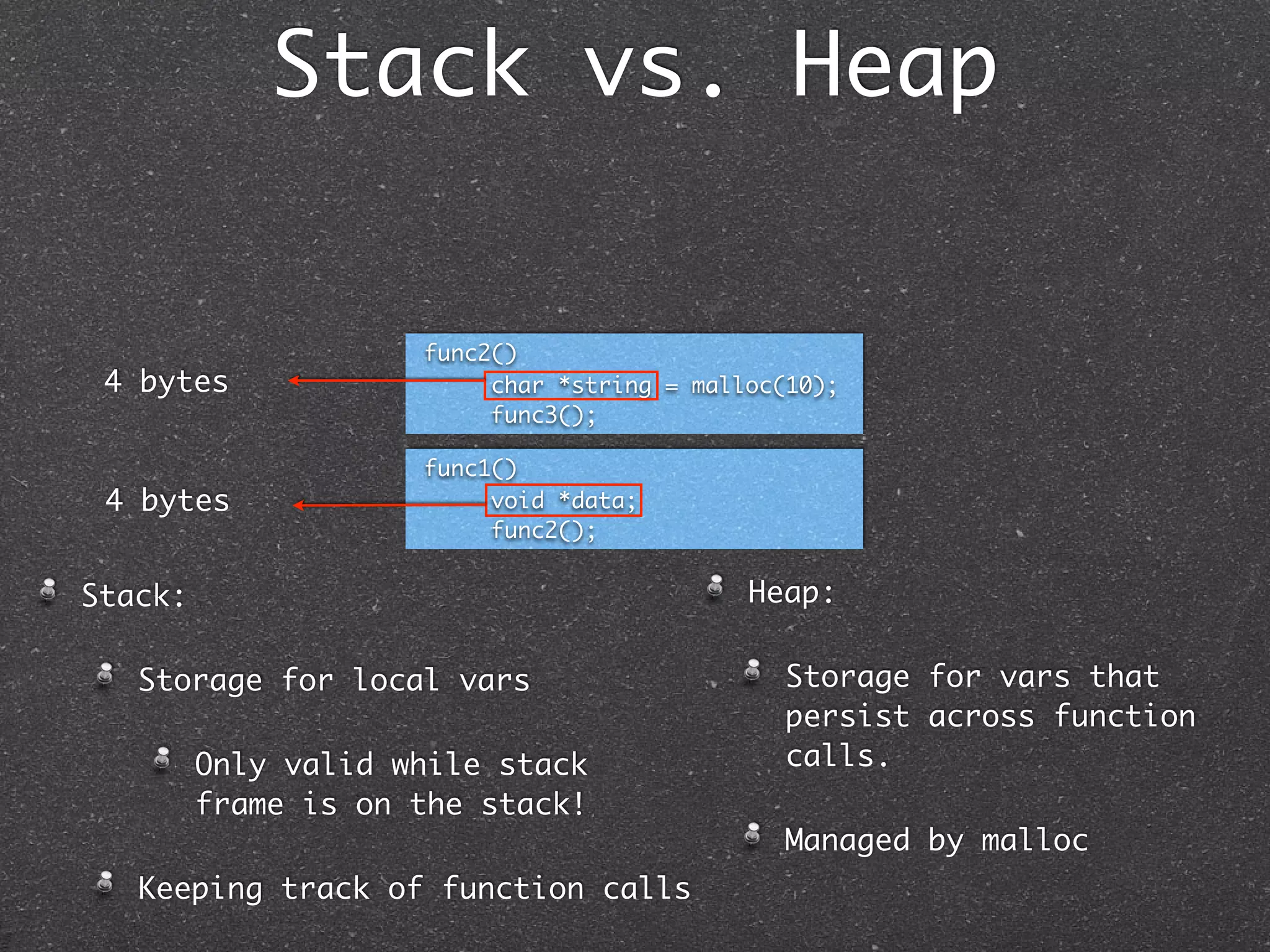

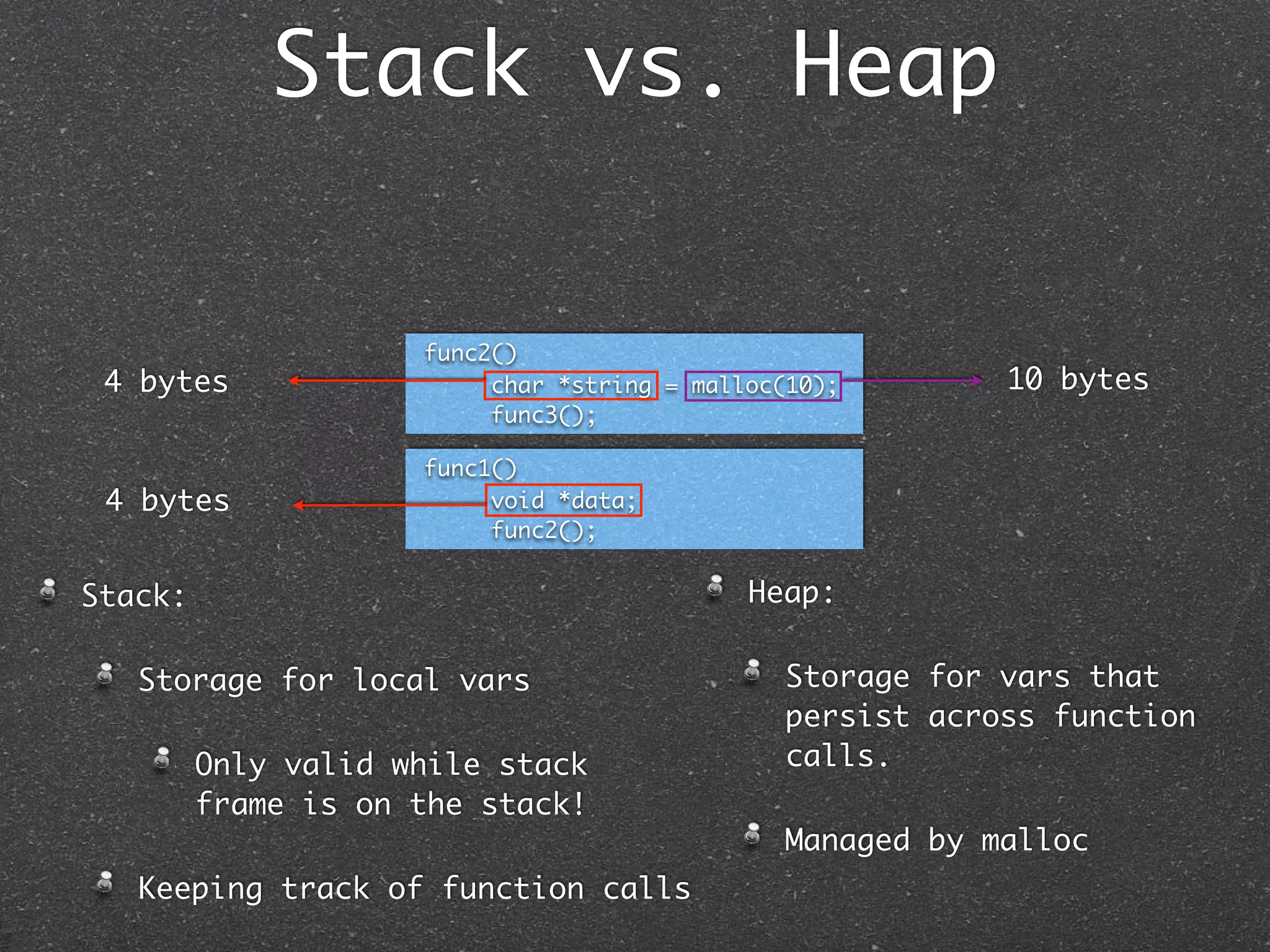

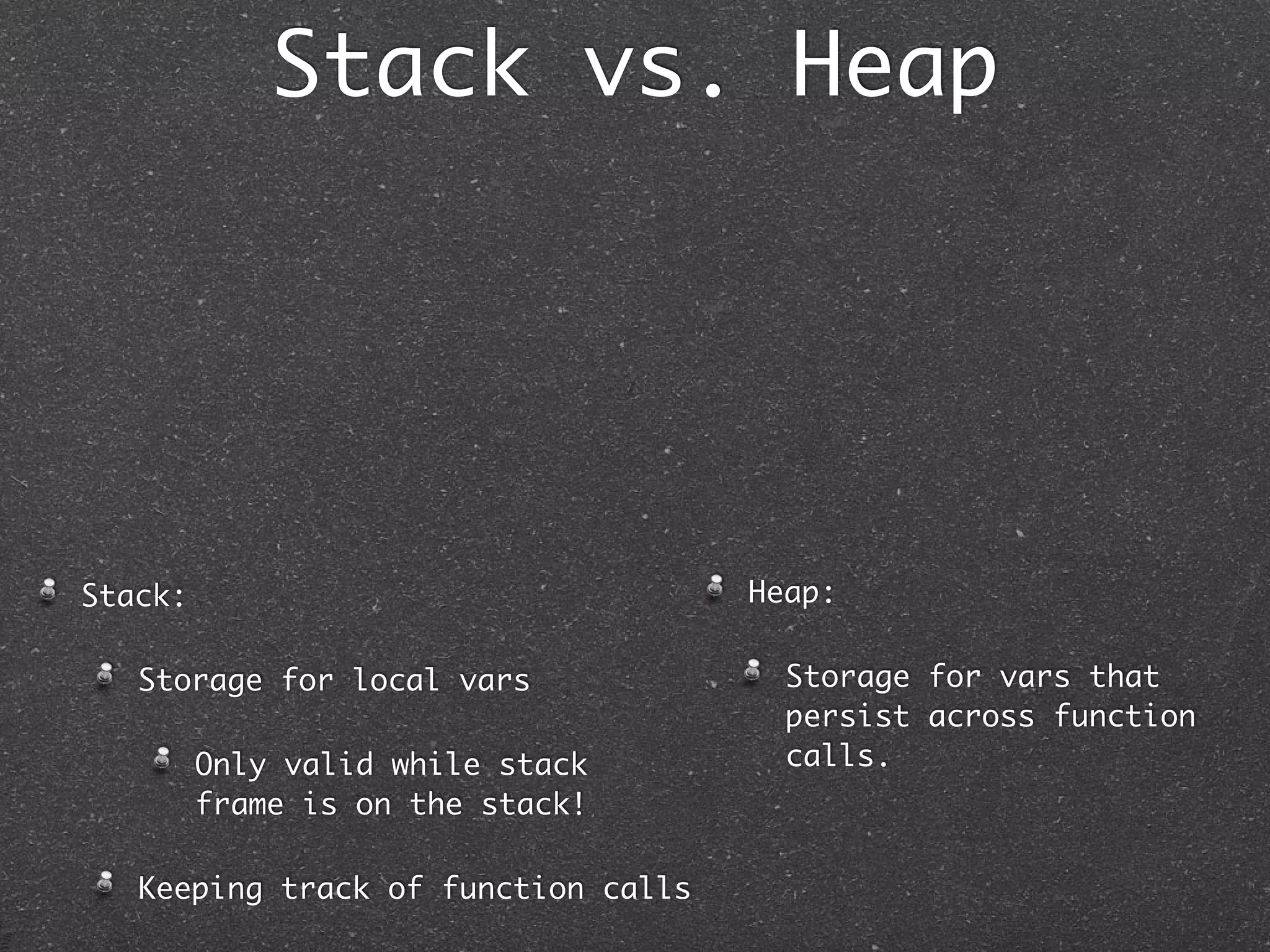

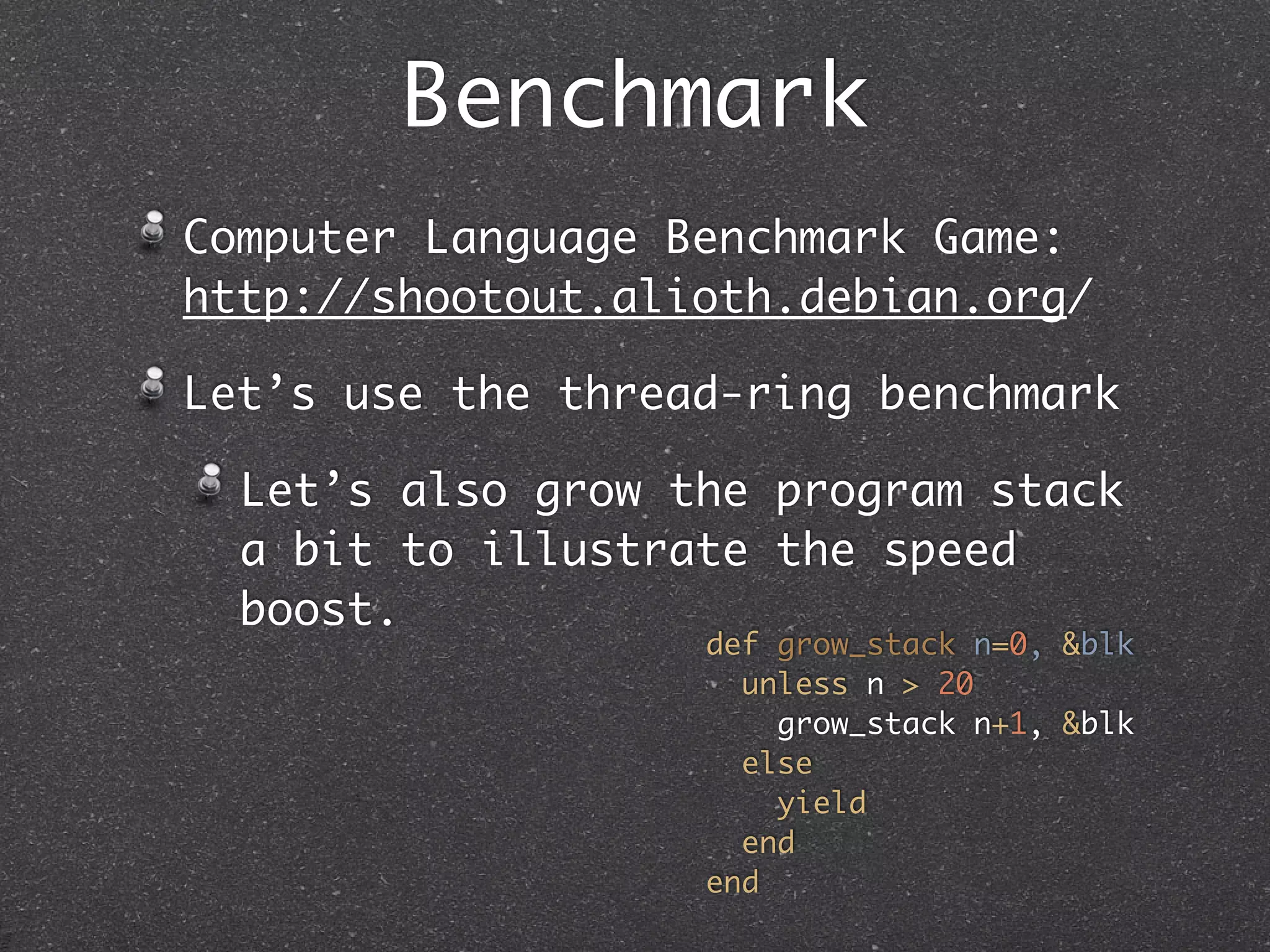

![thread-ring

number = 50_000_000
threads = []
for i in 1..503
  threads << Thread.new(i) do |thr_num|   # create 503 threads
    grow_stack do                          # increase the thread stacks
      while true
        Thread.stop                        # pause the thread
        if number > 0
          number -= 1                      # when resumed, decrement number
        else
          puts thr_num
          exit 0
        end
      end
    end
  end
end

prev_thread = threads.last
while true
  for thread in threads
    Thread.pass until prev_thread.stop?
    thread.run
    prev_thread = thread
  end
end

schedule each thread until number == 0](https://image.slidesharecdn.com/threadedawesome-090829020831-phpapp02/75/Threaded-Awesome-236-2048.jpg)
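The thread-ring idea can also be sketched without the `Thread.stop`/`Thread#run` polling loop by giving each thread its own `Queue` inbox and passing the token hand to hand. This swaps in a different hand-off technique from the slide's scheduler and drops `grow_stack`, so it illustrates the ring itself rather than the stack-size effect the slide measures. `thread_ring` is a hypothetical name; thread ids are 1-based, and the thread that receives the token at zero wins.

```ruby
# Thread-ring with Queue hand-off: each thread blocks on its own inbox,
# and the token travels around the ring until it reaches zero.
def thread_ring(thread_count, token)
  inboxes = Array.new(thread_count) { Queue.new }
  result  = Queue.new

  threads = Array.new(thread_count) do |i|
    Thread.new do
      loop do
        value = inboxes[i].pop                          # block until the token arrives
        if value.nil?                                   # nil is the shutdown signal
          break
        elsif value.zero?
          result << i + 1                               # report this thread's 1-based id
        else
          inboxes[(i + 1) % thread_count] << value - 1  # pass to the neighbour
        end
      end
    end
  end

  inboxes[0] << token             # start the token at thread 1
  winner = result.pop
  inboxes.each { |q| q << nil }   # tell every thread to stop
  threads.each(&:join)
  winner
end

puts thread_ring(503, 1000) # prints 498
```

With 503 threads and a token of 1000, thread 1 holds 1000, and after j hand-offs thread (j mod 503) + 1 holds 1000 − j. The token reaches zero after 1000 hand-offs, and 1000 mod 503 = 497, so thread 498 wins.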