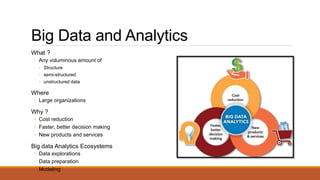

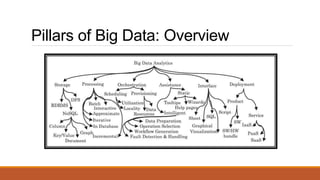

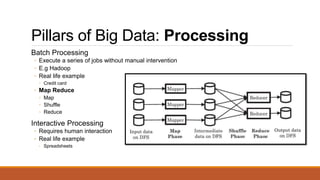

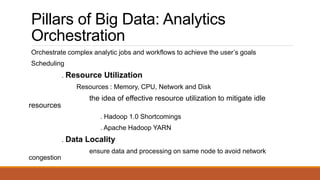

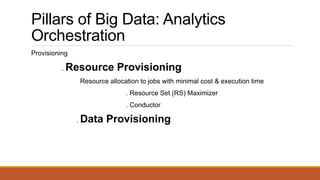

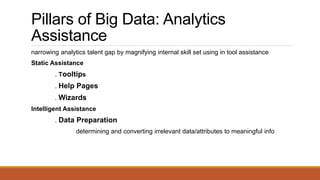

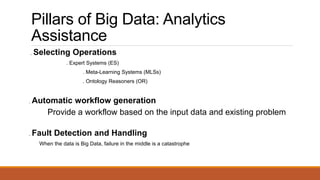

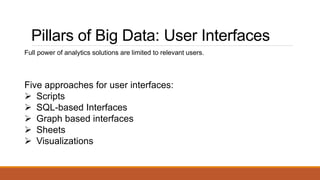

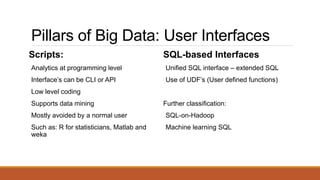

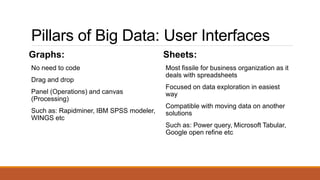

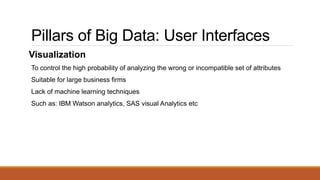

The document discusses six pillars for building big data analytics ecosystems: storage, processing, analytics, user interfaces, deployment, and future directions. For each pillar, it surveys the main approaches, popular systems, and open challenges, and it shows how the pillars together form a taxonomy that can guide organizations in building their own ecosystems. Key components and topics discussed include HDFS, MapReduce, and YARN; visualizations; product versus service deployment models; and how to make these components work efficiently together.
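MapReduce, one of the processing components named above, structures a job as three steps: a map phase that emits intermediate key/value pairs, a shuffle that groups those pairs by key, and a reduce phase that combines the values for each key. The following is a minimal single-process sketch of that model in Python, using the classic word-count example. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen for illustration. This is not Hadoop's API: a real Hadoop job runs these phases in parallel across a cluster, with input typically read from HDFS and resources scheduled by YARN.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    # Mapper: emit (word, 1) for every whitespace-separated token in one input record.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Shuffle: group every intermediate value by its key, which is the
    # step a MapReduce framework performs between the map and reduce phases.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    # Reducer: combine all values seen for a single key into one result.
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # Run map over every record, shuffle the intermediate pairs,
    # then reduce each key group (in sorted key order, as Hadoop does per reducer).
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in sorted(grouped.items()))


if __name__ == "__main__":
    records = ["HDFS stores blocks", "YARN schedules jobs", "MapReduce jobs read HDFS blocks"]
    print(word_count(records))
```

Because each mapper call depends only on its own record and each reducer call only on its own key group, both phases can be split across machines. That independence is what lets a framework such as Hadoop scale the same logic to large datasets.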