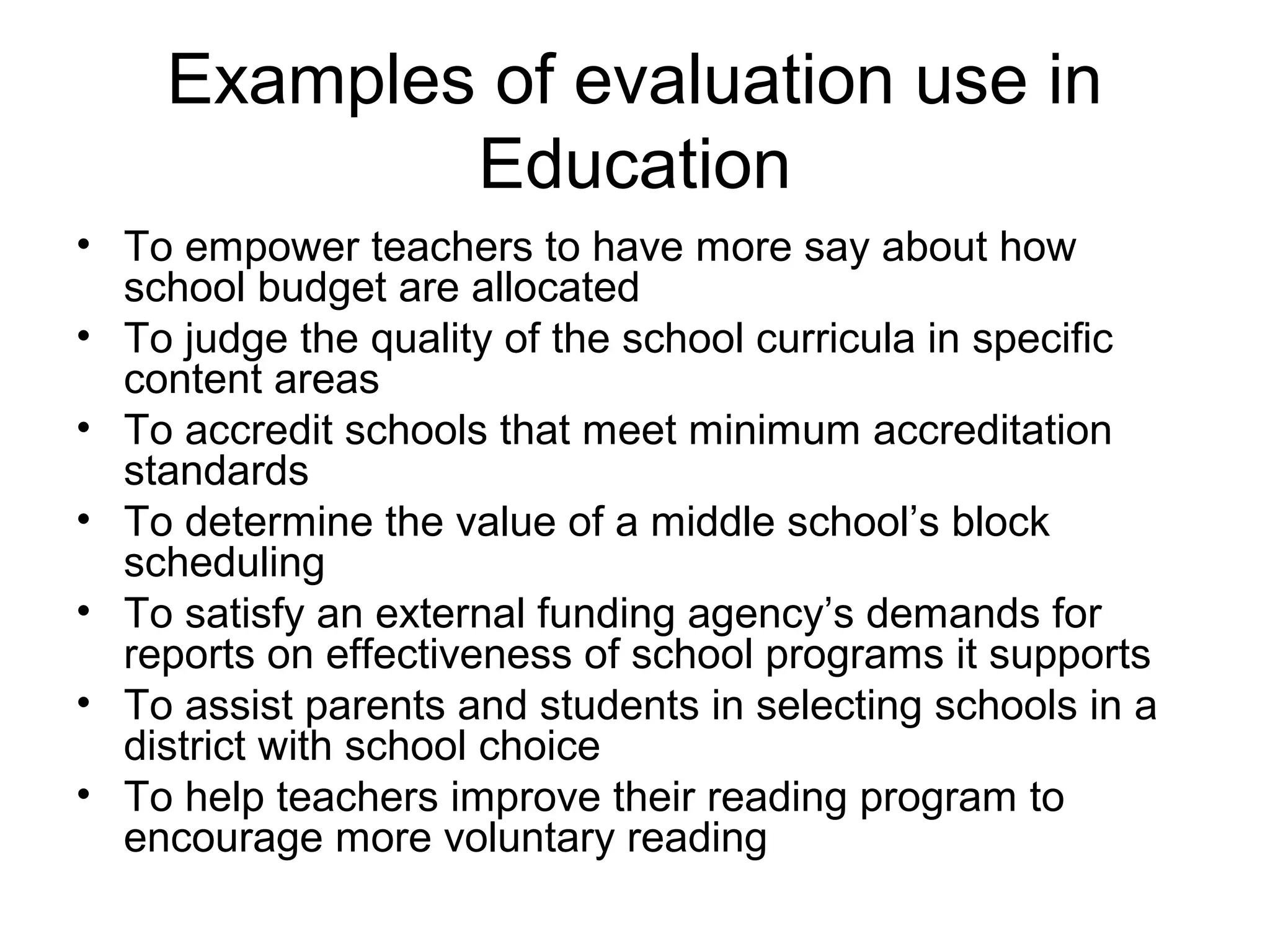

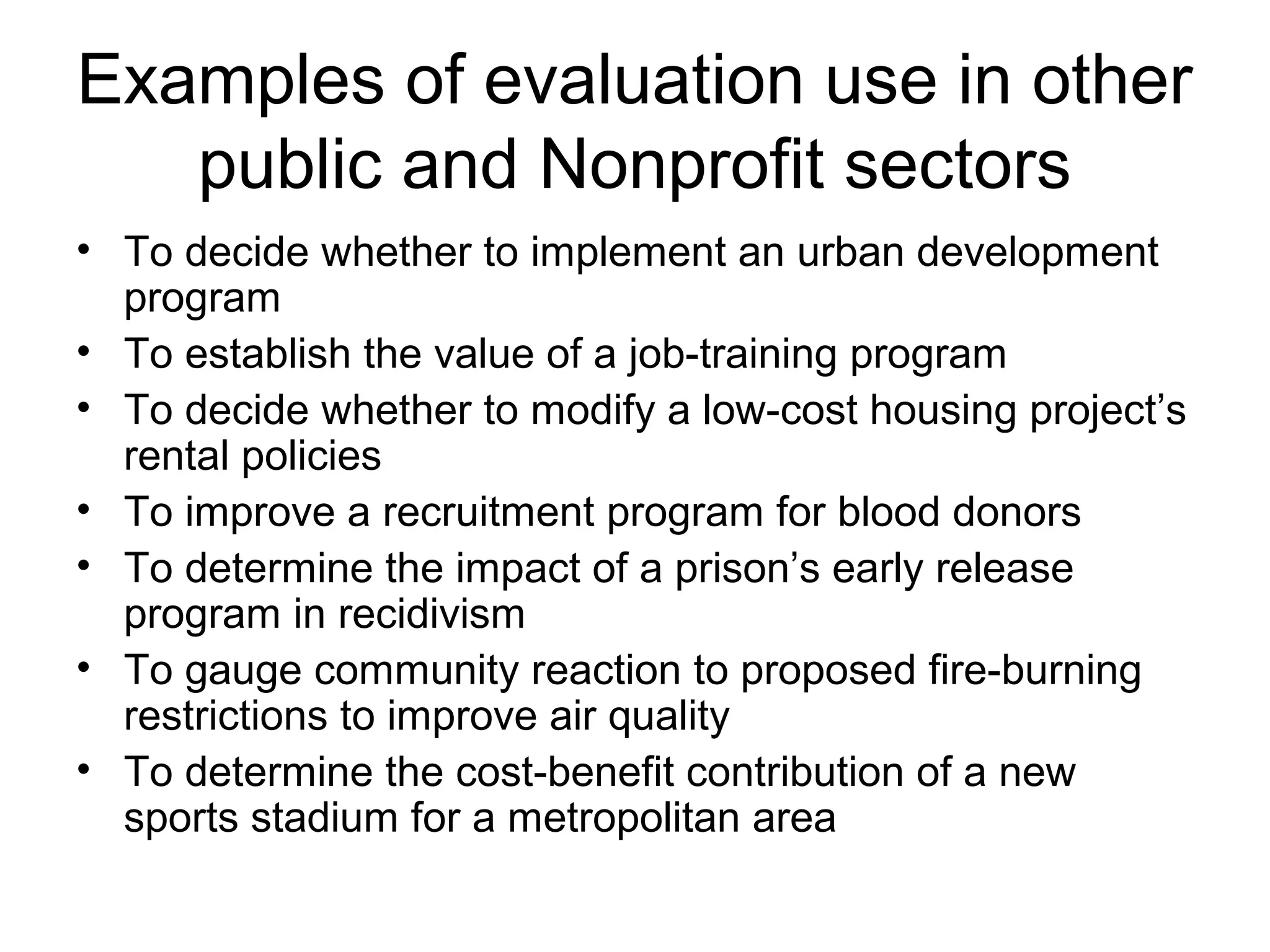

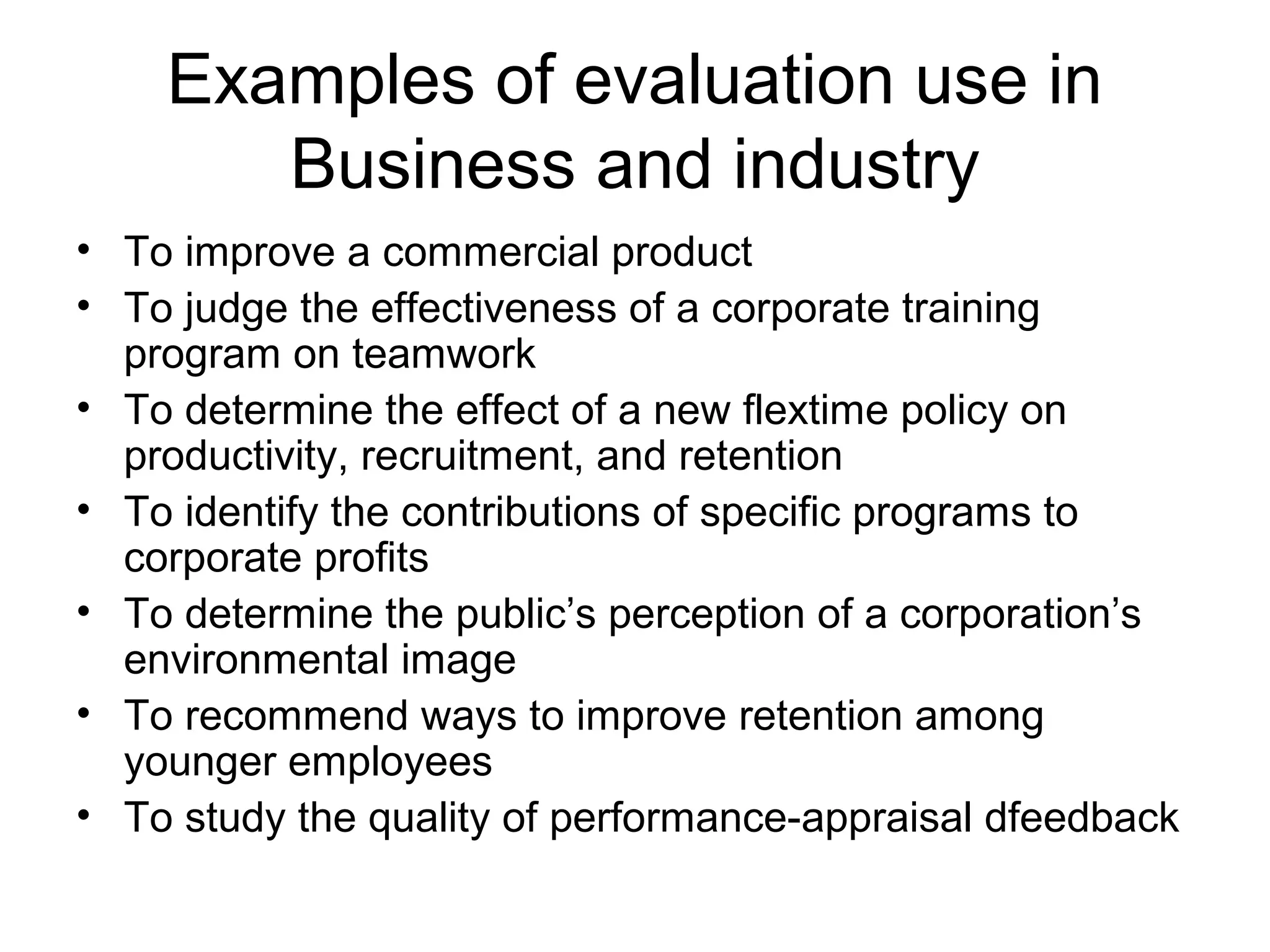

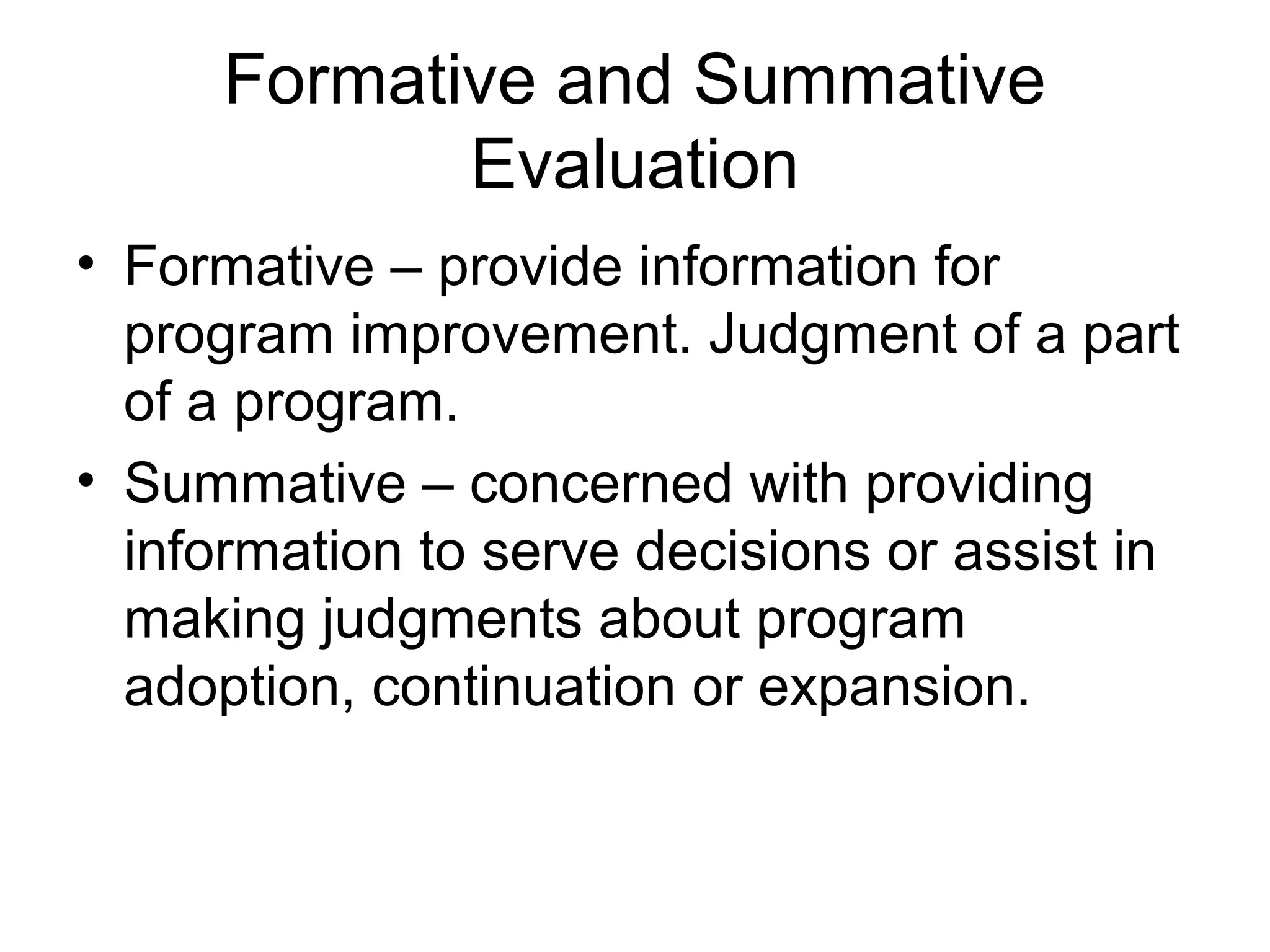

Program evaluation gives policymakers information about how effective a program is and where it can be improved. A professional evaluator collects relevant information, applies standards to judge a program's merit, worth, and significance, and communicates the evaluation results to stakeholders. Evaluation is used across sectors such as education, nonprofits, and business and industry, both to improve programs and to inform decisions about adopting or continuing them.