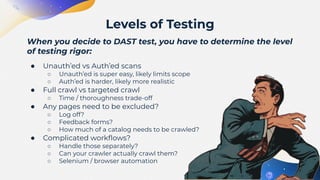

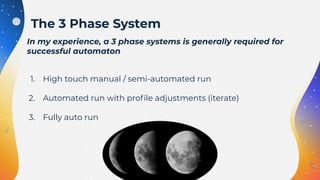

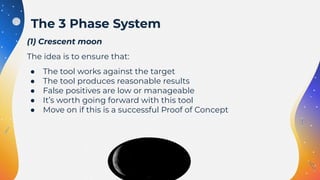

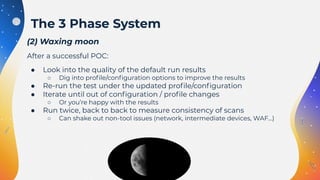

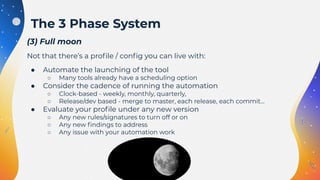

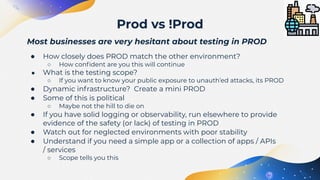

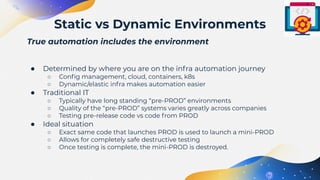

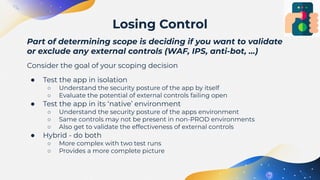

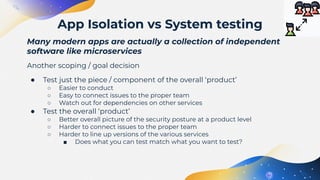

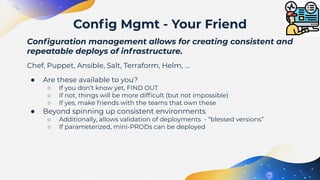

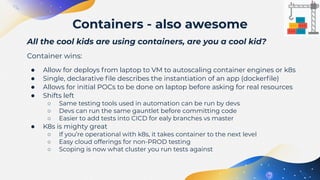

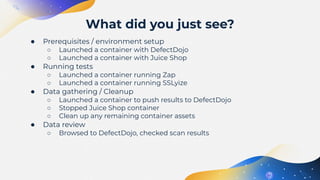

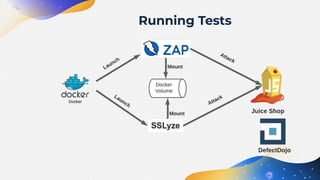

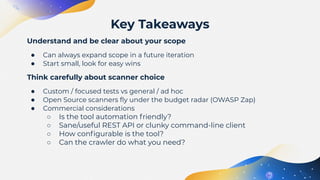

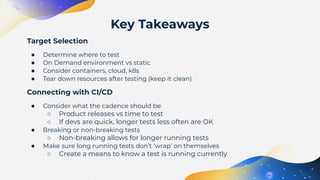

The document covers automating Dynamic Application Security Testing (DAST) for web applications and the challenges of running such tests effectively. It outlines a three-phase approach to DAST automation: initial testing, iterative improvement, and full automation. Throughout, it stresses keeping test environments consistent and selecting tools carefully. Key takeaways are to scope tests appropriately, to integrate them with CI/CD processes, and to use modern practices such as containerization to make testing easier.
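To make the point about scoping concrete, here is a minimal sketch of a scope filter applied to candidate URLs before a scanner receives them. It is not taken from the slides: the host `staging.example.test`, the excluded path prefixes, and the names `in_scope` and `filter_targets` are all hypothetical, and a real DAST tool would normally express the same rules in its own scope configuration.

```python
from urllib.parse import urlsplit

# Hypothetical scope definition (assumption, not from the source):
# hosts the scanner may touch and path prefixes it must avoid.
ALLOWED_HOSTS = {"staging.example.test"}
EXCLUDED_PATH_PREFIXES = ("/logout", "/admin/delete")


def in_scope(url: str) -> bool:
    """Return True if the URL is http(s), on an allowed host, and not under an excluded prefix."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        return False
    if parts.hostname not in ALLOWED_HOSTS:
        return False
    path = parts.path or "/"
    # Simple prefix match: "/logout" also excludes "/logout/confirm".
    return not any(path.startswith(prefix) for prefix in EXCLUDED_PATH_PREFIXES)


def filter_targets(urls):
    """Keep only in-scope URLs, preserving order and dropping duplicates."""
    seen = set()
    kept = []
    for url in urls:
        if url not in seen and in_scope(url):
            seen.add(url)
            kept.append(url)
    return kept
```

Running a filter like this as an early pipeline step is one way to keep an automated scan from reaching production hosts or destructive endpoints. The same allow-list can then be reused unchanged across the three phases.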